OceanProtect Backup Storage

NetBackup OST Compatibility Testing Report

1. Overview

1.1 Objective

The objective of this report is to verify the compatibility between OceanProtect backup storage and the NetBackup OST plugin (hereinafter referred to as "NBU OST").

1.2 Scenario

The test covers different backup and restoration scenarios and workloads, including basic functions, targeted A.I.R., Accelerator, granular recovery, WORM, optimized duplication, and optimized synthetic backup.

2. Environment Configuration

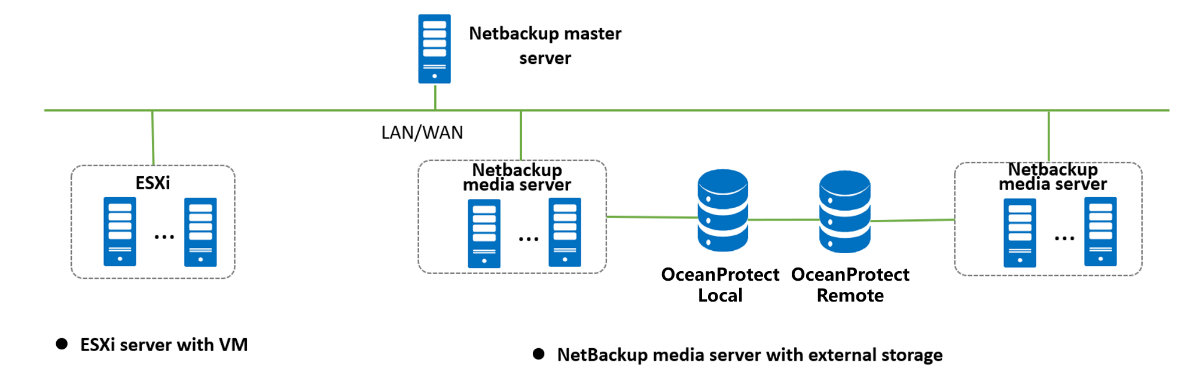

2.1 Networking Diagrams for Verification

Networking diagram for verifying functions

The Windows and Linux hosts are deployed on the ESXi server.

2.2 Hardware and Software Configurations

2.2.1 OceanProtect X8000 Configuration

Table 2-1 Single OceanProtect X8000 configuration (SSD)

| Name | Description | Quantity |
|---|---|---|
| OceanProtect engine | OceanProtect X8000 with two controllers | 2 |
| 10GE front-end interface module | 4 x 10 Gbit/s SmartIO interface module | 4 |
| SAS SSD | 3.84 TB SAS SSD | 16 |

2.2.2 Hardware Configuration

Table 2-2 Hardware configuration

| Name | Description | Quantity | Function |
|---|---|---|---|
| VMware ESXi server | x86 server | 1 | Hosts the Windows and Linux test hosts; backup, restoration, and remote replication of VMware VMs |
| Hyper-V server | x86 server | 1 | Hosts the Windows and Linux test hosts; backup, restoration, and remote replication of Hyper-V VMs |
| 10GE switch | CE6800 | 2 | 10GE switch on the backup and replication service plane |
| Management switch | GE switch | 1 | Network switch on the management plane |

2.2.3 Test Software and Tools

Table 2-3 Test software and tools

| Software | Description | Quantity |
|---|---|---|
| VMware ESXi 7.0 | VMware virtualization platform | 1 |
| vCenter 7.0 | VMware virtualization management software | 1 |
| NetBackup 10.3 | NetBackup data management software | 1 |
| Red Hat 8.4 | Linux OS | 1 |
| SSH client software | SSH terminal connection tool | 1 |

3. Test Cases

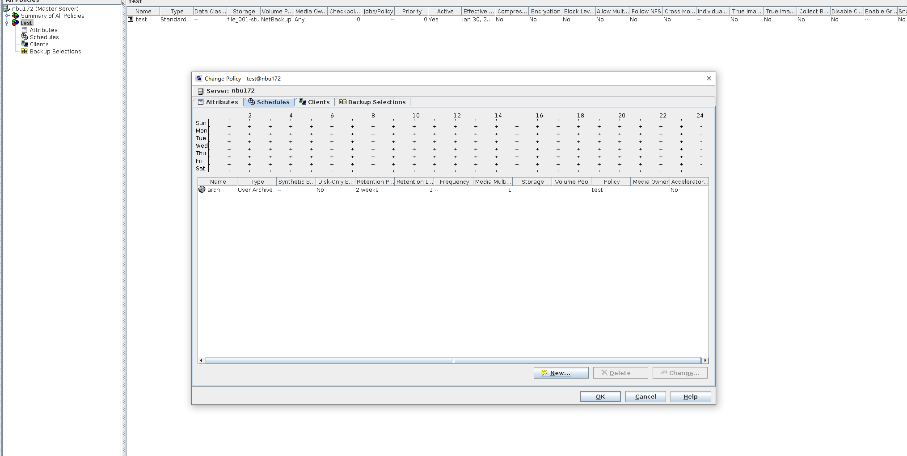

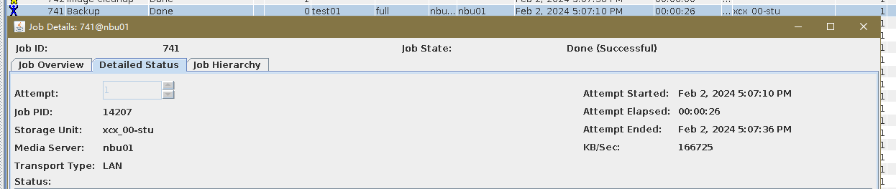

3.1 Basic Functions

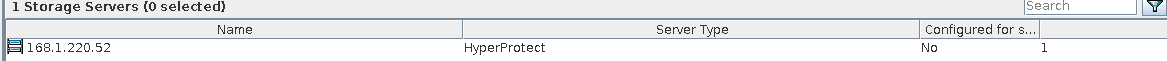

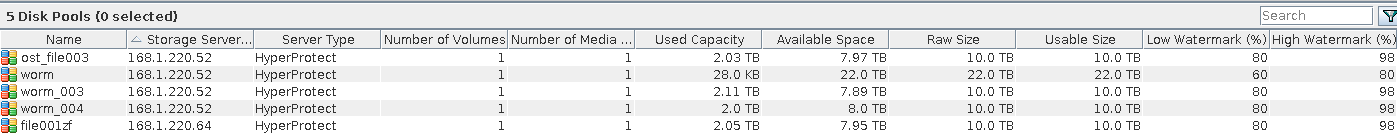

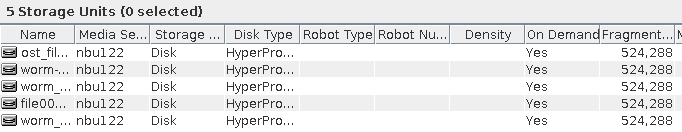

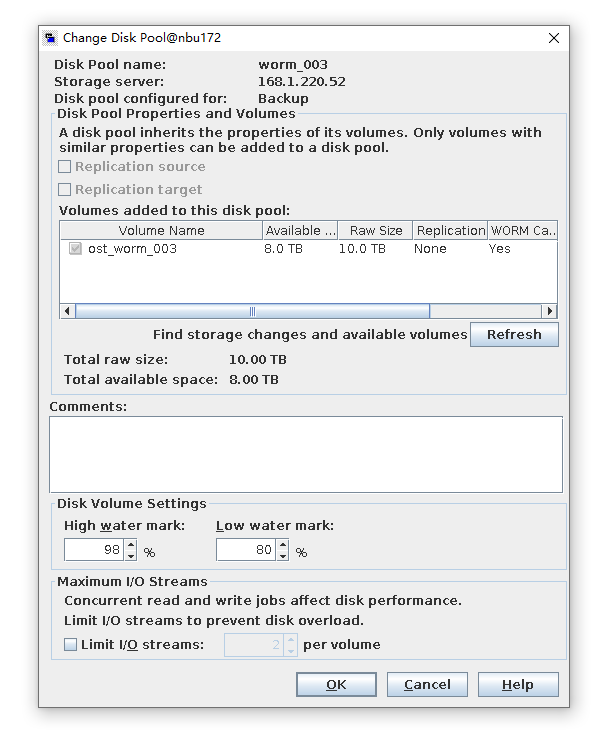

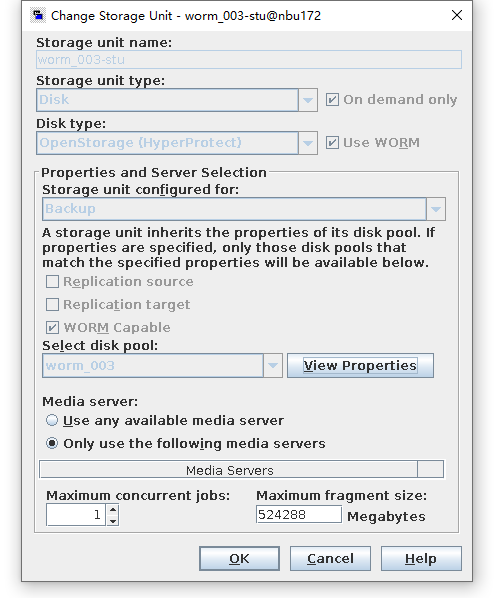

3.1.1 Creation of Storage Server, Diskpool and STU

Objective | To verify that the backup system can create a storage server, disk pool, and STU, and can back up with a policy name that is the same as the client name |

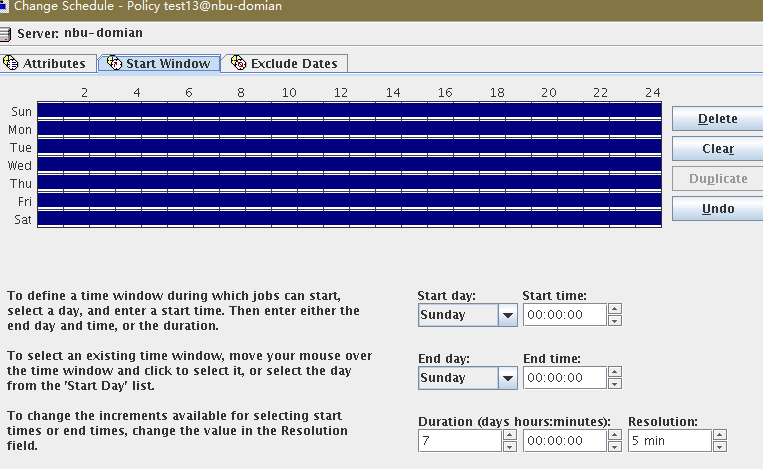

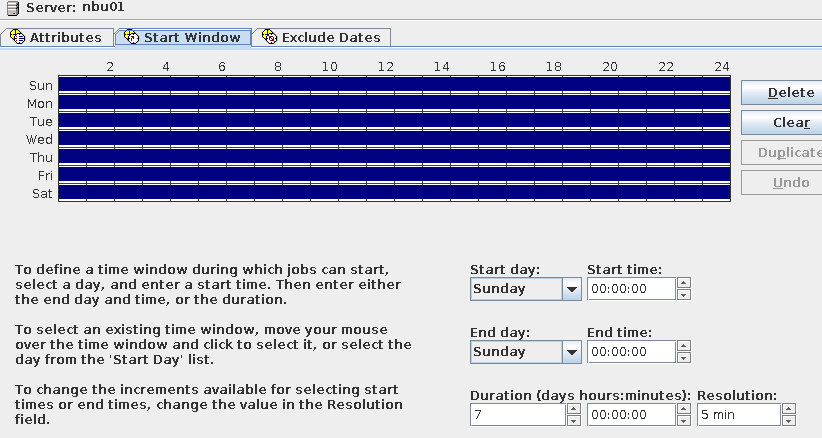

Networking | Networking diagram for verifying functions |

Prerequisites |

|

Procedure |

|

Expected Result |

|

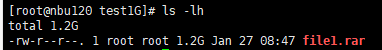

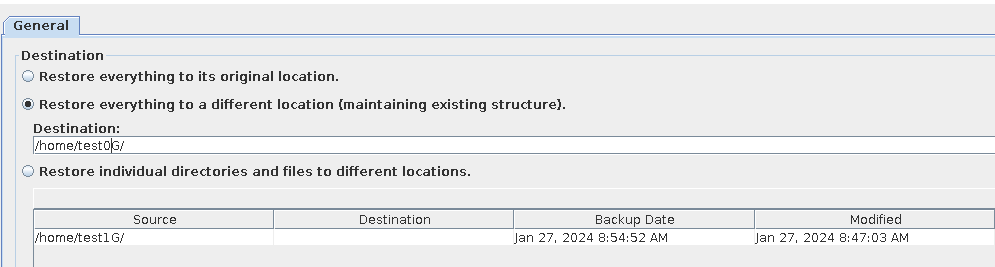

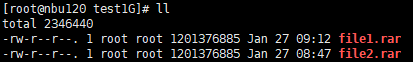

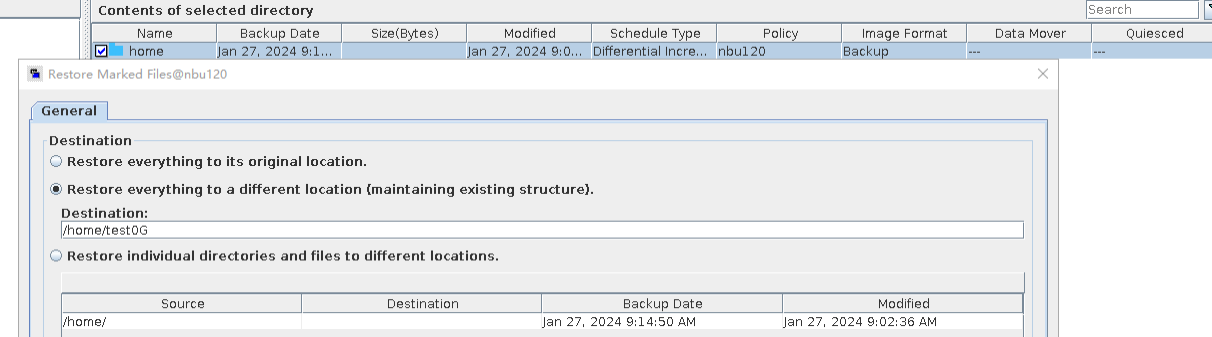

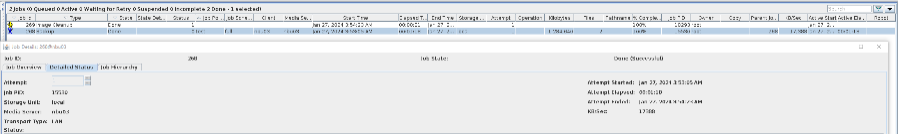

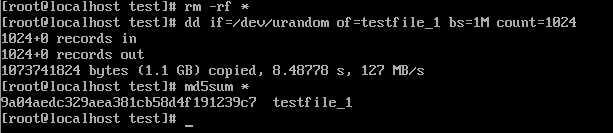

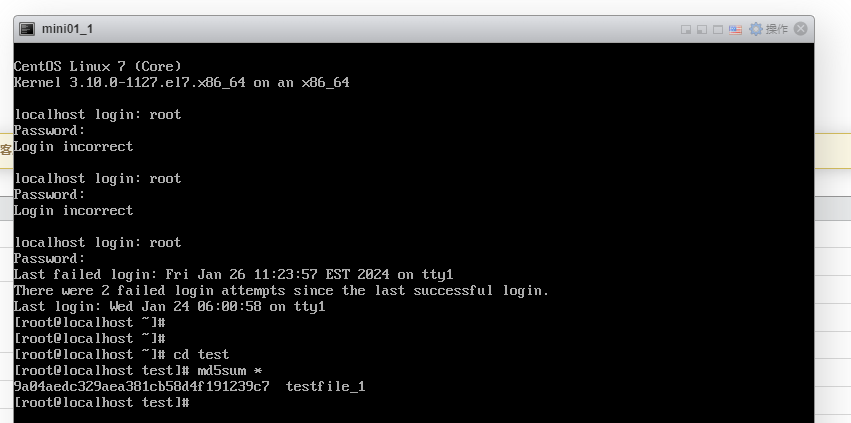

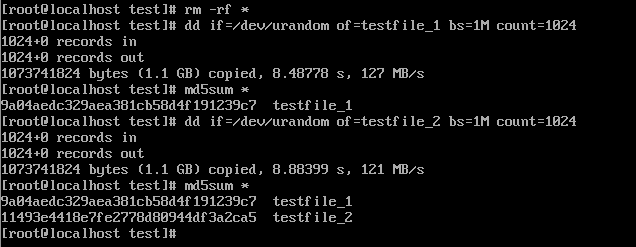

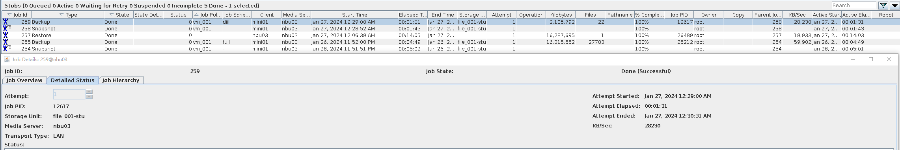

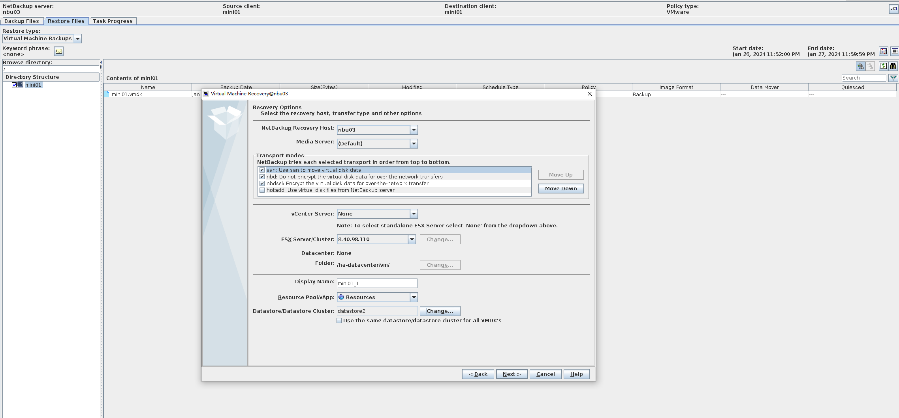

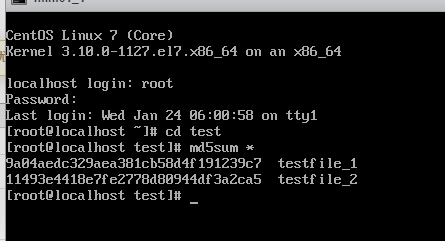

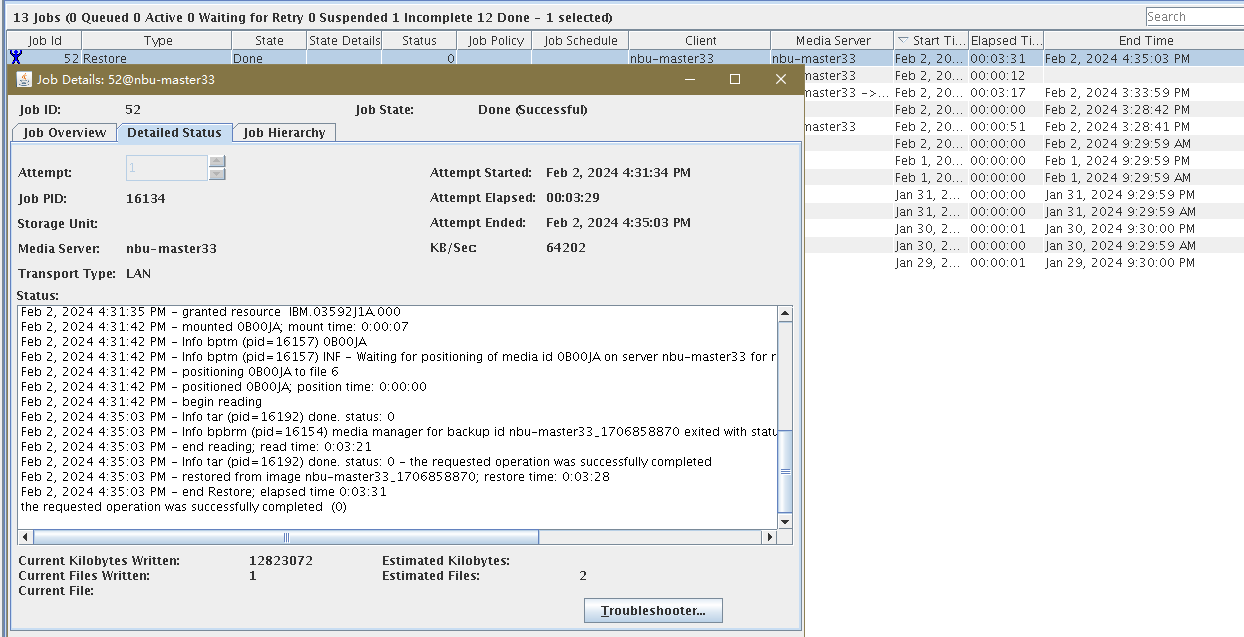

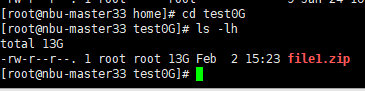

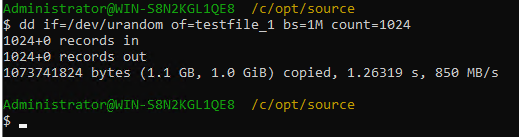

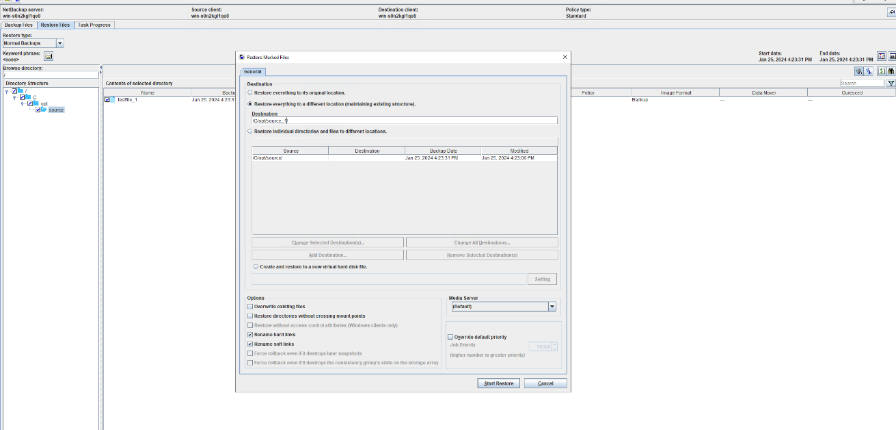

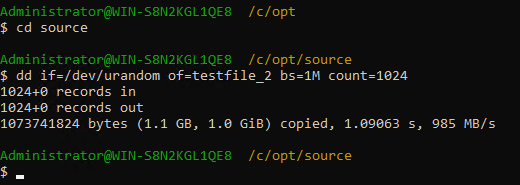

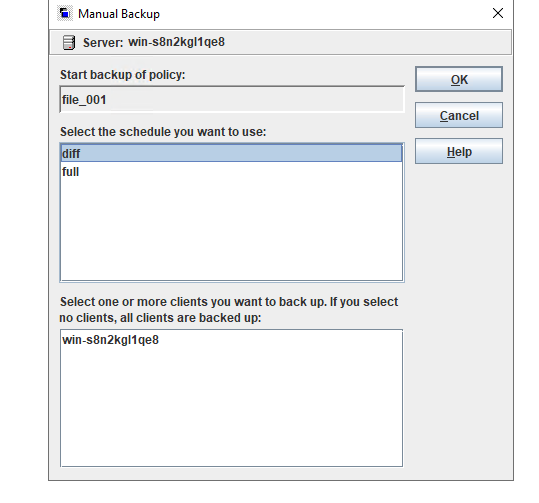

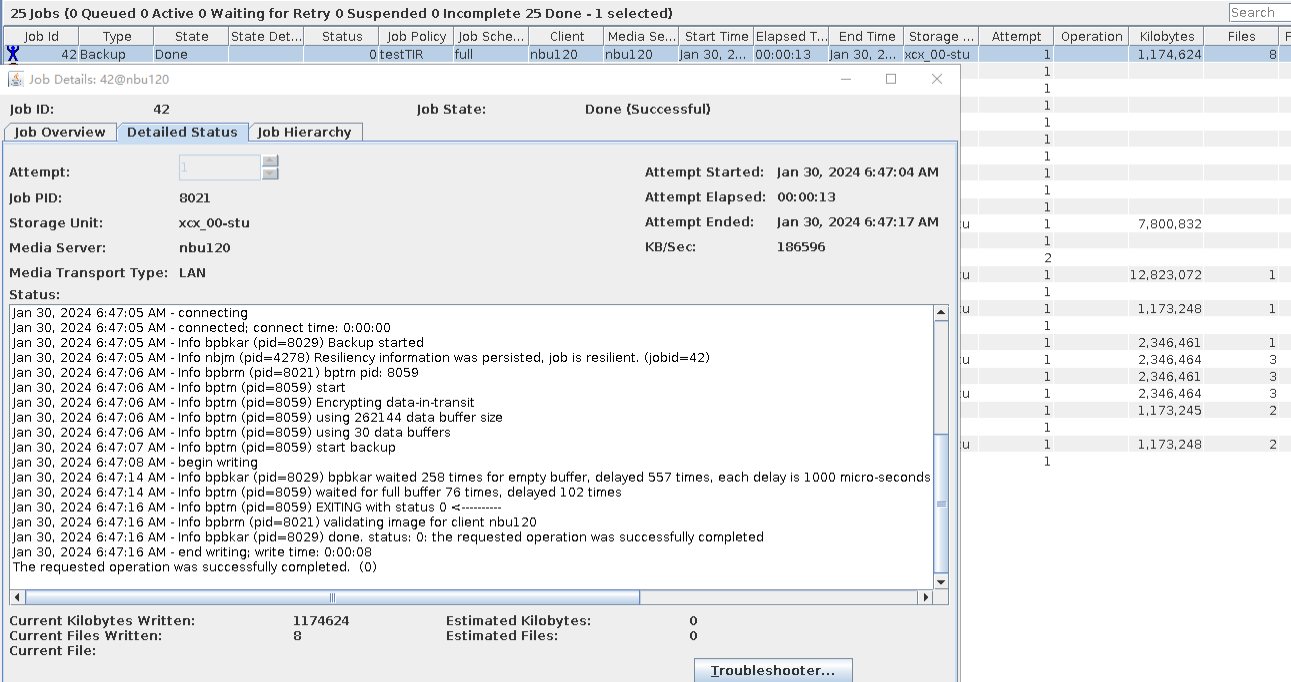

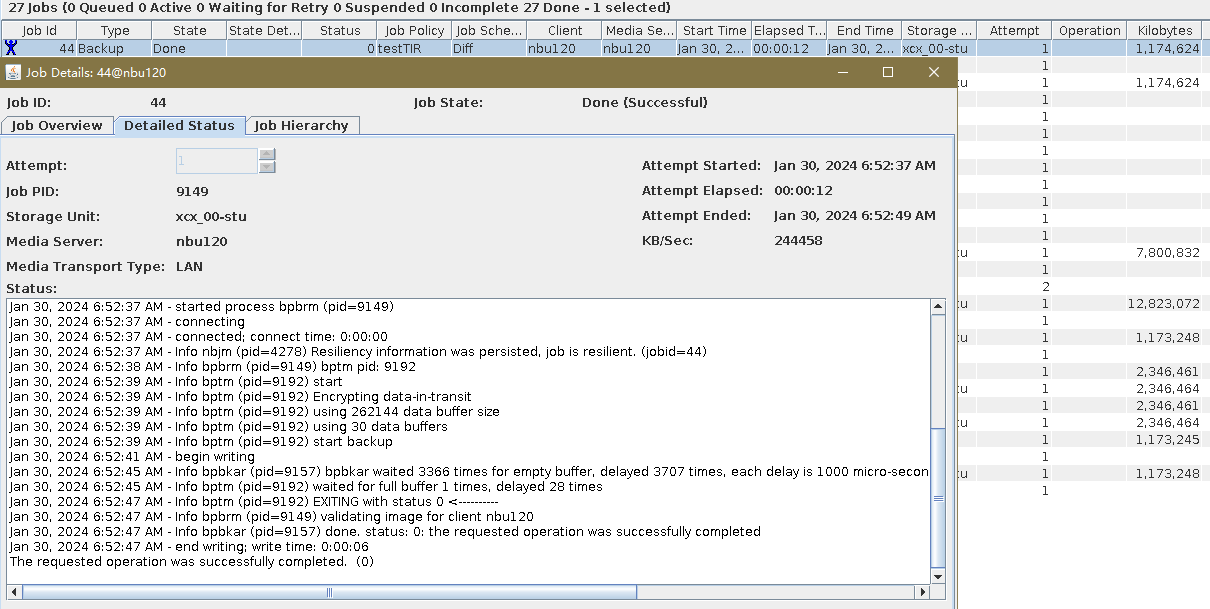

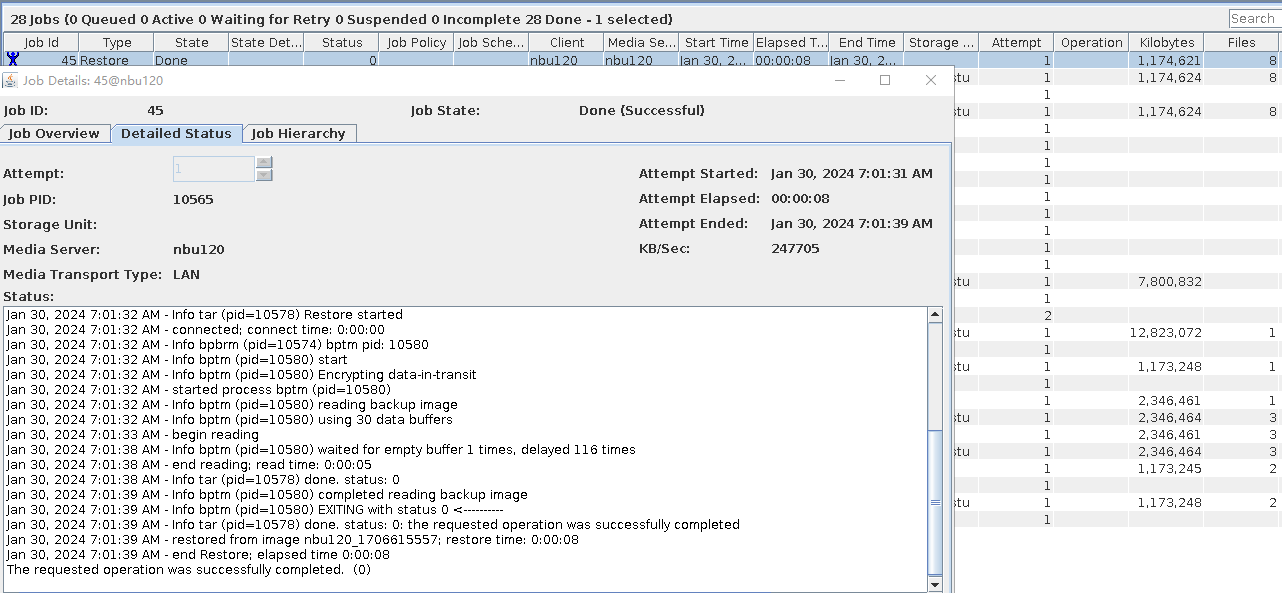

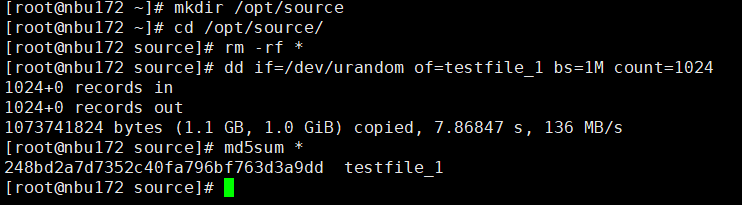

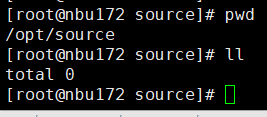

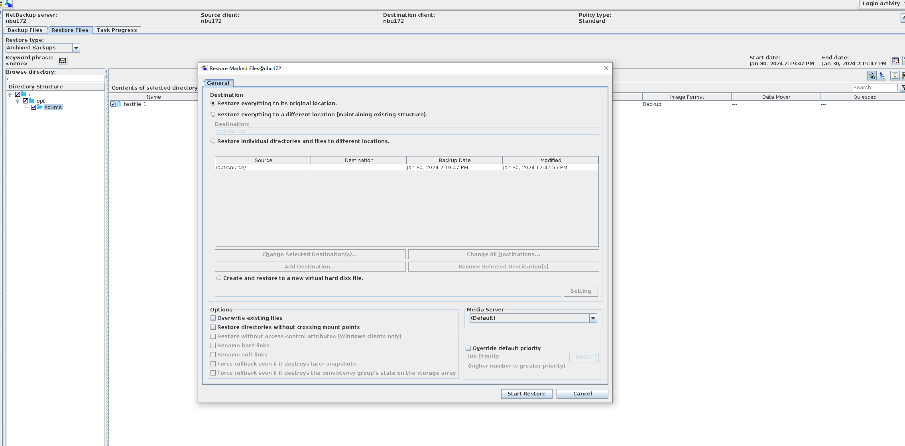

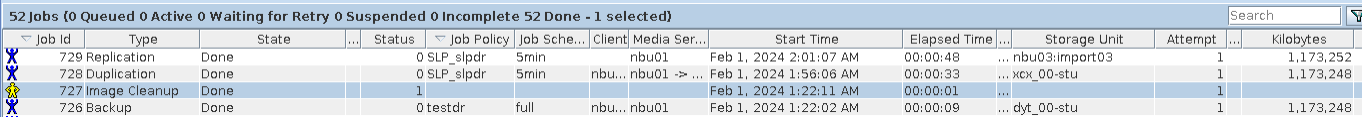

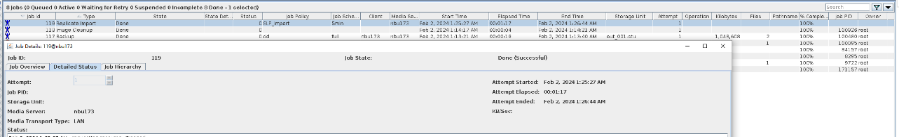

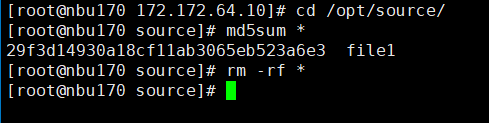

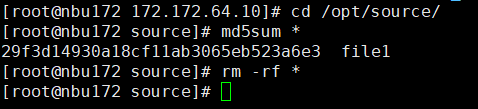

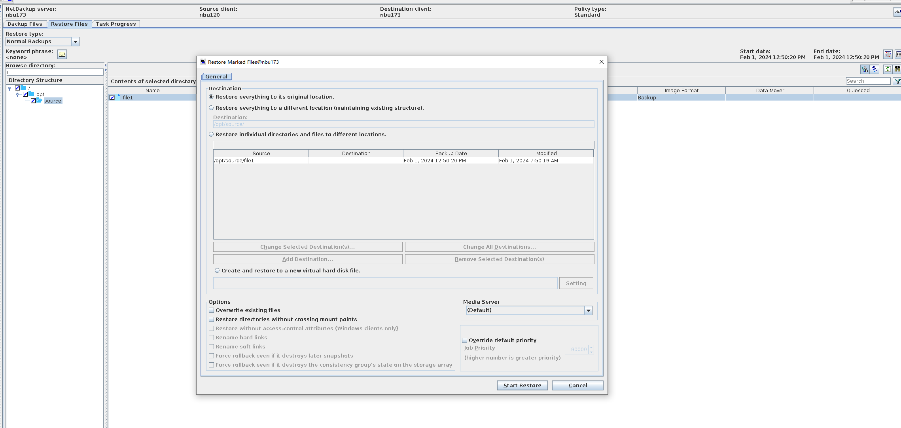

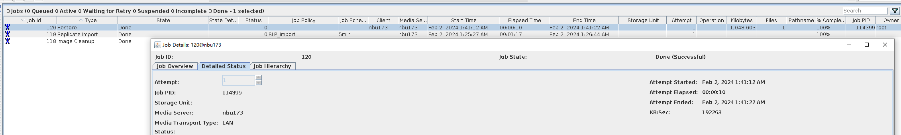

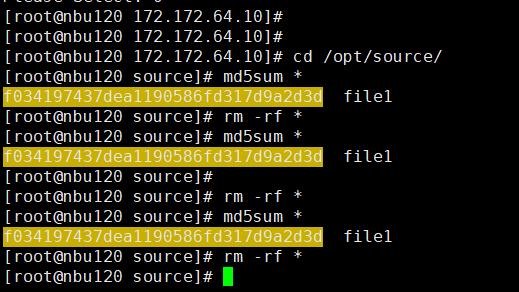

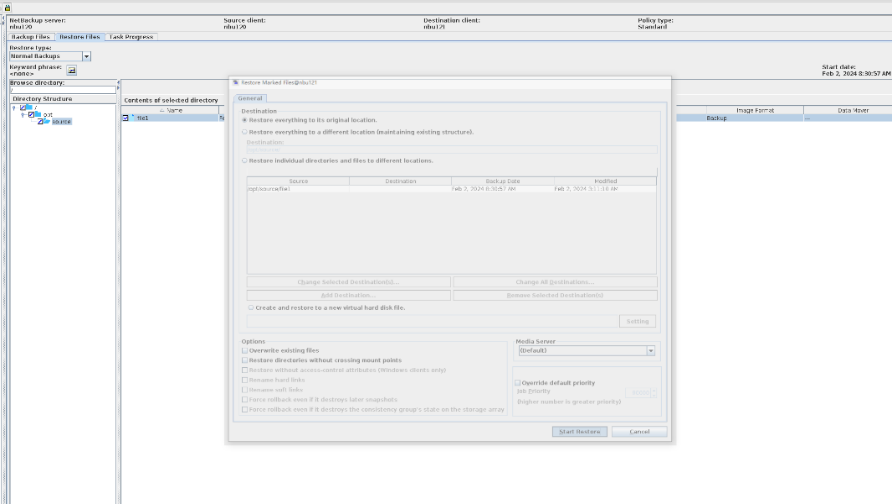

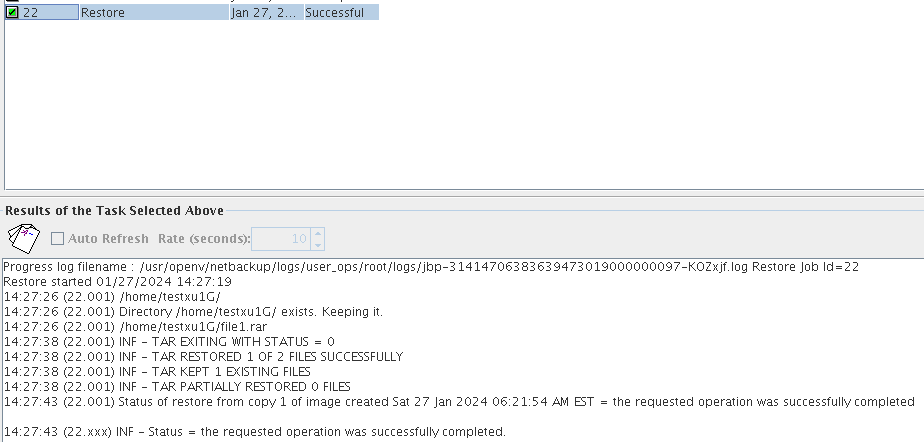

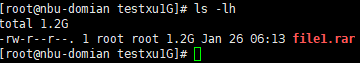

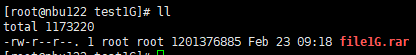

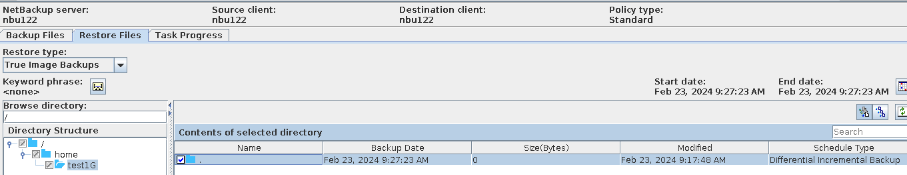

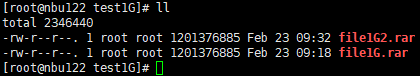

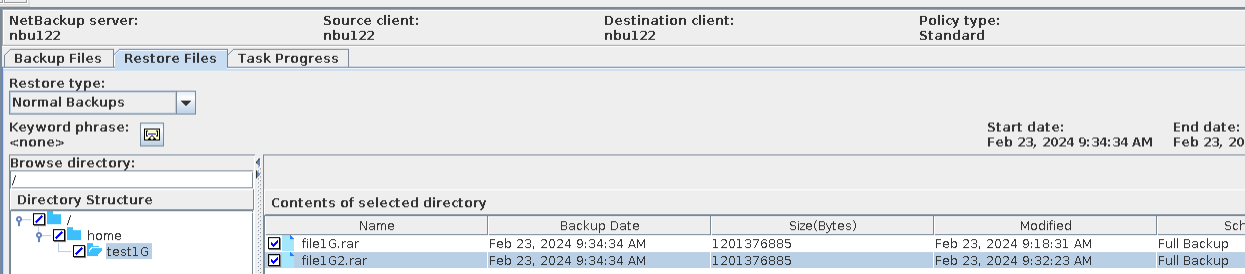

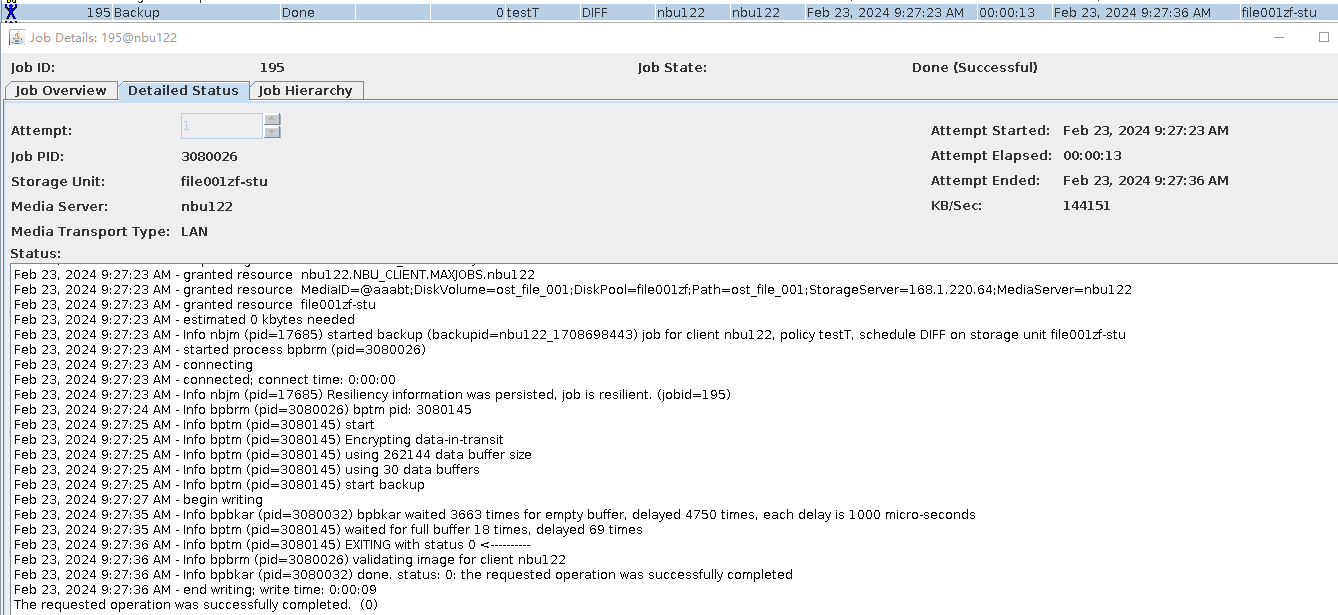

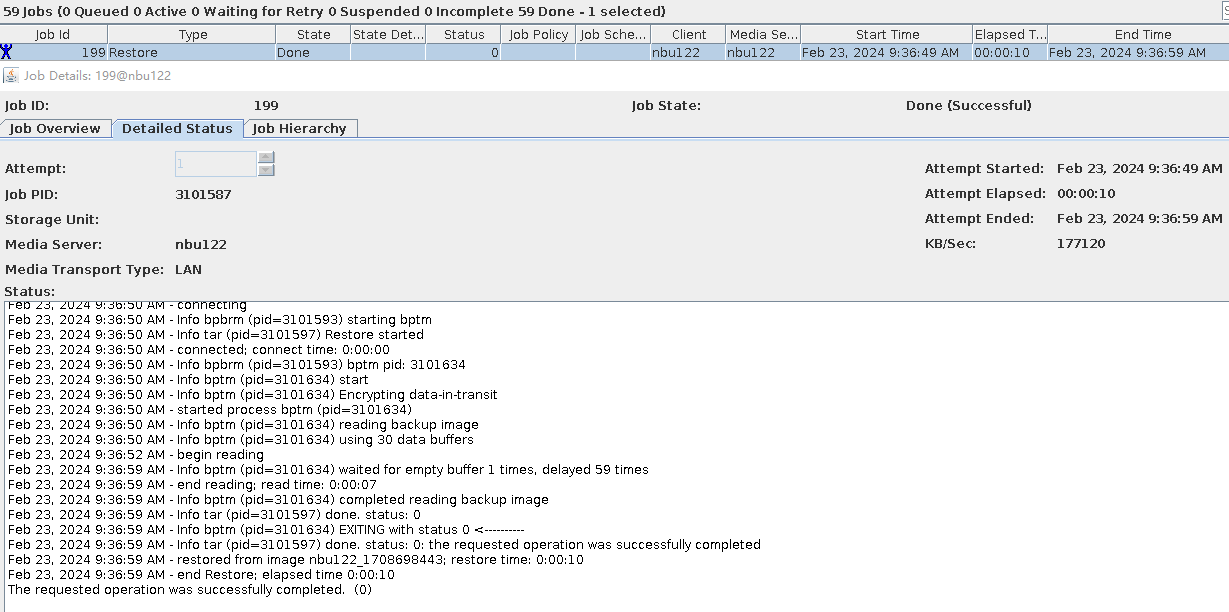

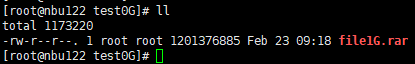

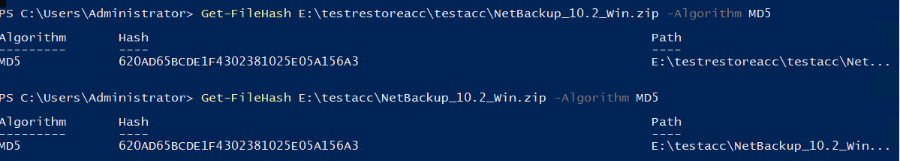

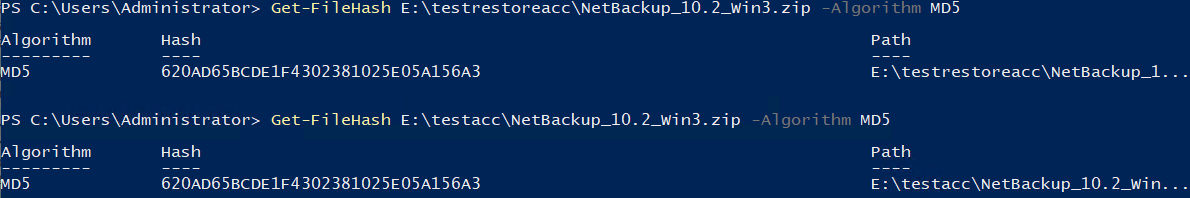

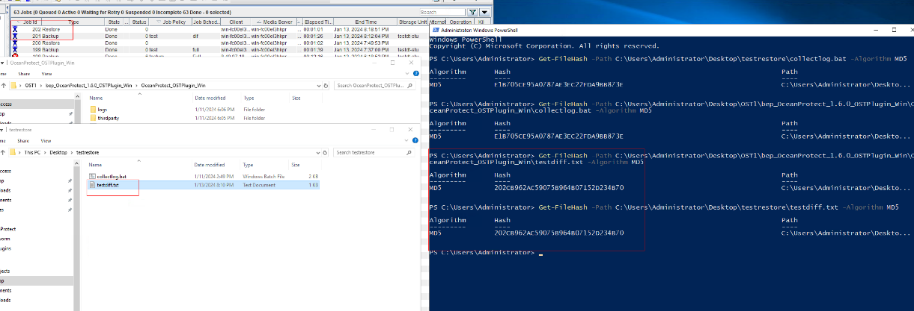

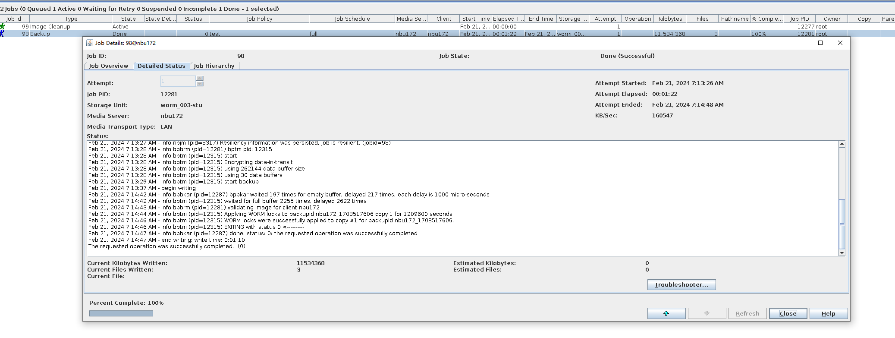

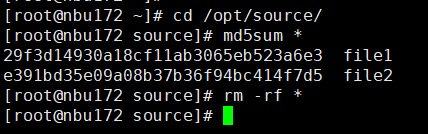

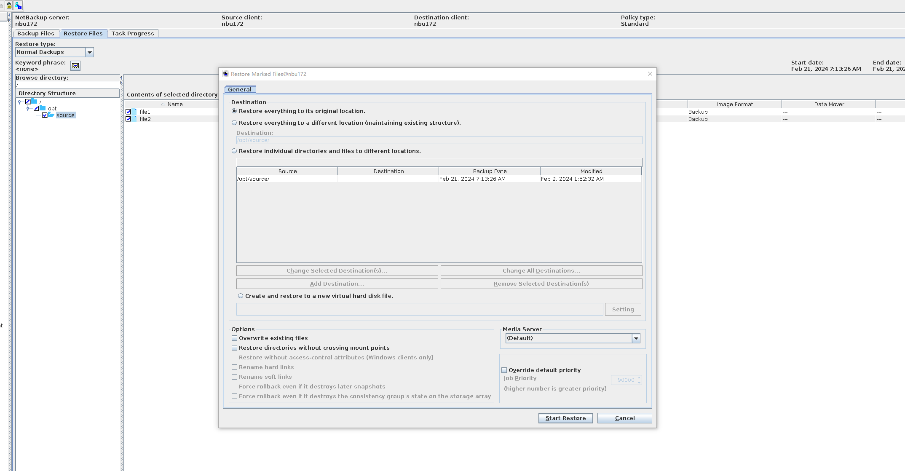

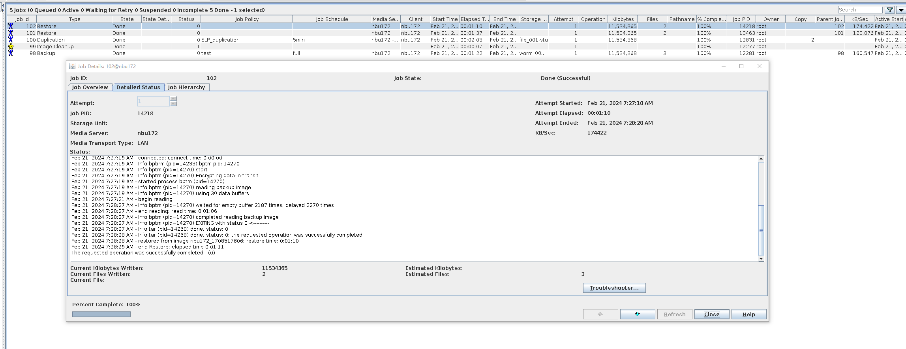

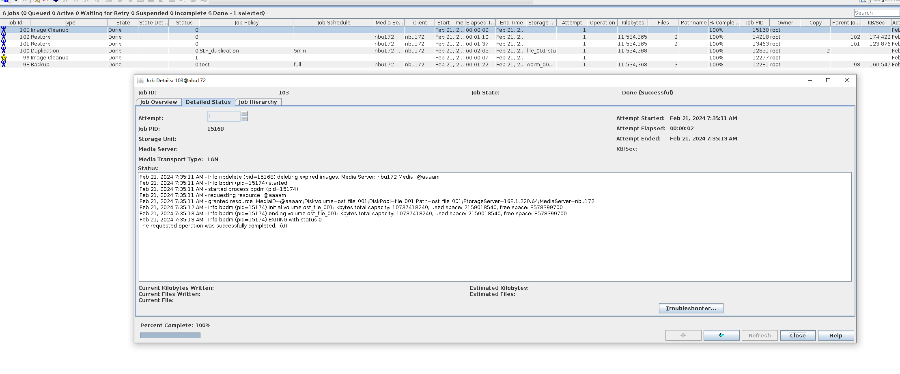

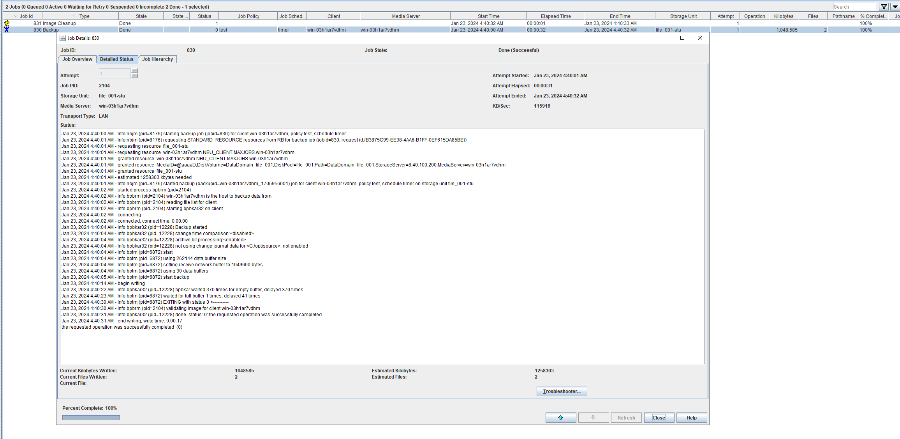

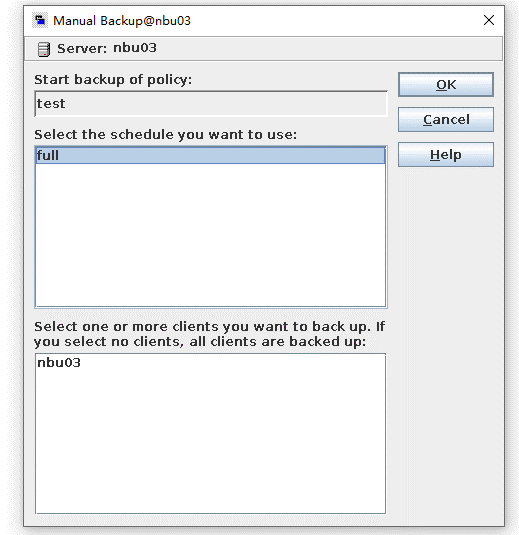

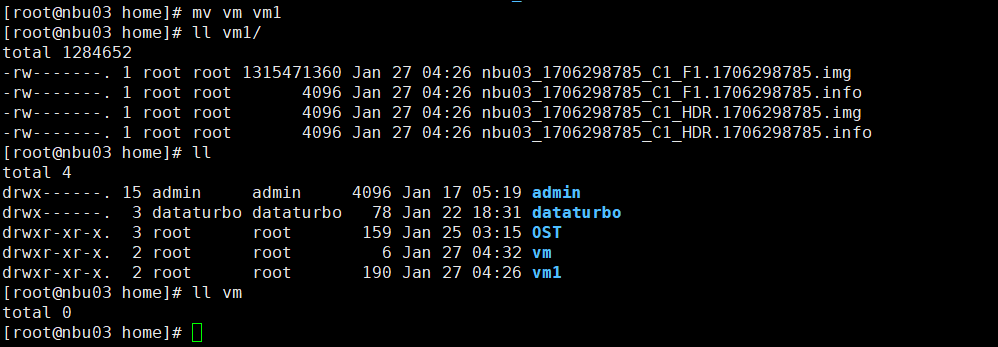

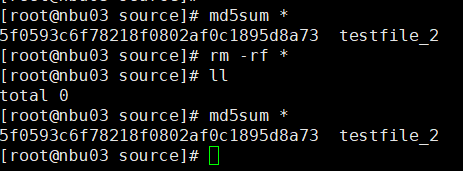

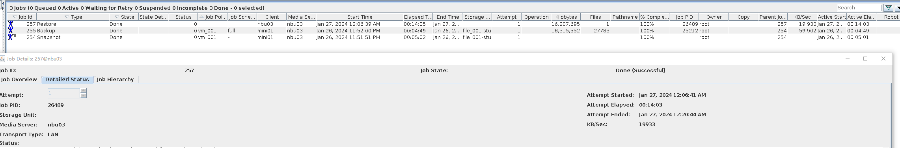

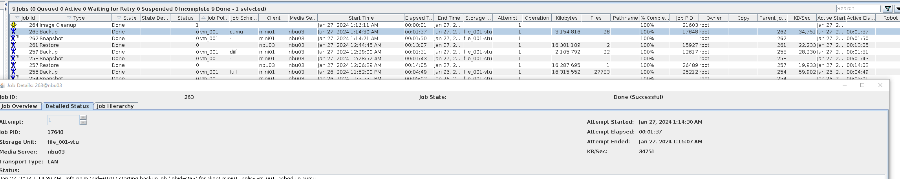

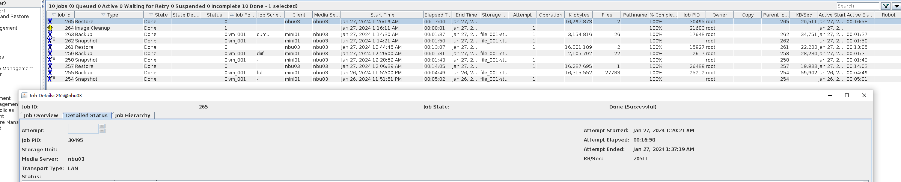

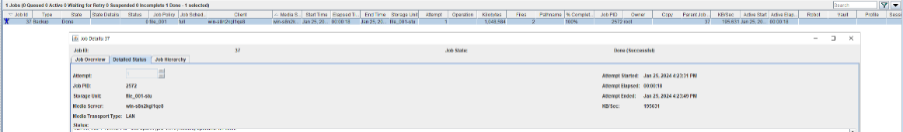

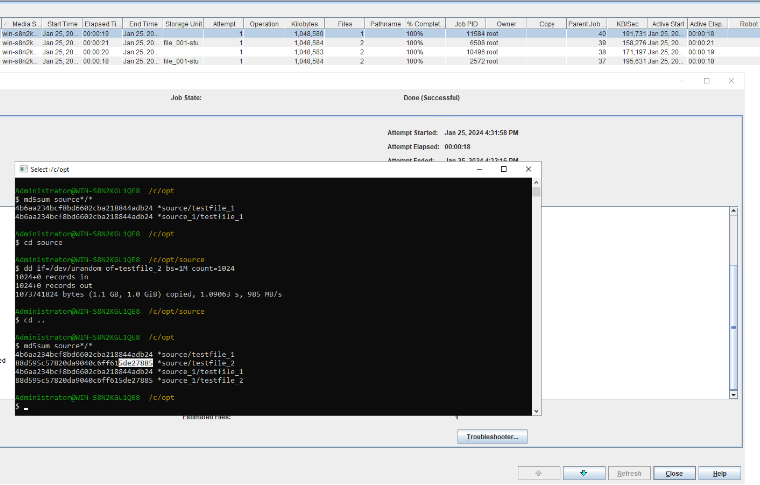

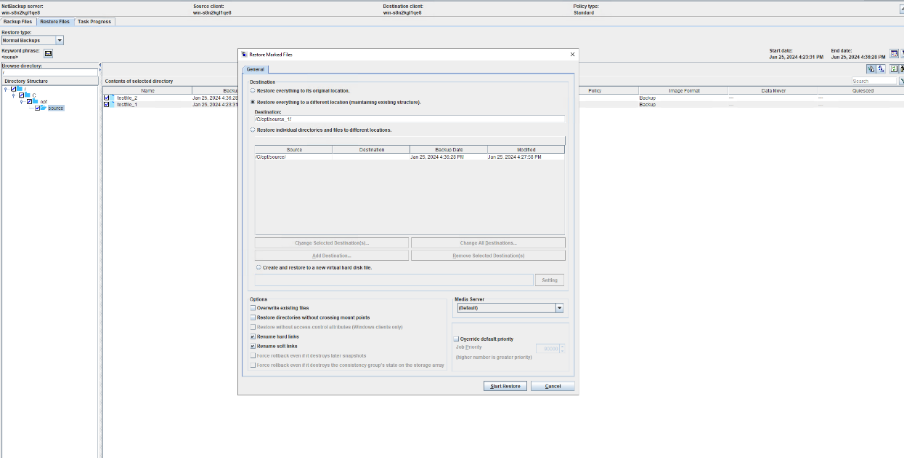

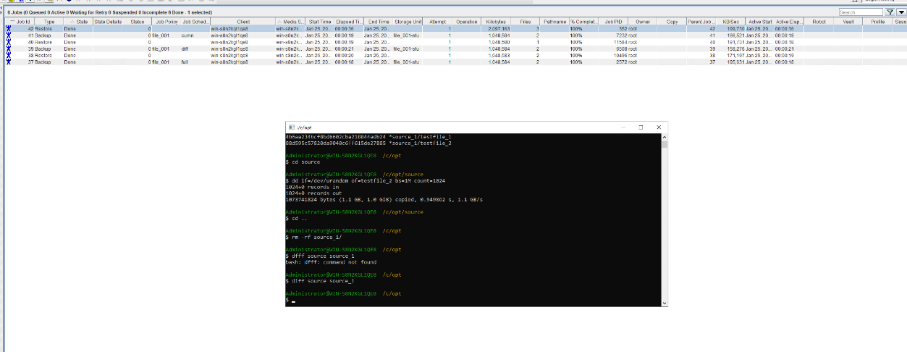

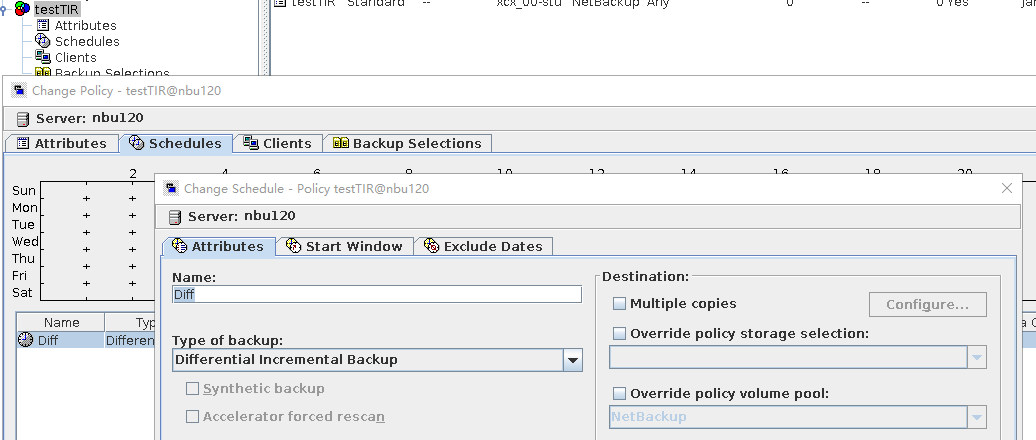

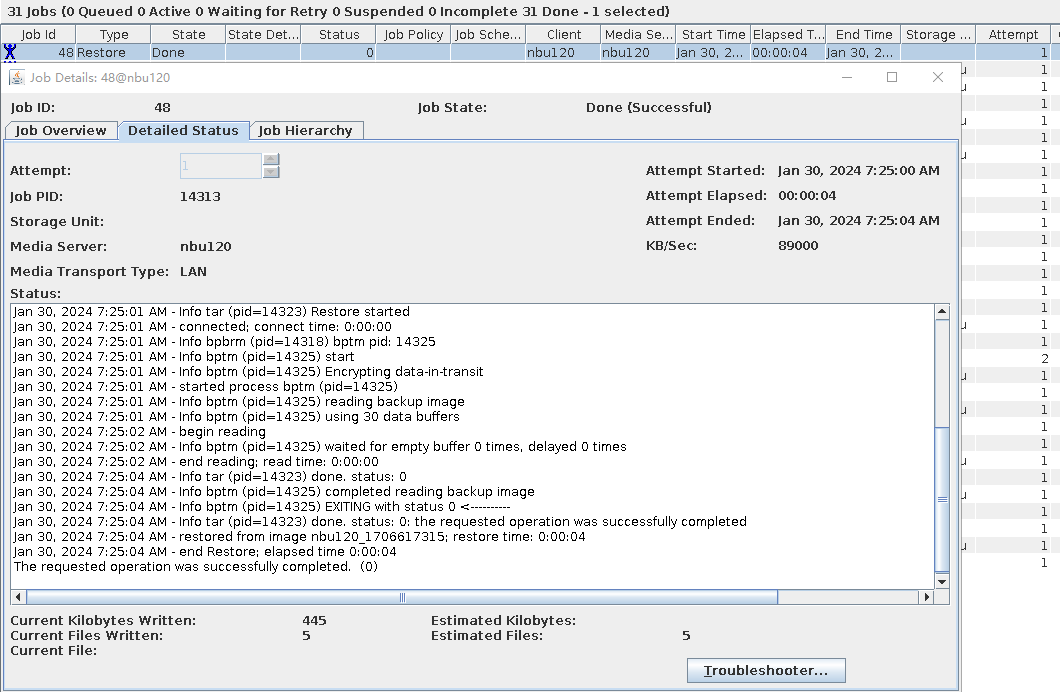

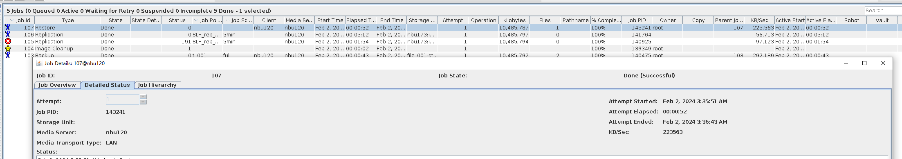

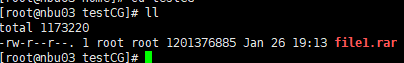

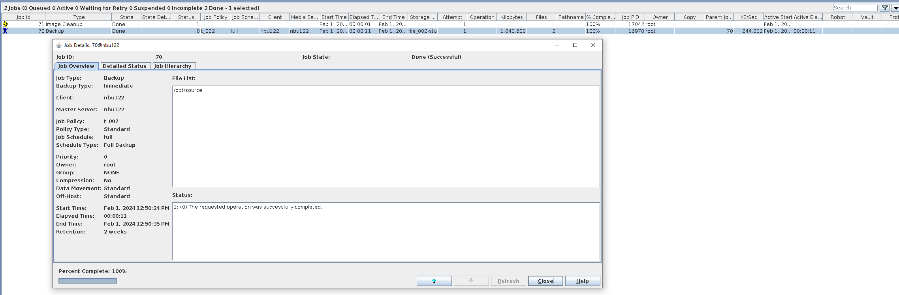

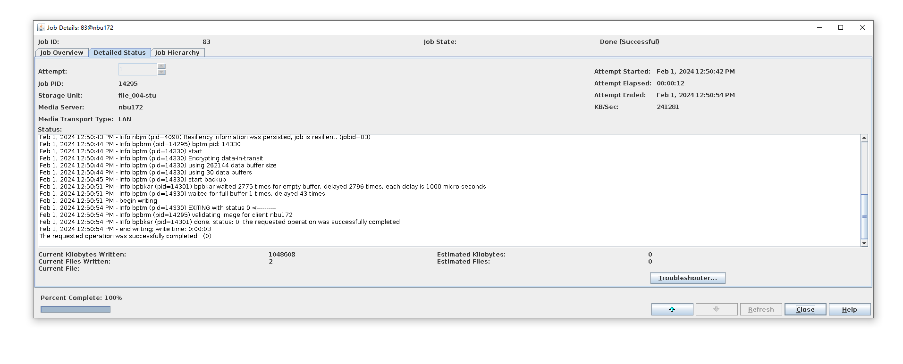

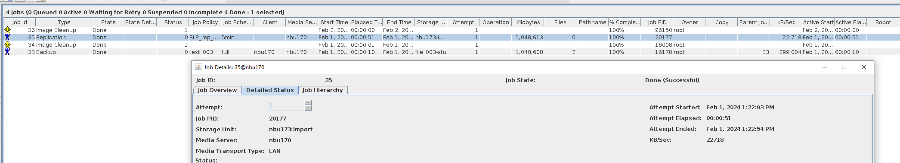

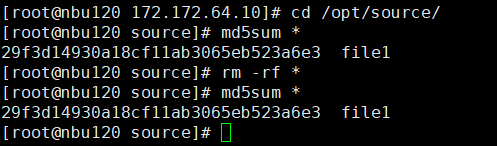

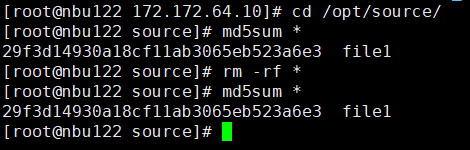

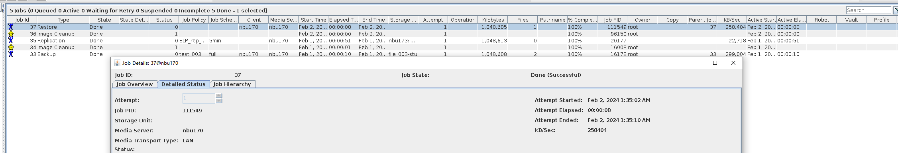

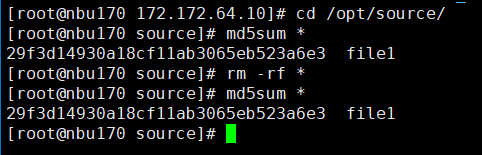

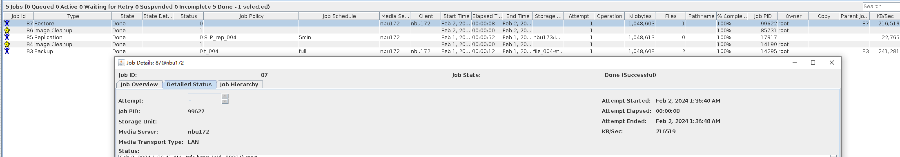

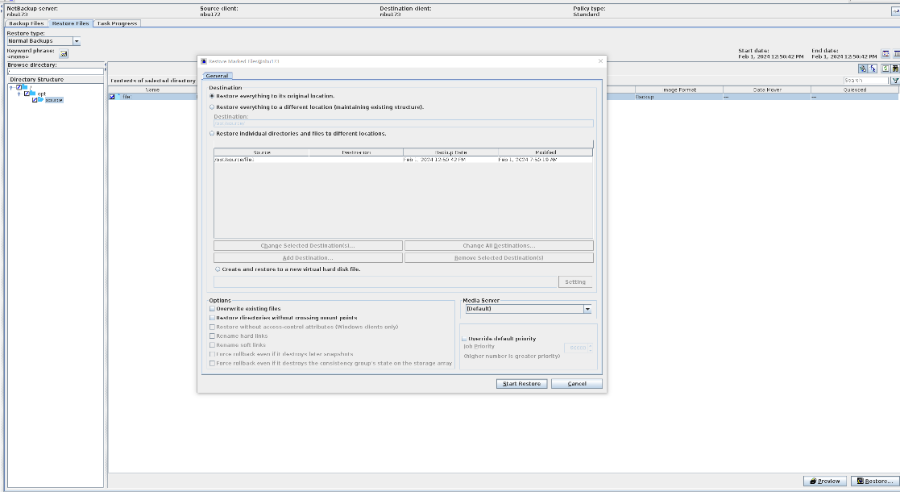

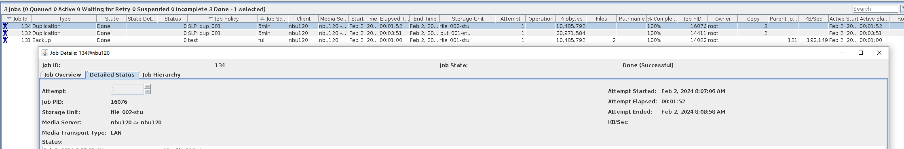

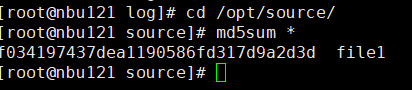

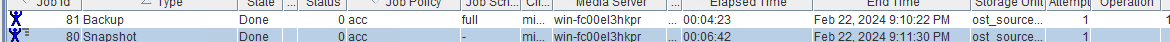

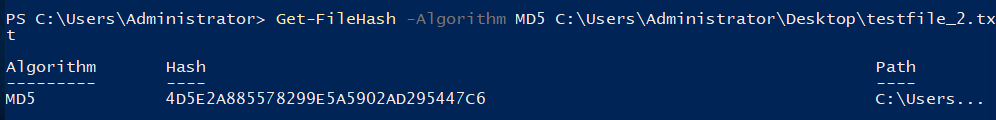

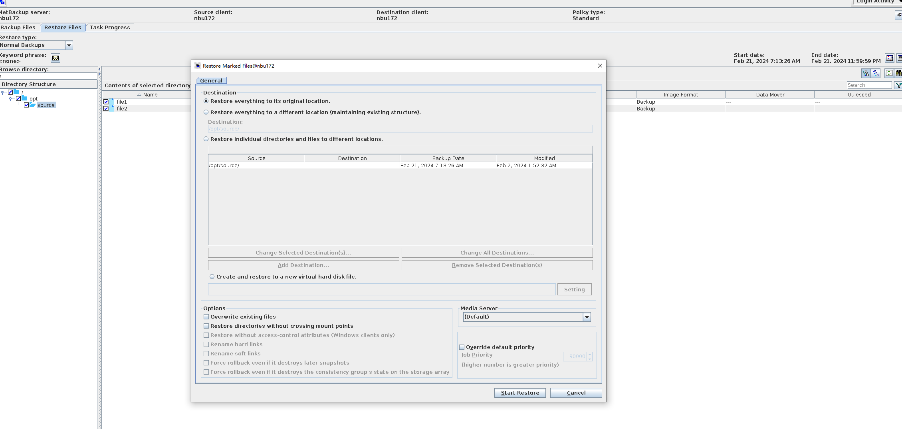

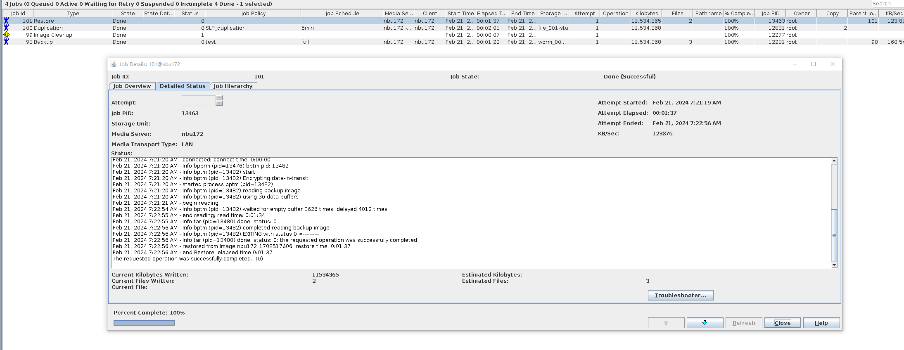

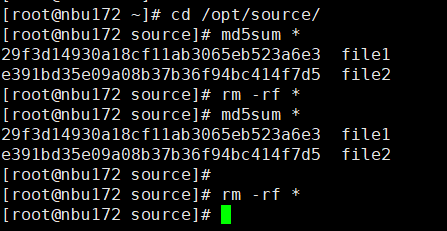

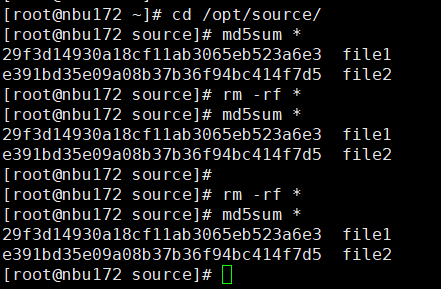

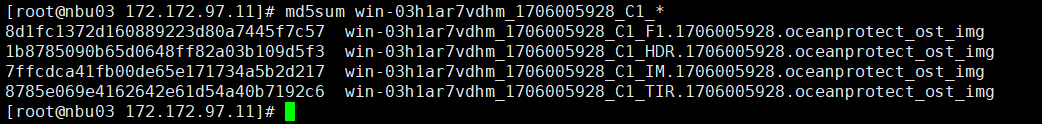

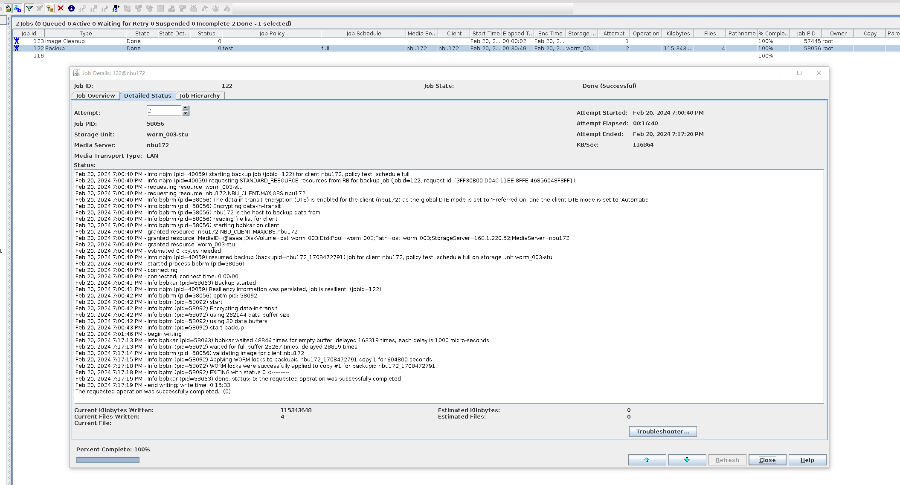

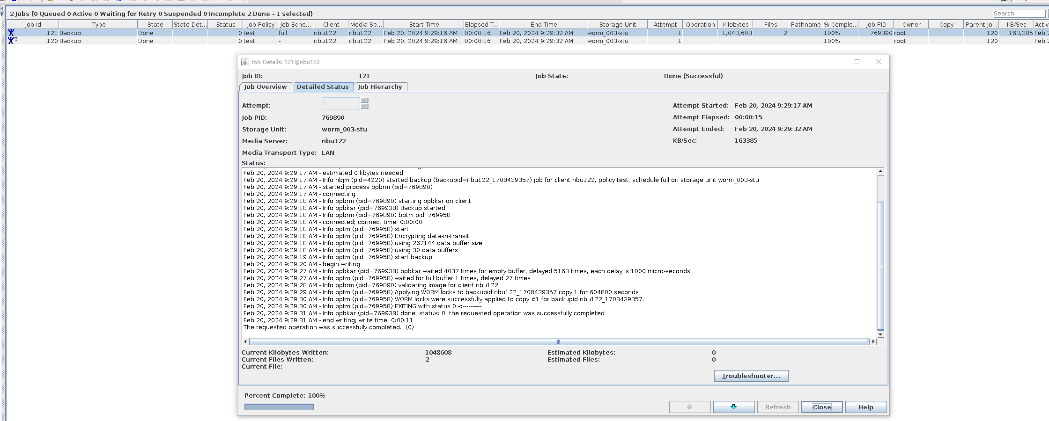

Test Result | 1. Create a storage server, disk pool, storage unit, and policy. The policy name is the same as the client name.

2. Insert the 1 GB file testfile_1 and perform a full backup.

3. Restore the data and verify the data consistency of testfile_1.

4. Insert the 1 GB file testfile_2 and perform a differential incremental backup.

5. Restore the data and verify the data consistency of testfile_2.

6. Modify the 1 GB file testfile_2 and perform a permanent incremental backup.

7. Restore the data and verify the data consistency of testfile_2. |

Conclusion |

|

Remarks |
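The "insert a test file, back up, restore, and verify data consistency" steps above can be scripted on the client. The following is a minimal sketch, not the tooling used in this test: the helper names (`make_test_file`, `sha256_of`, `is_consistent`) are hypothetical, and it assumes that consistency is judged by comparing file size and SHA-256 digest of the original and restored files.

```python
import hashlib
import os
from pathlib import Path

CHUNK = 1024 * 1024  # read/write granularity: 1 MiB


def make_test_file(path: Path, size_bytes: int) -> None:
    """Fill `path` with `size_bytes` of random data, one chunk at a time."""
    remaining = size_bytes
    with path.open("wb") as fh:
        while remaining > 0:
            n = min(CHUNK, remaining)
            fh.write(os.urandom(n))
            remaining -= n


def sha256_of(path: Path) -> str:
    """Return the hex SHA-256 digest of a file without loading it into memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for block in iter(lambda: fh.read(CHUNK), b""):
            digest.update(block)
    return digest.hexdigest()


def is_consistent(original: Path, restored: Path) -> bool:
    """Assumed consistency criterion: same size and same SHA-256 digest."""
    return (
        original.stat().st_size == restored.stat().st_size
        and sha256_of(original) == sha256_of(restored)
    )
```

For a 1 GB file such as testfile_1, a call like `make_test_file(Path("testfile_1"), 1024**3)` before the backup and `is_consistent(original, restored)` after the restore would cover one backup/restore pair. Restoring to an alternate location keeps the original available for comparison.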

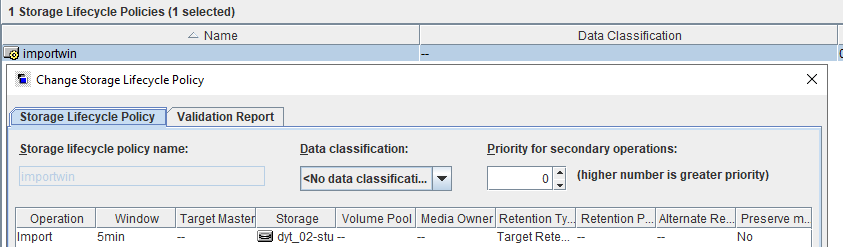

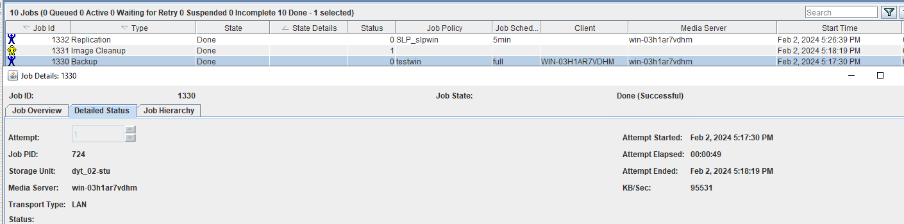

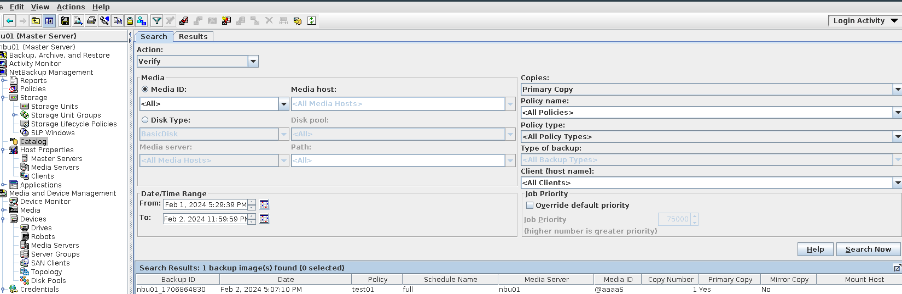

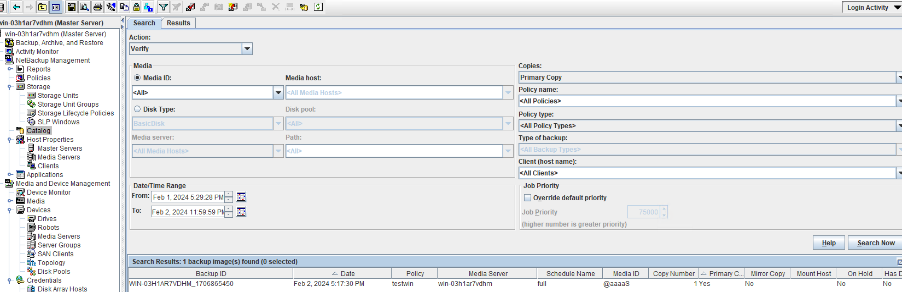

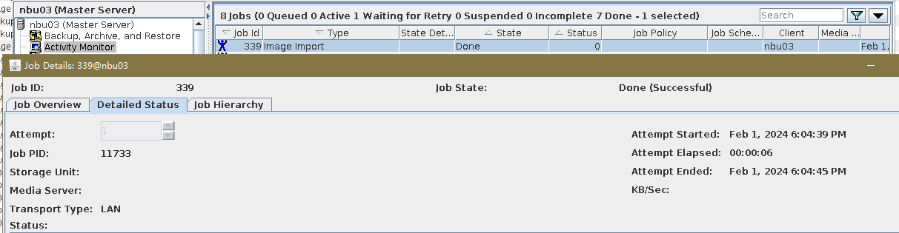

3.1.2 Open Storage Backup, Import, Verify

Objective | To verify that the backup system with OceanProtect can back up, import, and pass verification |

Networking | Networking diagram for verifying functions |

Prerequisites |

|

Procedure |

|

Expected Result |

|

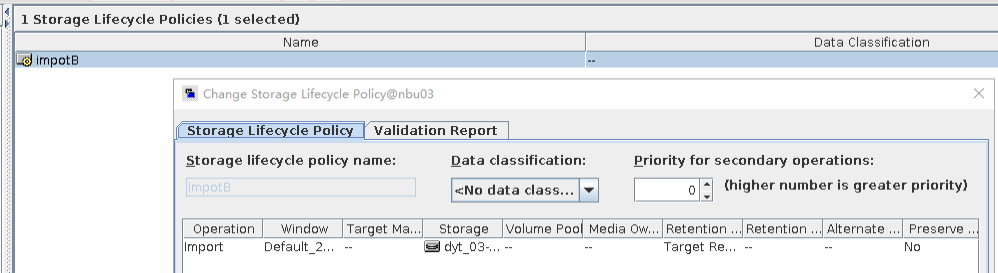

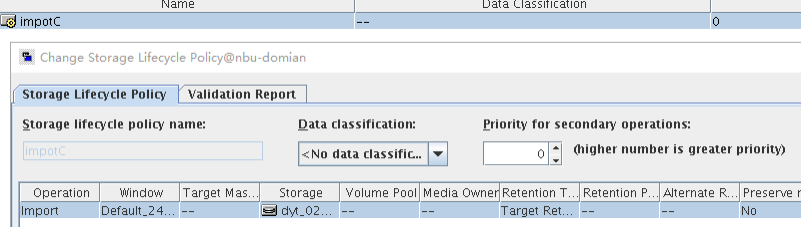

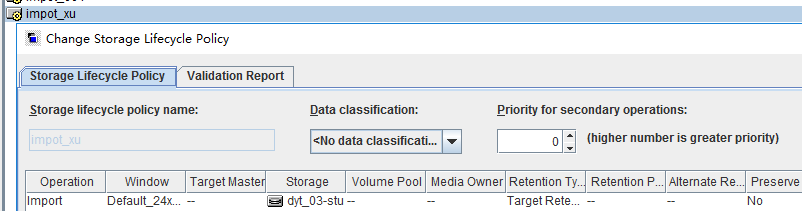

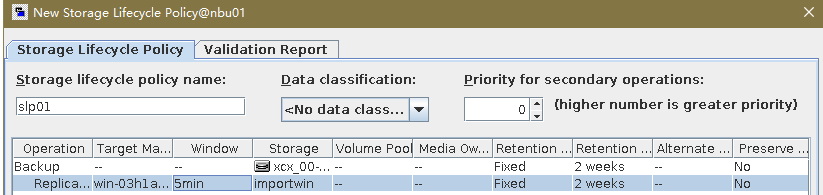

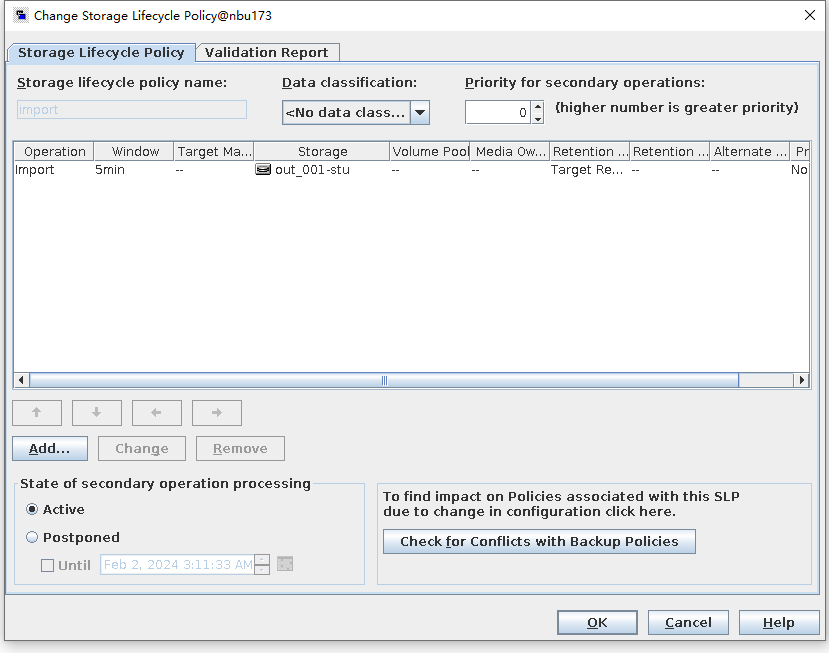

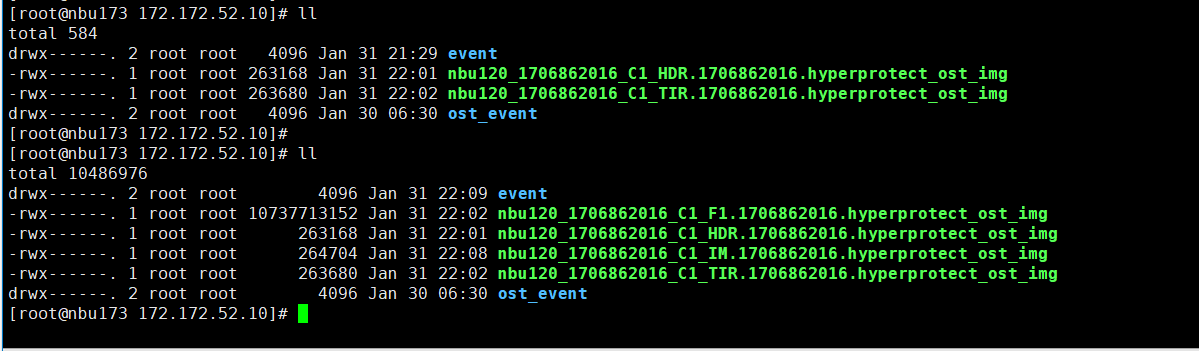

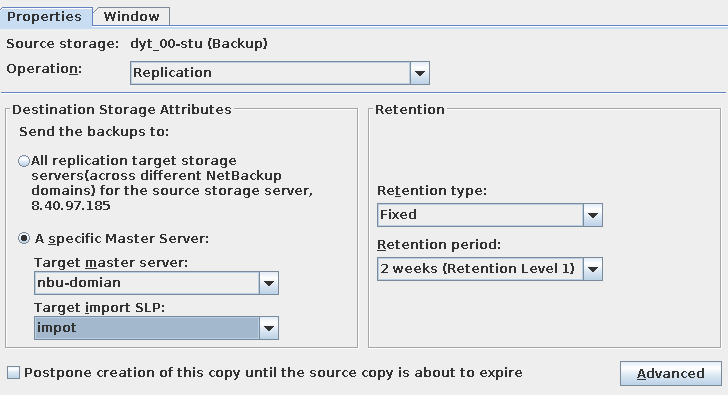

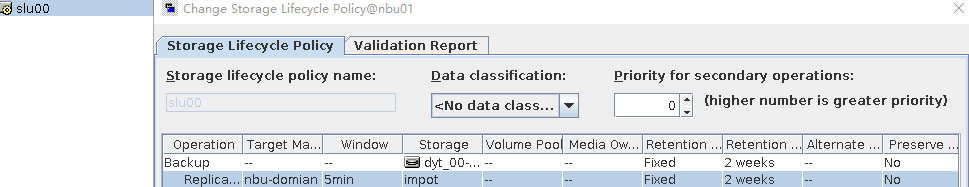

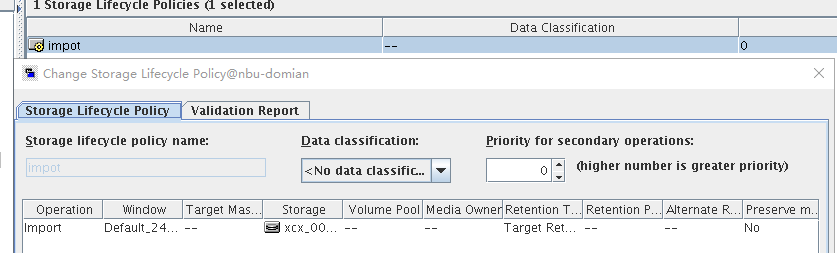

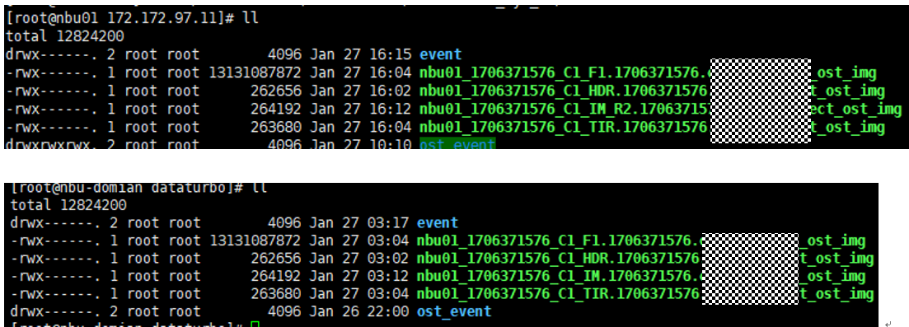

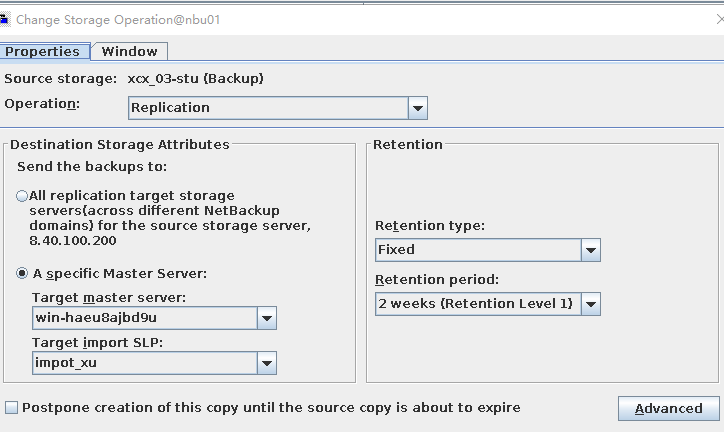

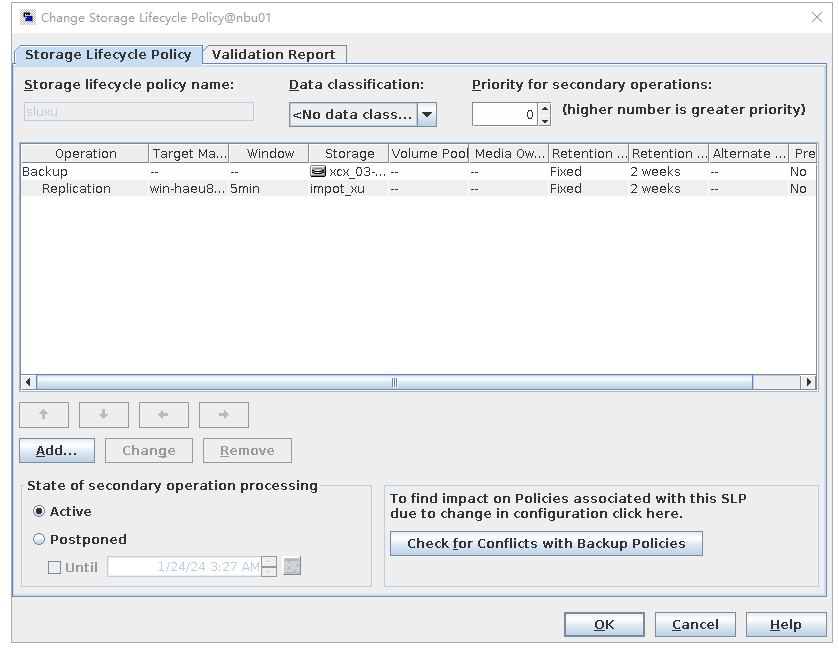

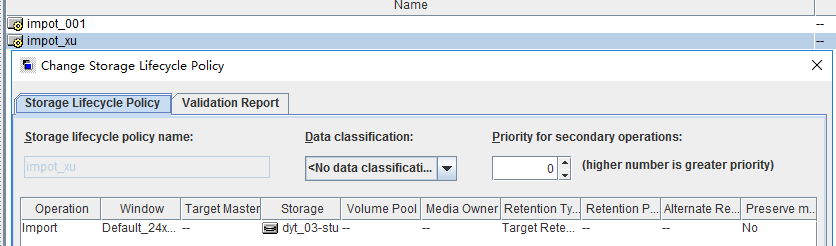

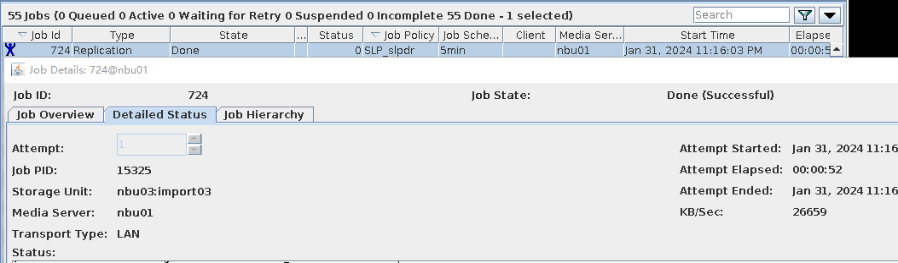

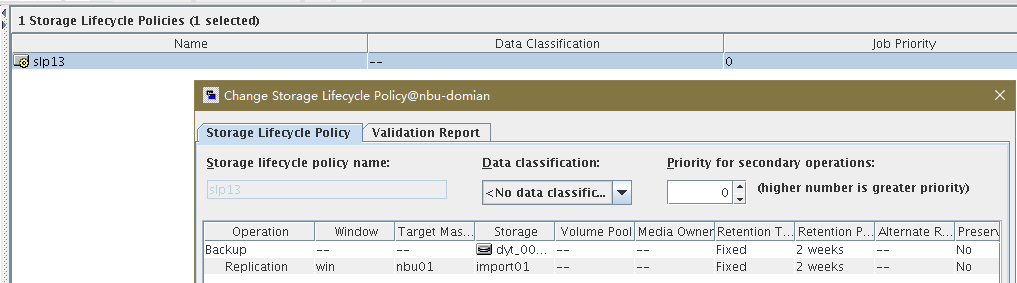

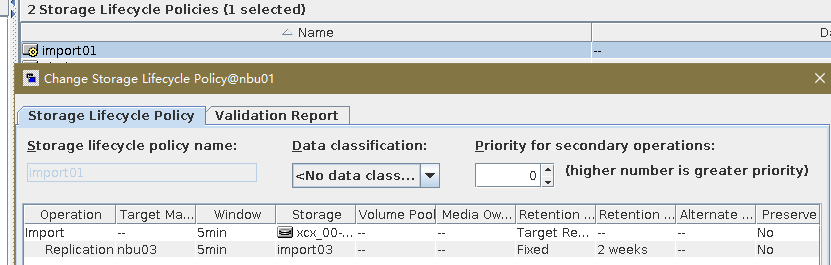

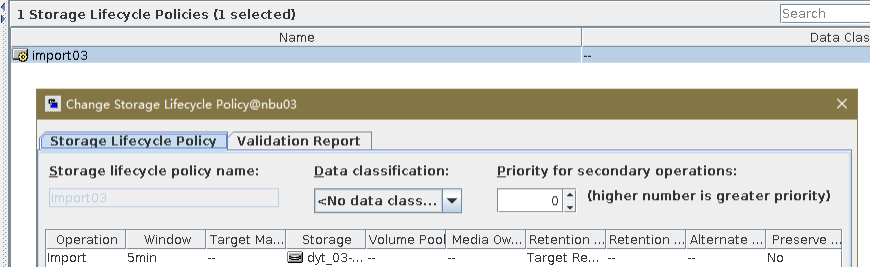

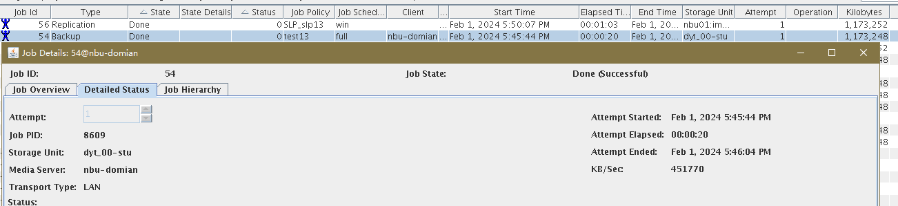

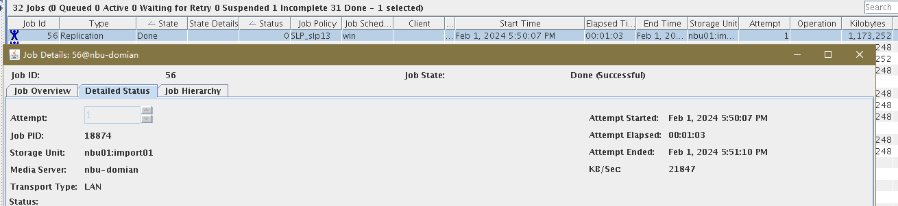

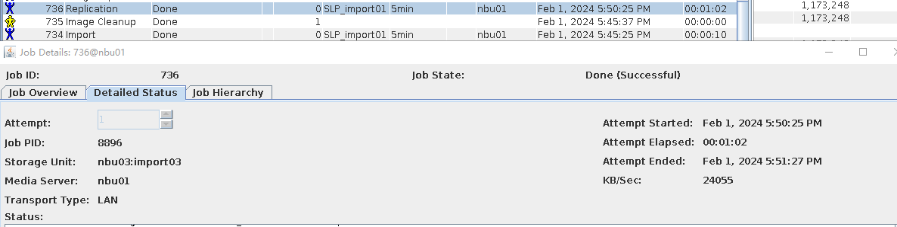

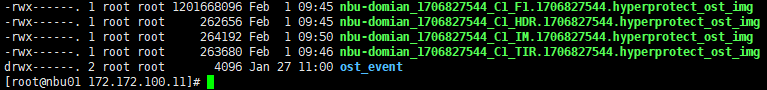

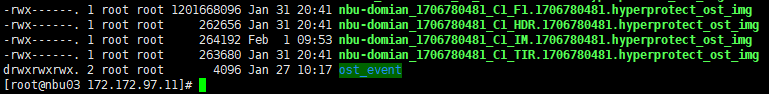

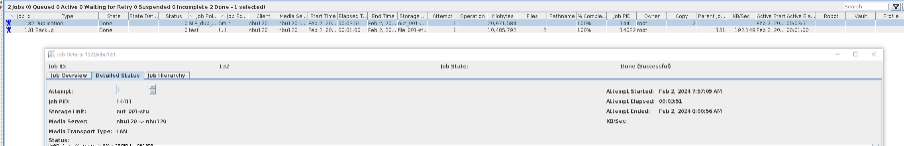

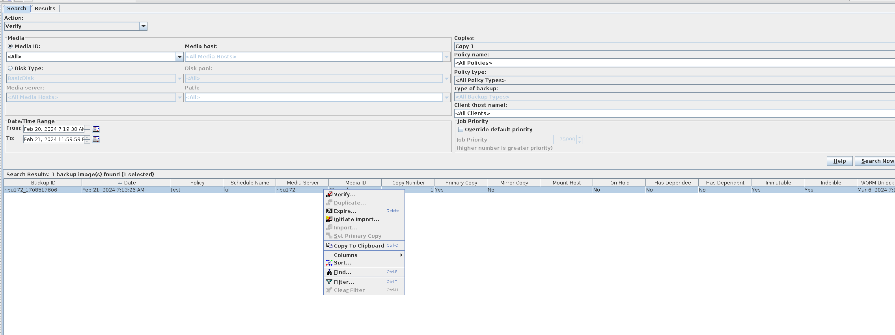

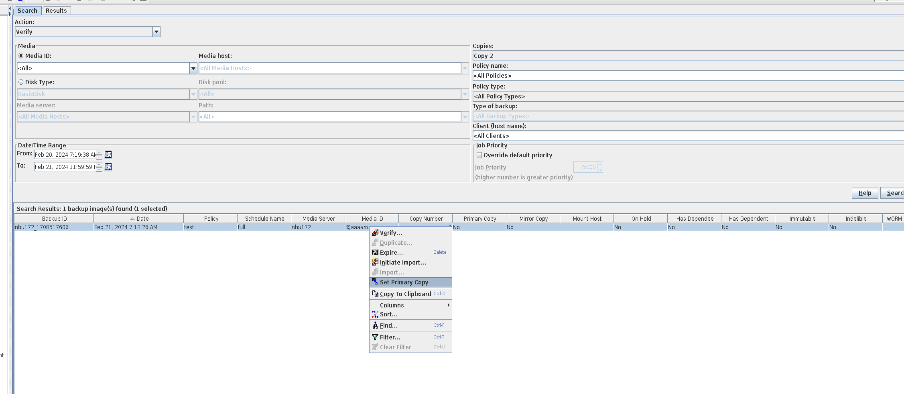

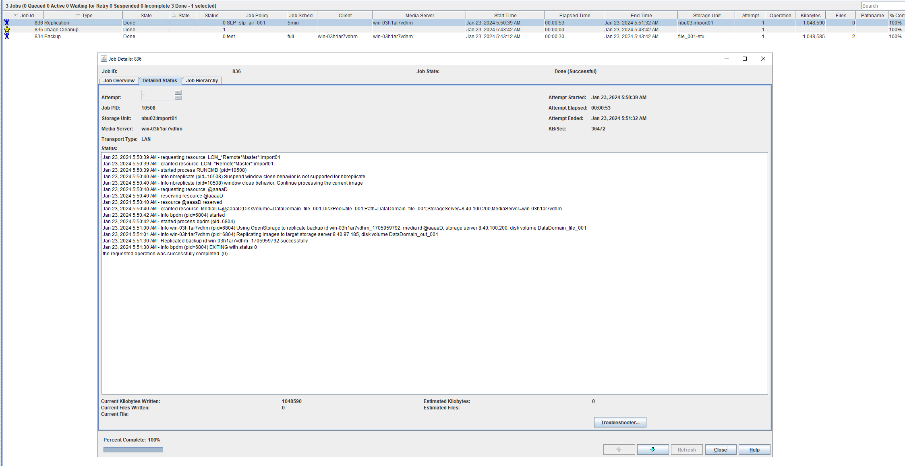

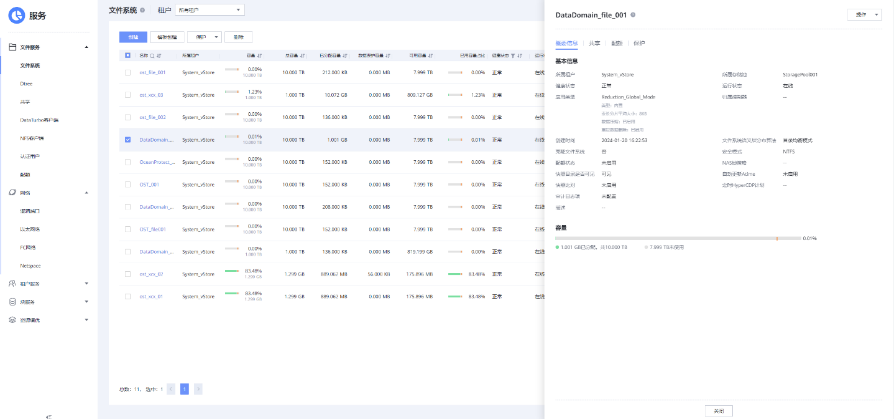

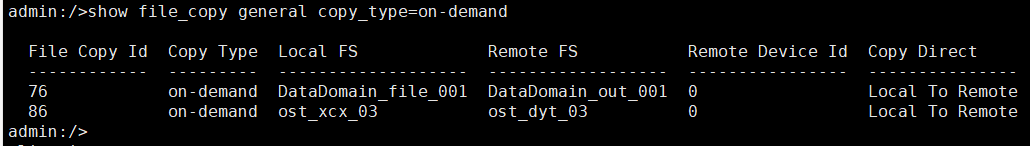

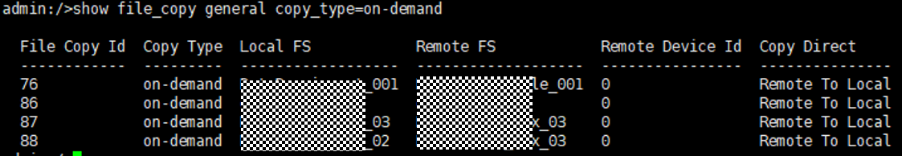

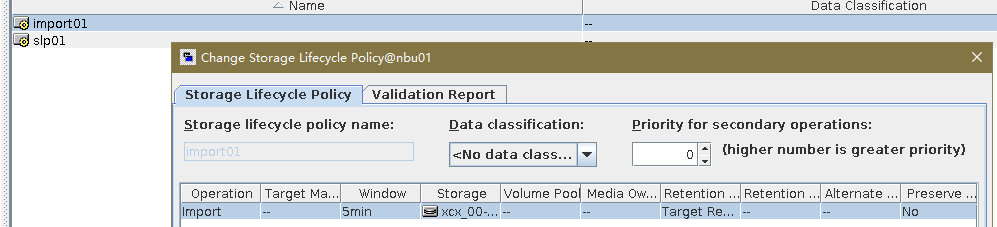

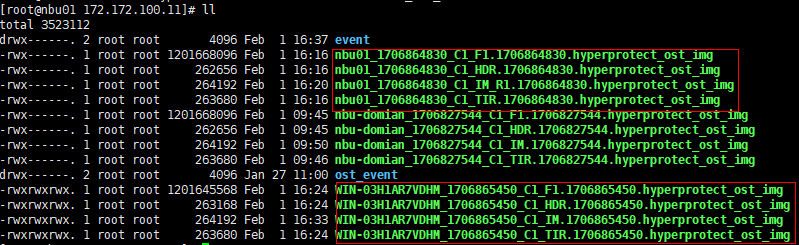

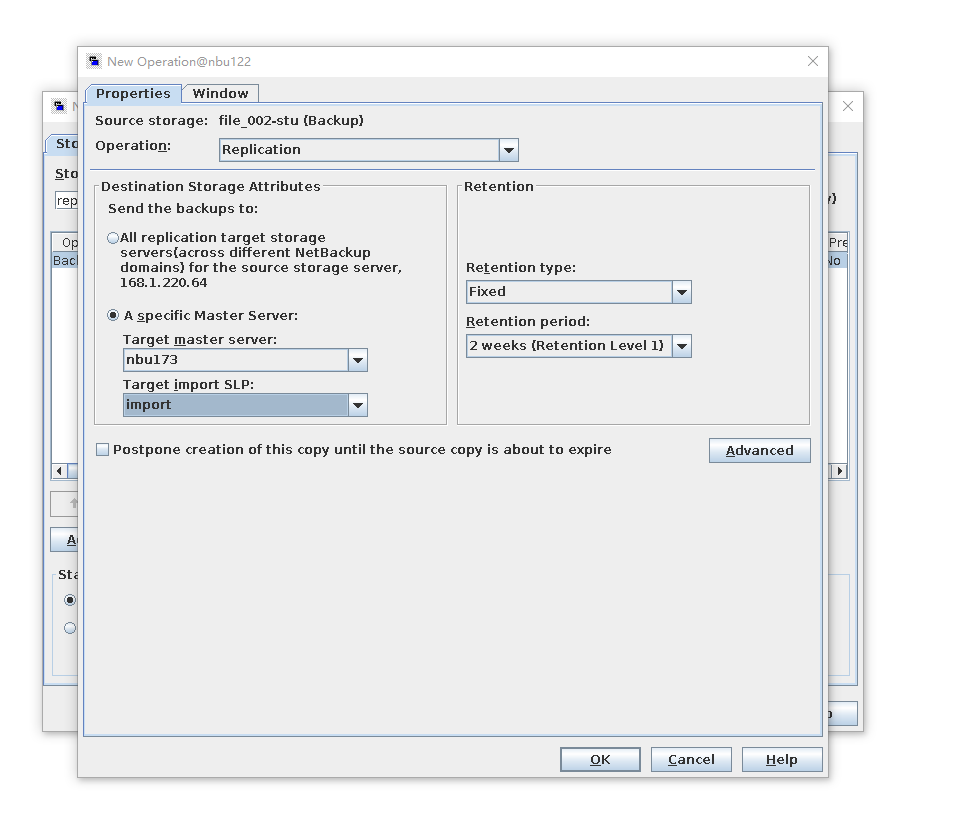

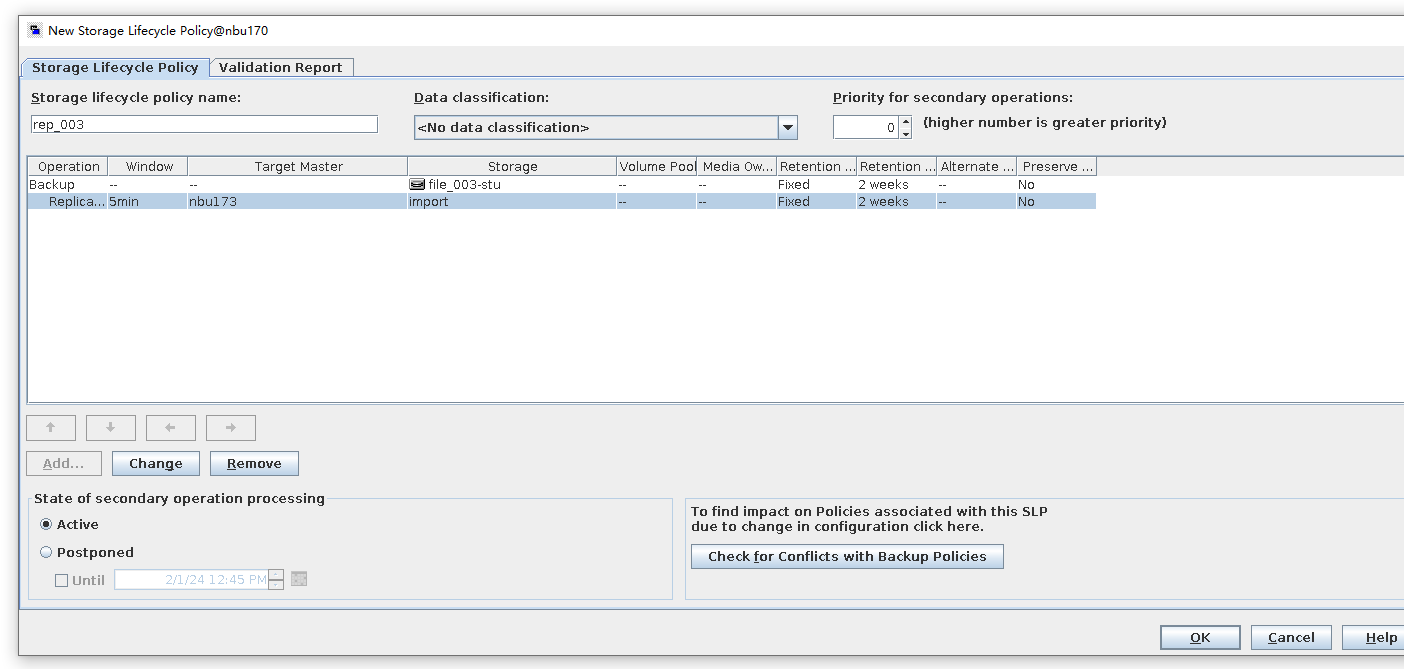

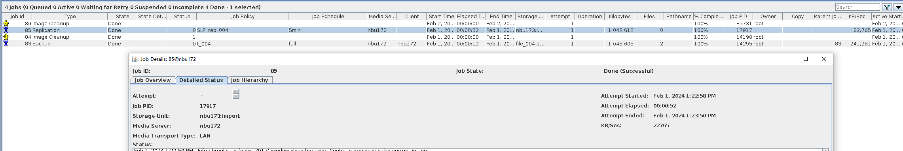

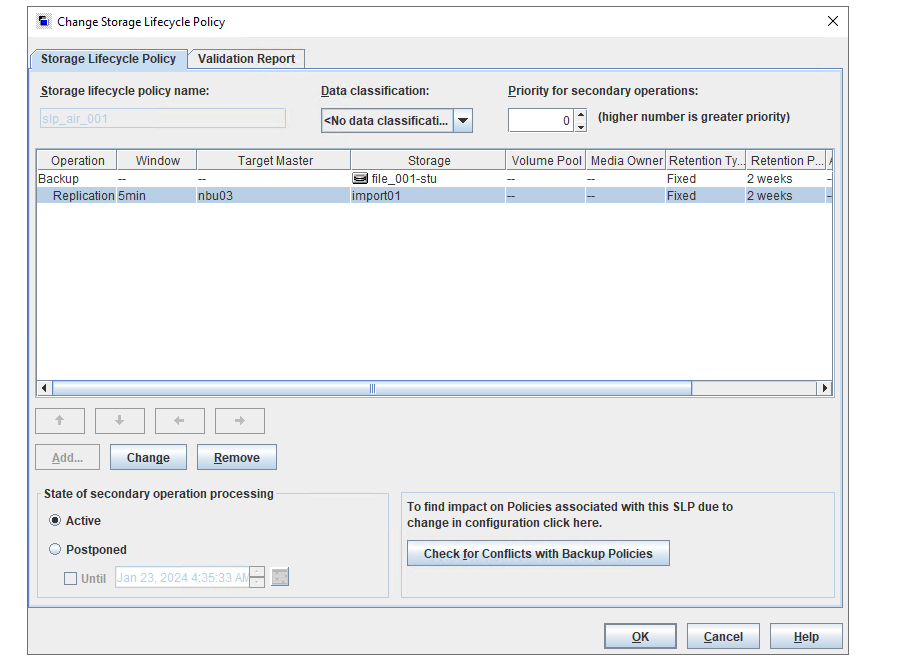

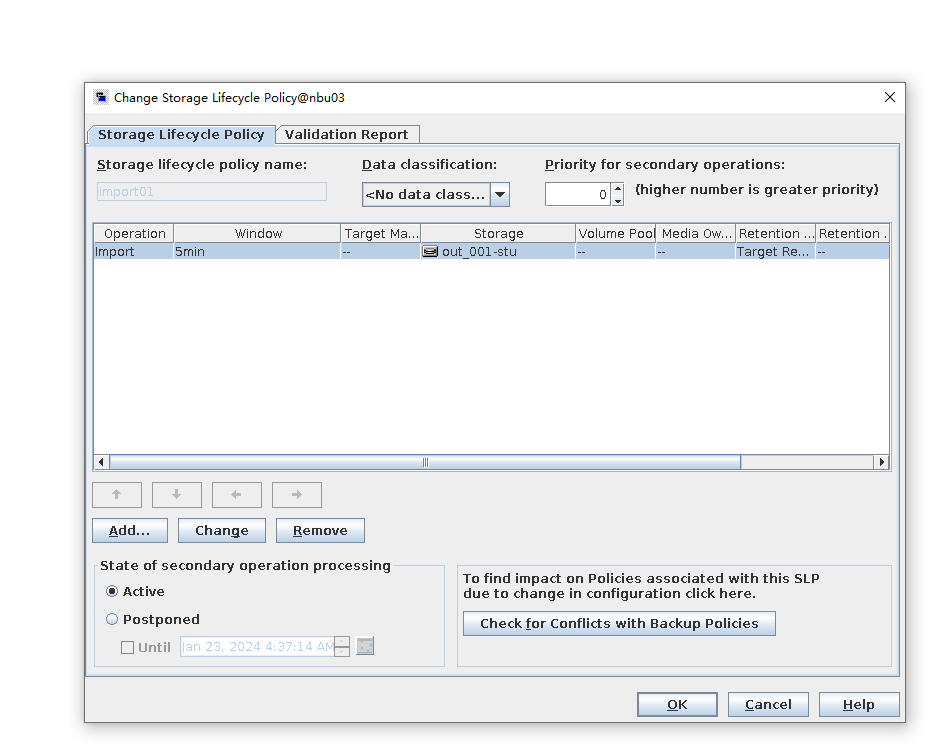

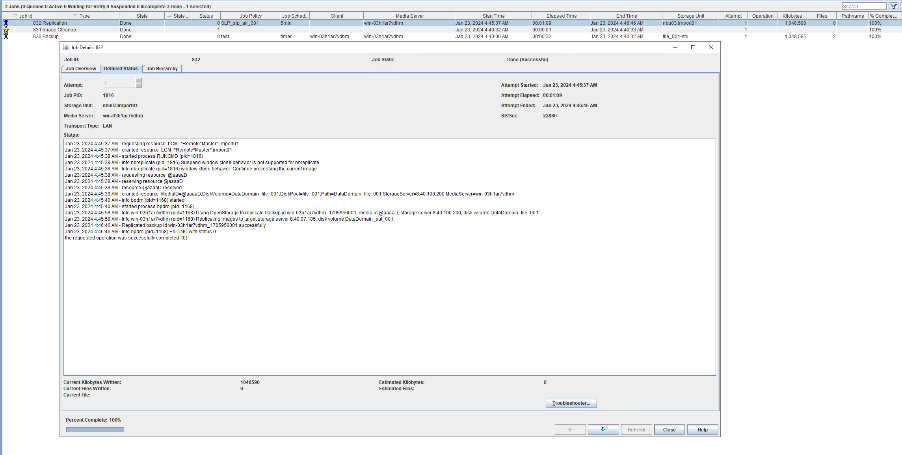

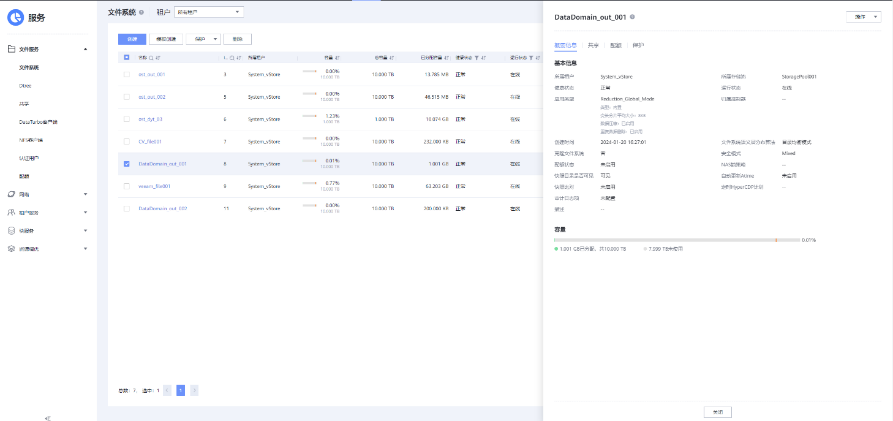

Test Result | 1. Log in to the Master Server as the system administrator.

2. Configure the file replication links between the source LSU and multiple destination LSUs (A->B and A->C).

3. On the source master server, configure the replication SLP policy, select Replication, and select Target master server. //The configuration is successful.

4. Configure storage units and import policies on each destination end.

5. On the source end, configure a backup policy based on the replication SLP policy.

6. Set a scheduled backup task and perform the backup.

7. Execute the replication task when the replication period arrives. //The replication is successful. The copy is imported to the specified Master Server on the destination end.

8. Compare the data consistency between primary A and secondary B, and observe the capacity change, link relationship, and bottom-layer resource usage. //The primary/secondary data and capacity are consistent, and the link relationship exists.

9. Check whether data is written to node C. //No data is written to node C. |

Conclusion |

|

Remarks |

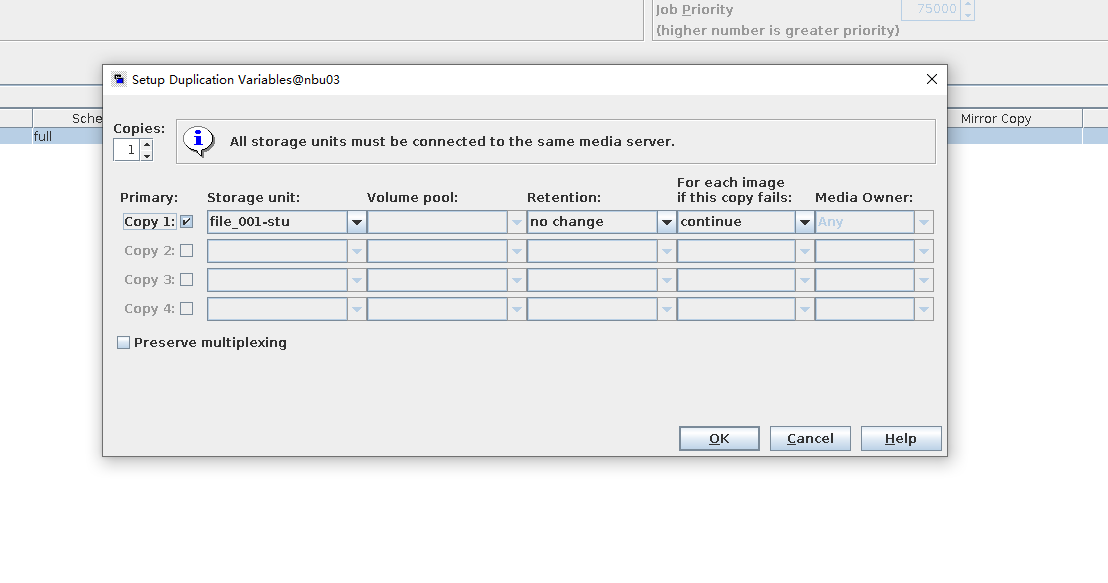

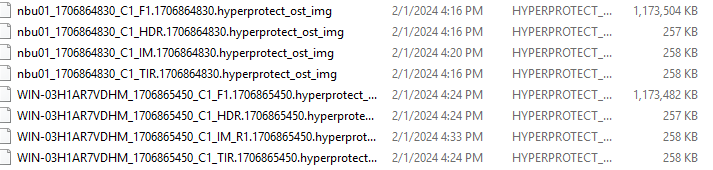

3.1.3 Duplicate from BasicDisk to OST

Objective | To verify that the backup system can duplicate from basic disk to OST |

Networking | Networking diagram for verifying functions |

Prerequisites |

|

Procedure |

|

Expected Result |

|

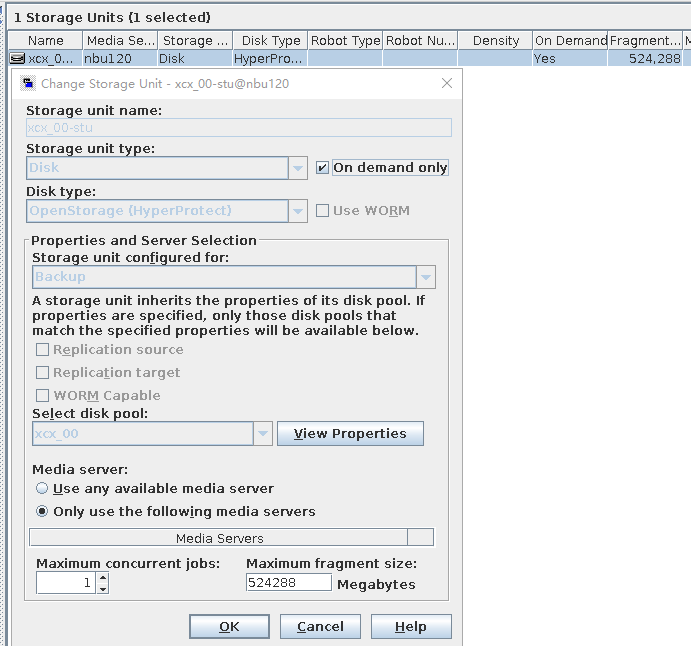

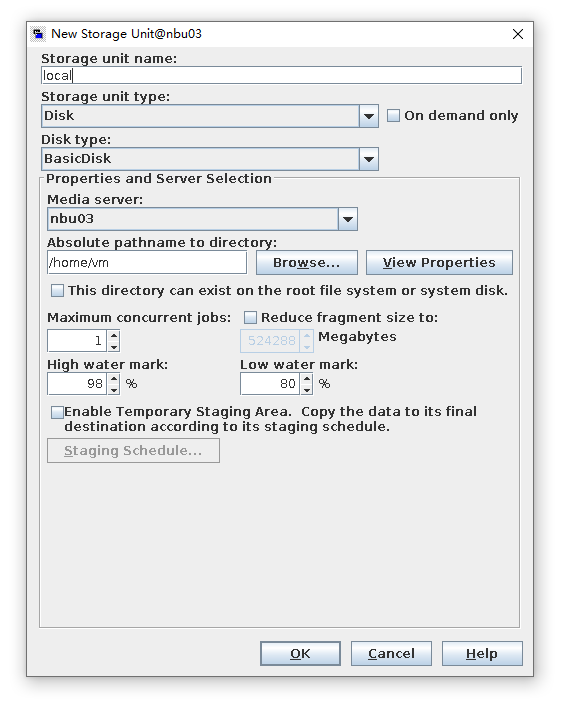

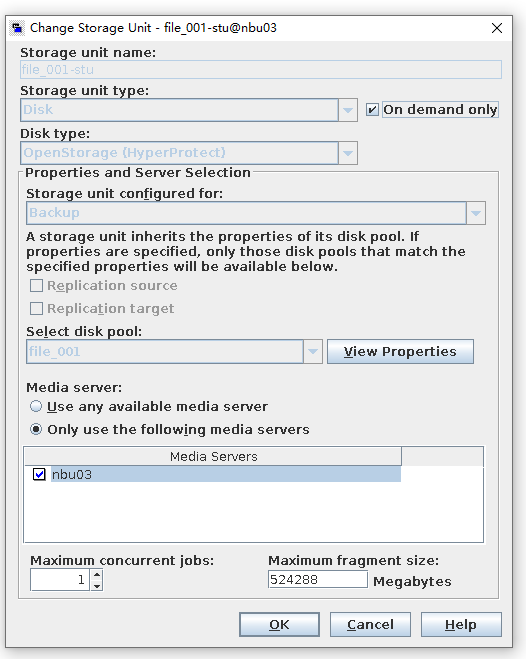

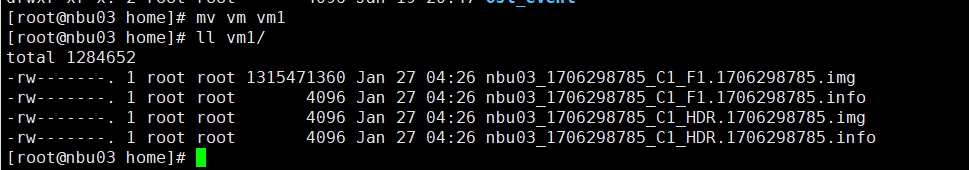

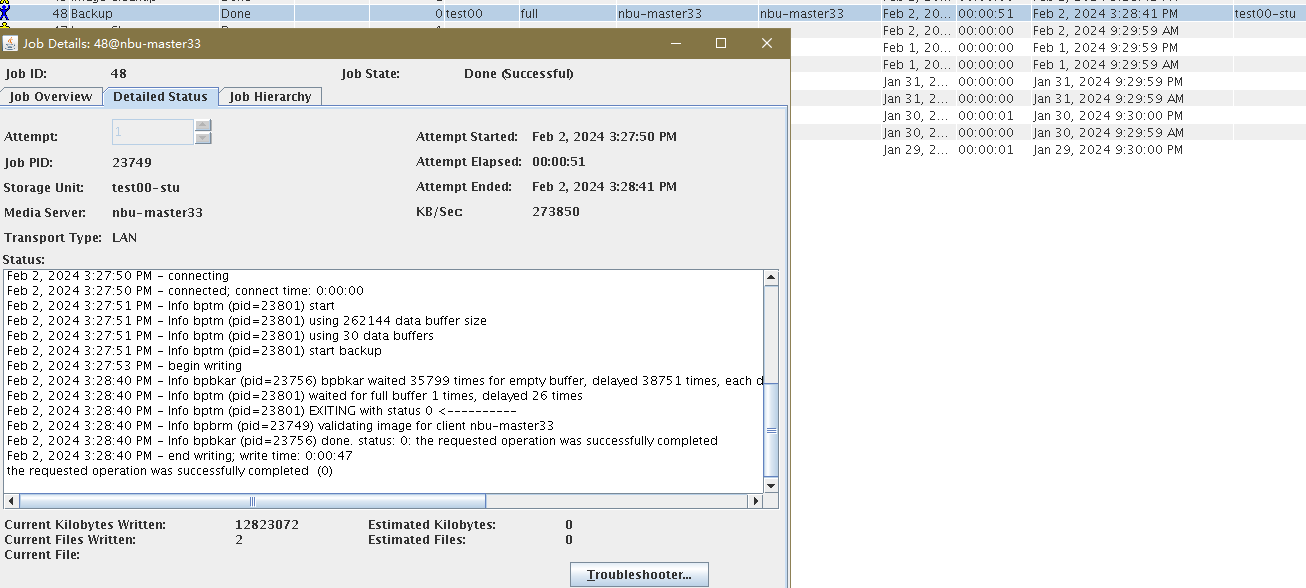

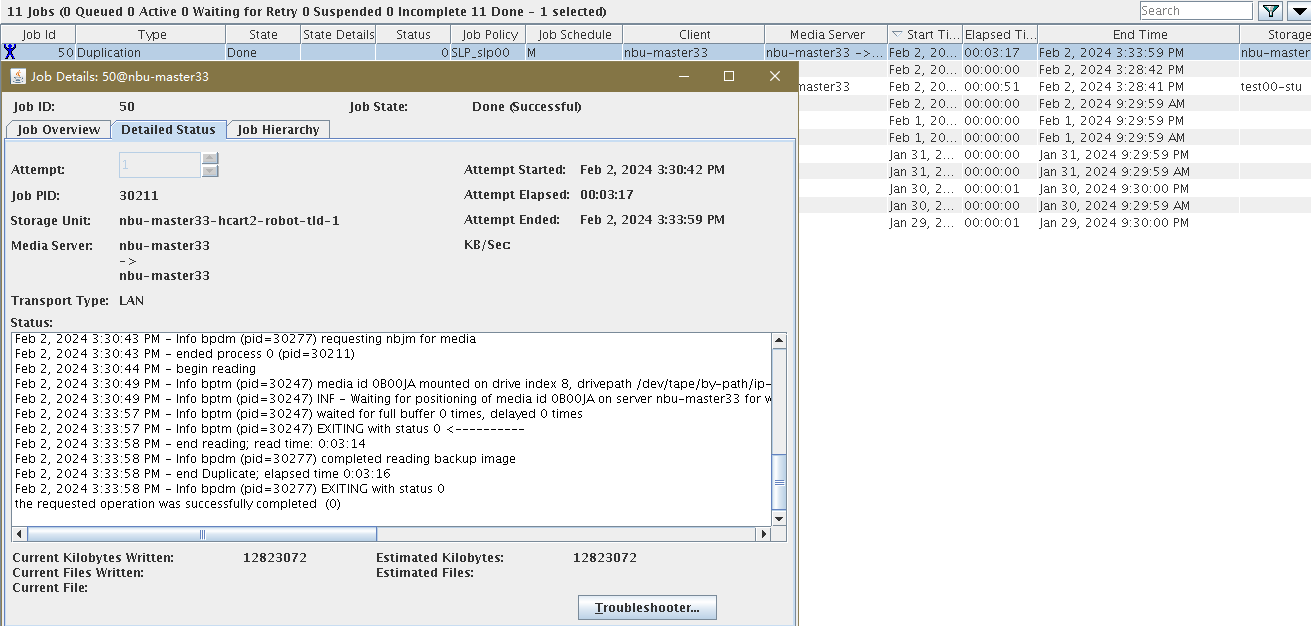

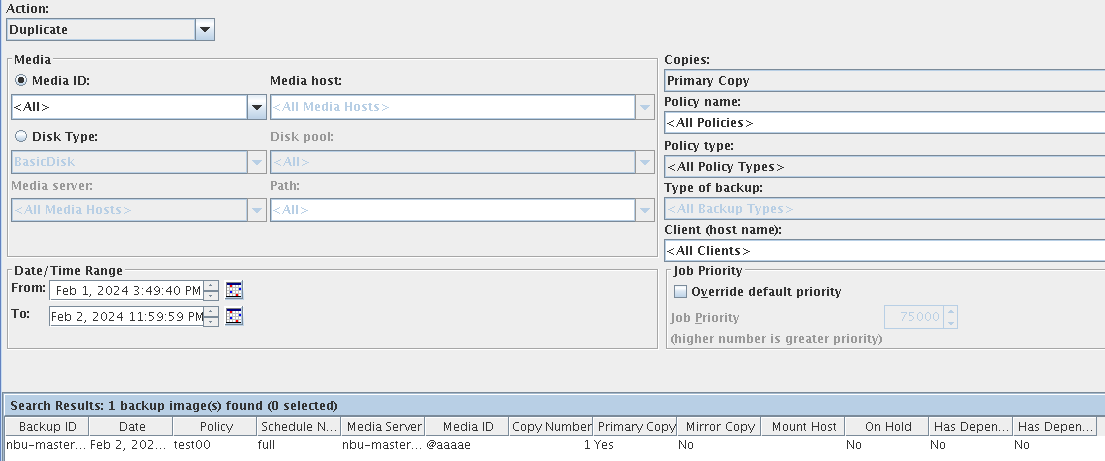

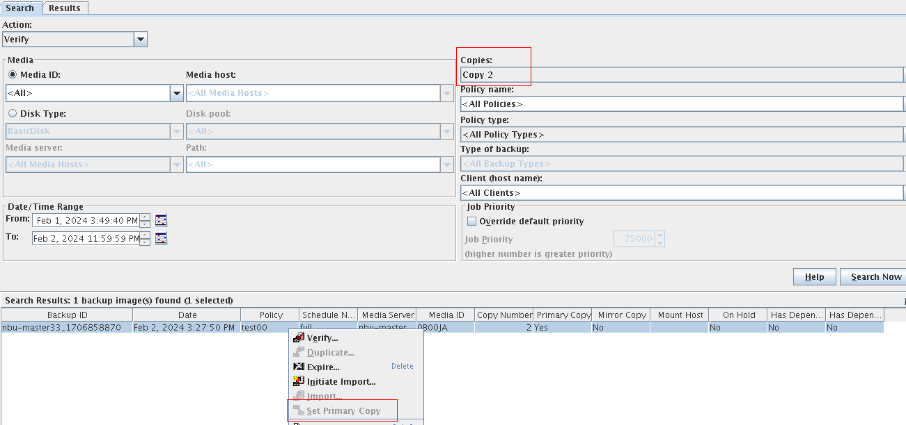

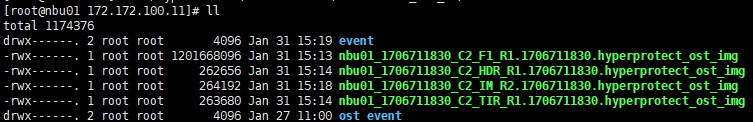

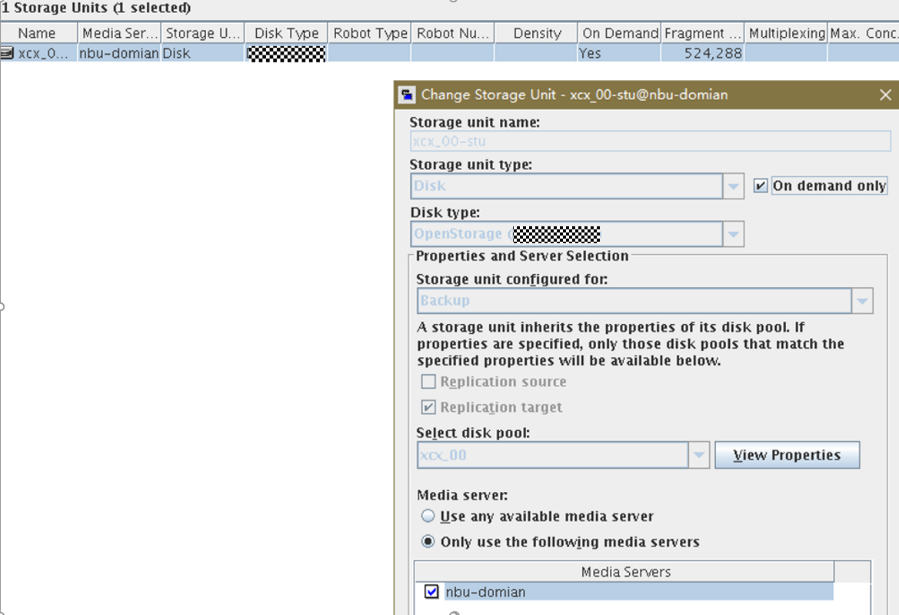

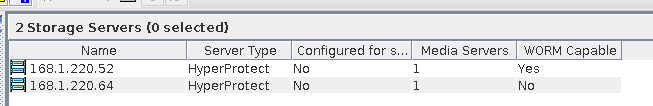

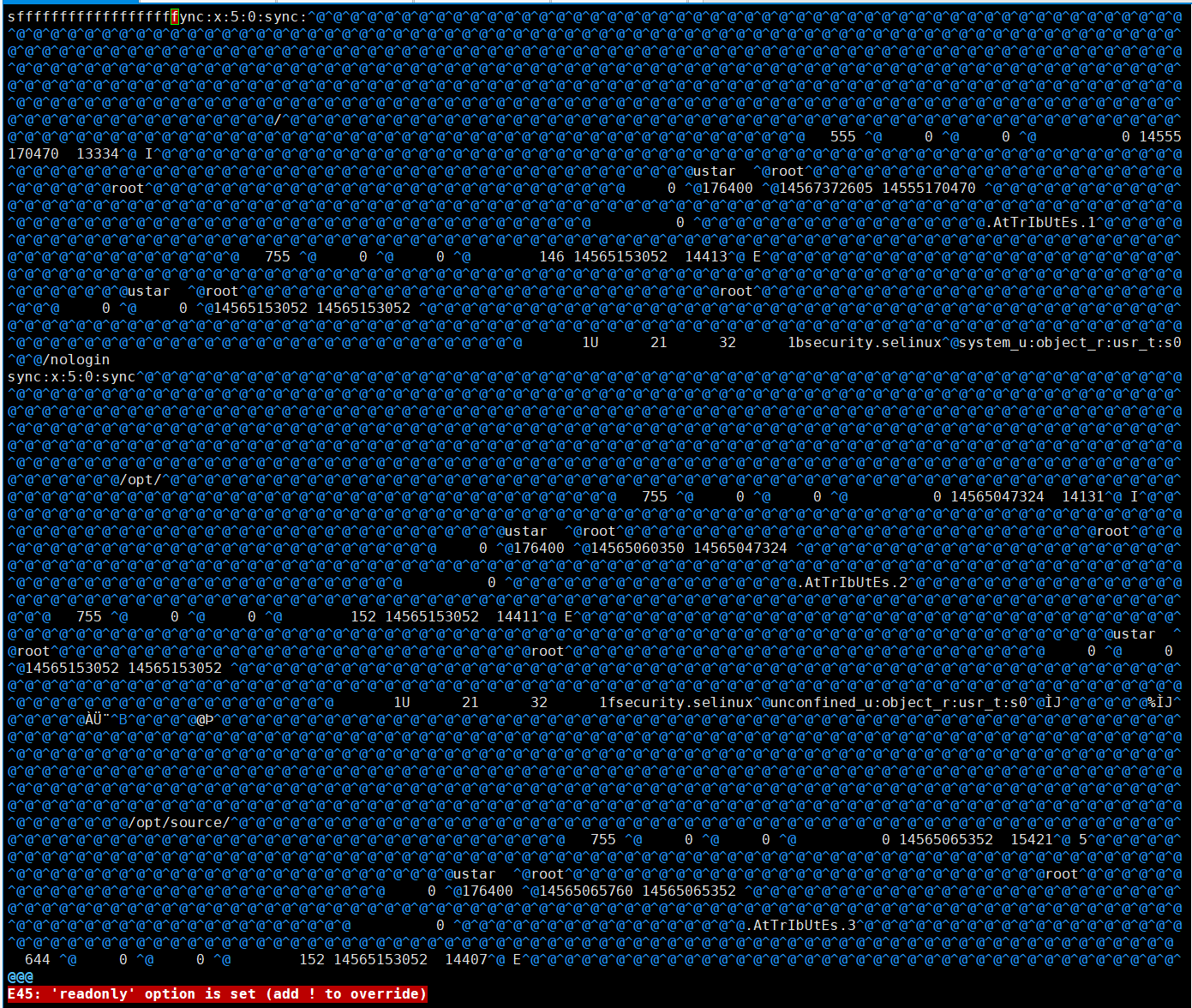

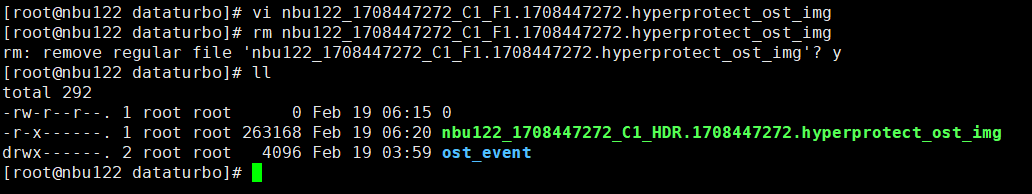

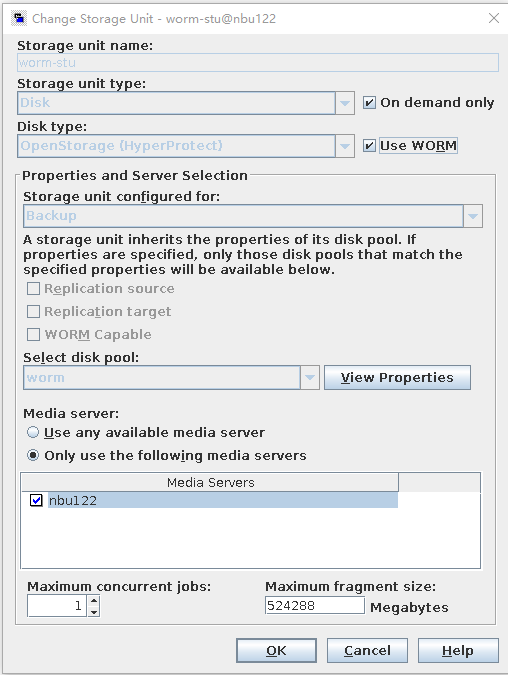

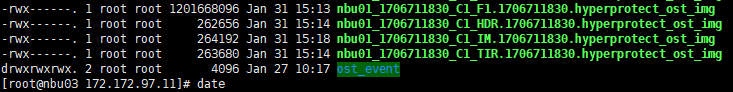

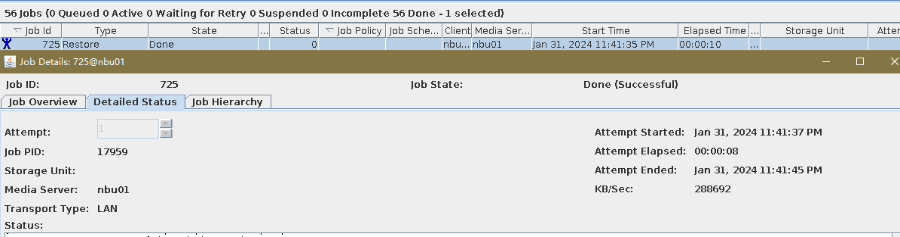

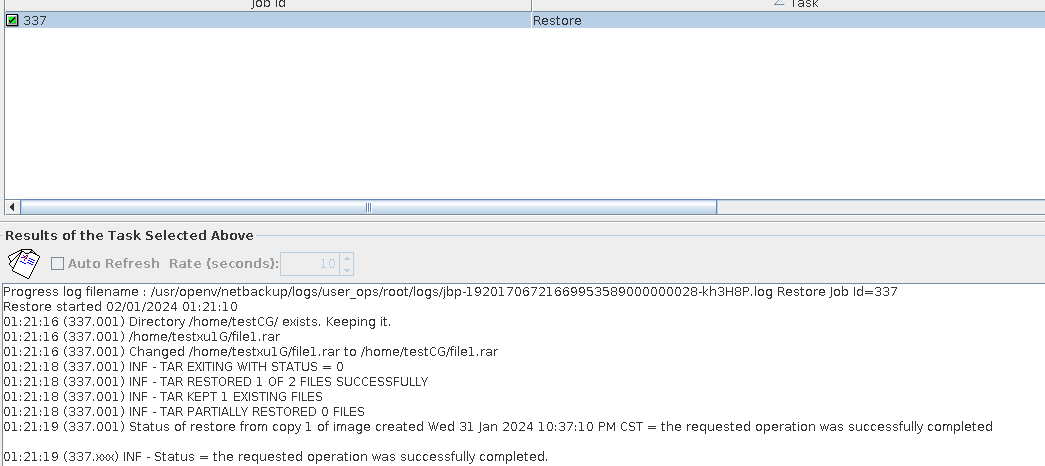

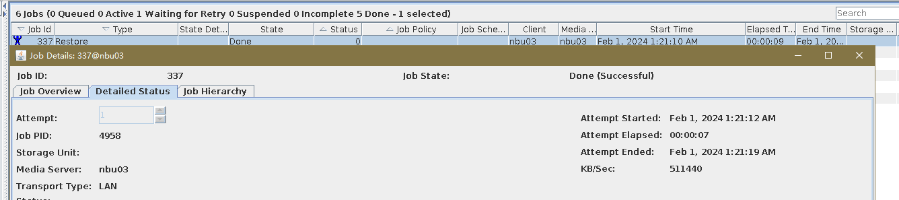

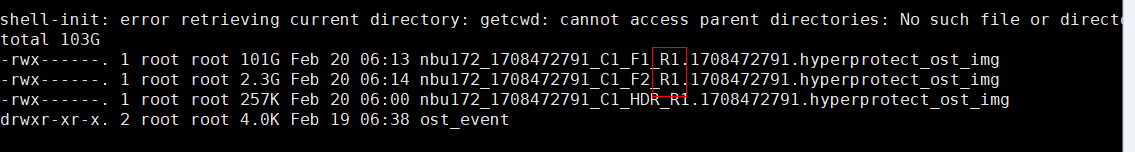

Test Result | 1. Create a storage unit of the BasicDisk type and complete a backup. (Software compression is enabled in the policy.)

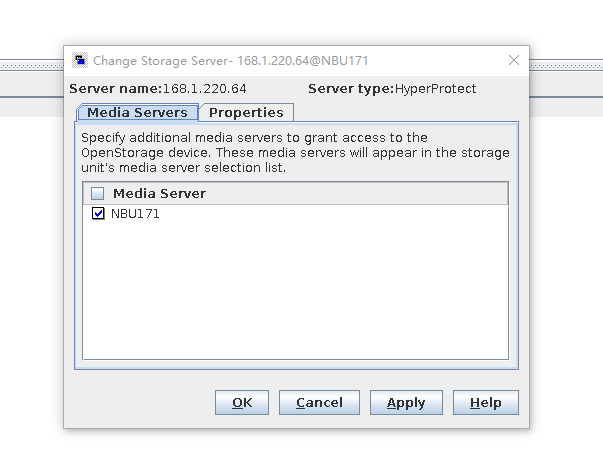

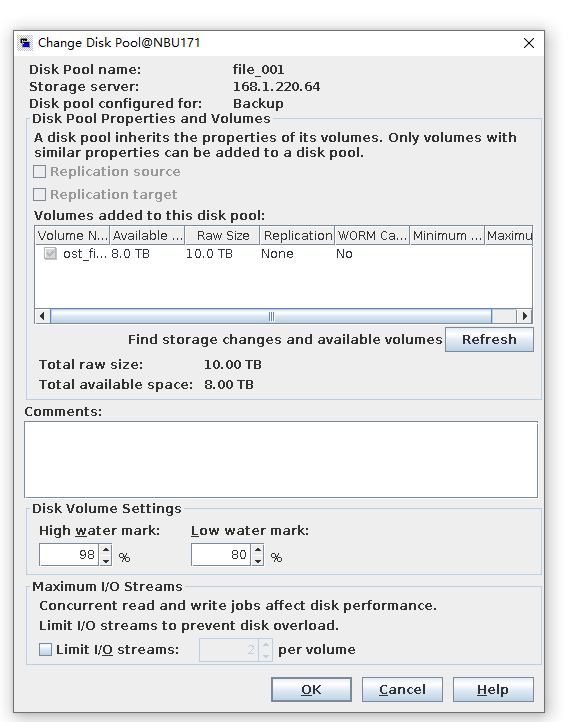

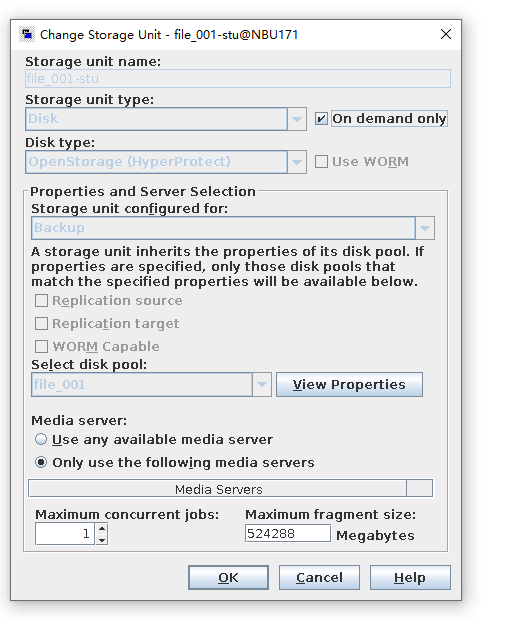

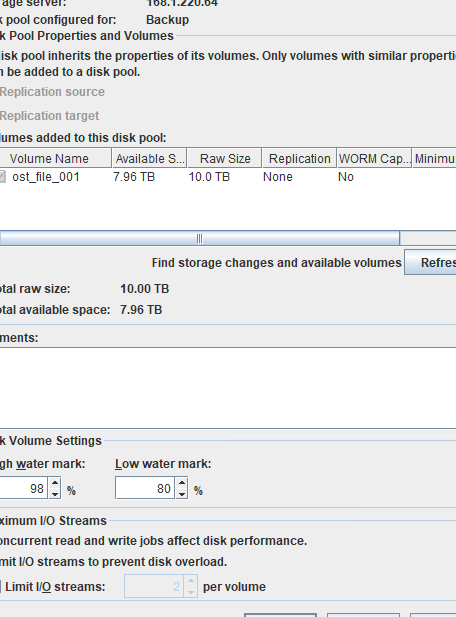

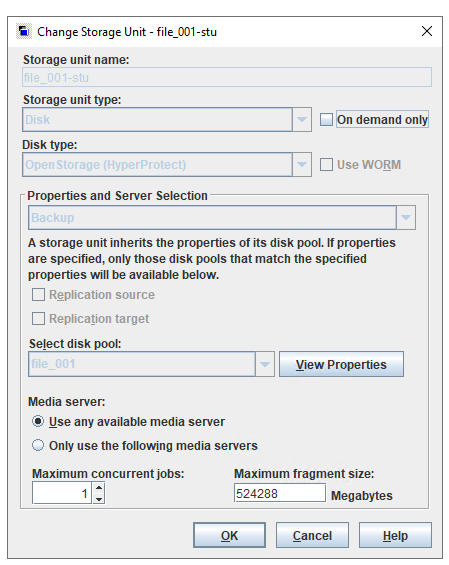

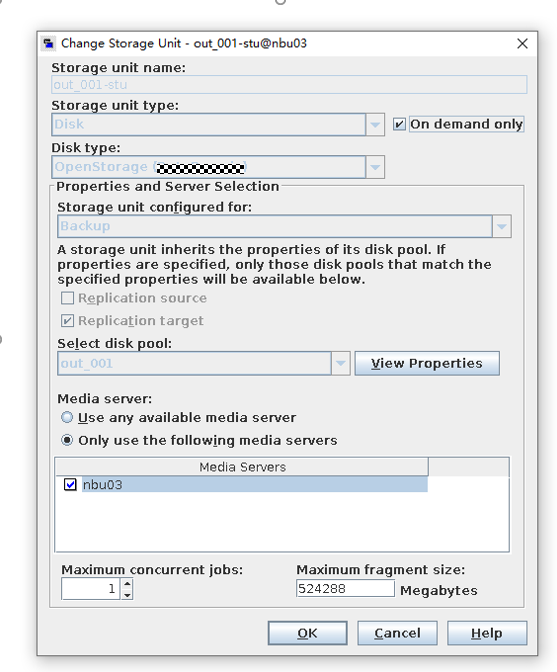

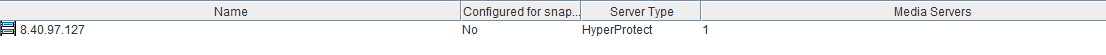

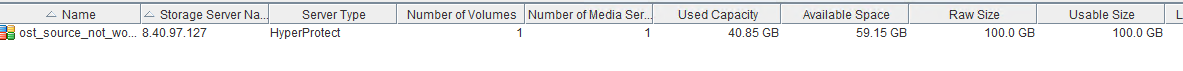

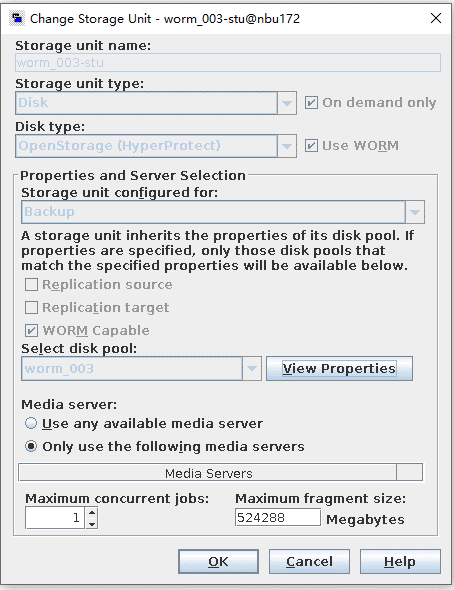

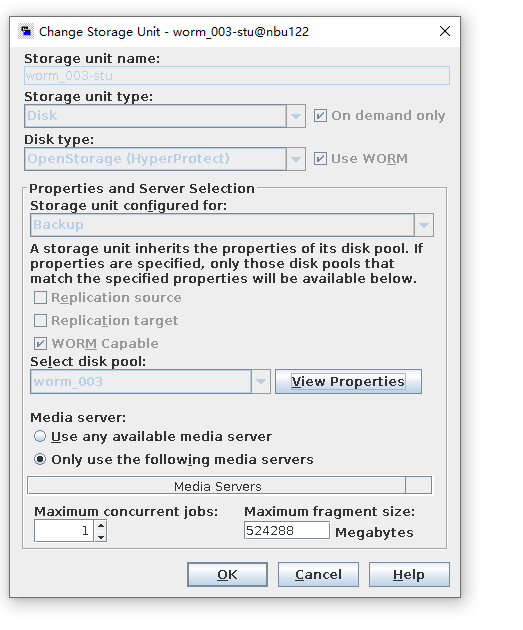

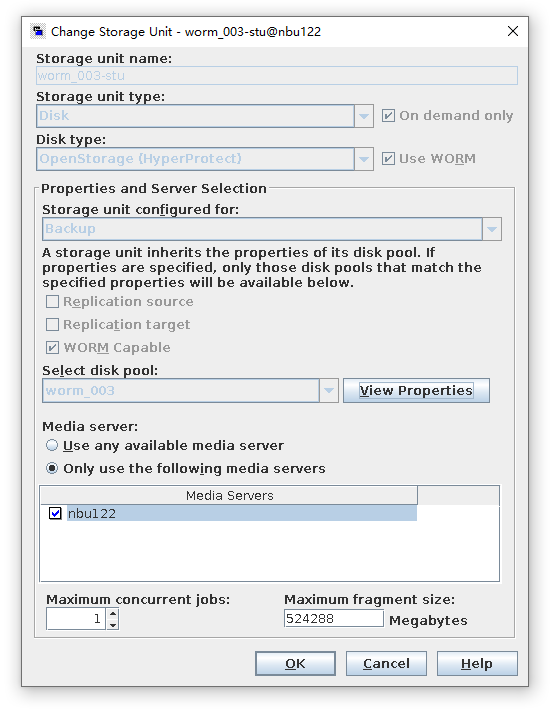

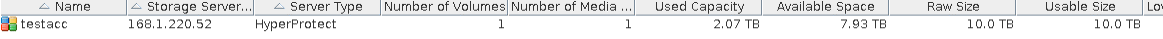

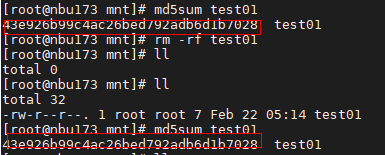

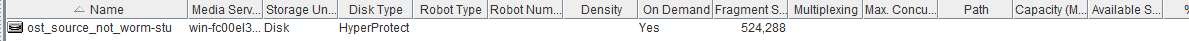

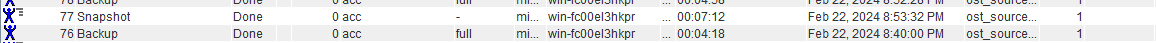

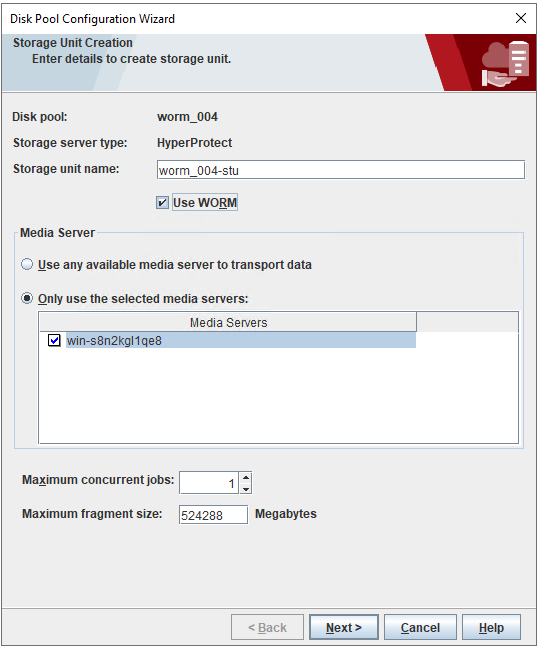

2. Create a storage server, disk pool, and storage unit, and set the type to HyperProtect.

3. Copy the backup copy in step 1 to the OST storage created in step 2.

4. Restore data in the OST storage and verify data consistency.

|

Conclusion |

|

Remarks |

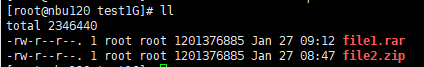

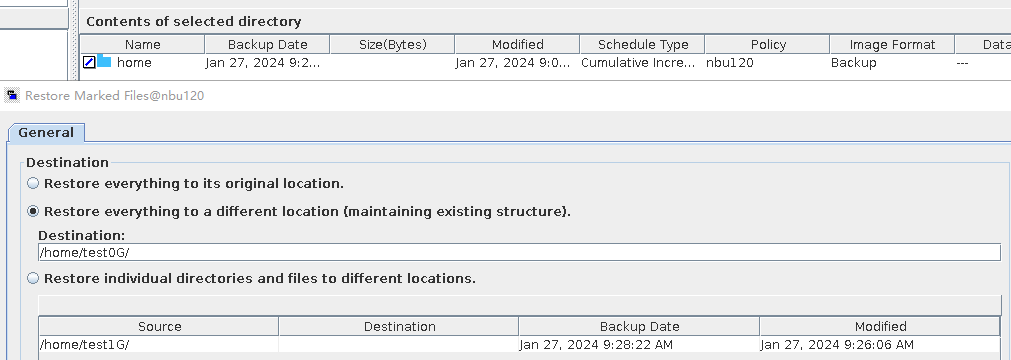

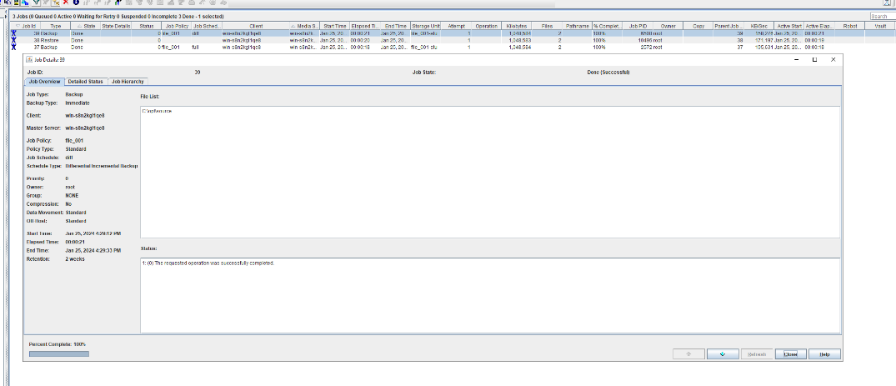

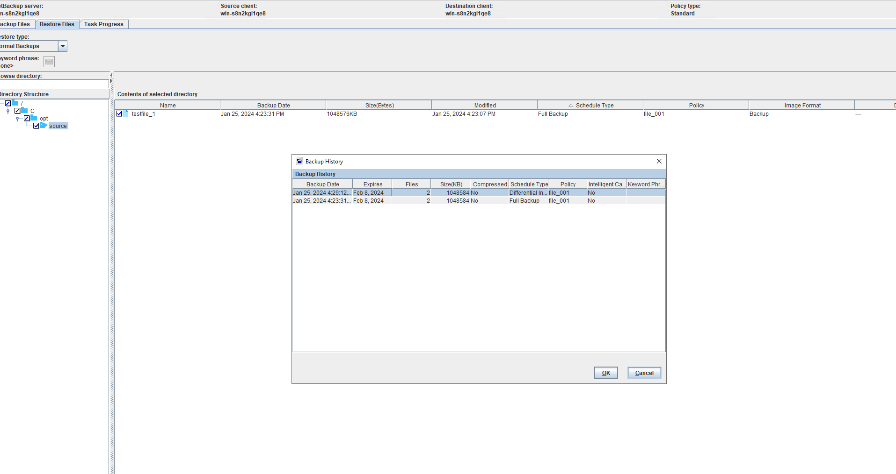

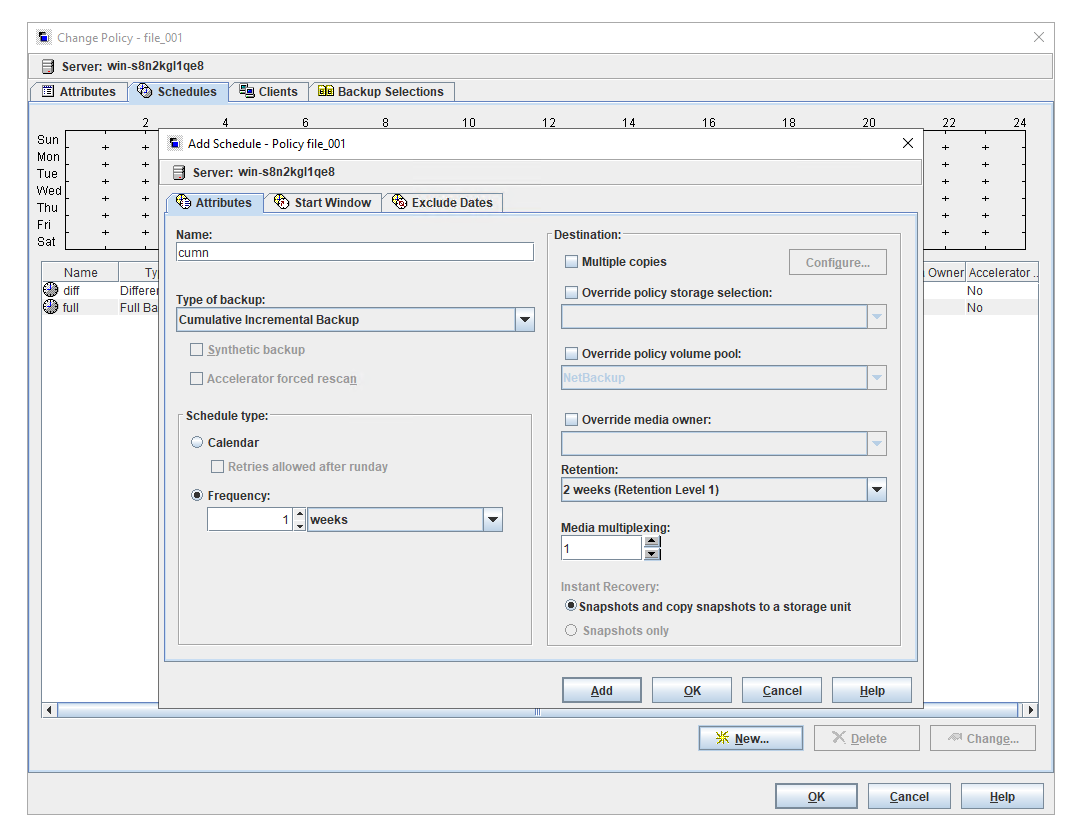

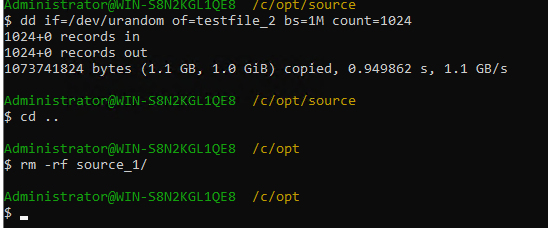

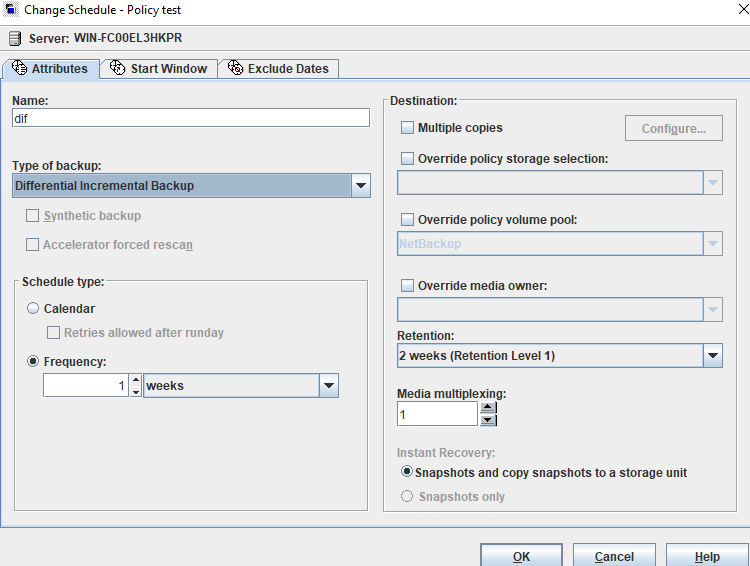

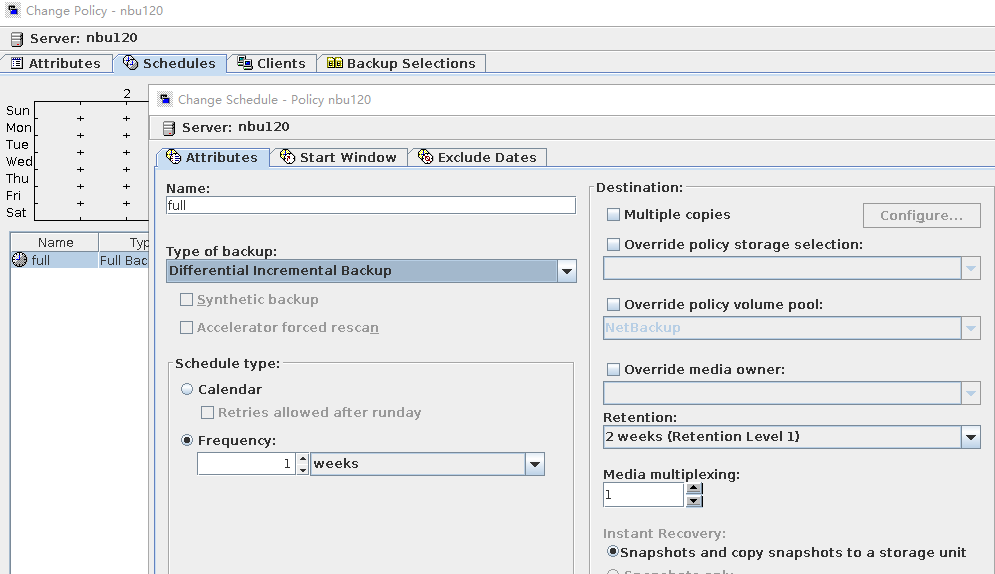

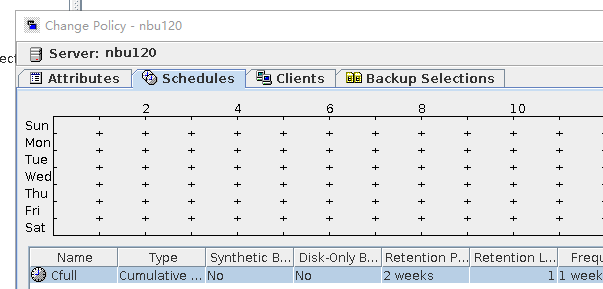

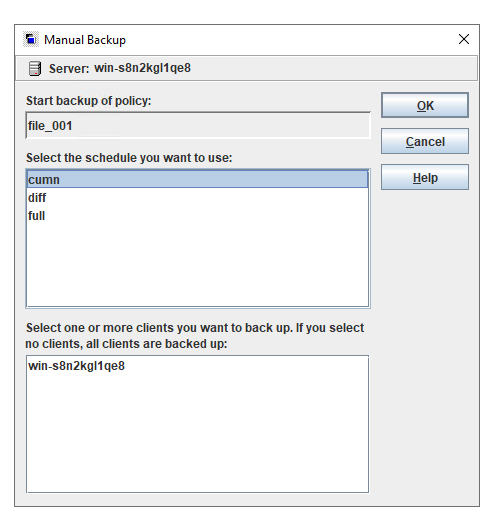

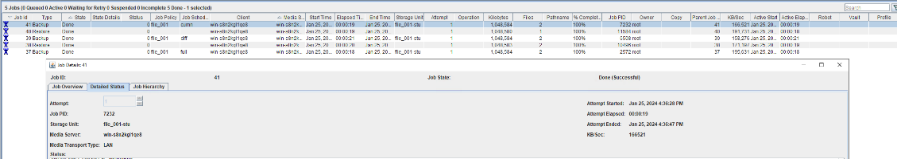

3.1.4 Cumulative Incremental Backup

Objective | To verify that the backup system can perform a full restore of a backup image and a cumulative incremental backup |

Networking | Networking diagram for verifying functions |

Prerequisites |

|

Procedure |

|

Expected Result |

|

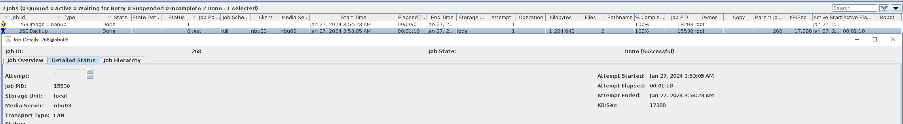

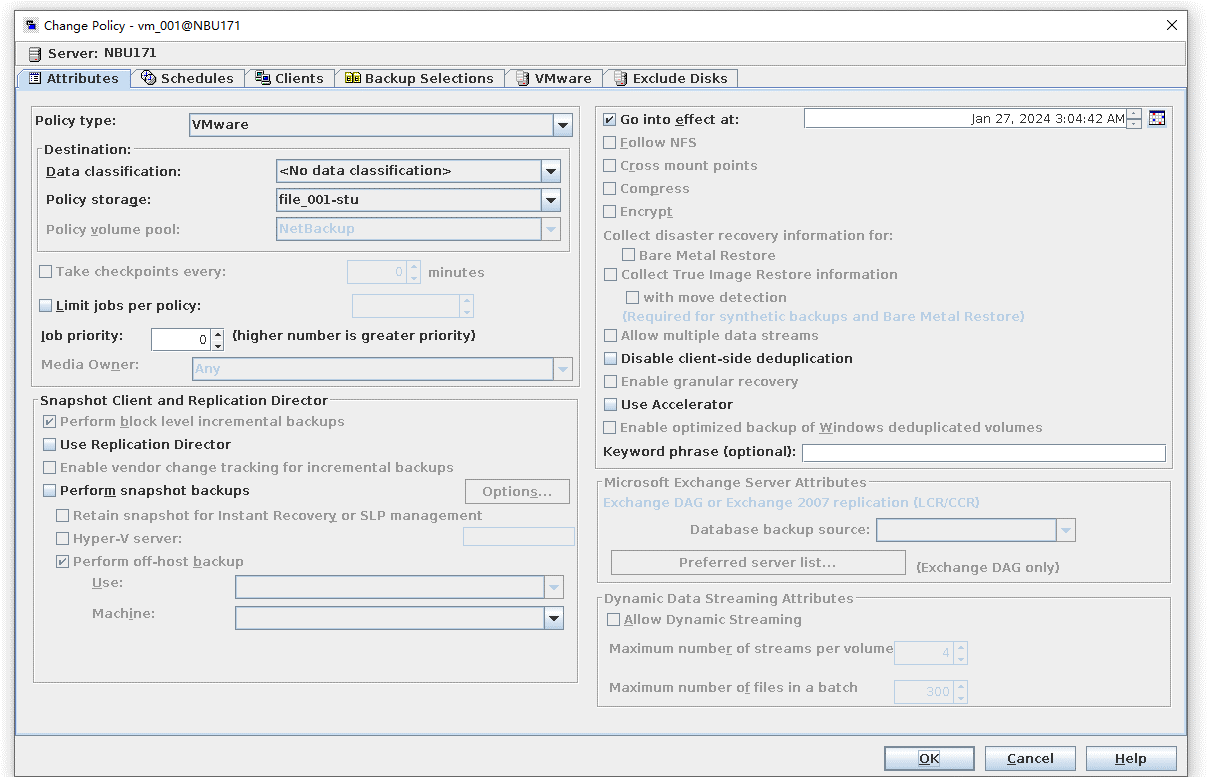

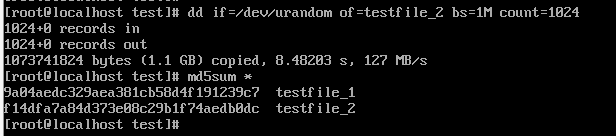

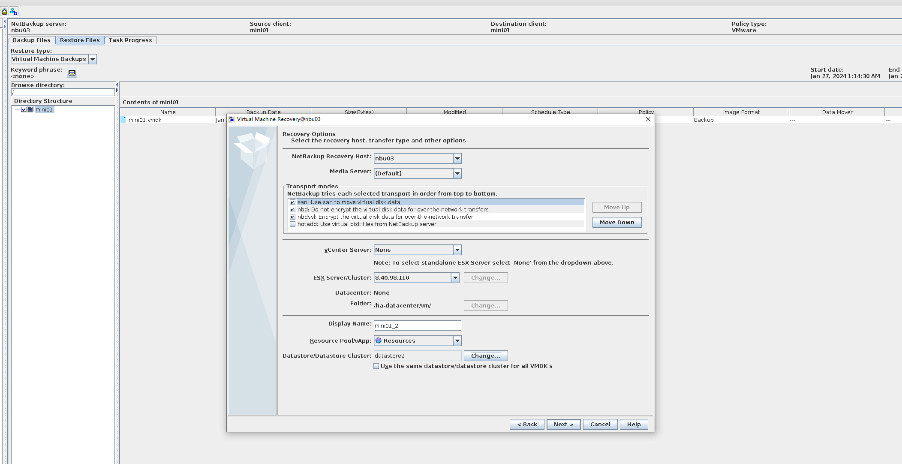

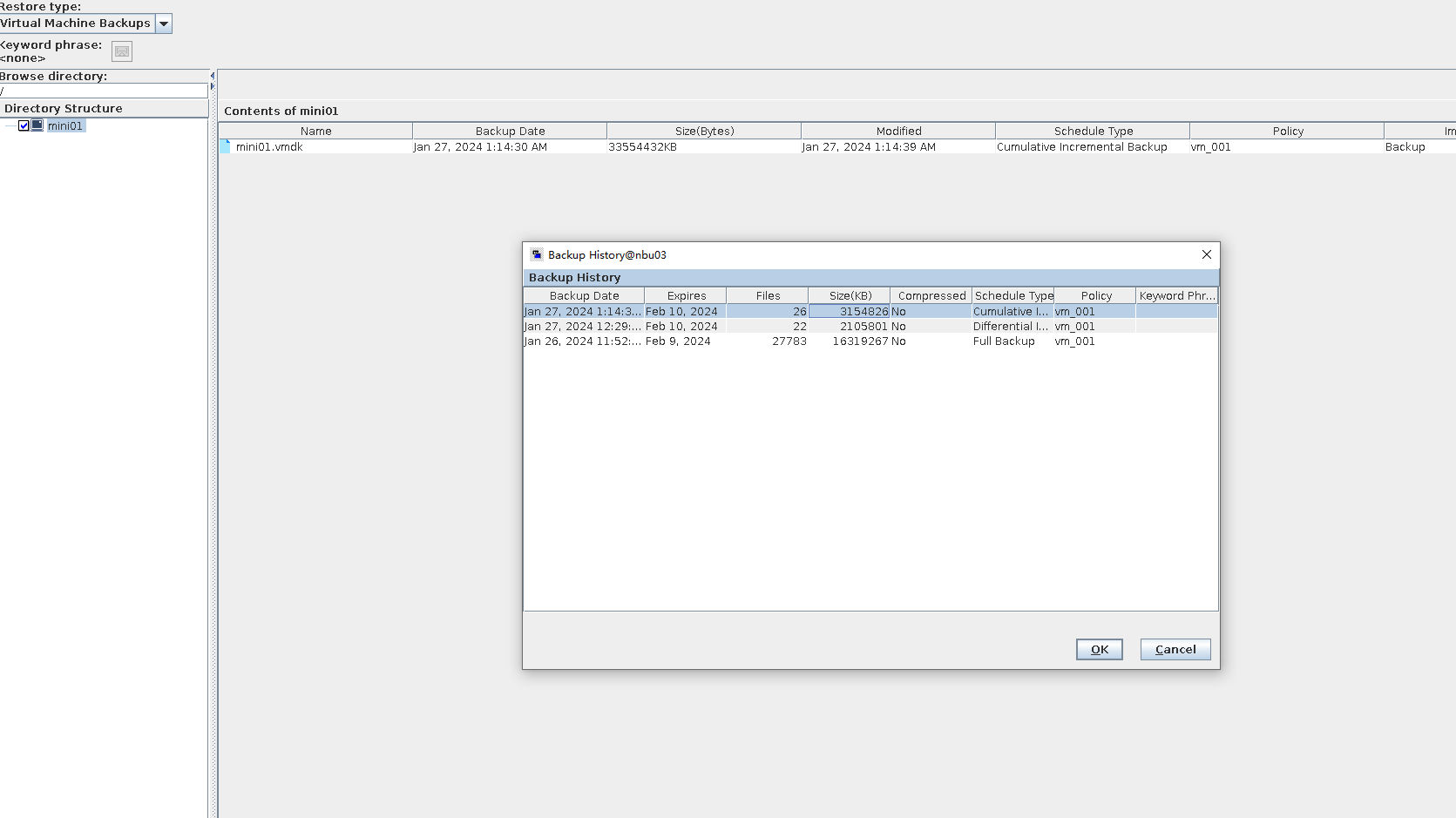

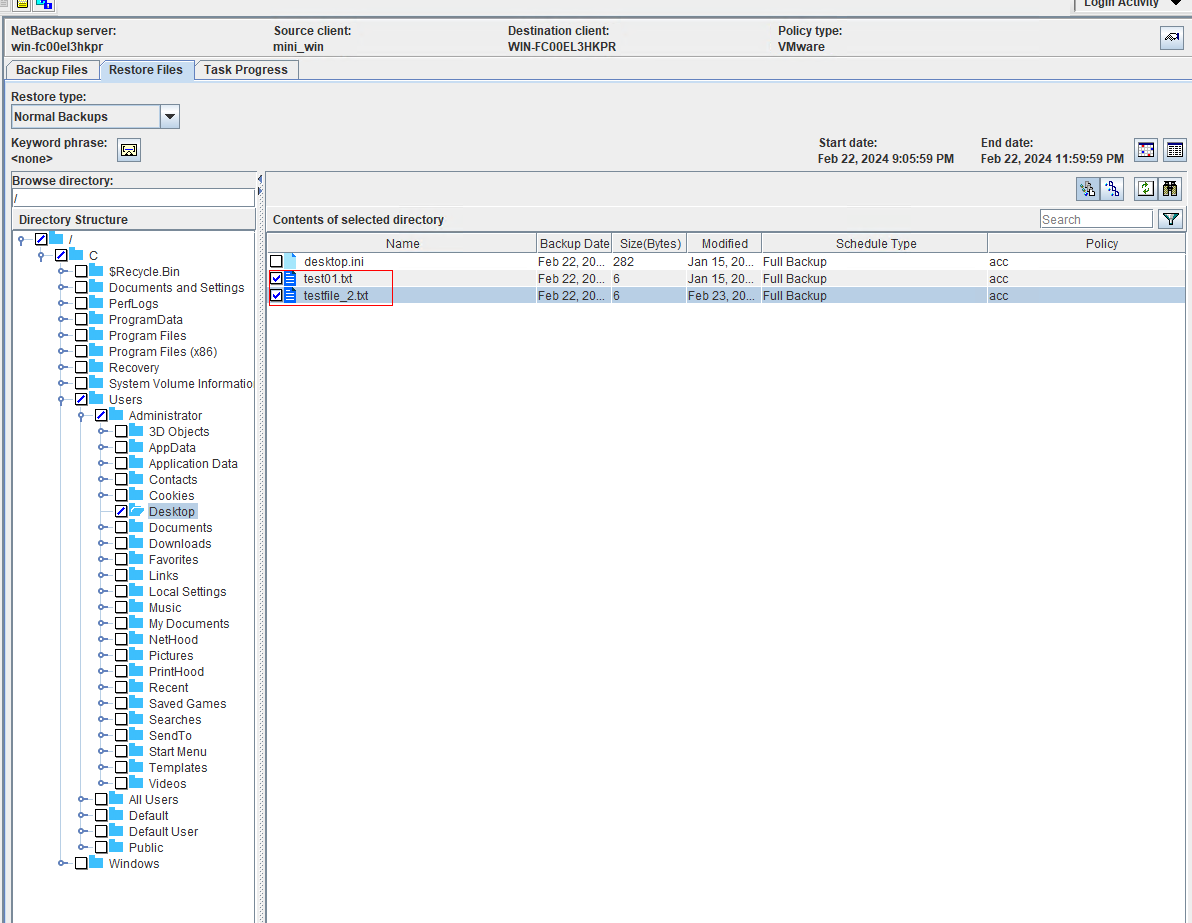

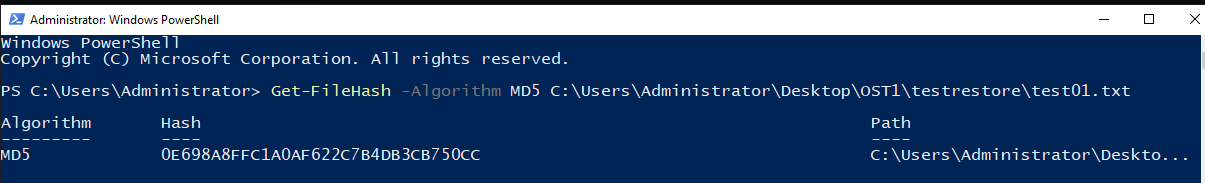

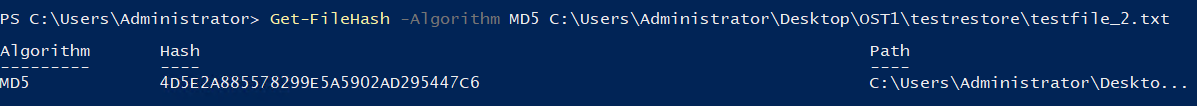

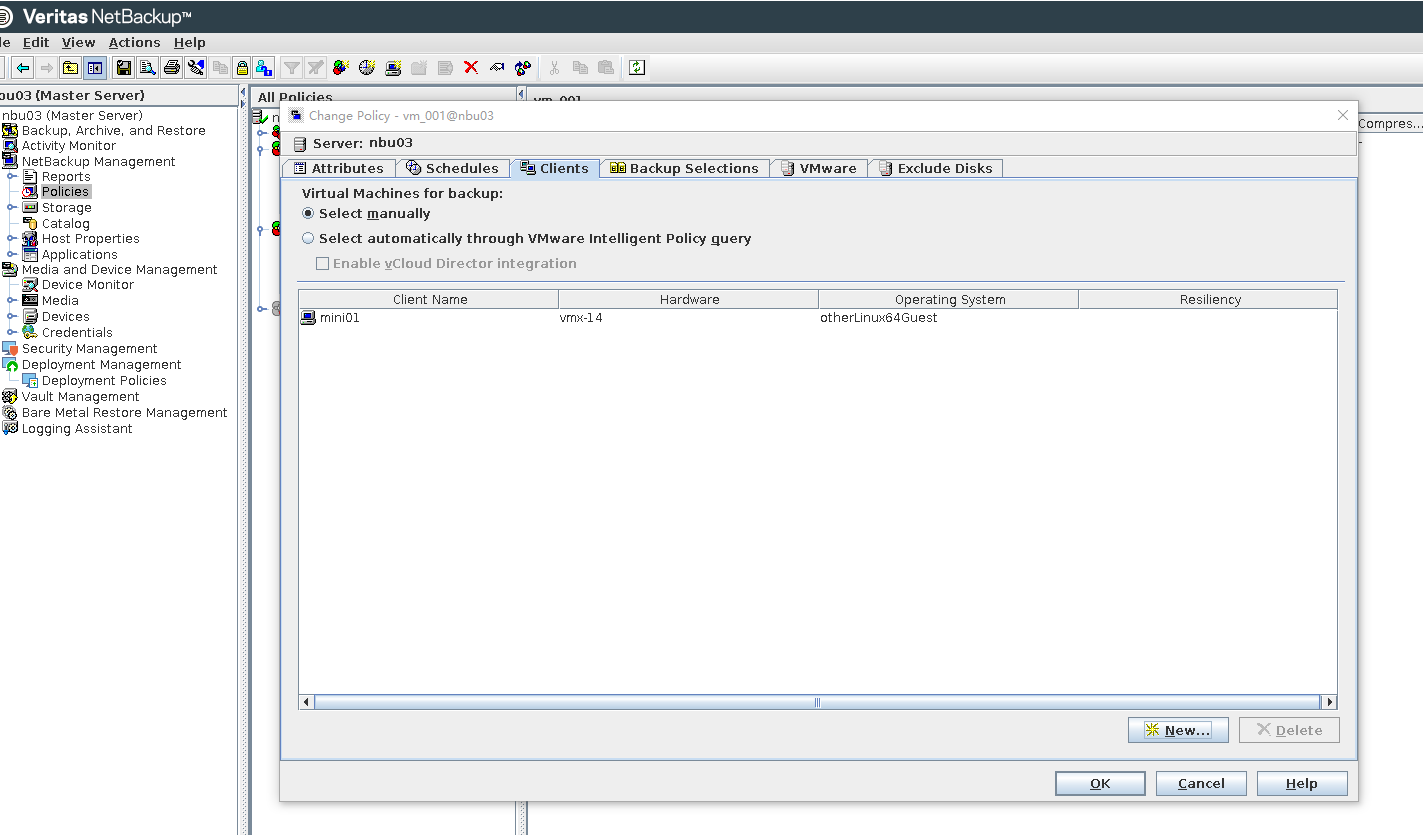

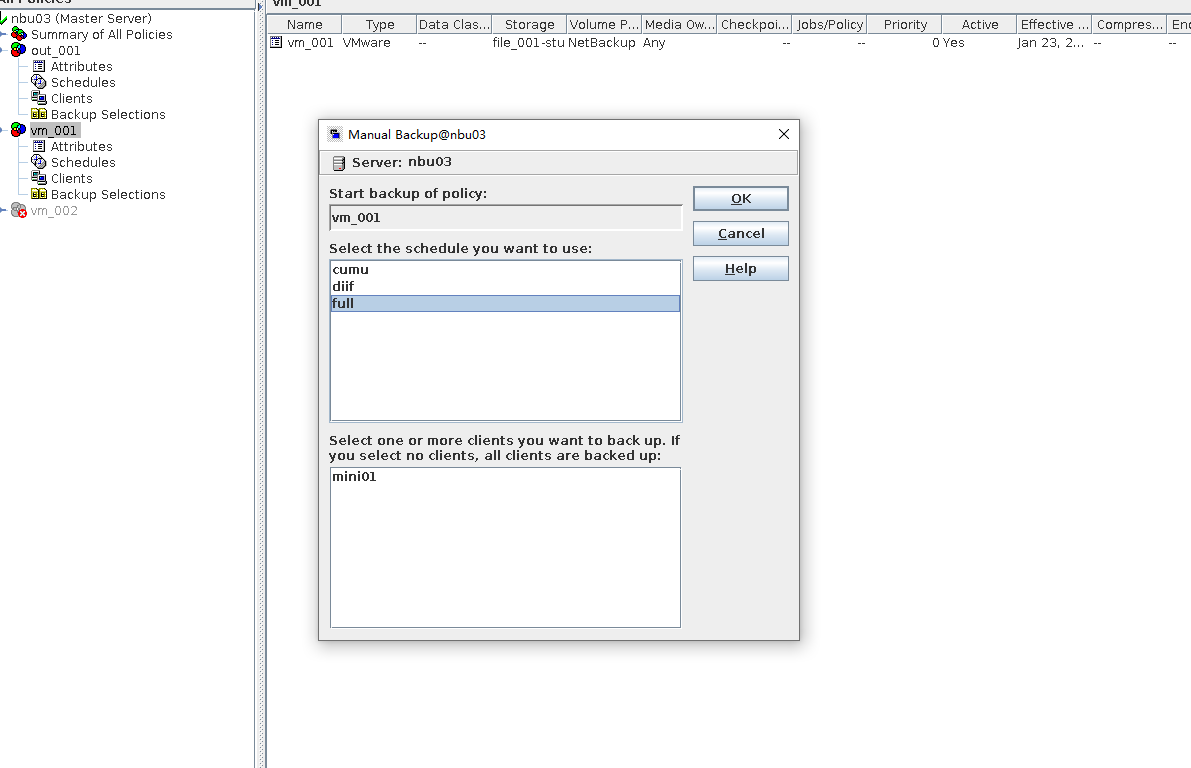

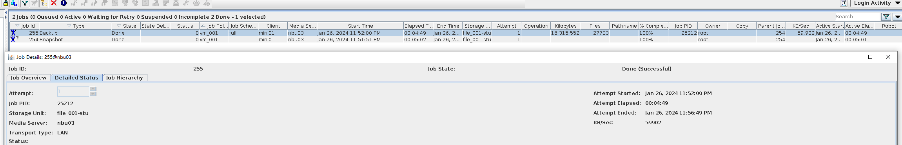

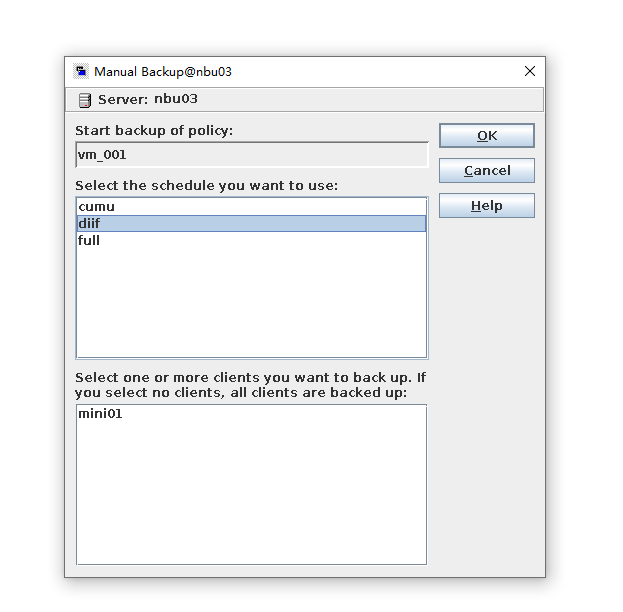

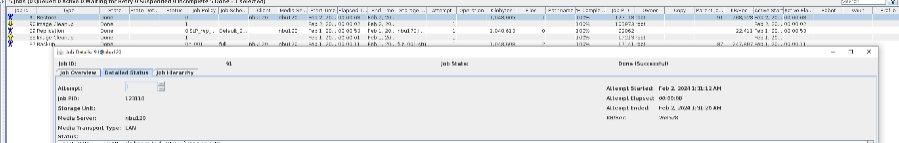

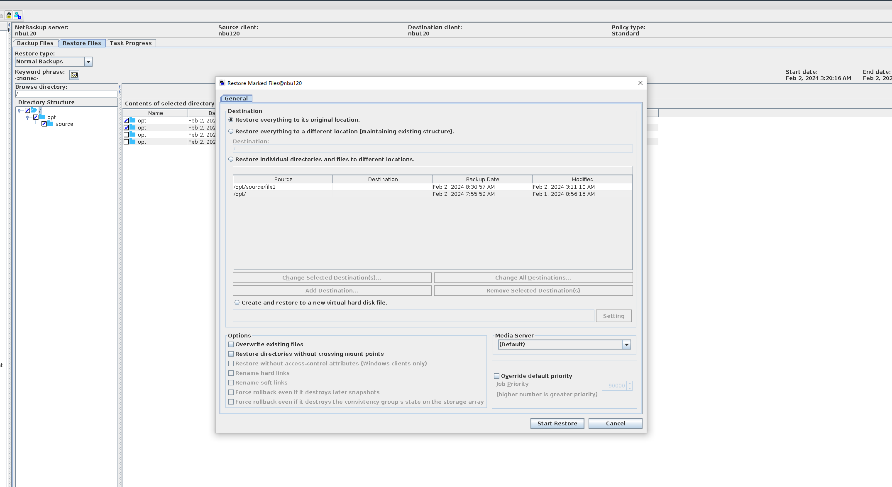

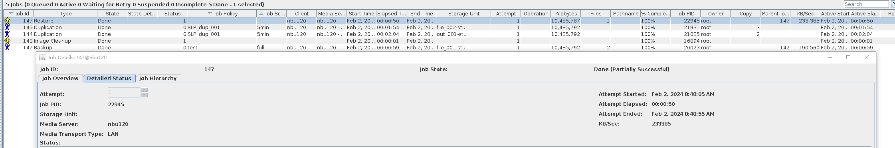

Test Result | 1. Create a storage server, disk pool, storage unit, and policy. The VM does not support compression.

2. Insert the 1 GB file testfile_1 into the VM and perform a full backup of the VM.

3. Restore data and verify data consistency in testfile_1.

4. Insert the 1 GB file testfile_2 into the VM and perform differential incremental backup for the VM.

5. Restore the data and verify the data consistency of testfile_2.

6. Modify the 1 GB file testfile_2 on the VM and perform permanent incremental backup.

7. Restore the data and verify the data consistency of testfile_2.

|

Conclusion |

|

Remarks |

3.1.5 Inline Tape Copy Backup and Restore

Objective | To verify that the backup system can perform the inline tape copy backup and restore |

Networking | Networking diagram for verifying functions |

Prerequisites |

|

Procedure |

|

Expected Result |

|

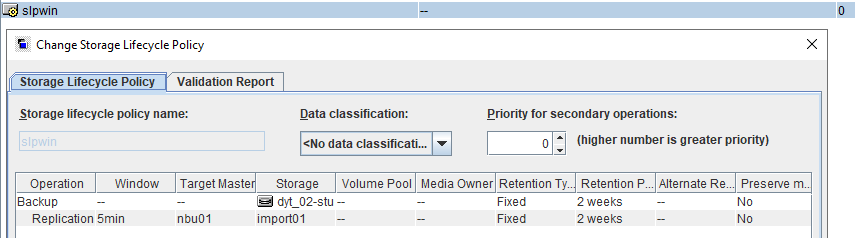

Test Result | 1. Log in to the Master Server as the system administrator.

2. Configure the Duplication SLP policy on the source master server and select Common Duplication. //The configuration is successful.

3. On the source end, configure a backup policy based on the Duplication SLP policy.

4. Set a scheduled backup task and perform the backup.

5. When the replication period arrives, the replication task is executed. //The copy is successfully duplicated and imported to the tape library.

6. Restore from the image copy in the tape library and verify data consistency. //The restoration is successful and the data is consistent. |

Conclusion |

|

Remarks |

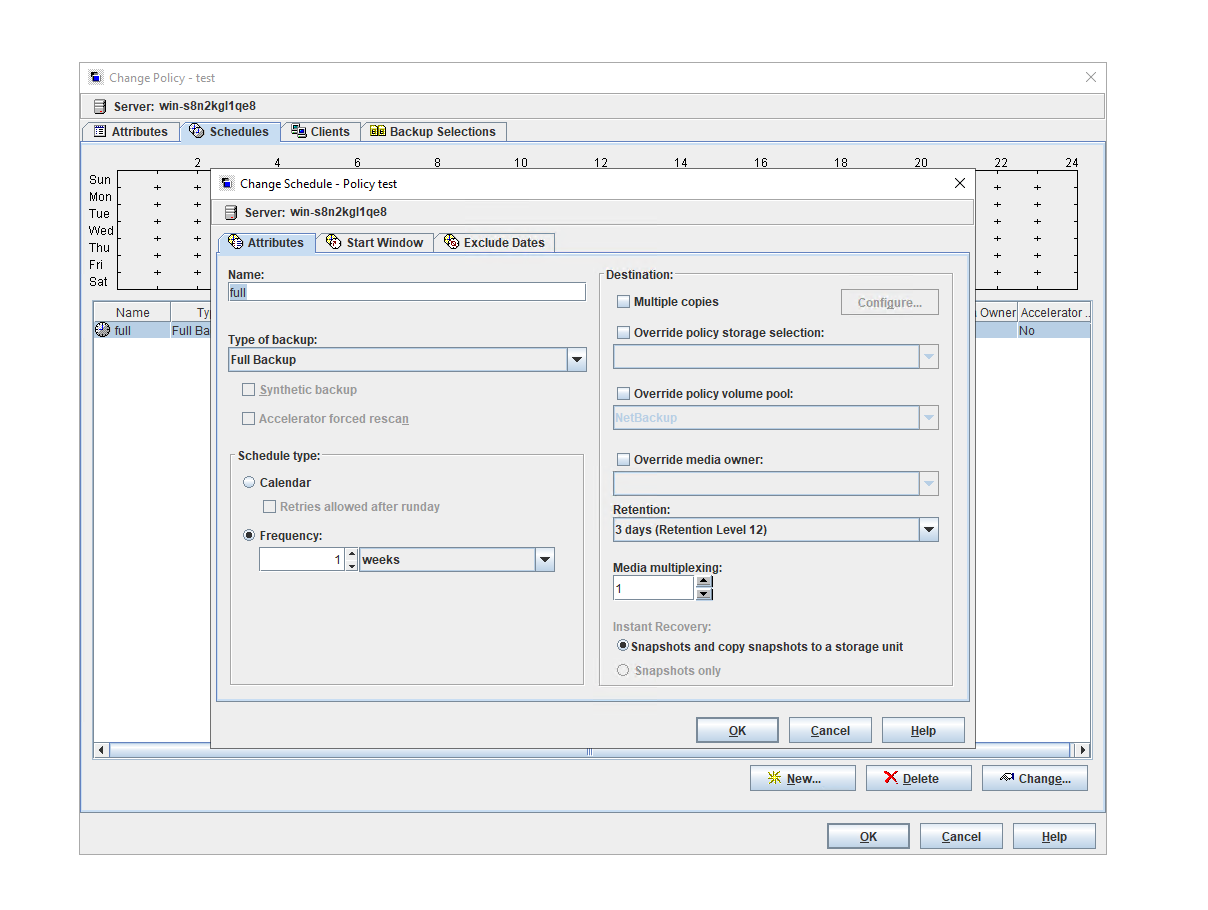

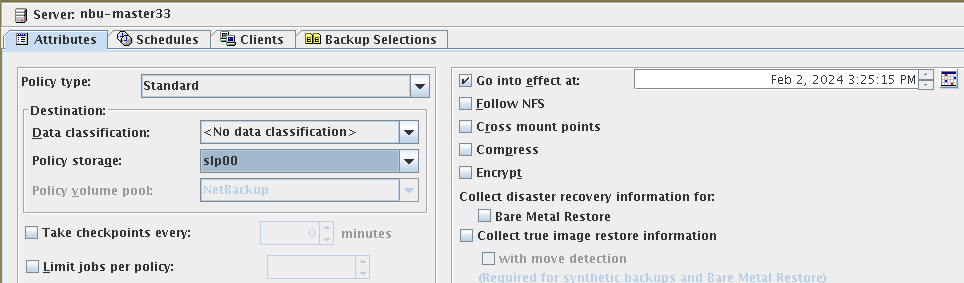

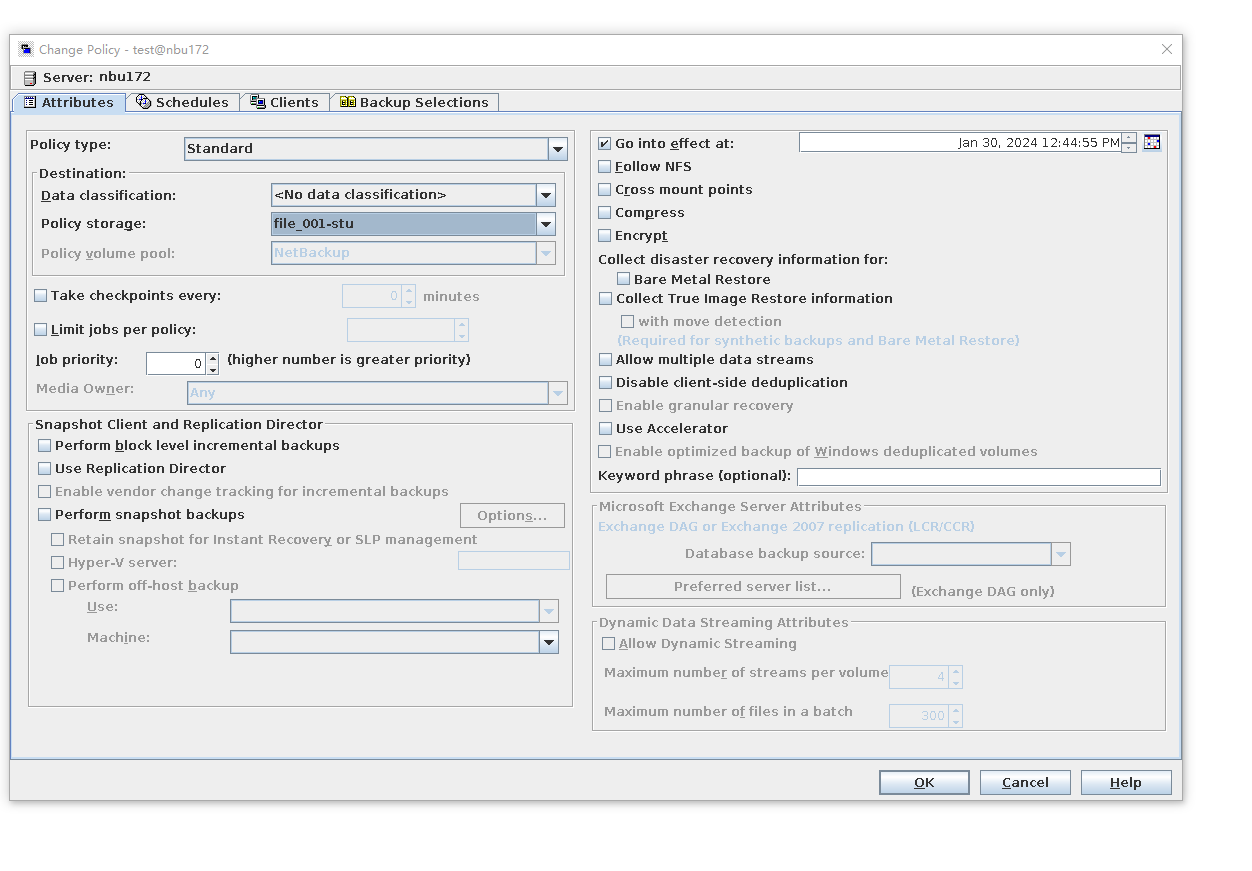

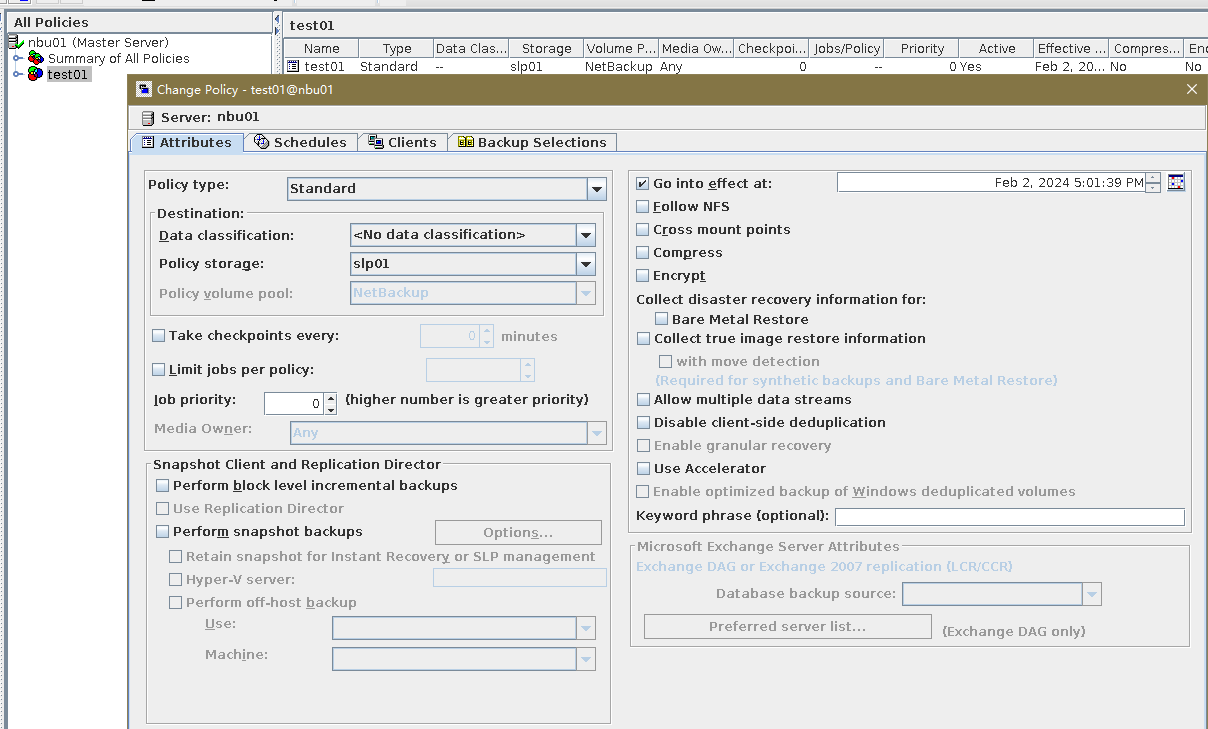

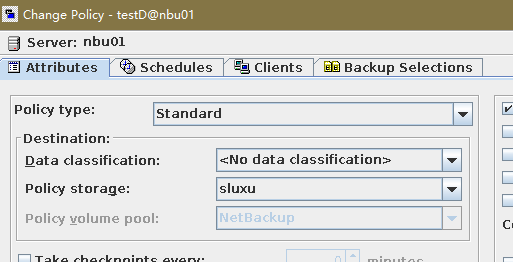

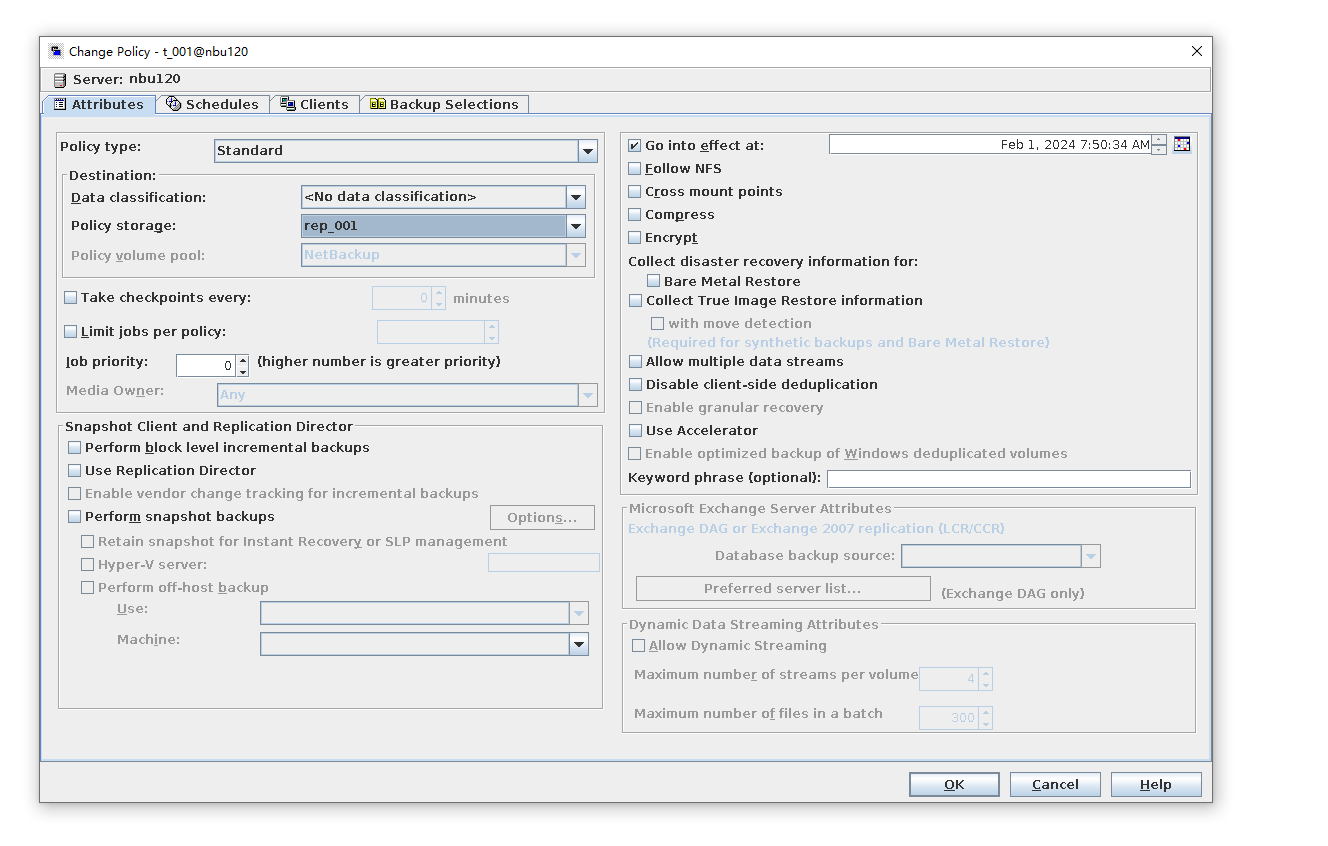

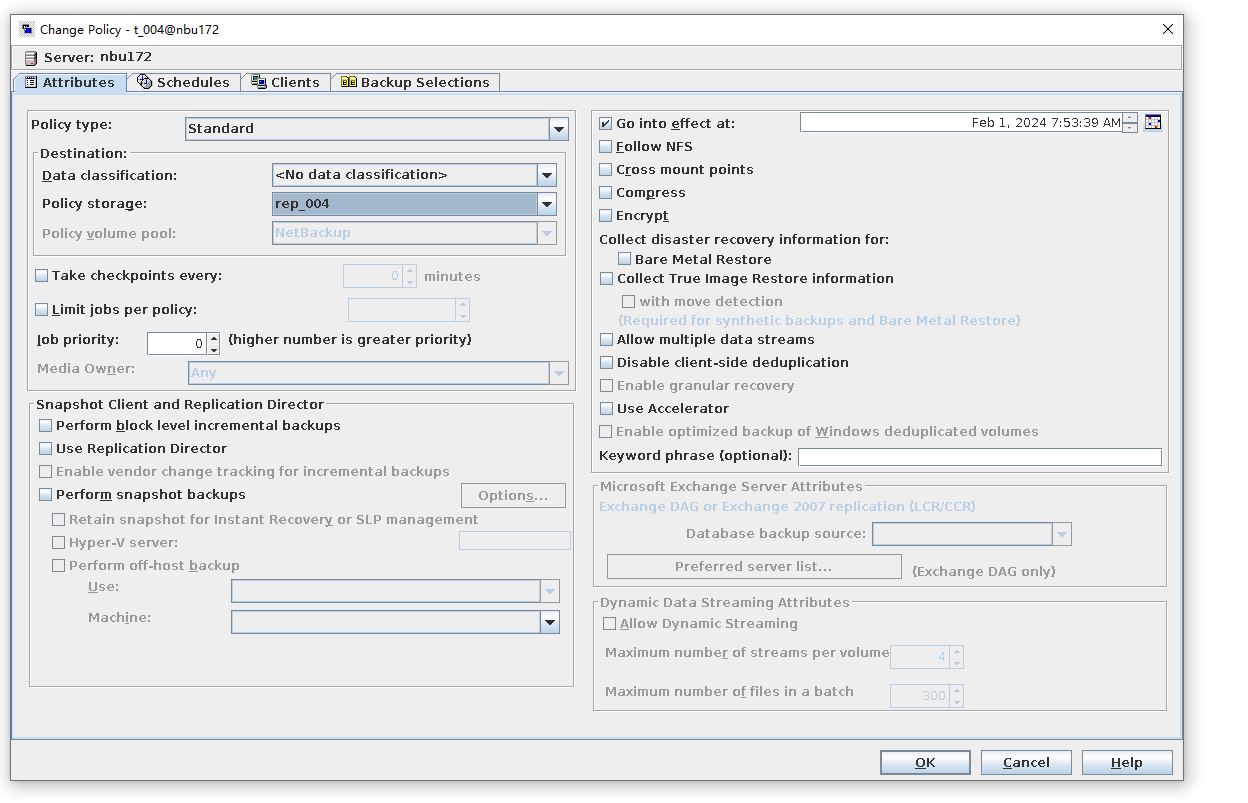

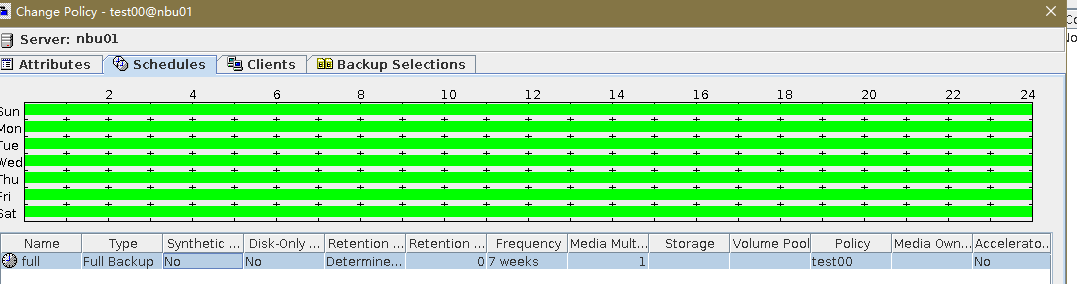

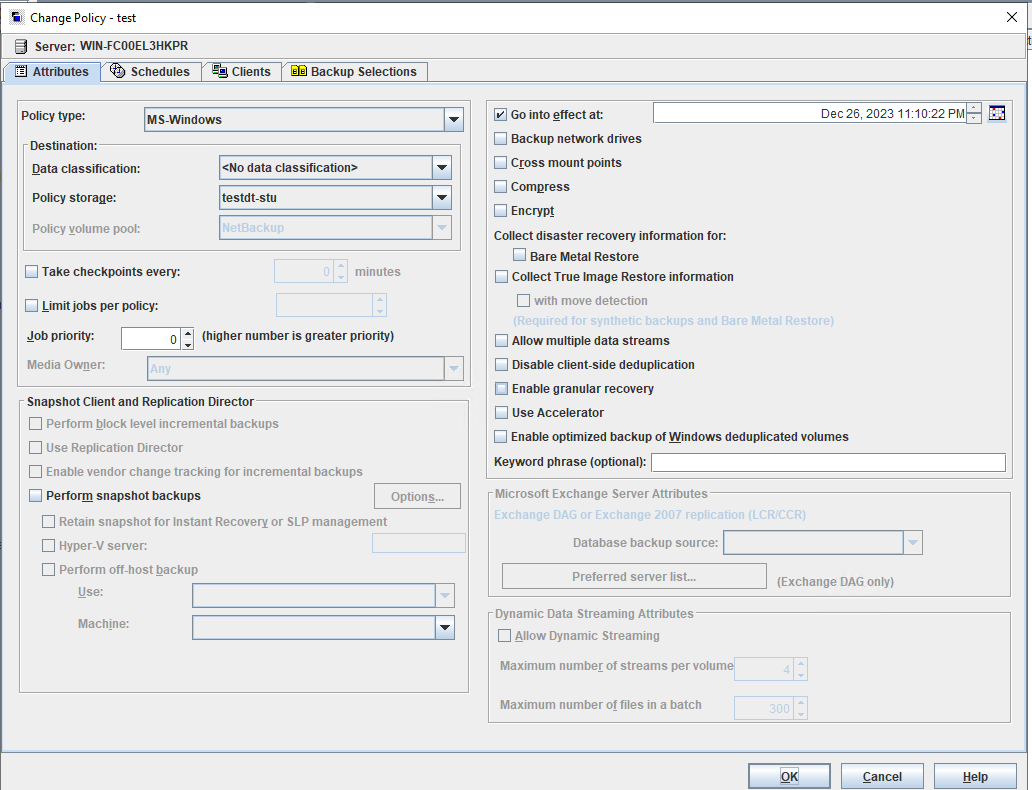

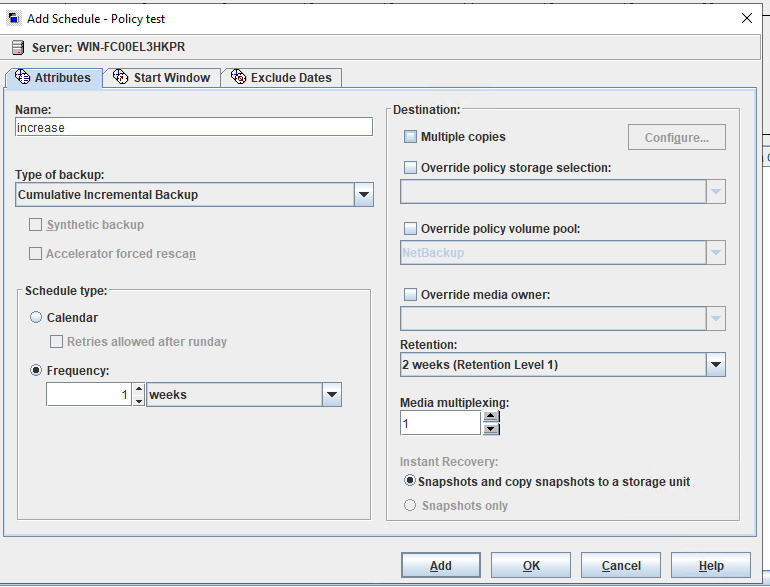

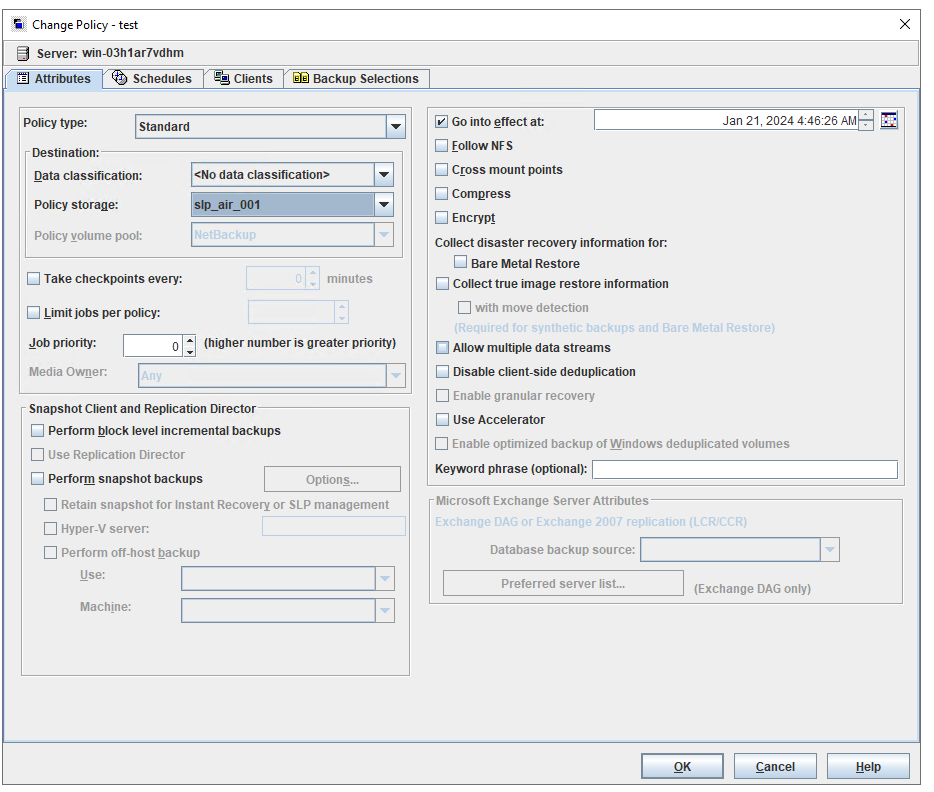

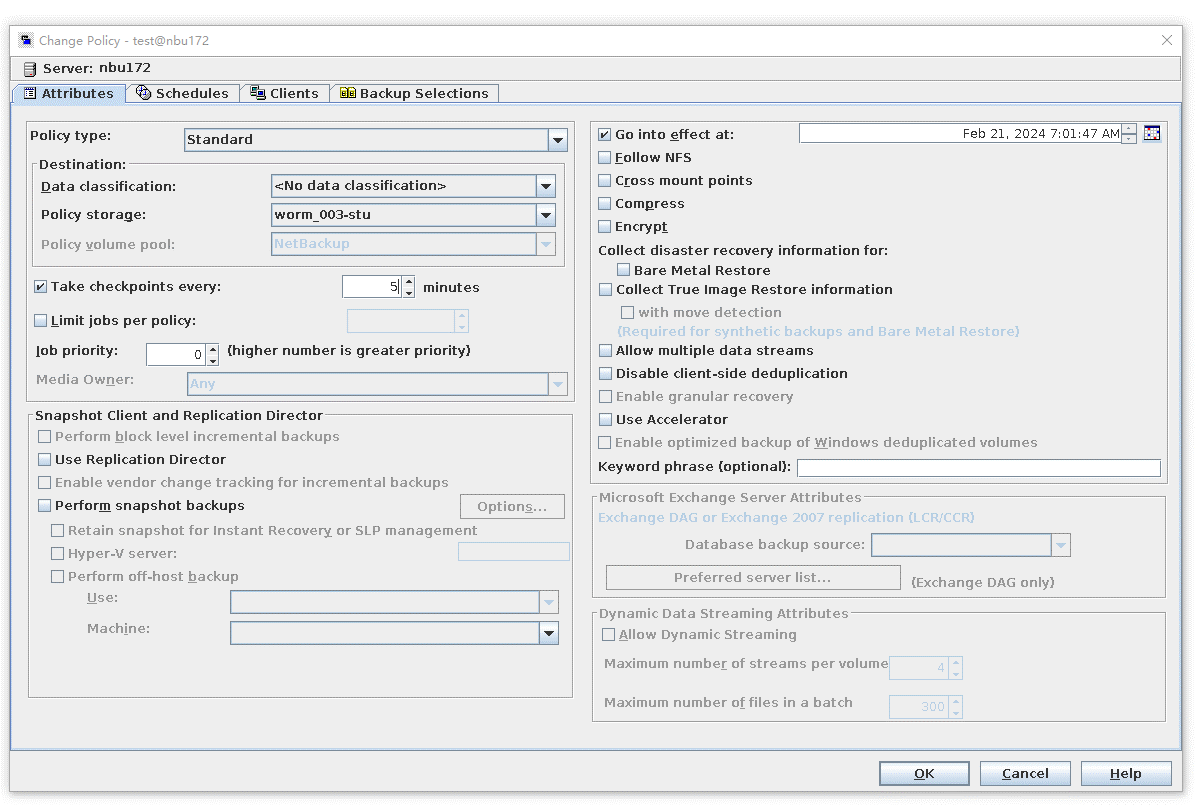

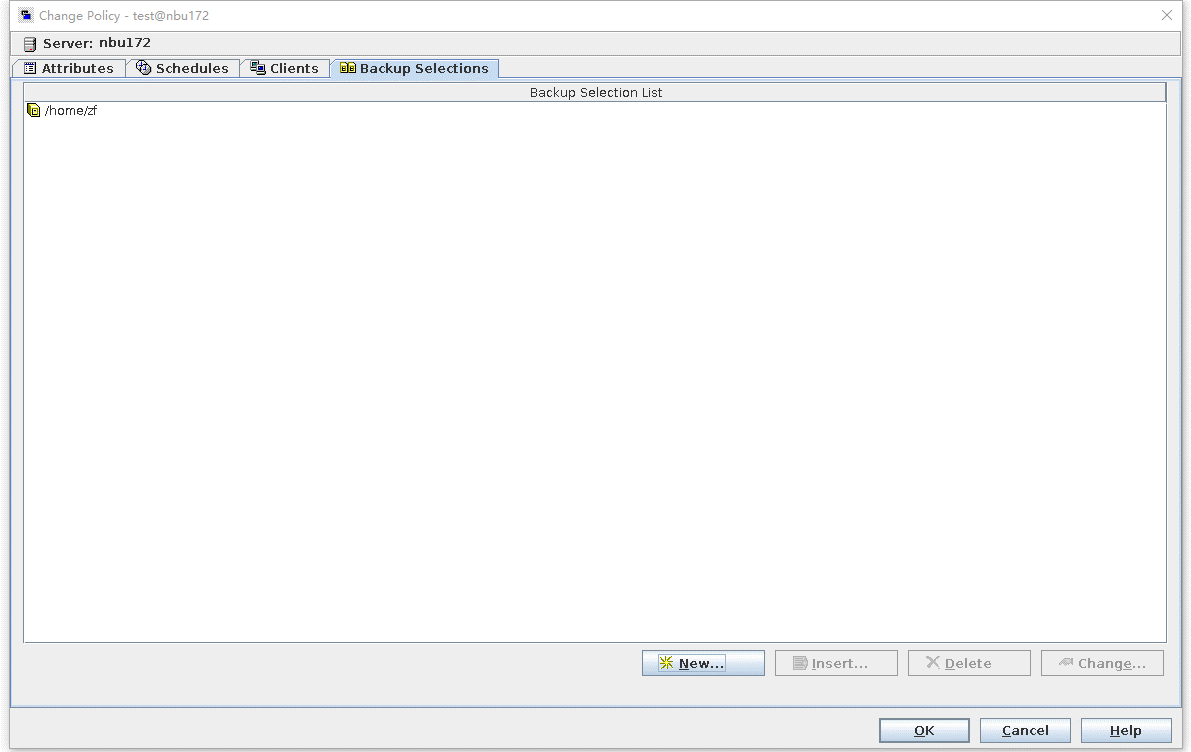

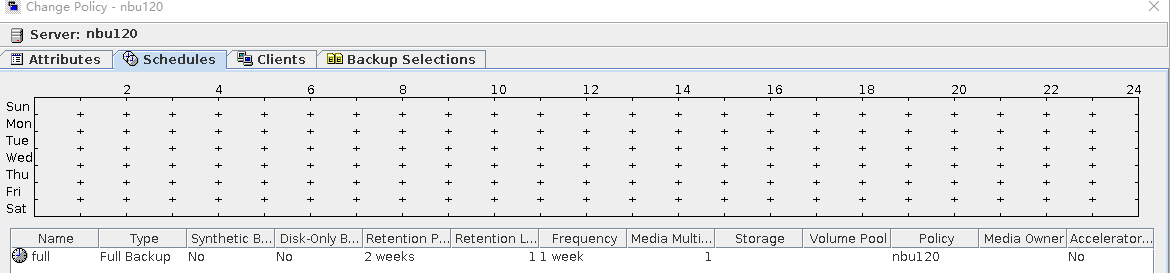

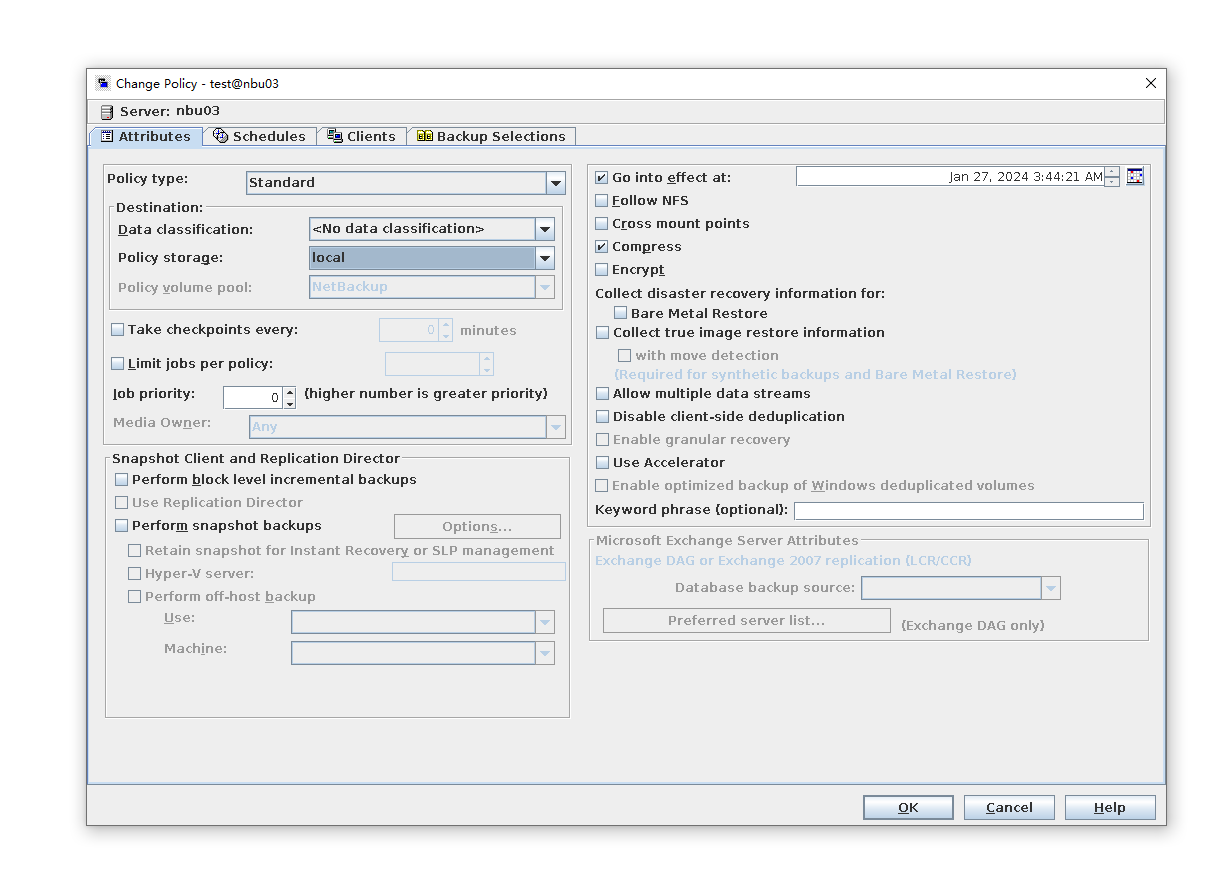

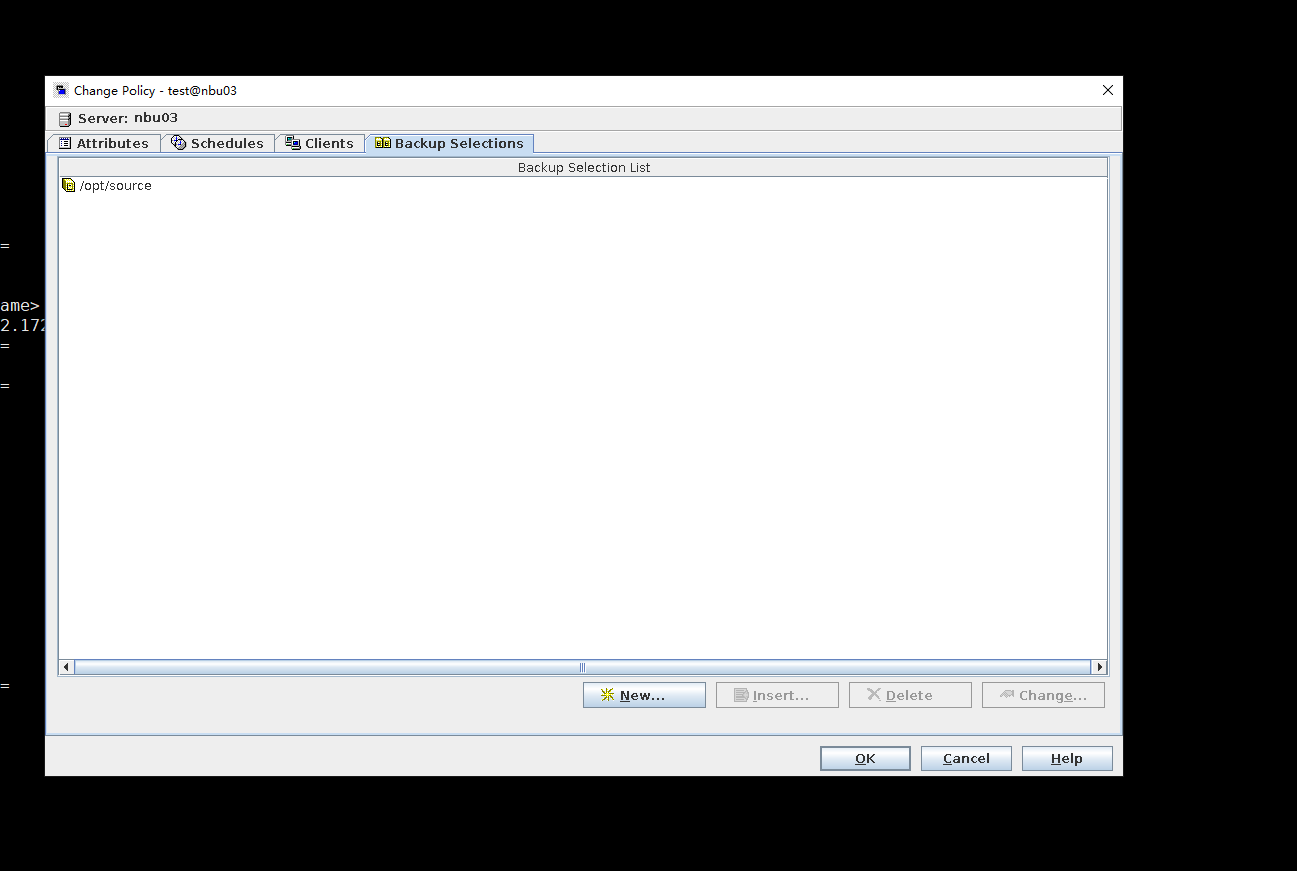

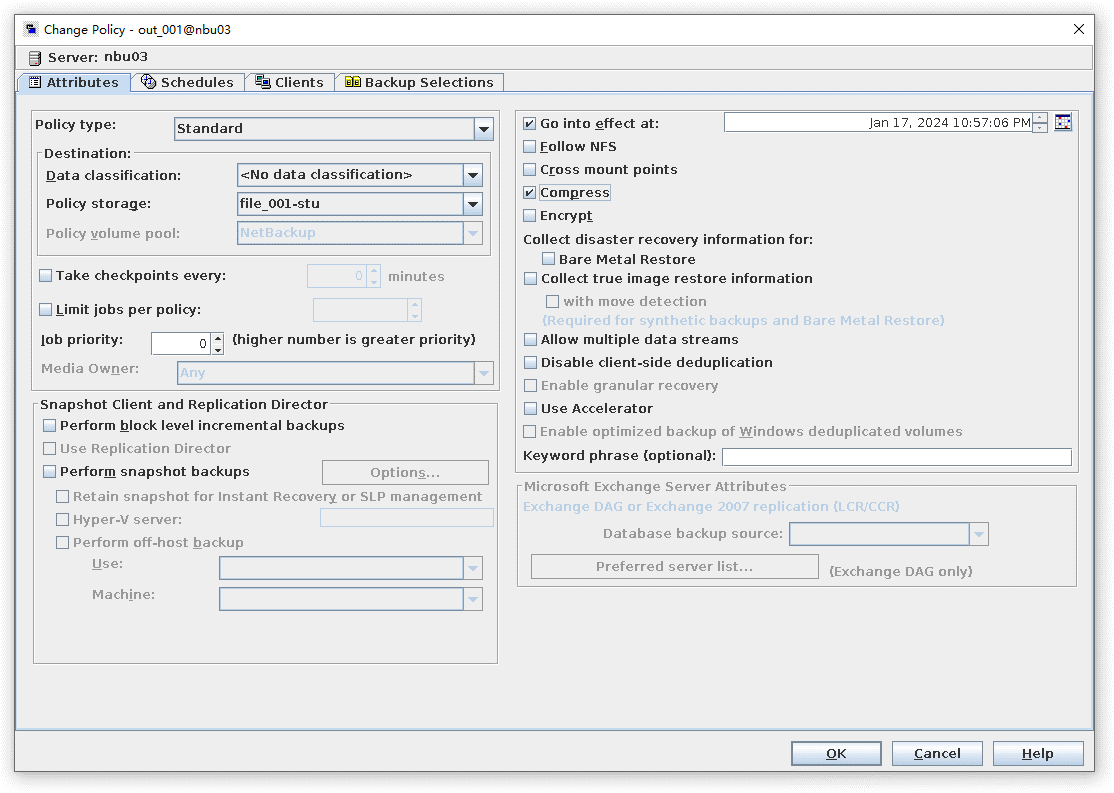

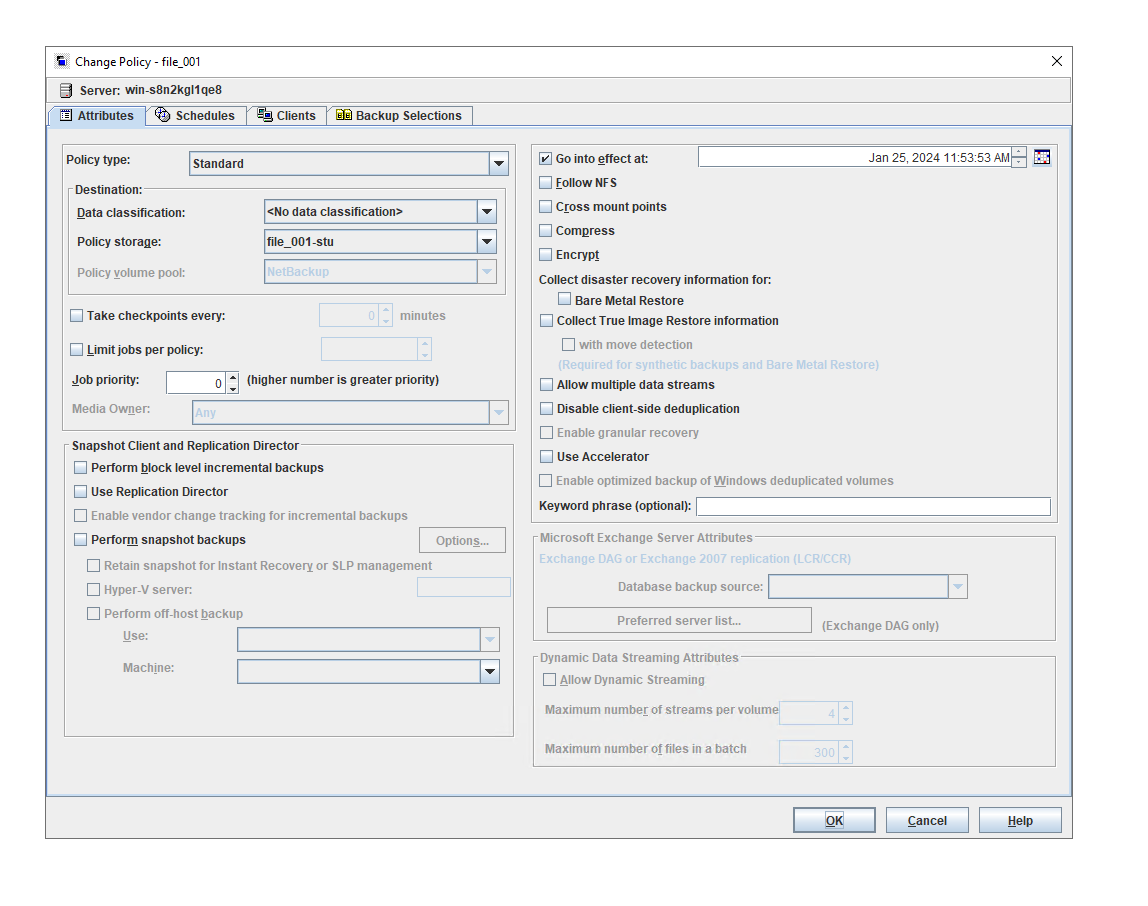

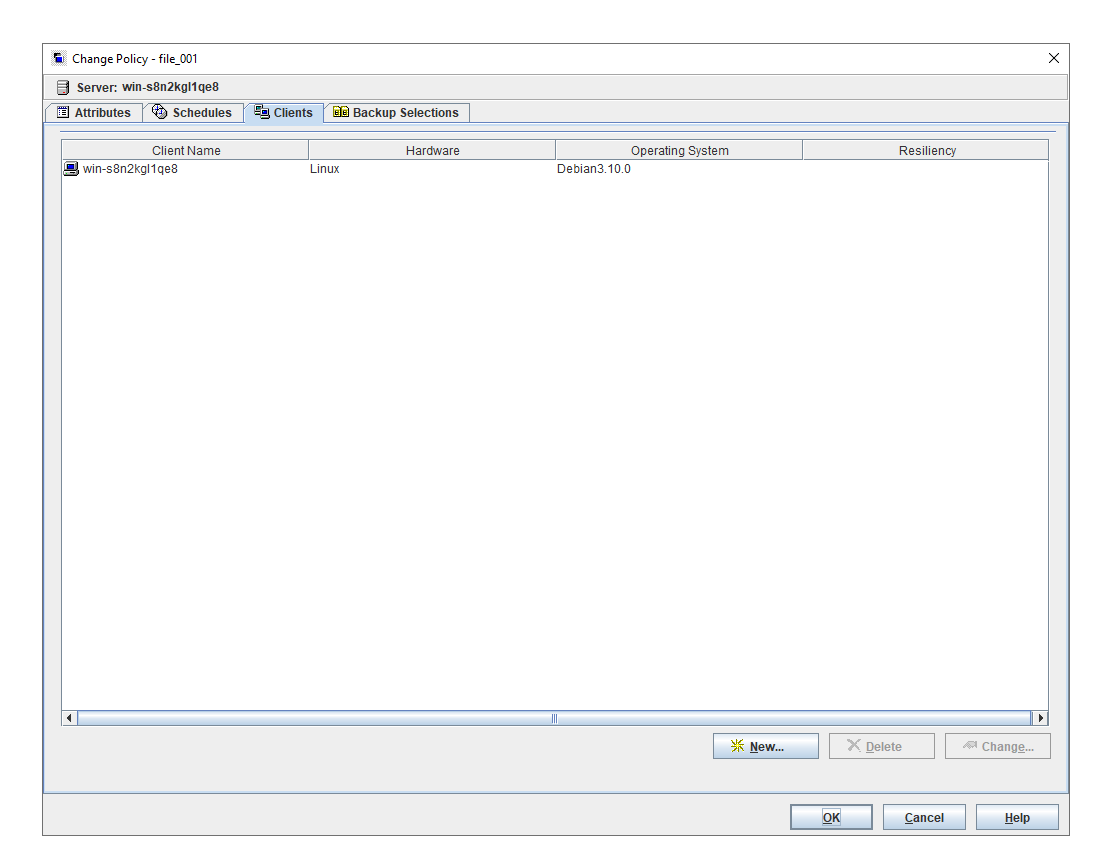

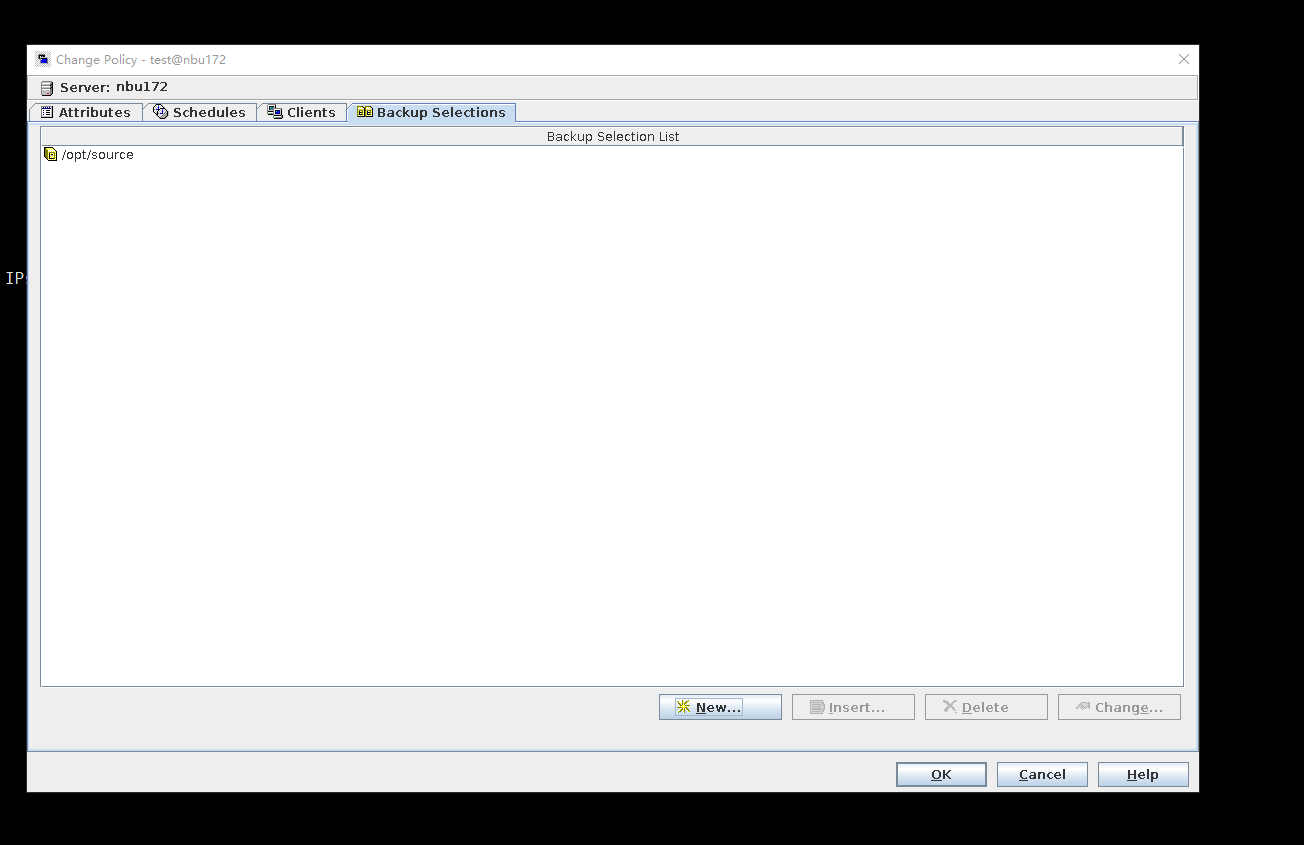

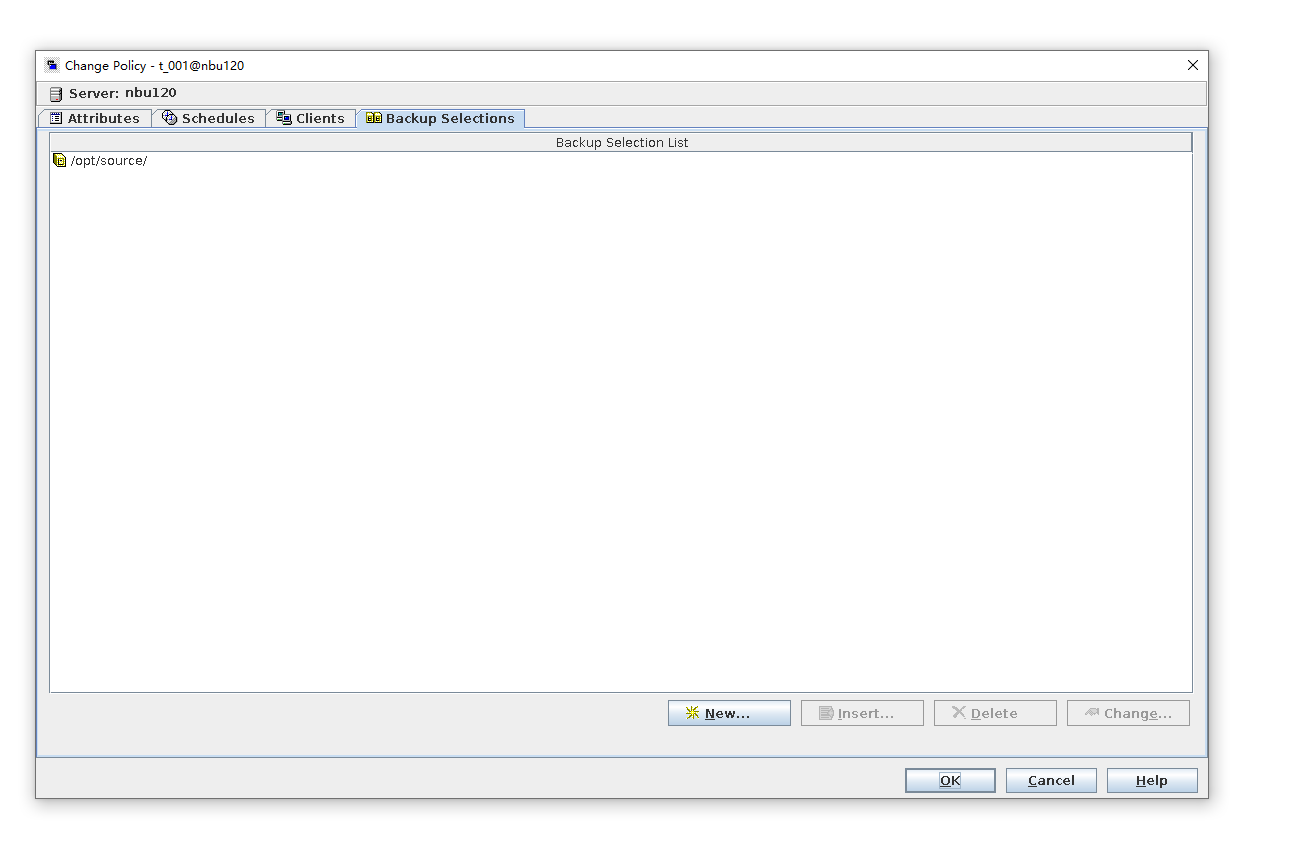

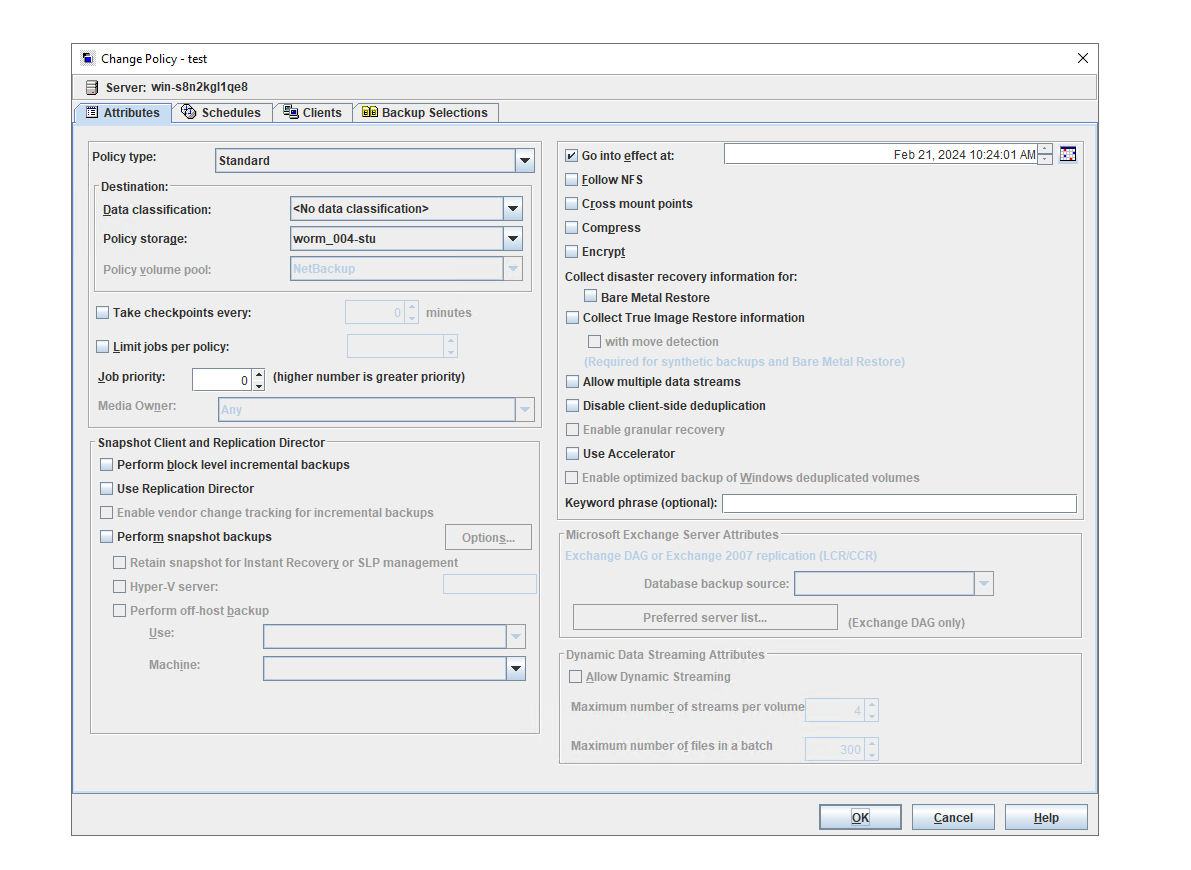

3.1.6 Policy Configuration for OpenStorage

Objective | To verify that the backup system can configure a policy for OceanProtect |

Networking | Networking diagram for verifying functions |

Prerequisites |

|

Procedure |

|

Expected Result |

|

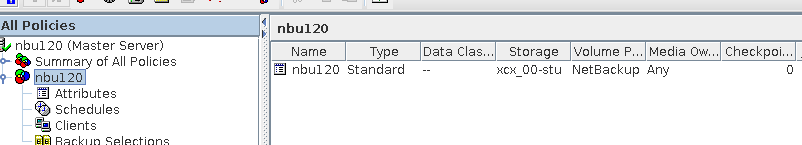

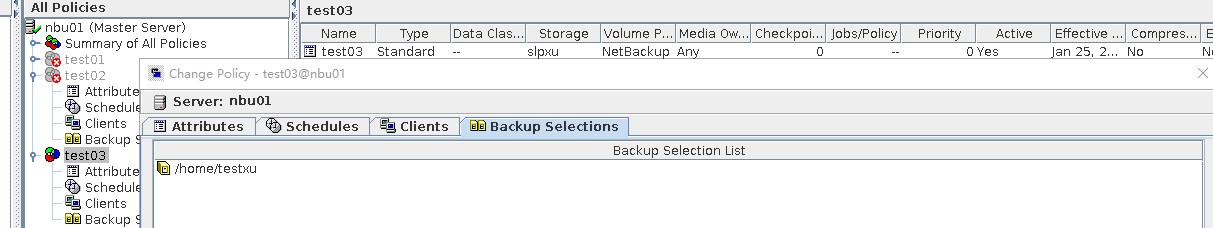

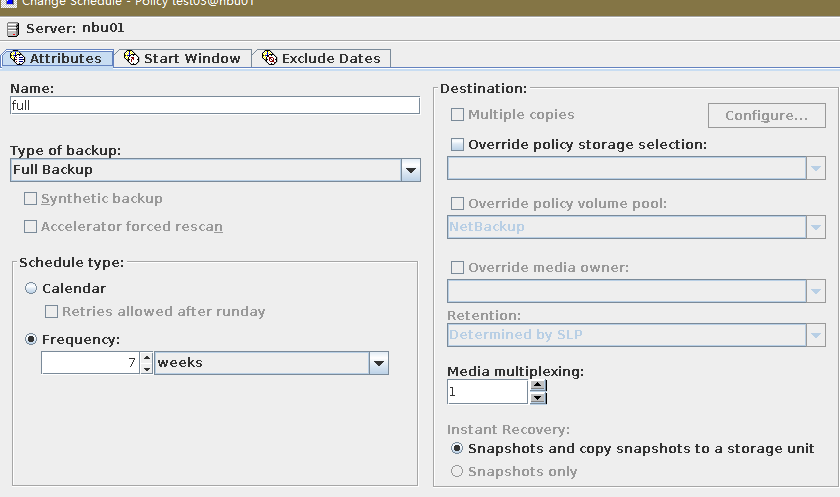

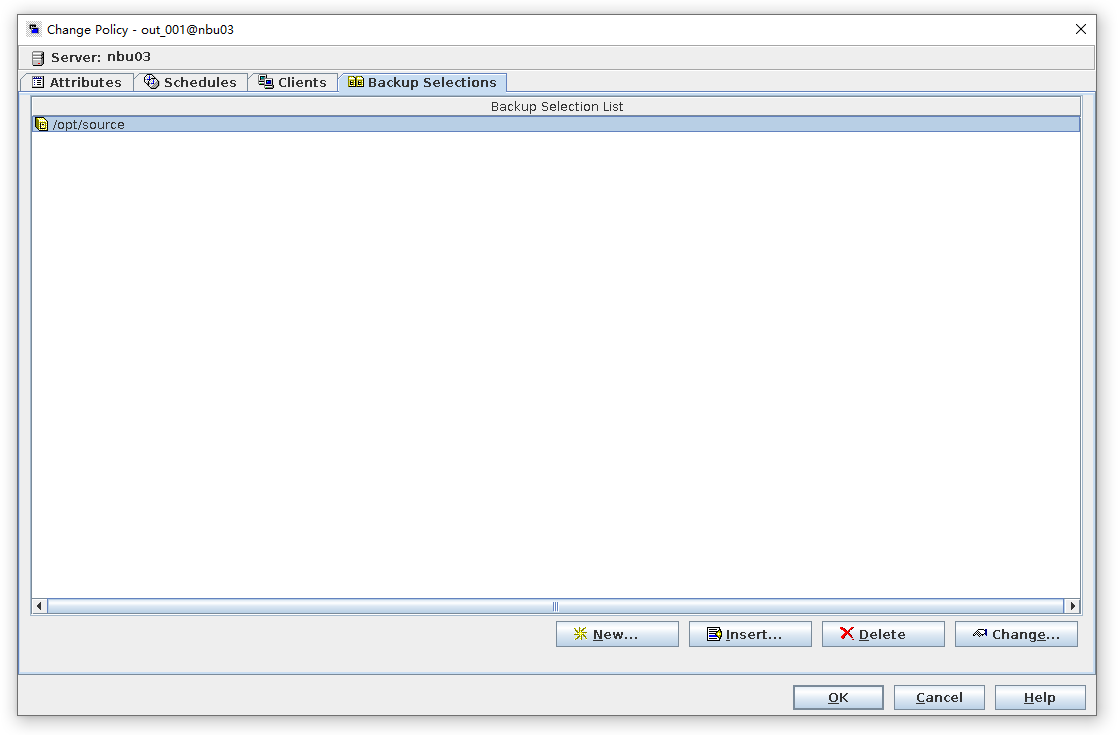

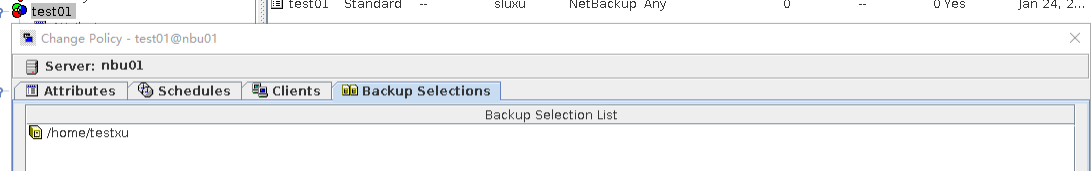

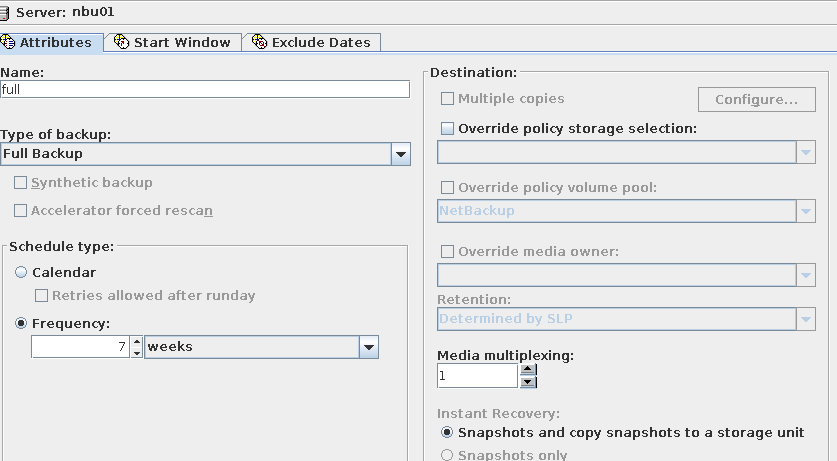

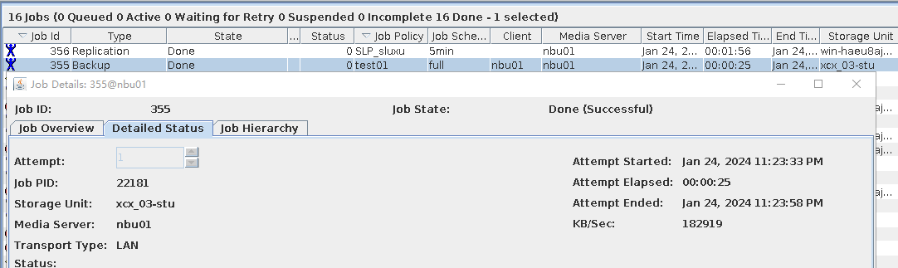

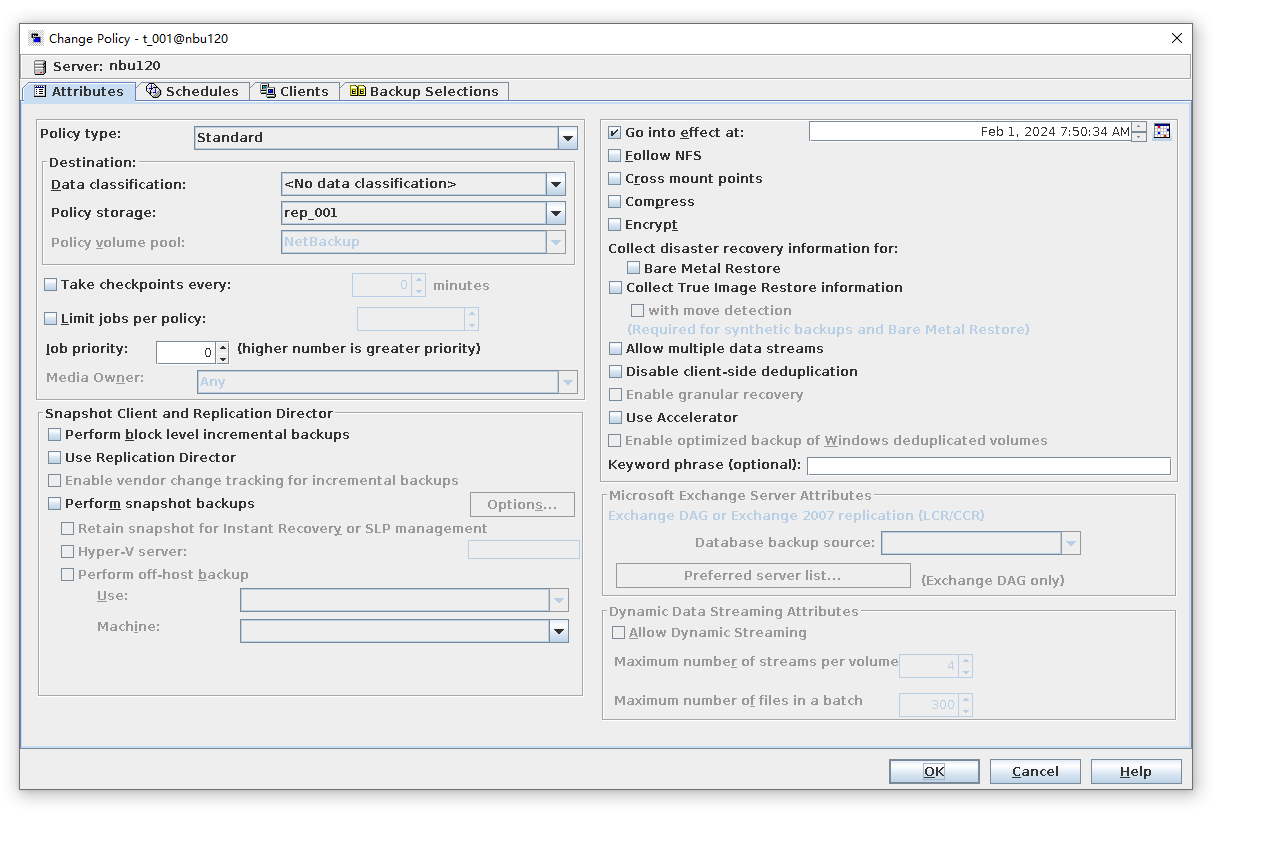

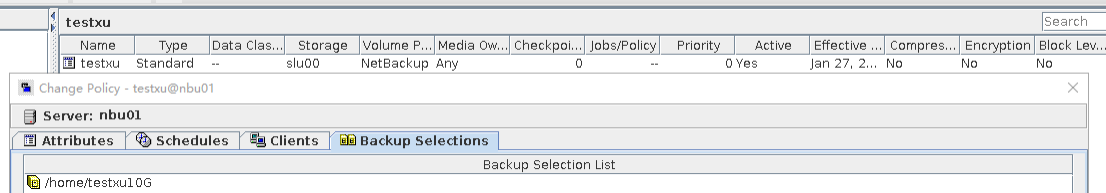

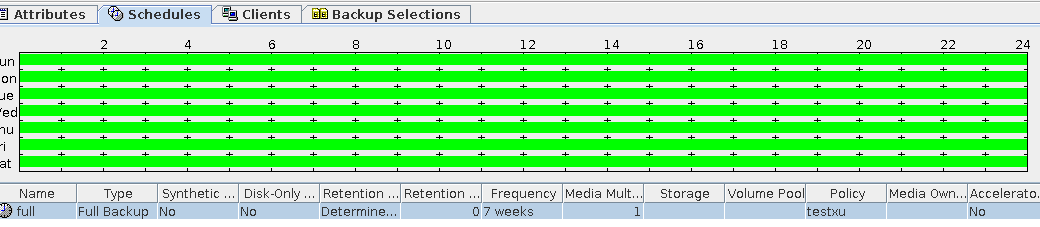

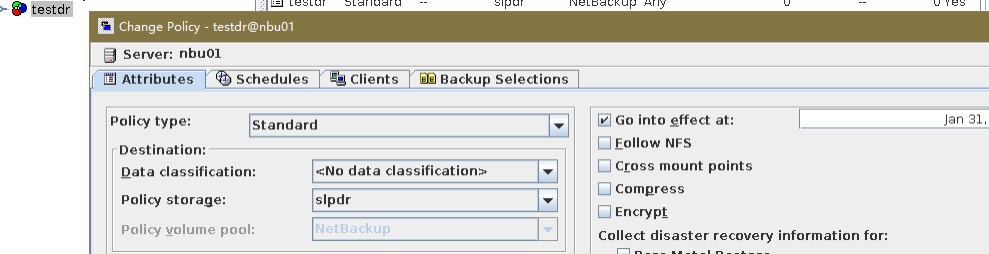

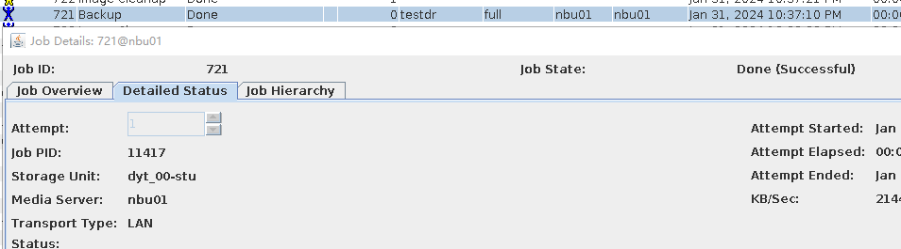

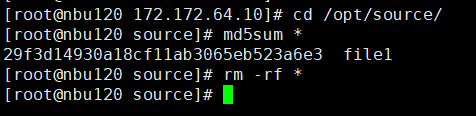

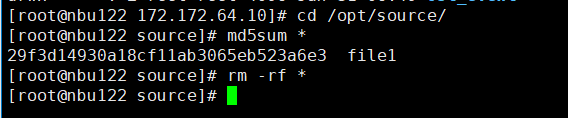

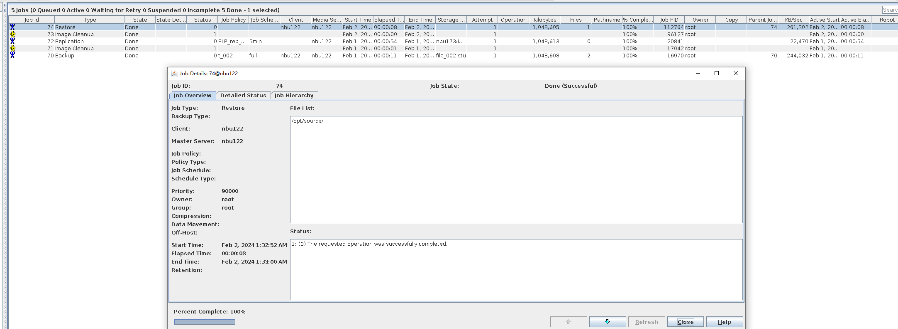

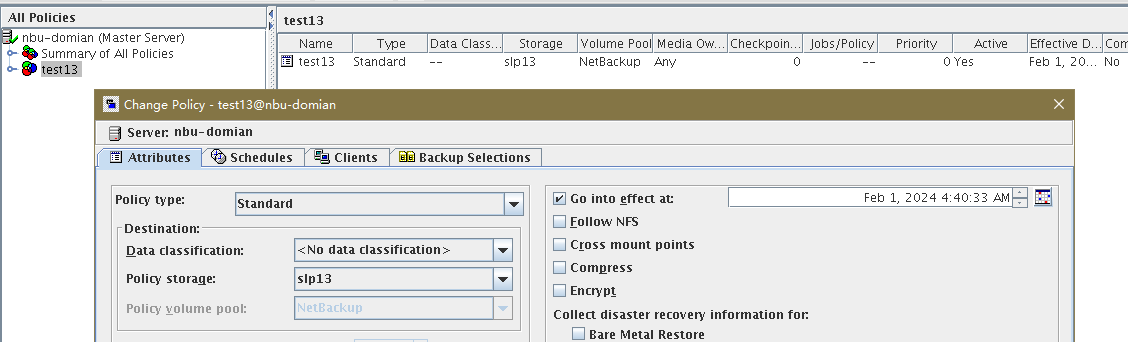

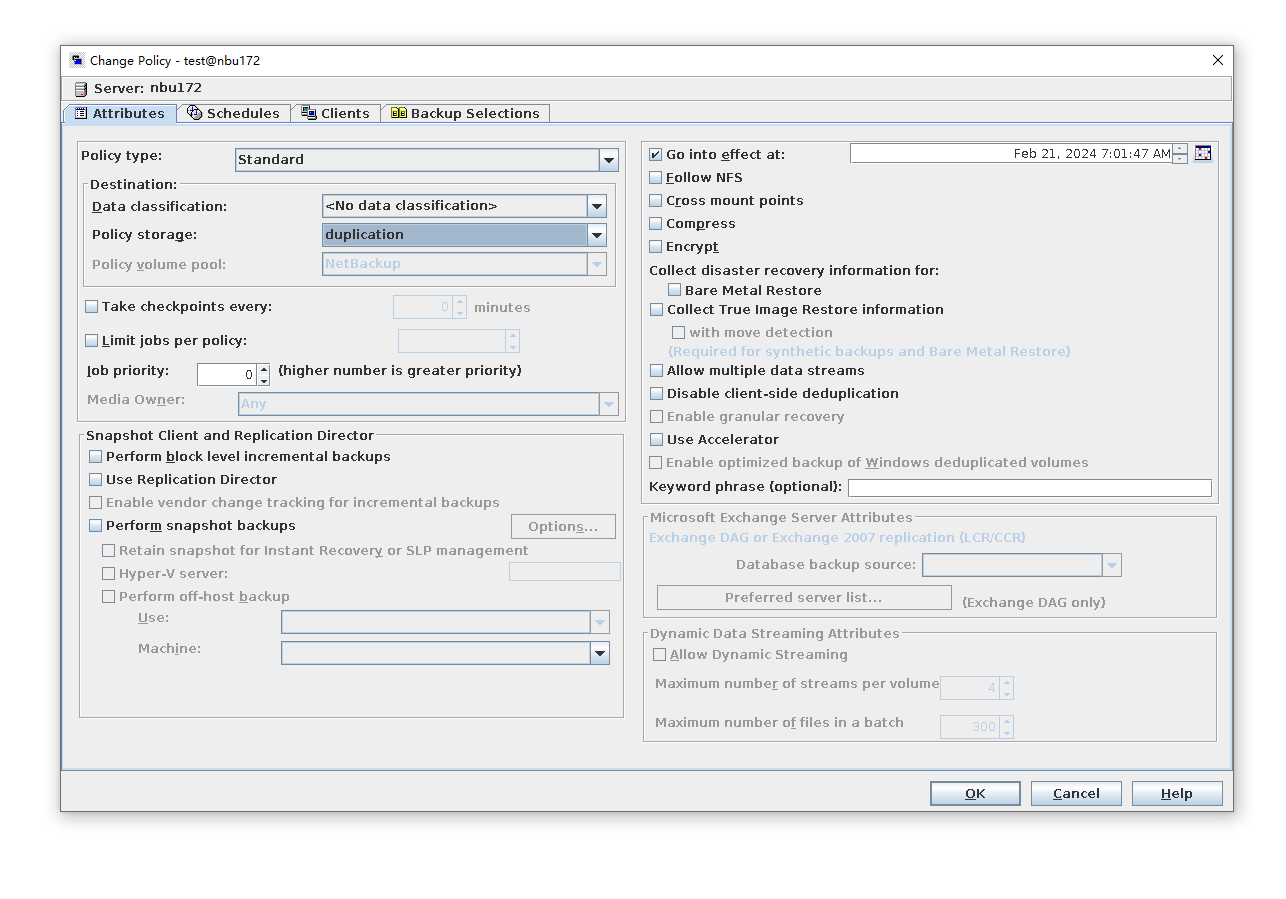

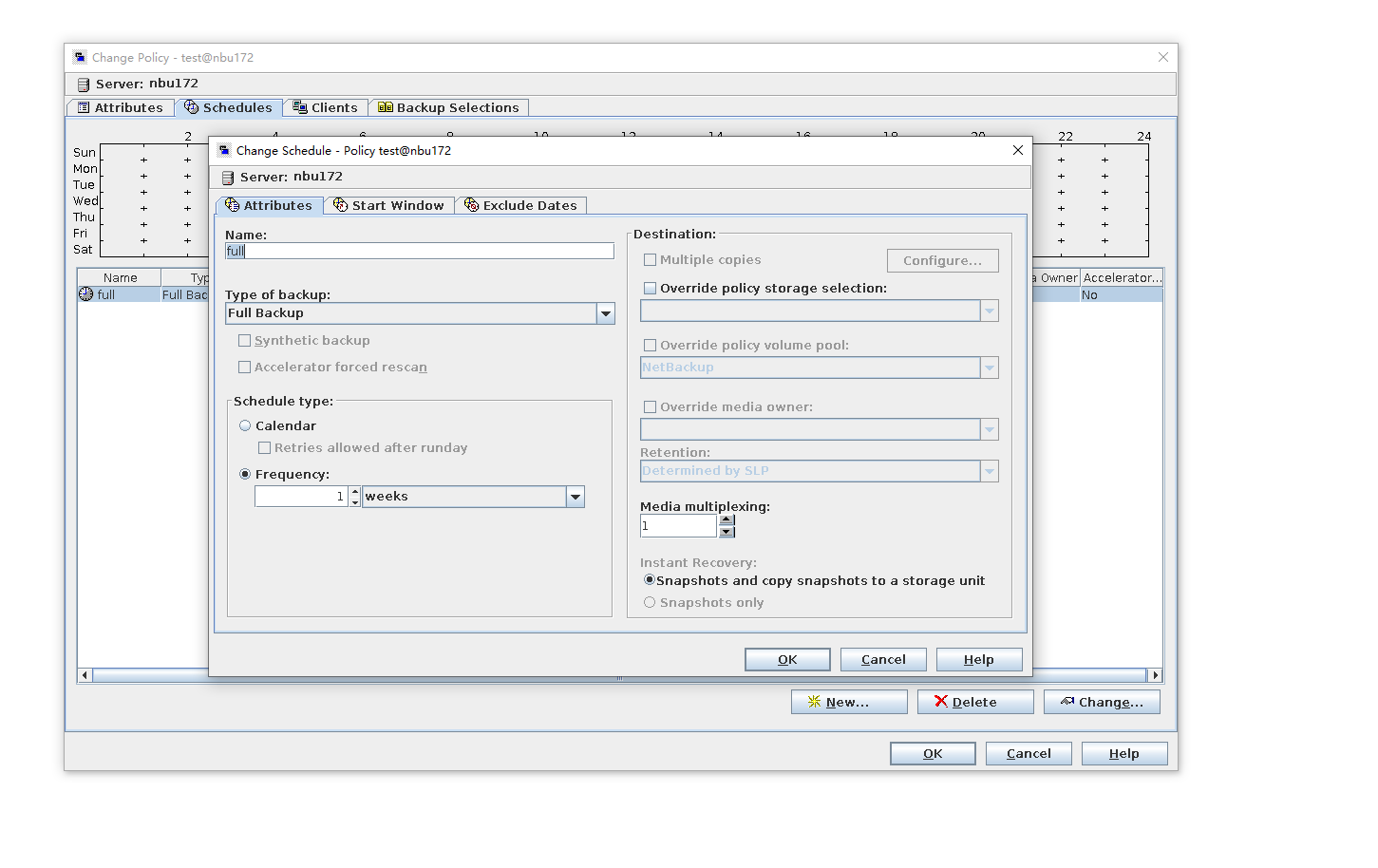

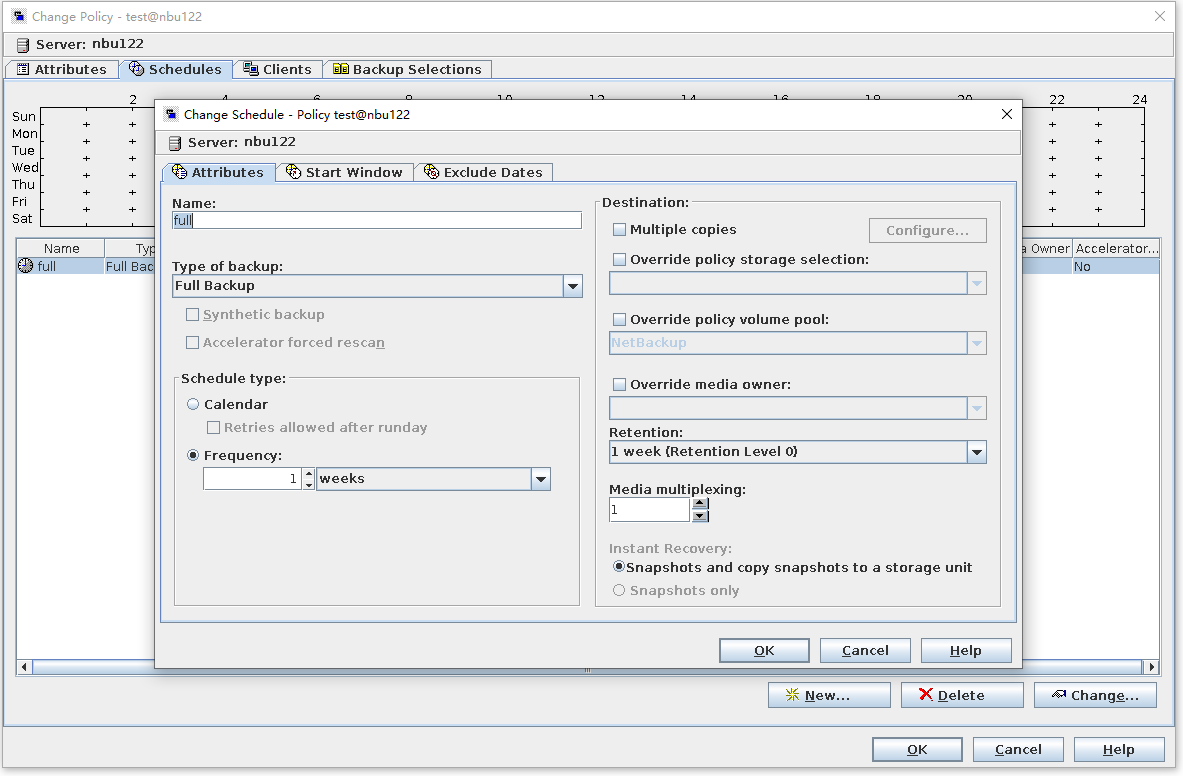

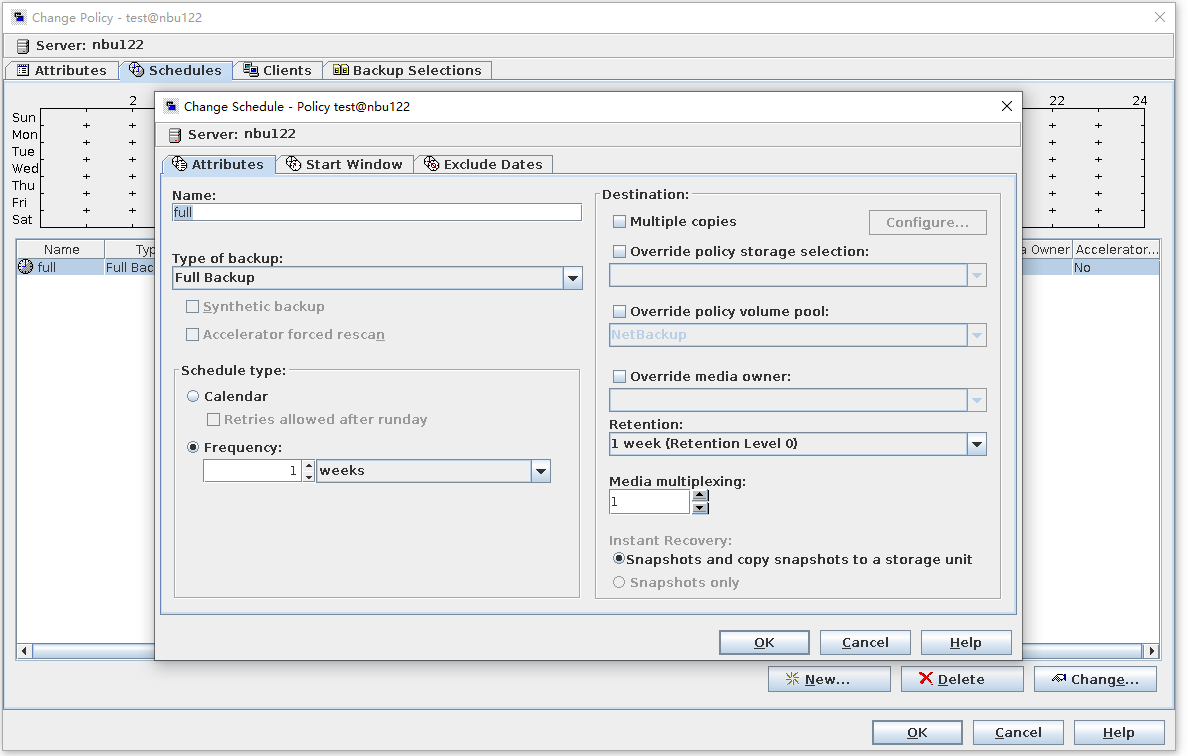

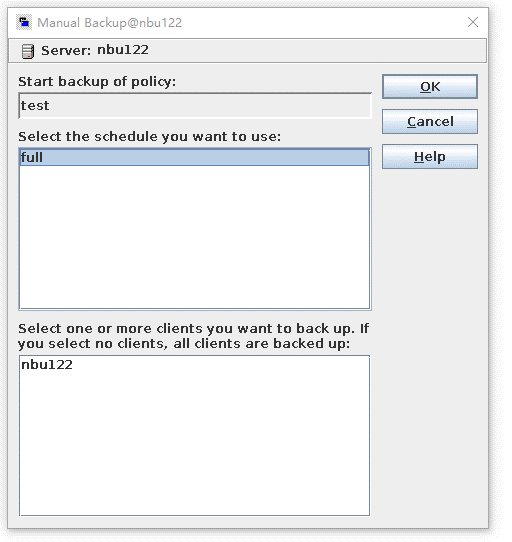

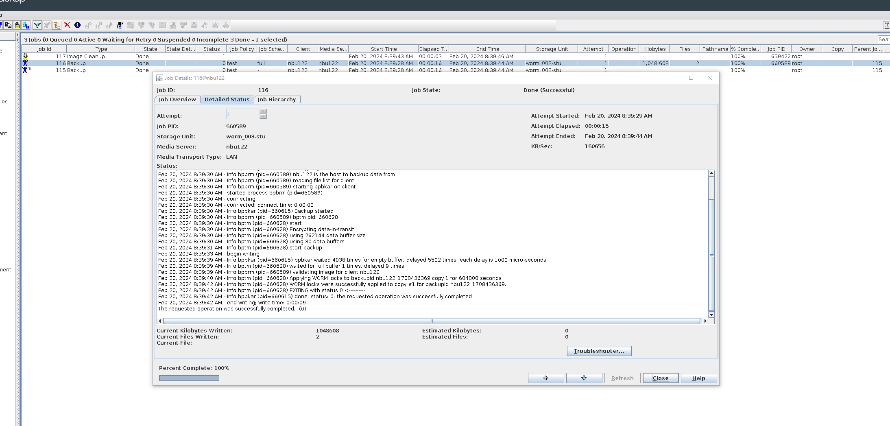

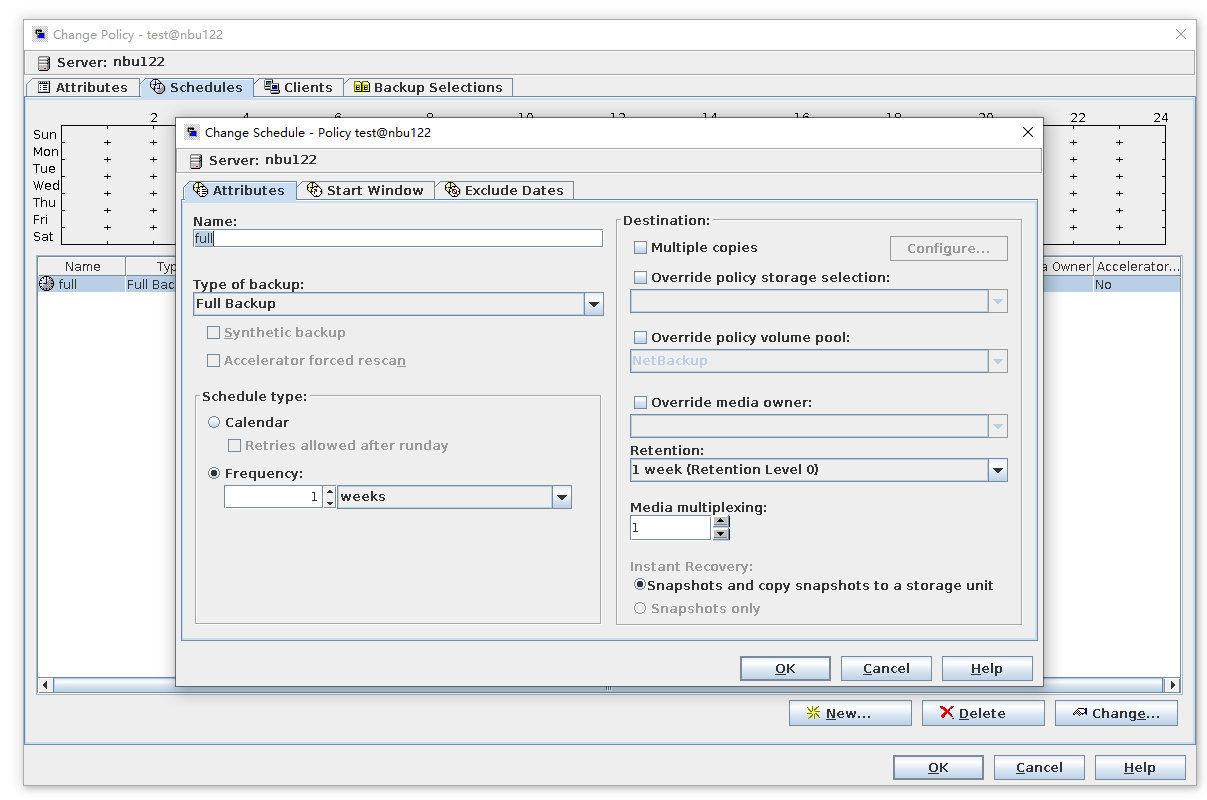

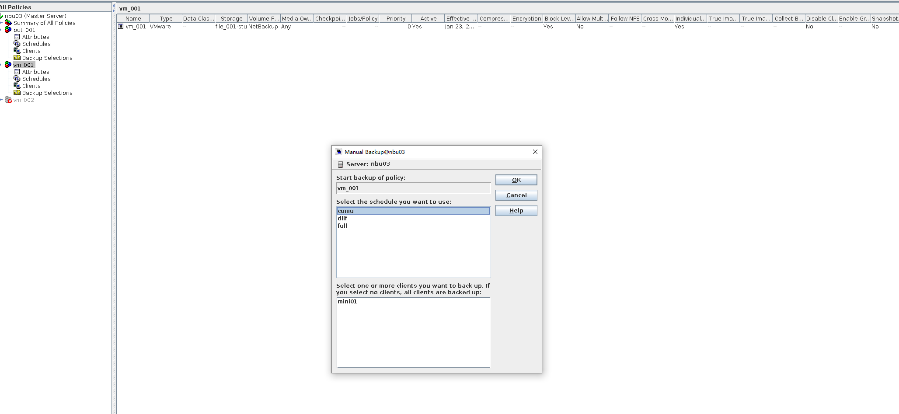

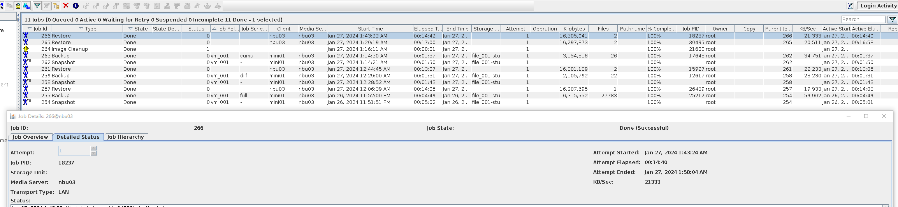

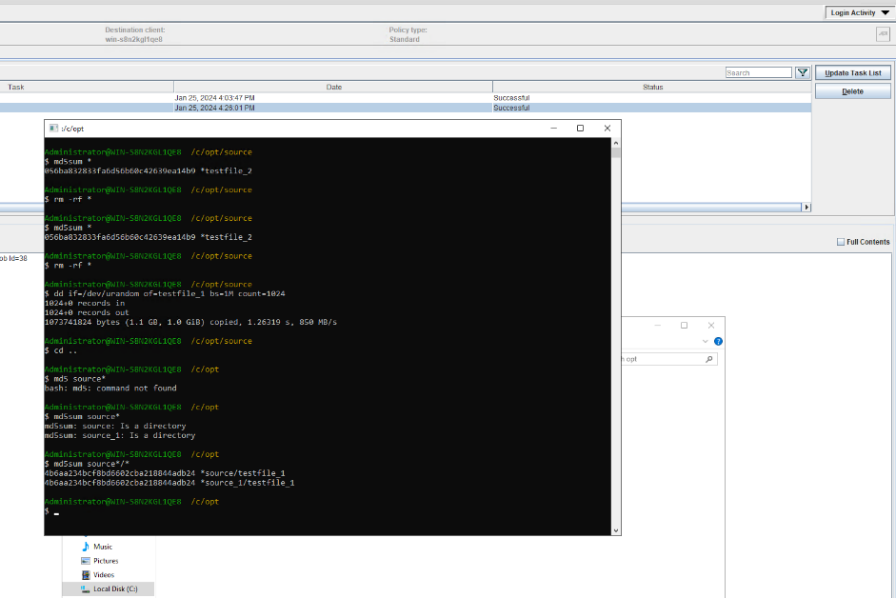

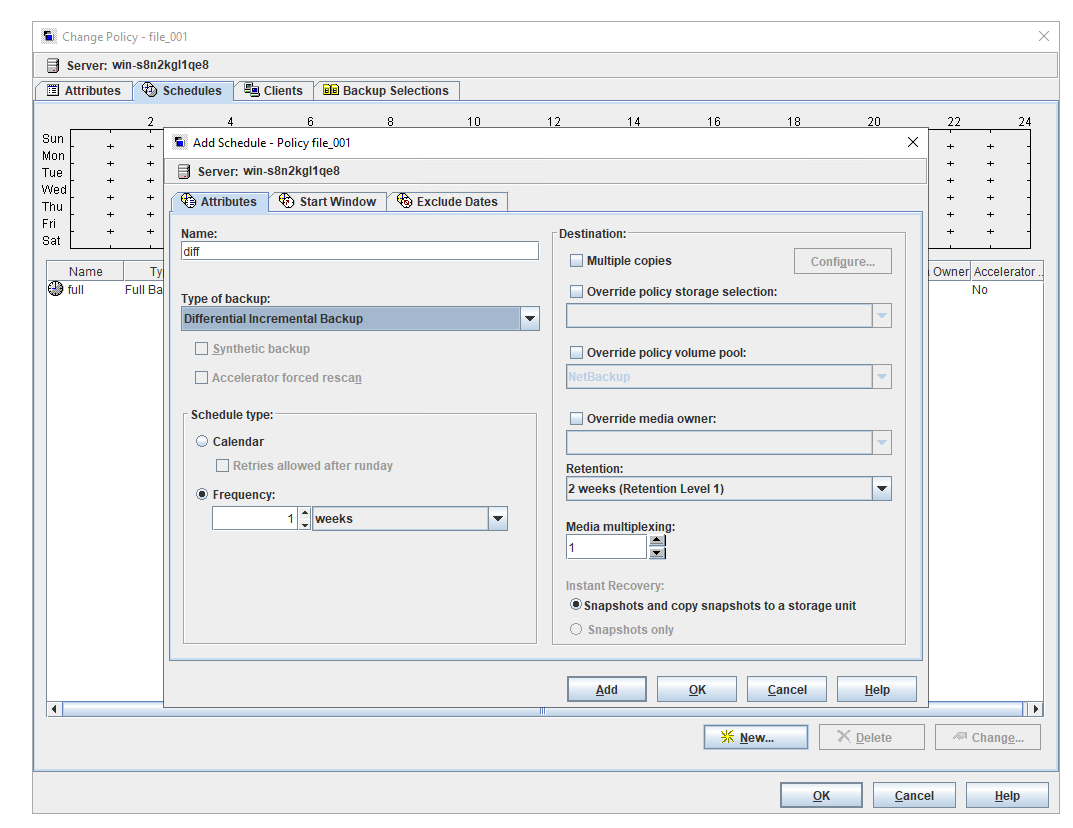

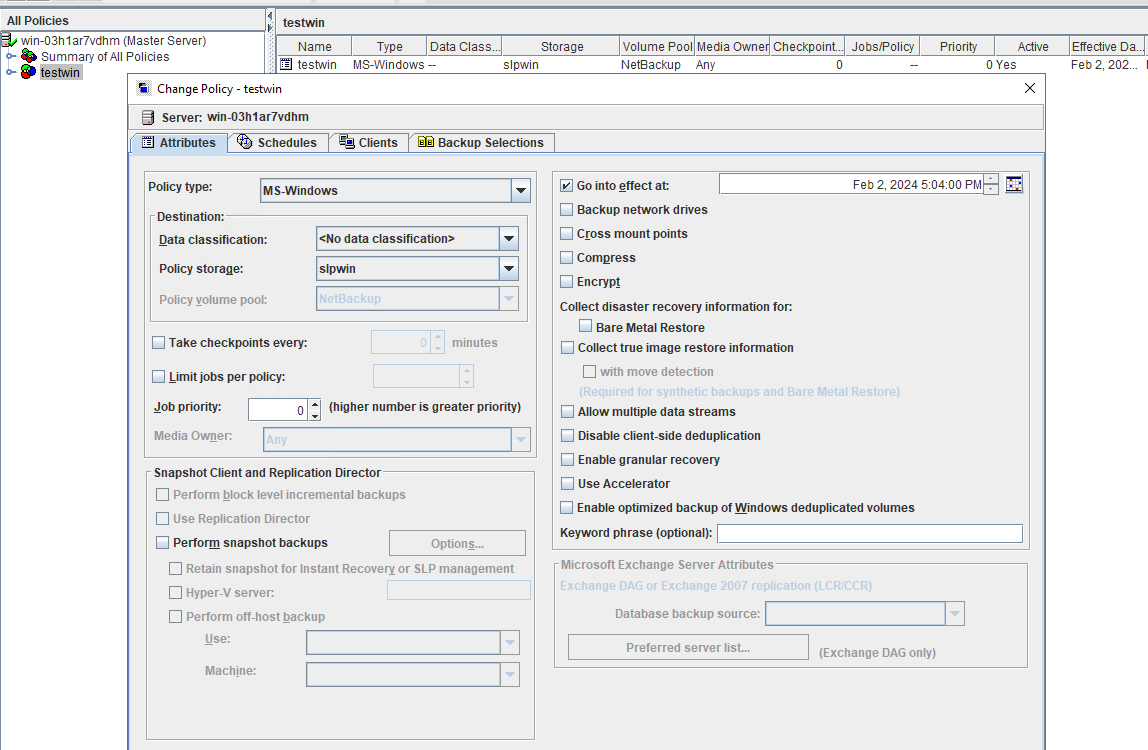

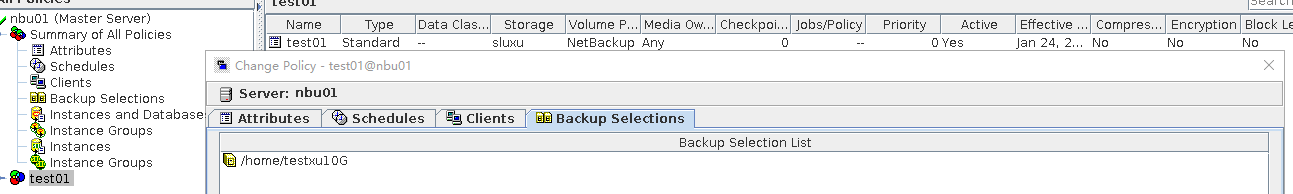

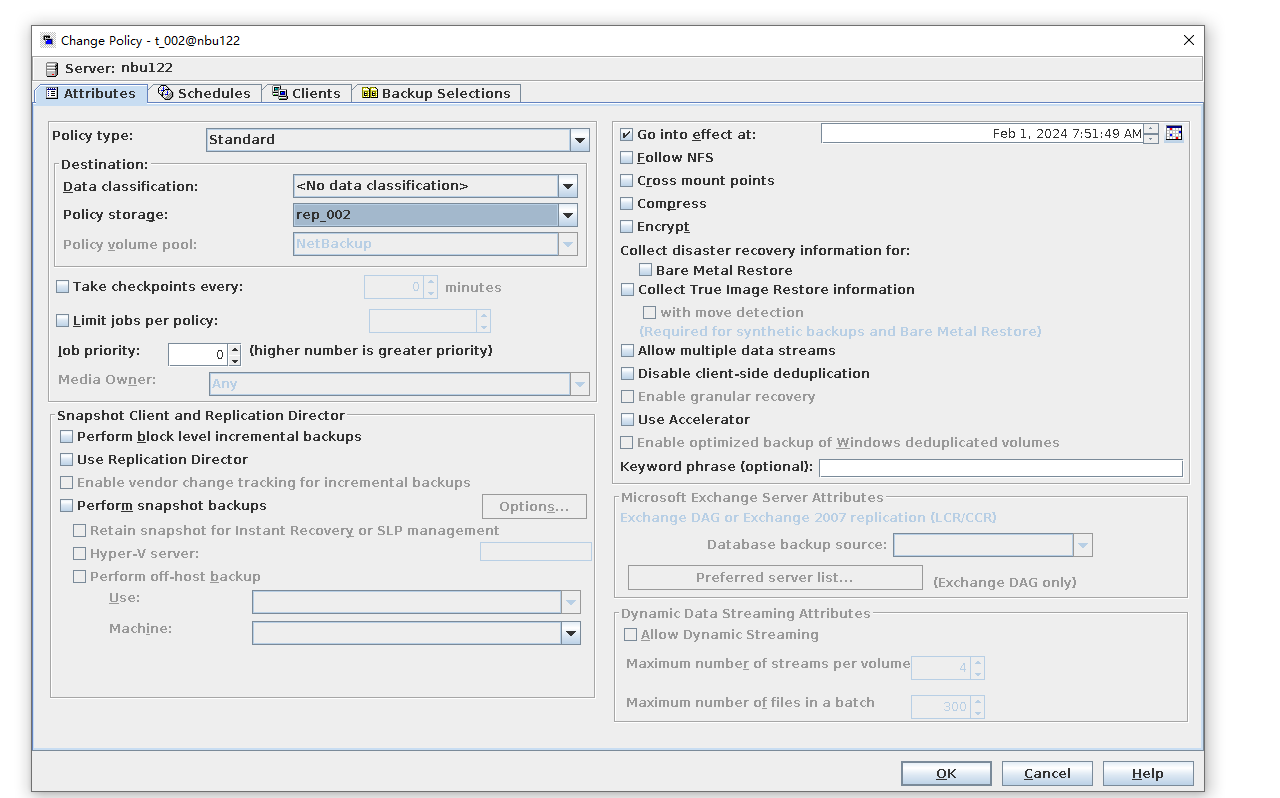

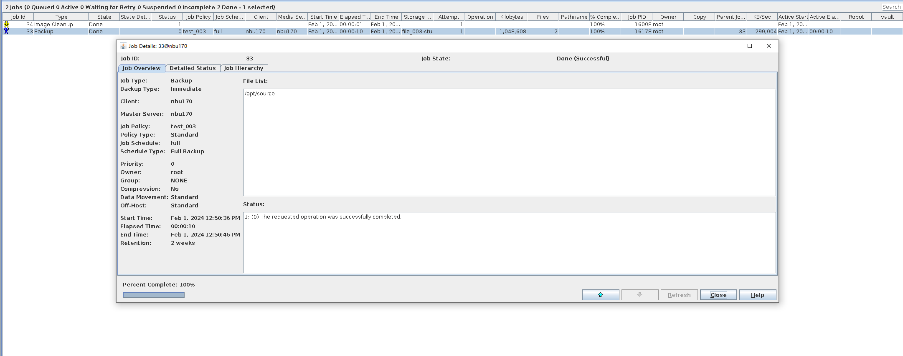

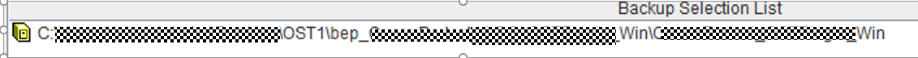

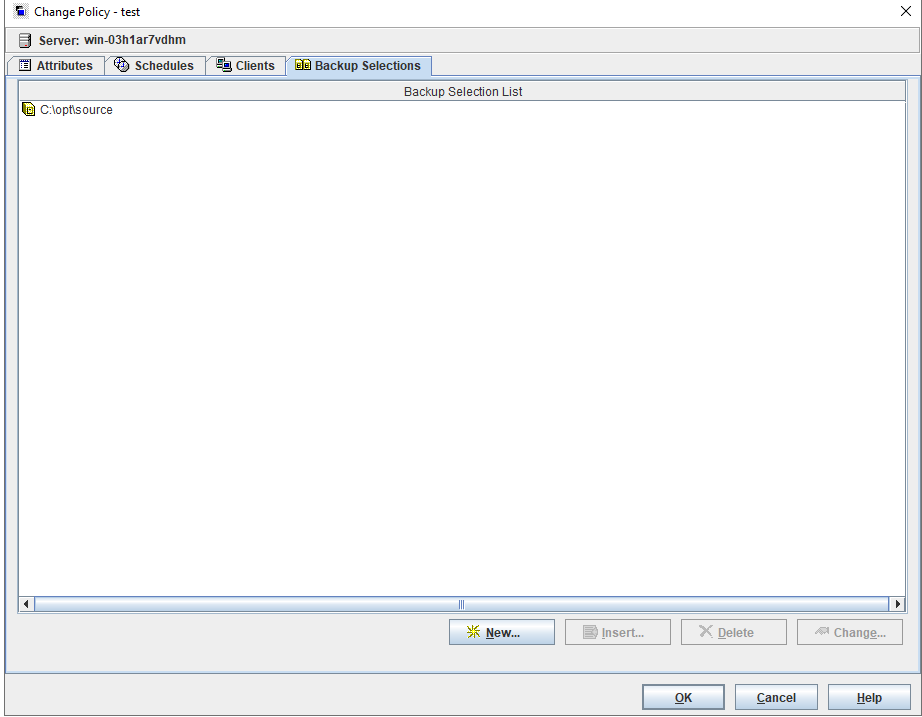

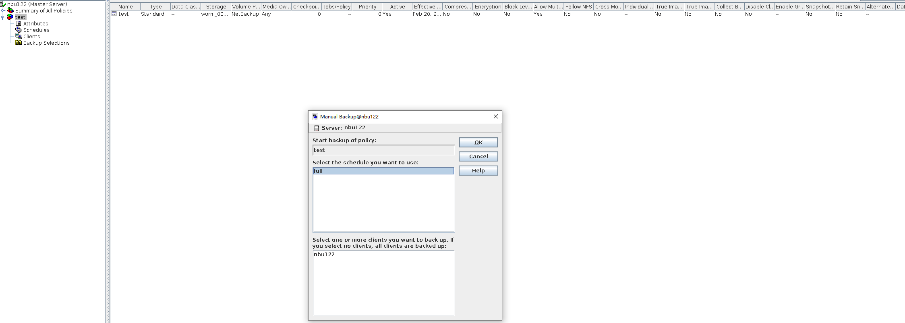

Test Result | 1. Create a storage server, disk pool, storage unit, and policy.

2. Insert the 1 GB file testfile_1 and perform full backup.

3. Restore data and verify data consistency in testfile_1.

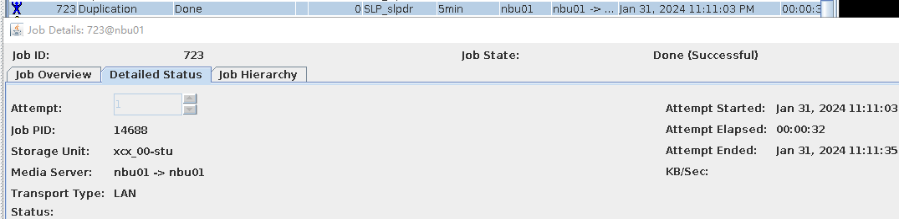

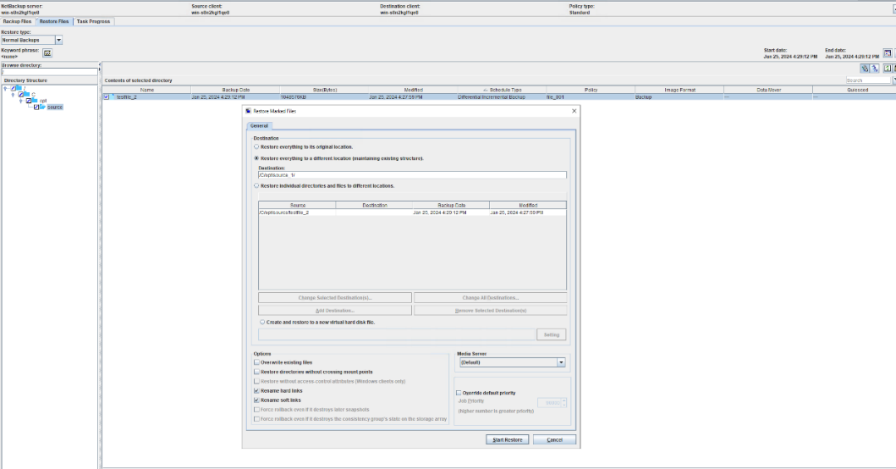

4. Insert the 1 GB file testfile_2 and perform differential incremental backup.

5. Restore the data and verify the data consistency of testfile_2.

6. Modify the 1 GB file testfile_2 and perform permanent incremental backup.

7. Restore the data and verify the data consistency of testfile_2.

|

Conclusion |

|

Remarks |

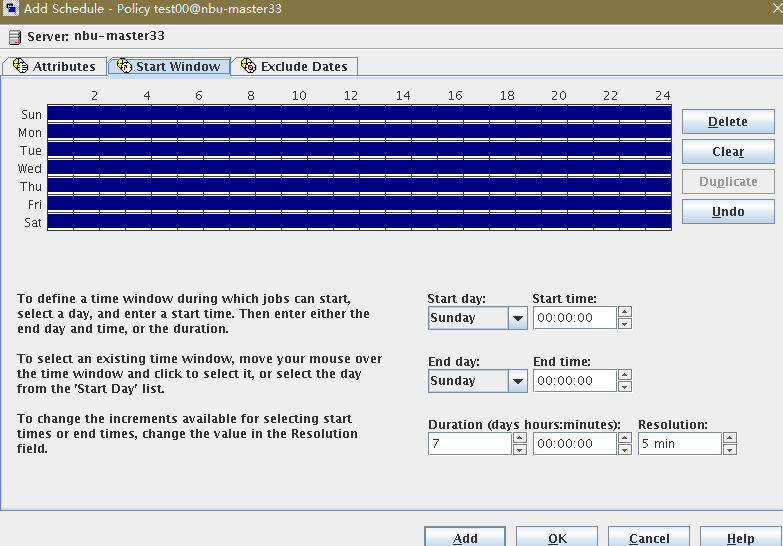

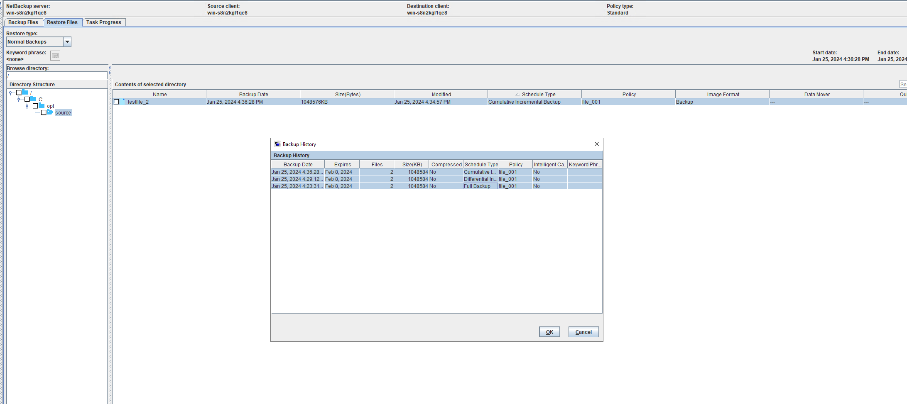

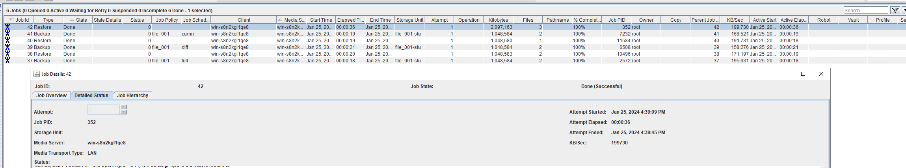

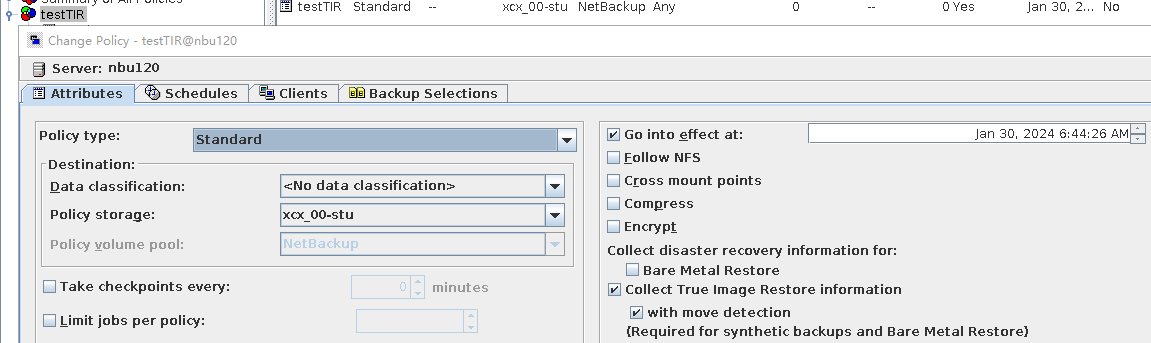

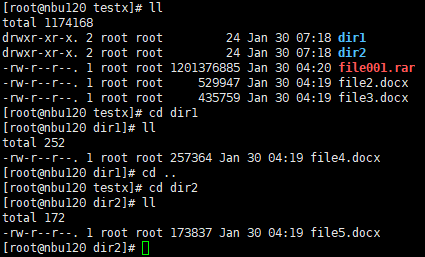

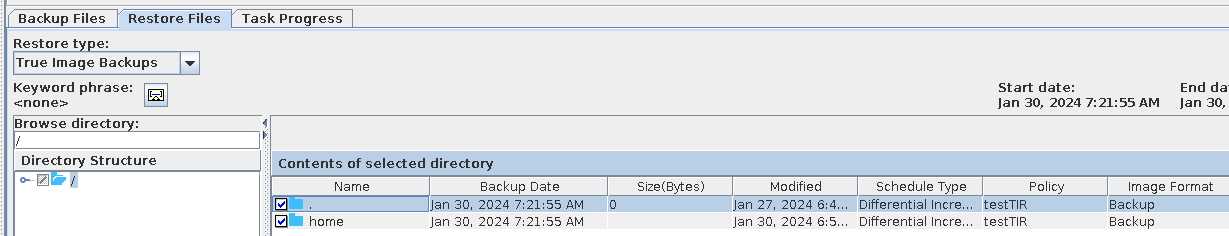

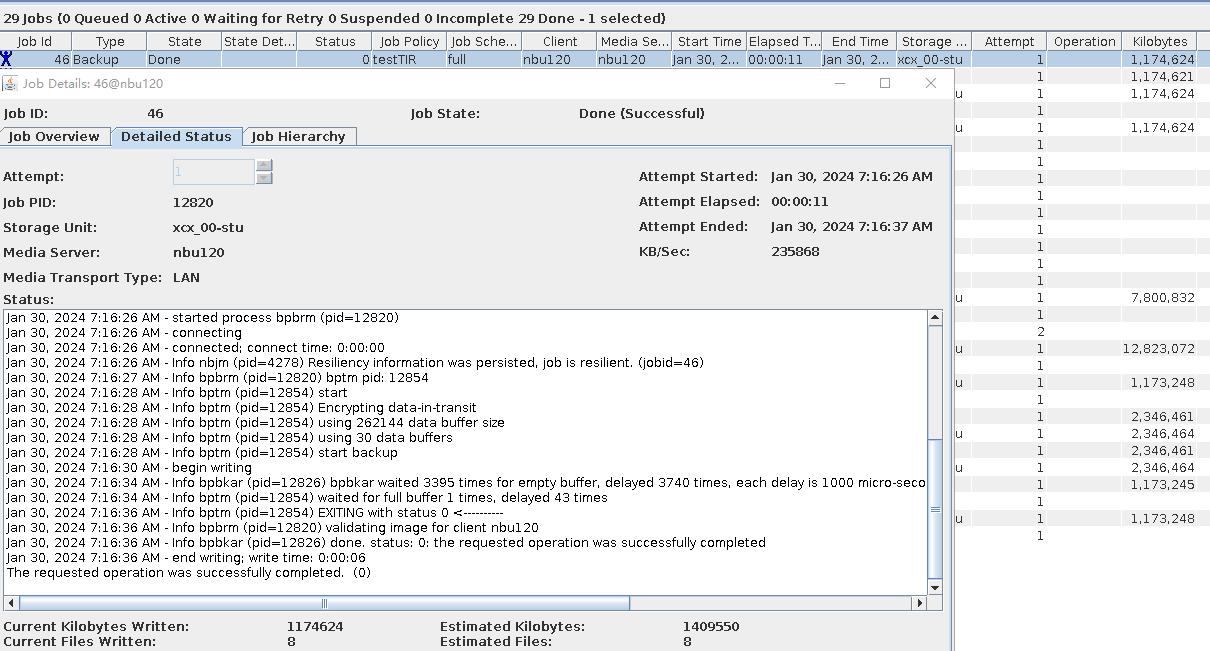

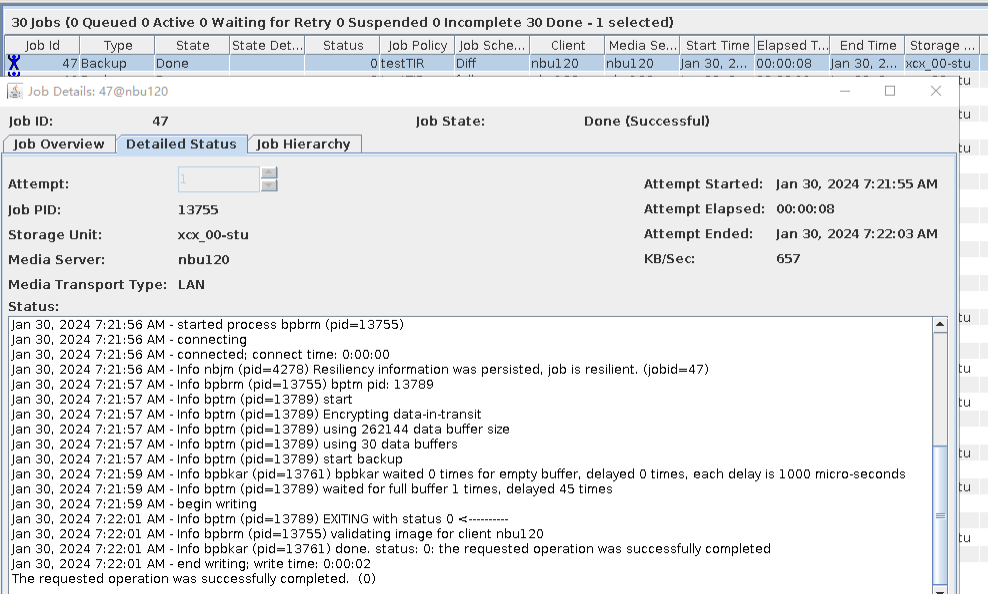

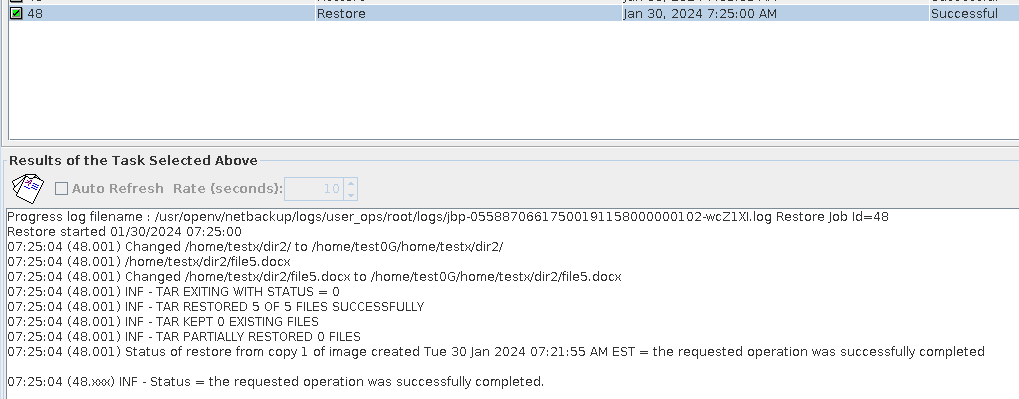

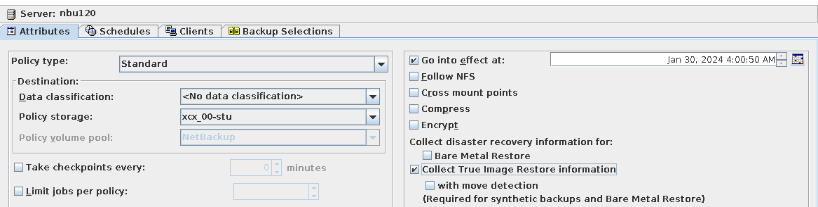

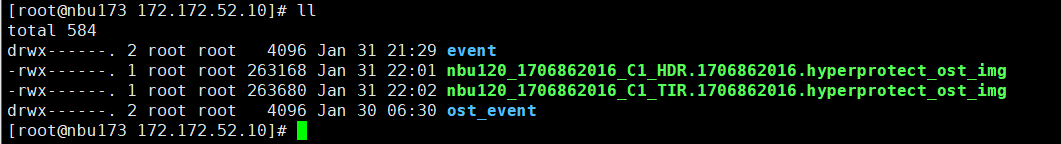

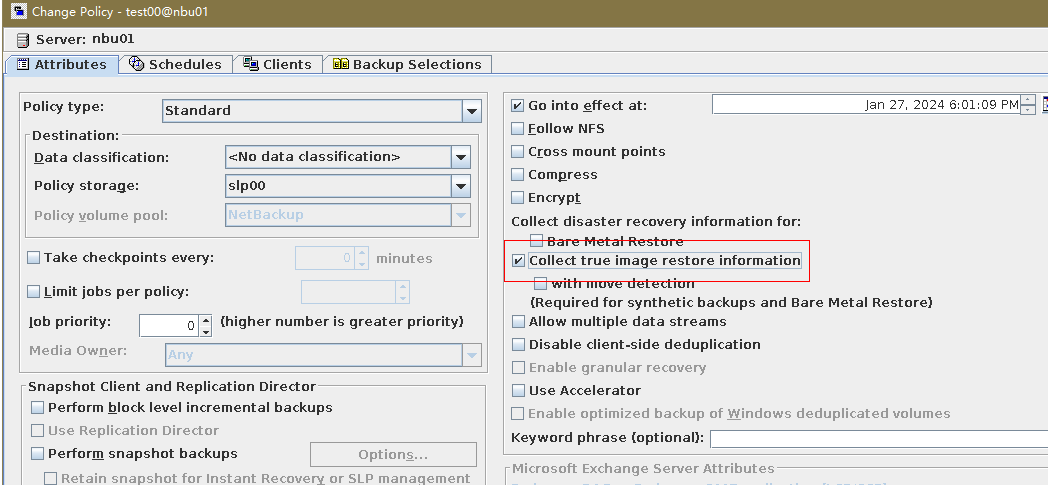

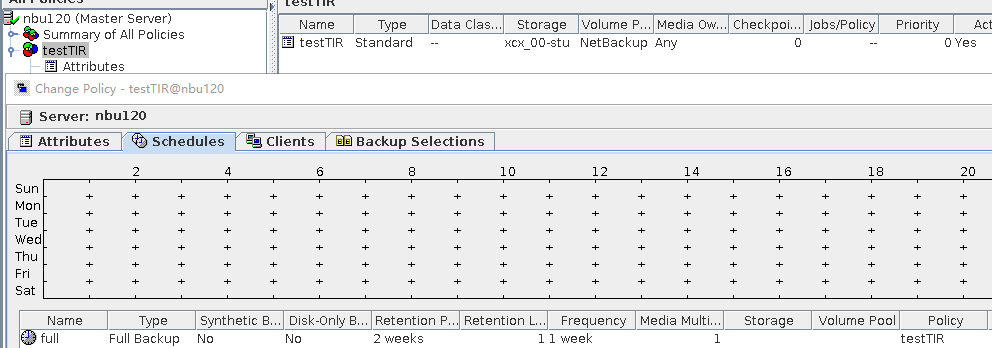

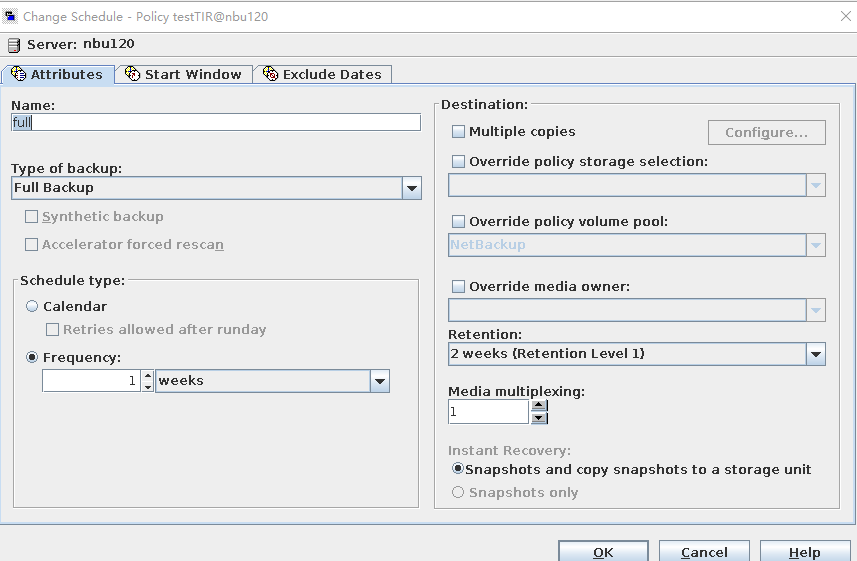

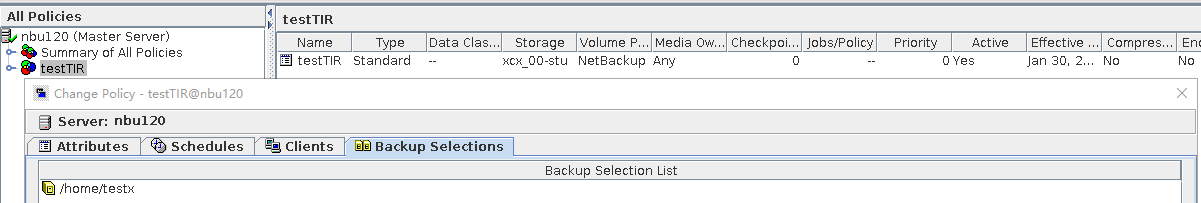

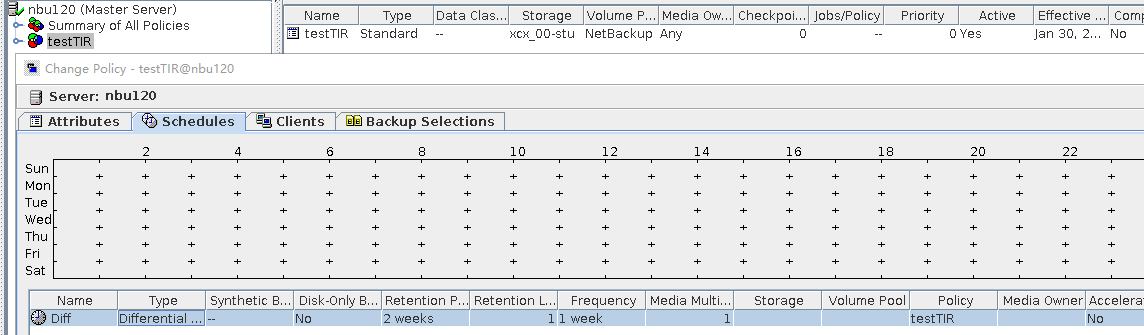

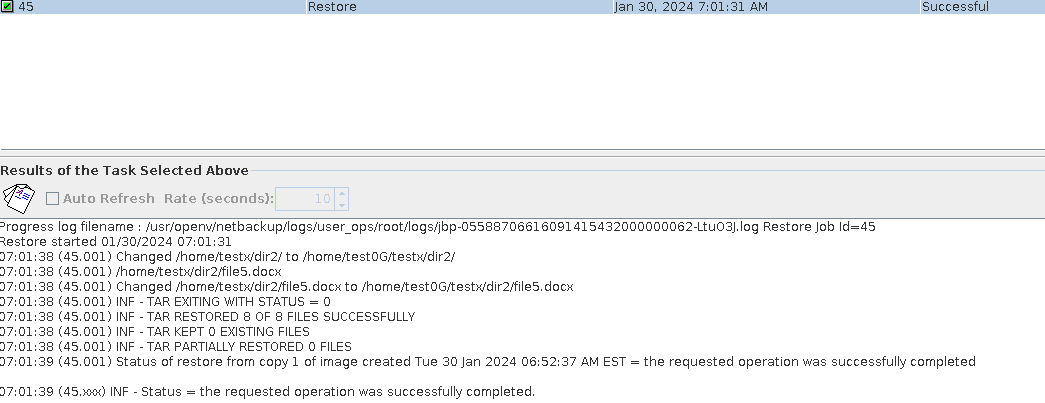

3.1.7 Backup with TIR Move Detect

Objective | To verify that the backup system can back up with TIR Move Detect |

Networking | Networking diagram for verifying functions |

Prerequisites |

|

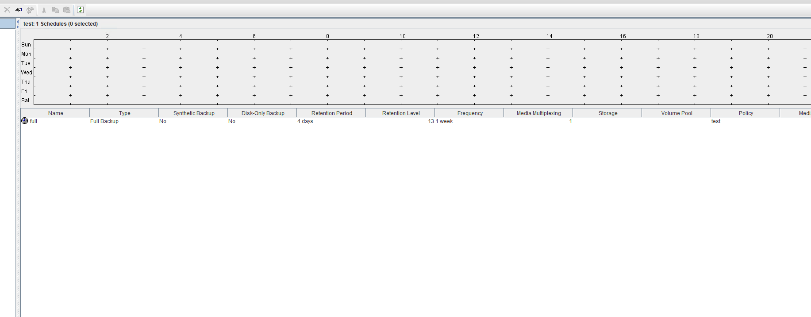

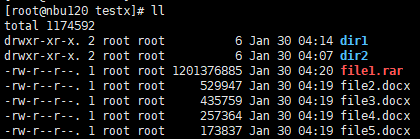

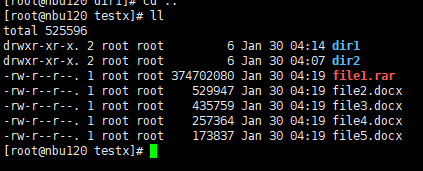

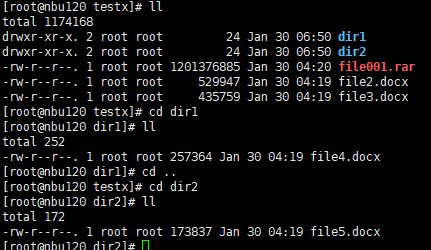

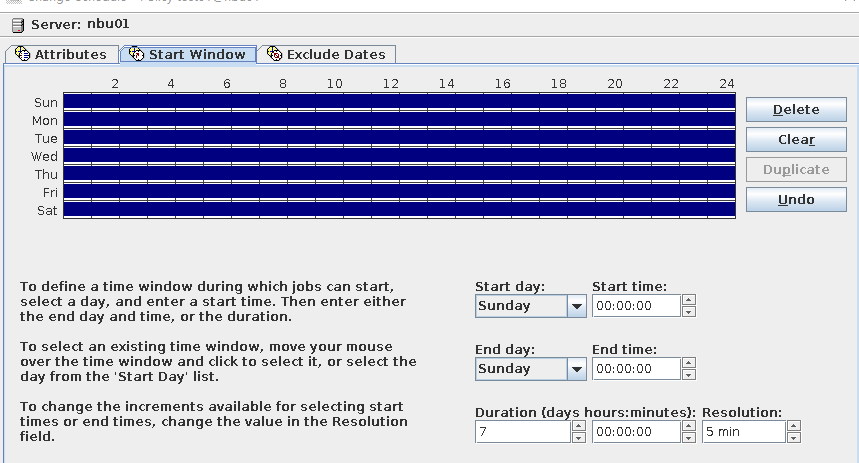

Procedure | 1. When creating a standard backup policy, enable TIR with move detection.

2. Create the folders dir1 and dir2 and the files file1, file2, file3, file4, and file5 in the directory, and perform a full backup.

3. Rename file1 to file001, move file4 to dir1 and file5 to dir2, and perform an incremental backup.

4. Use the TIR copy to perform a full restoration. |

Expected Result |

|

Test Result | 1. After the option is enabled, the policy is successfully created.

2. The full backup is successful.

3. The incremental backup is successful.

4. Data is successfully restored. The restored data consists of file001, file2, and file3, with file4 and file5 stored in dir1 and dir2, respectively. |

Conclusion |

|

Remarks |
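The directory layout used in this test case, and the layout expected after the TIR restore, can be reproduced with a short script. This is an illustrative sketch only: the function names and the `EXPECTED_AFTER_RESTORE` constant are hypothetical, the file contents are placeholders, and it assumes file4 goes to dir1 and file5 to dir2, as the test result states.

```python
from pathlib import Path


def build_initial_tree(root: Path) -> None:
    """Create dir1, dir2 and file1..file5 before the full backup."""
    for name in ("dir1", "dir2"):
        (root / name).mkdir(parents=True, exist_ok=True)
    for i in range(1, 6):
        (root / f"file{i}").write_text(f"payload {i}\n")


def apply_changes(root: Path) -> None:
    """Rename file1 and move file4/file5 before the incremental backup."""
    (root / "file1").rename(root / "file001")
    (root / "file4").rename(root / "dir1" / "file4")
    (root / "file5").rename(root / "dir2" / "file5")


def list_files(root: Path) -> set[str]:
    """Return every regular file under `root` as a POSIX-style relative path."""
    return {p.relative_to(root).as_posix() for p in root.rglob("*") if p.is_file()}


# Layout the restored directory is expected to have according to the test result.
EXPECTED_AFTER_RESTORE = {"file001", "file2", "file3", "dir1/file4", "dir2/file5"}
```

Comparing `list_files(restore_dir)` with `EXPECTED_AFTER_RESTORE` after the full restoration shows whether renamed or moved files reappear at their old paths, which is the behavior move detection is meant to prevent.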

3.1.8 Backup with TIR No Move Detect

Objective | To verify that the backup system can back up with TIR No Move Detect |

Networking | Networking diagram for verifying functions |

Prerequisites |

|

Procedure | 1. When creating a standard backup policy, enable TIR and disable move detection.

2. Create the folders dir1 and dir2 and the files file1, file2, file3, file4, and file5 in the directory, and perform a full backup.

3. Rename file1 to file001, move file4 to dir1 and file5 to dir2, and perform an incremental backup.

4. Use the TIR copy to perform a full restoration. |

Expected Result |

|

Test Result | 1. After the options are set, the policy is successfully created.

2. The full backup is successful.

3. The incremental backup is successful.

4. Data is successfully restored. The restored data consists of file001, file2, and file3, with file4 and file5 stored in dir1 and dir2, respectively.

|

Conclusion | Passed |

Remarks |

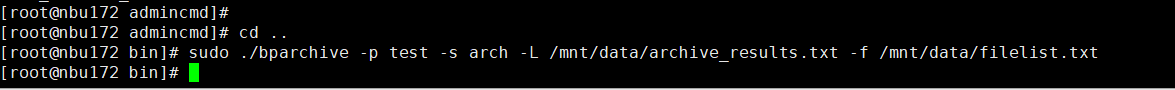

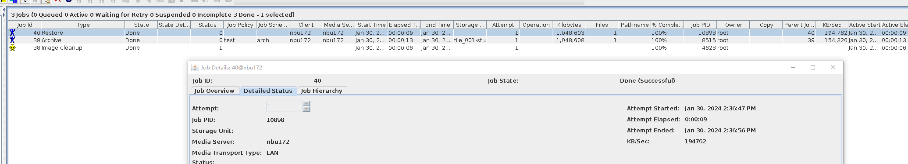

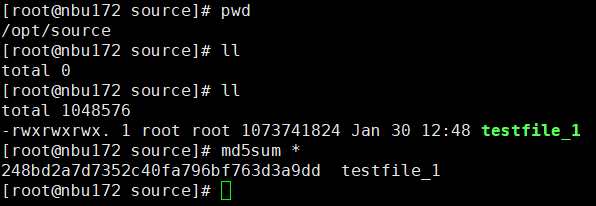

3.1.9 User Archive Backup

Objective | To verify that the backup system can perform the user archive backup |

Networking | Networking diagram for verifying functions |

Prerequisites |

|

Procedure |

|

Expected Result |

|

Test Result | 1. Create a standard backup policy with the backup type set to archive backup.

2. Insert the testfile_1 file and manually perform the archive backup.

3. After the backup is complete, check whether testfile_1 exists.

4. Restore testfile_1 and verify data consistency.

|

Conclusion |

|

Remarks |

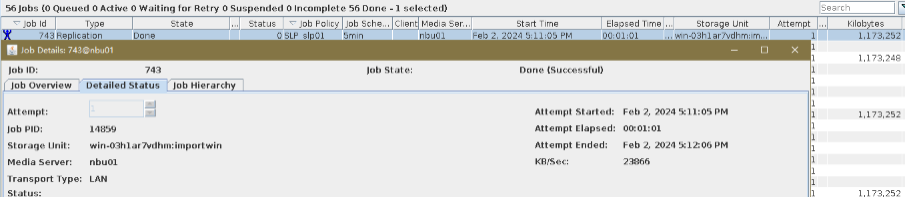

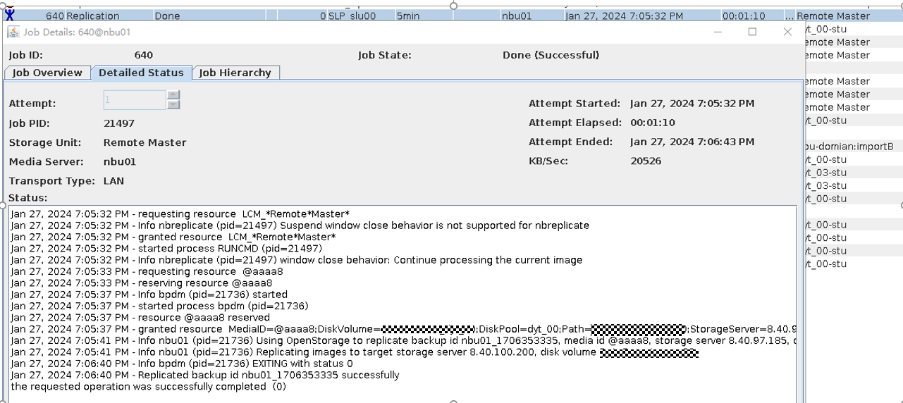

3.2 Replication

3.2.1 Targeted Auto Image Replication

3.2.1.1 Targeted A.I.R. Manual Replication, Target STS Session via Source STS

Objective | To verify that the backup system can configure one-to-one LSU replication relationship; To verify that the backup system can perform backups/restores with one-to-one targeted A.I.R.; To validate persistence and copying of image_def on targeted A.I.R. related images; To verify the targeted A.I.R. manual replication, target STS session via source STS |

Networking | Networking diagram for verifying functions |

Prerequisites |

|

Procedure |

|

Expected Result |

|

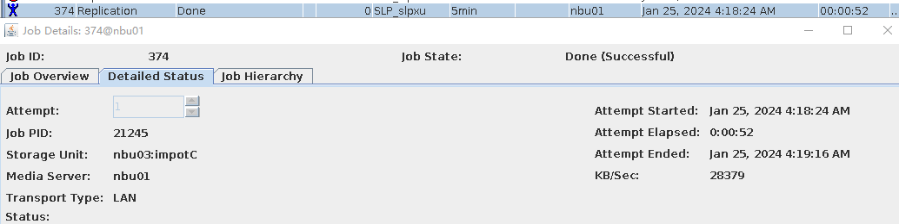

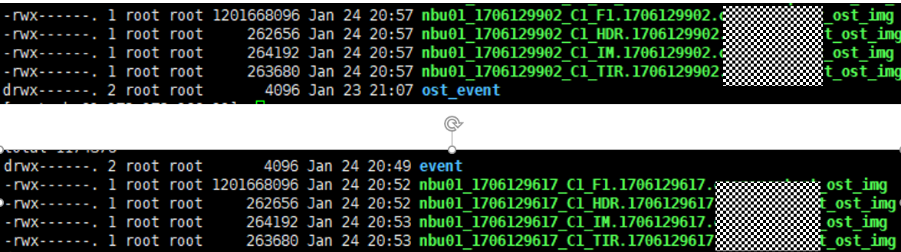

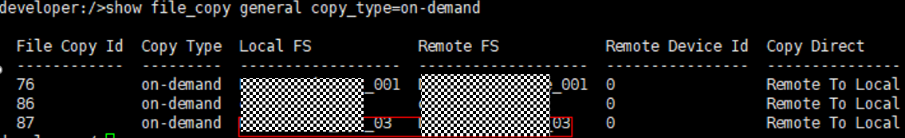

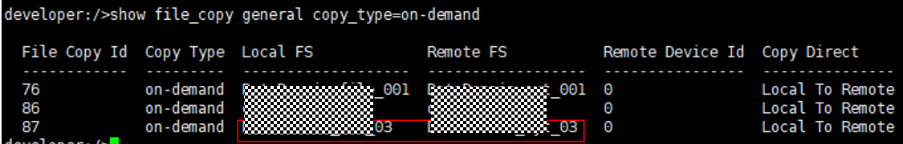

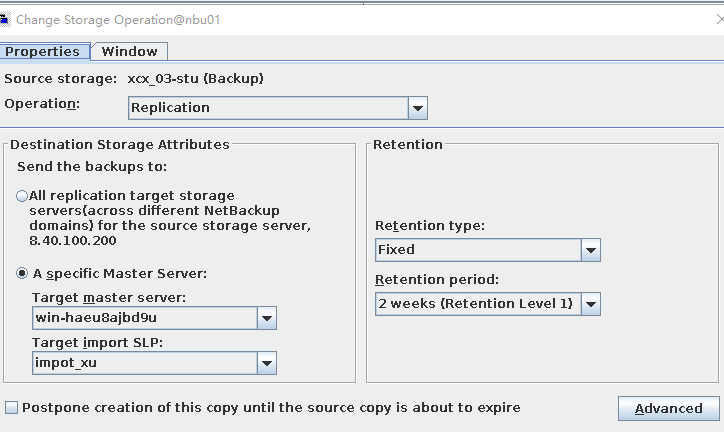

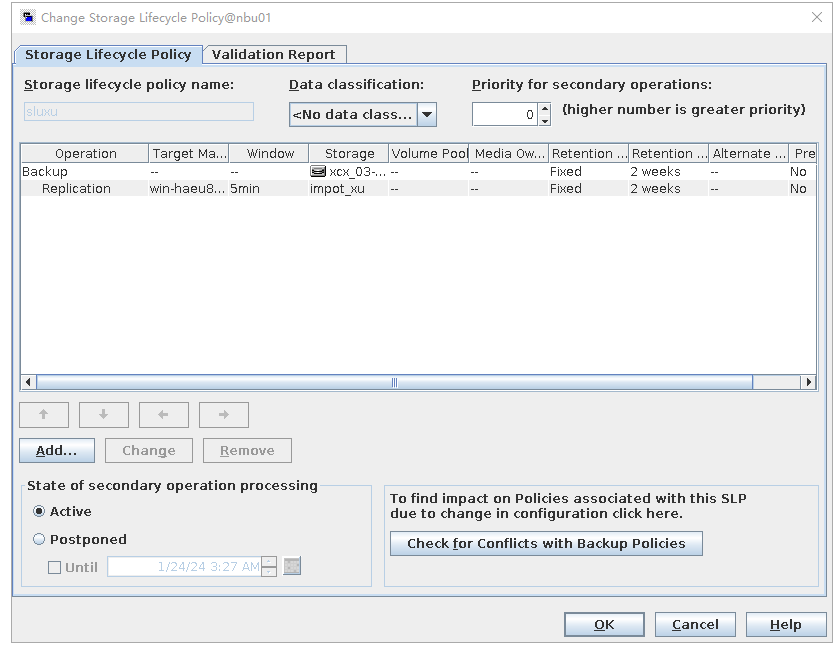

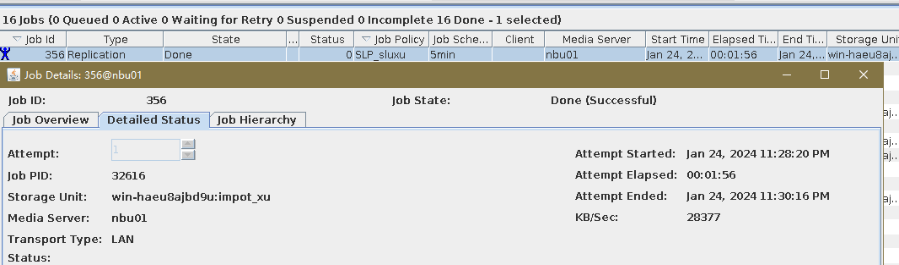

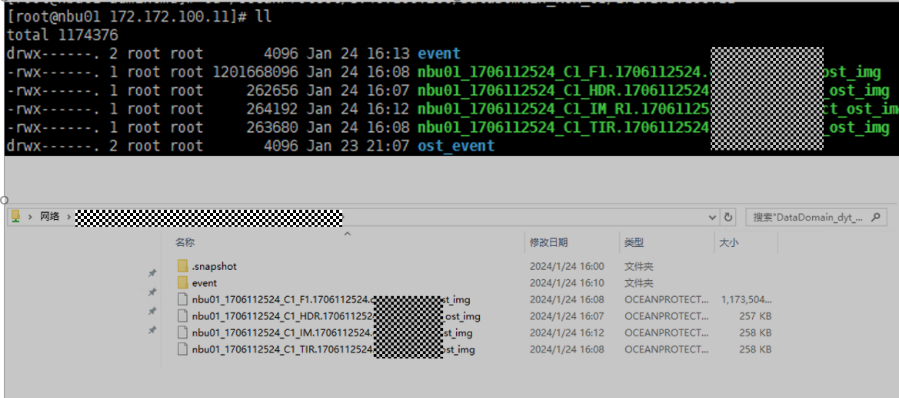

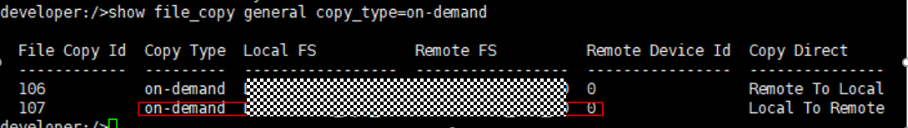

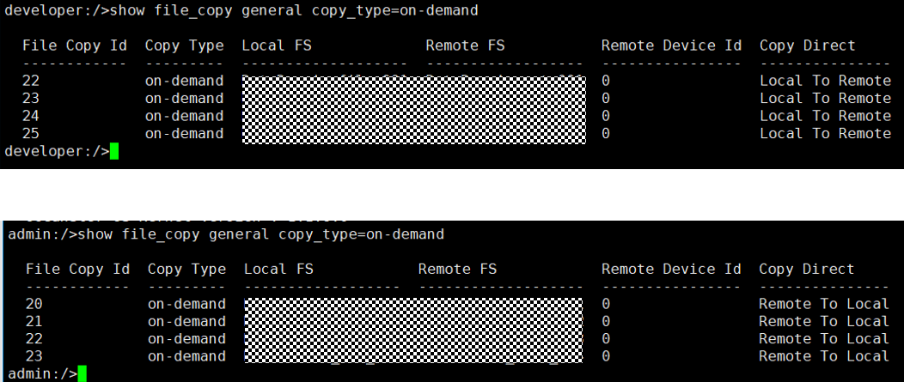

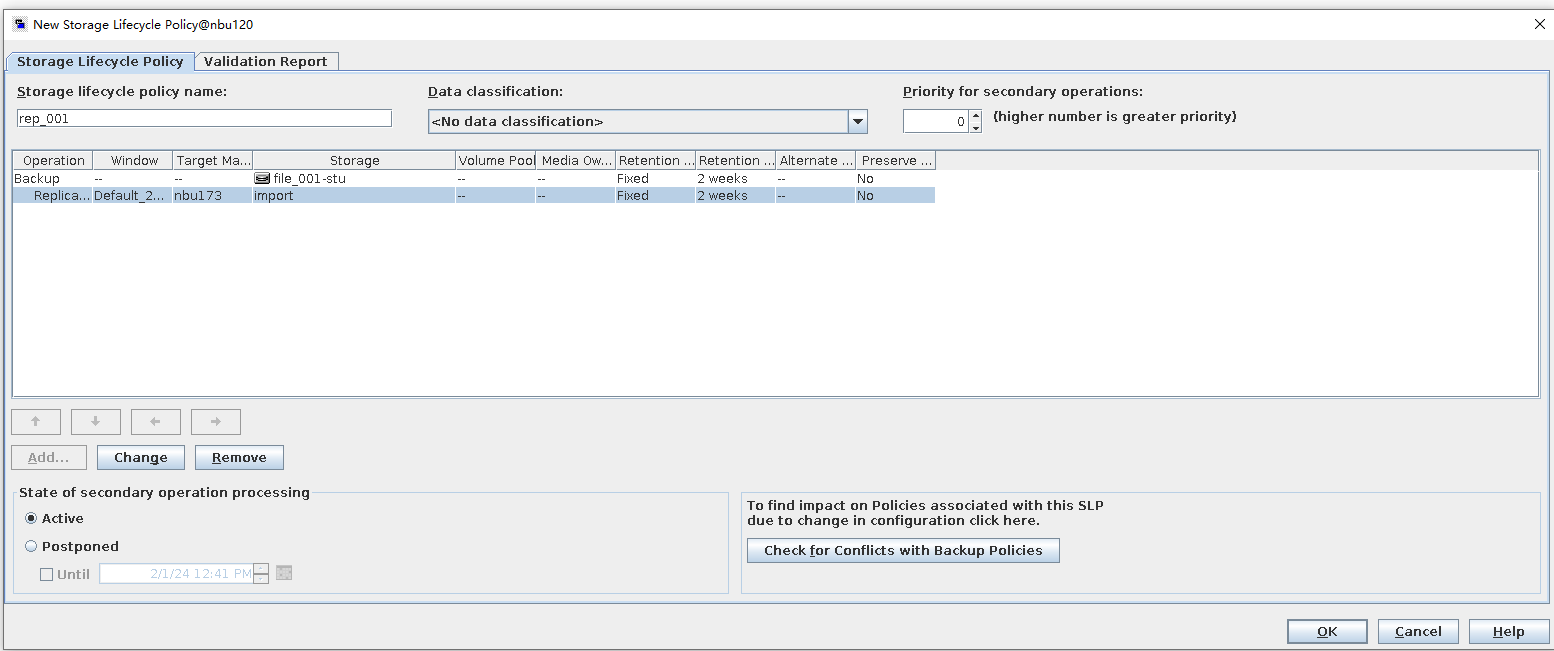

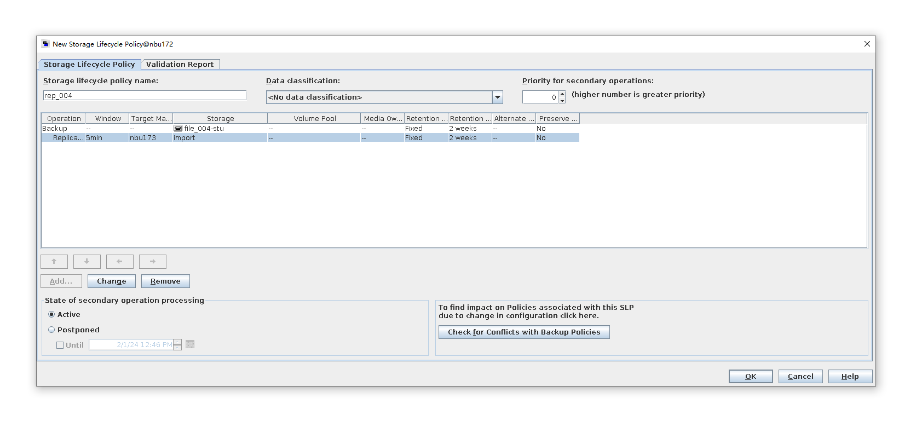

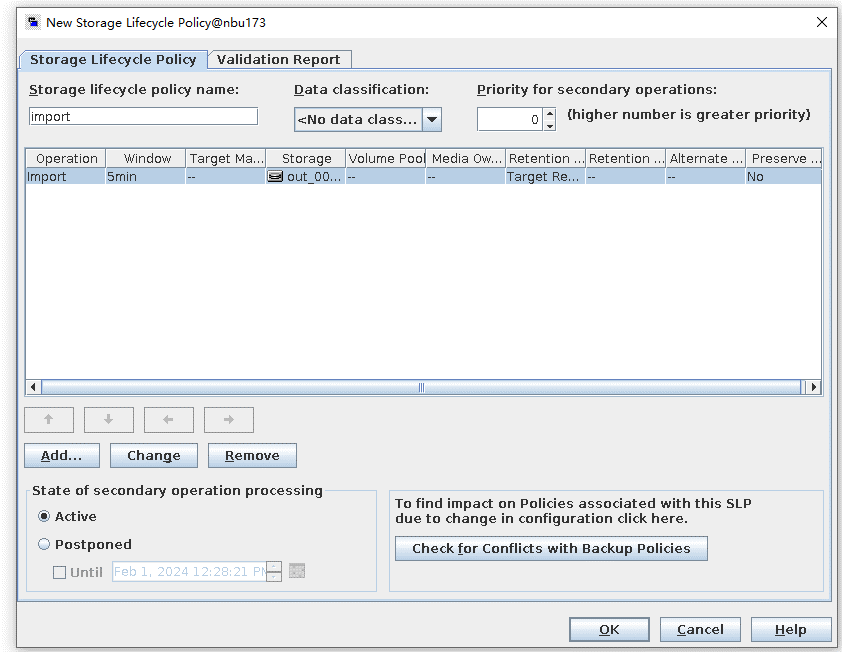

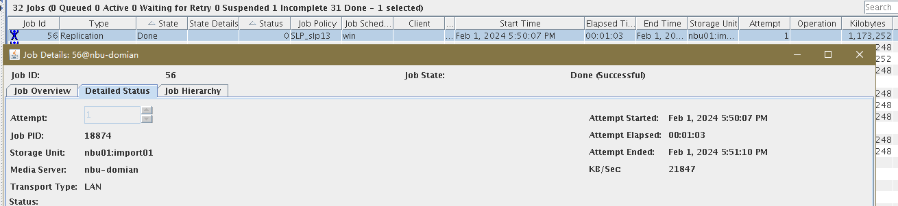

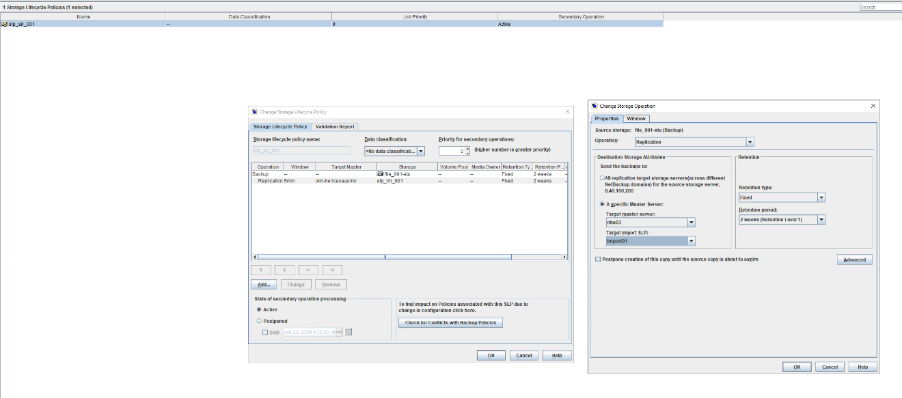

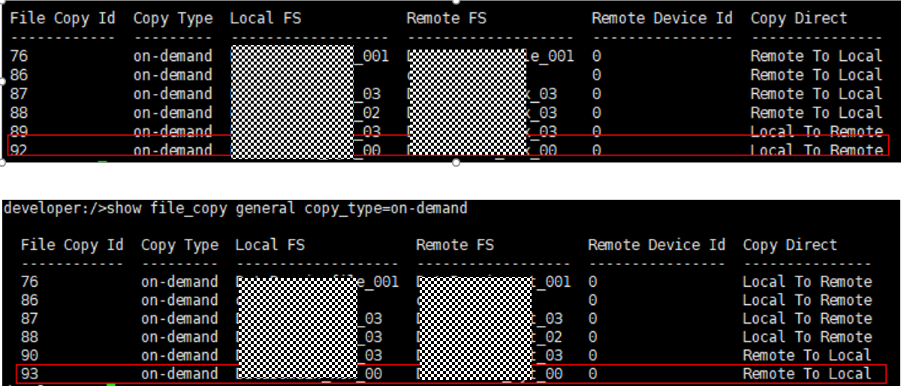

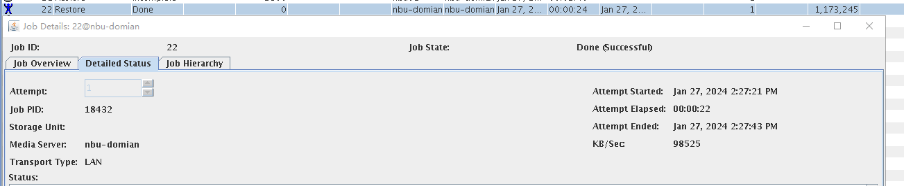

Test Result | 1. Log in to the Master Server as the system administrator.

2. Configure the file replication link between the source Linux LSU and the target Windows LSU (A->B).

3. On the source master server, configure the replication SLP policy, select Replication, and select Target master server. //The configuration is successful.

4. Configure storage units and import policies on each destination end.

6. Set a scheduled backup task and perform the backup.

7. Execute the replication task when the replication period arrives. //The replication is successful. The copy is imported to the specified Master Server on the destination end.

8. Compare the data consistency between primary A and secondary B, and observe the capacity change, link relationship, and bottom-layer resource usage. //The primary/secondary data and capacity are consistent, and the link relationship exists. |

Conclusion |

|

Remarks |

3.2.1.2 Bi-directional Targeted A.I.R. Replication

Objective | To verify that the backup system can perform bi-directional targeted A.I.R. replication |

Networking | Networking diagram for verifying functions |

Prerequisites |

|

Procedure |

|

Expected Result |

|

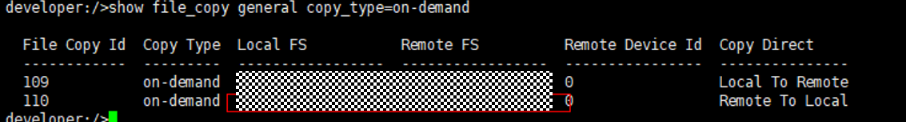

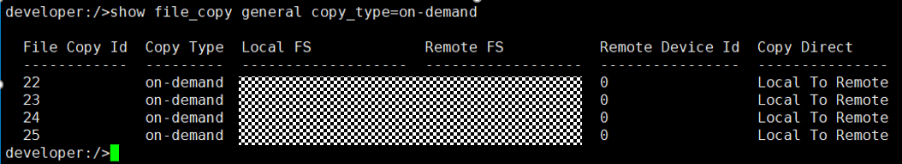

Test Result | 1. Log in to the Master Server as the system administrator.

2. Configure the file replication link relationships between the Linux LSU and the Windows LSU in both directions (A->B and B->A).

3. Configure the replication SLP policy on the master servers of source A and source B. Select Replication and Target master server. //The configuration is successful.

4. Configure the storage unit and import policy on each destination end.

5. Configure a backup policy based on the replication SLP policy on source ends A and B.

6. Set a scheduled backup task and perform the backup.

7. Execute the replication task when the replication period arrives. //The replication is successful. The copy is imported to the specified Master Server at the destination end.

8. Compare the data consistency between the primary and secondary ends of A and B, and observe the capacity change, link relationship, and bottom-layer resource usage. //The primary/secondary data and capacity are consistent, and the link relationship exists. |

Conclusion |

|

Remarks |

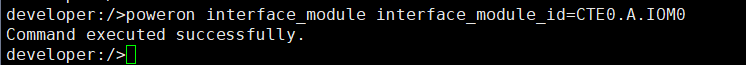

3.2.1.3 Inject NetBackup Failure During Targeted A.I.R. Replication

Objective | To verify that the backup system behaves normally when a NetBackup failure is injected during targeted A.I.R. replication |

Networking | Networking diagram for verifying functions |

Prerequisites |

|

Procedure |

|

Expected Result |

|

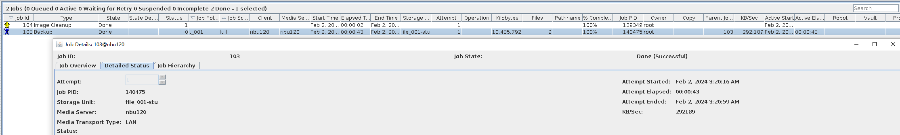

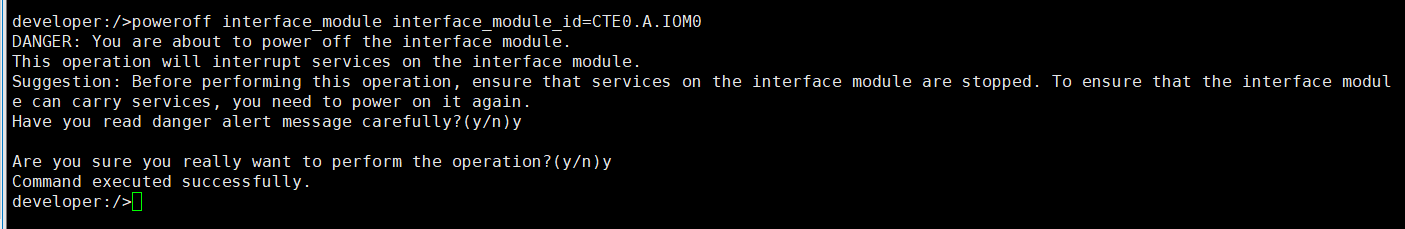

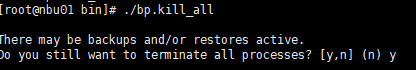

Test Result | 1. Log in to the Master Server as the system administrator.

2. Configure the file replication link relationship (A->B) between the source LSU and a destination LSU.

3. On the source master server, configure the replication SLP policy, select Replication, and select Target master server. //The configuration is successful.

4. Configure storage units and import policies on each destination end.

5. On the source end, configure a backup policy based on the replication SLP policy.

6. Set a scheduled backup task and perform the backup.

7. When the replication period arrives, the replication task is executed. During the replication, power off the interface module where the replication port resides. //Replication failed. The copy remains in the target file system.

8. Power on the interface module. The replication link recovers and data is successfully replicated in the next replication period.

9. Compare the data consistency between primary A and secondary B, and observe the capacity change, link relationship, and bottom-layer resource usage. //The primary/secondary data and capacity are consistent, and the link relationship exists. |

Conclusion |

|

Remarks |

3.2.1.4 Break Implementation Replication Link During Targeted A.I.R. Replication

Objective | To verify that the backup system behaves normally when the implementation replication link is broken during targeted A.I.R. replication |

Networking | Networking diagram for verifying functions |

Prerequisites |

|

Procedure |

|

Expected Result |

|

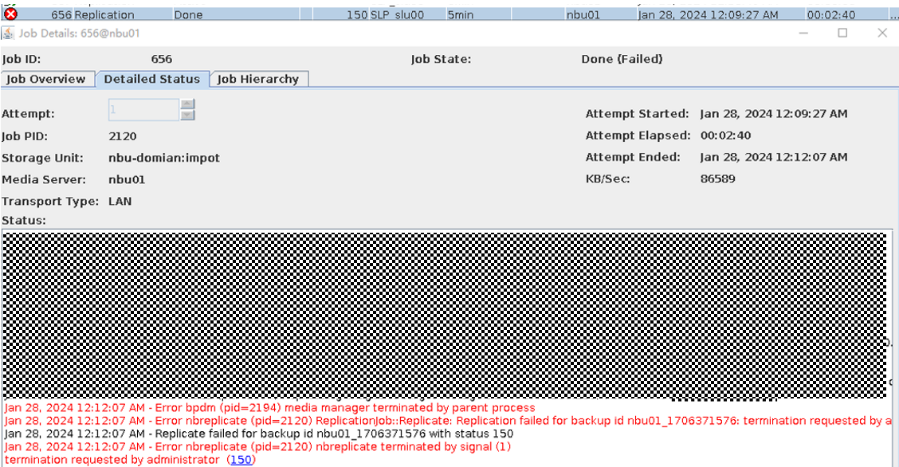

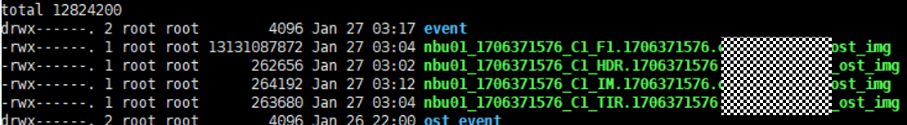

Test Result | 1. Log in to the Master Server as the system administrator.

2. Configure the file replication link relationship (A->B) between the source LSU and a destination LSU.

3. On the source master server, configure the replication SLP policy, select Replication, and select Target master server. //The configuration is successful.

4. Configure storage units and import policies on each destination end.

5. On the source end, configure a backup policy based on the replication SLP policy.

6. Set a scheduled backup task and perform the backup.

7. When the replication period arrives, the replication task is executed. During the replication, restart the NetBackup process. //Replication failed. The copy remains in the target file system.

8. After the NetBackup process restarts, data is successfully replicated in the next replication period.

9. Compare the data consistency between primary A and secondary B, and observe the capacity change, link relationship, and bottom-layer resource usage. //The primary/secondary data and capacity are consistent, and the link relationship exists. |

Conclusion |

|

Remarks |

3.2.1.5 Targeted A.I.R. Replication when Target Data Storage is Full

Objective | To verify that the backup system can perform targeted A.I.R. replication when the target data storage is full; to verify that targeted A.I.R. can import non-existent images |

Networking | Networking diagram for verifying functions |

Prerequisites |

|

Procedure |

|

Expected Result |

|

Test Result | 1. Log in to the Master Server as the system administrator.

2. Configure the file replication link relationship (A->B) between the source Linux LSU and the target Windows LSU. The capacity of the storage LSU on B is less than 10 GB.

3. On the source master server, configure the replication SLP policy, select Replication, and select Target master server. //The configuration is successful.

4. Configure storage units and import policies on each destination end.

5. On the source end, configure a backup policy based on the replication SLP policy and perform the backup. //The backup is successful.

6. After the backup is complete, manually execute the replication task. //Replication failed and an error indicating insufficient capacity is reported. |

Conclusion |

|

Remarks |

3.2.1.6 Trigger Multiple Events for A Single Targeted AIR Image (previous replication succeeded)

Objective | To verify that the backup system can trigger multiple events for a single targeted AIR image (previous replication succeeded) |

Networking | Networking diagram for verifying functions |

Prerequisites |

|

Procedure | 1. Configure the SLP. Site A replicates data to site B within the domain, and then data is transmitted from site B to site C through targeted A.I.R.

2. Manually start the backup task and wait until the replication task is complete.

3. Import and restore data at sites B and C, and verify data consistency.

4. Perform the backup again and verify that the replications are still successful. |

Expected Result |

|

Test Result | 1. The policy is created successfully.

2. The backup task, intra-domain replication, and targeted A.I.R. are successful.

3. All the restoration tasks are successful, and the data is consistent after the restoration.

4. After the backup is performed again, the backup and replications are still successful. |

Conclusion |

|

Remarks |

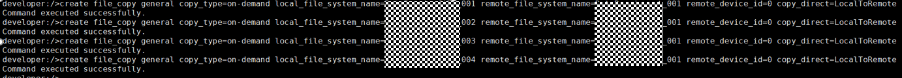

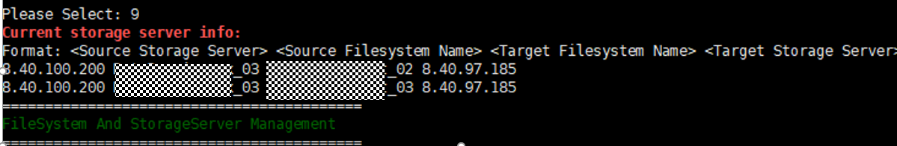

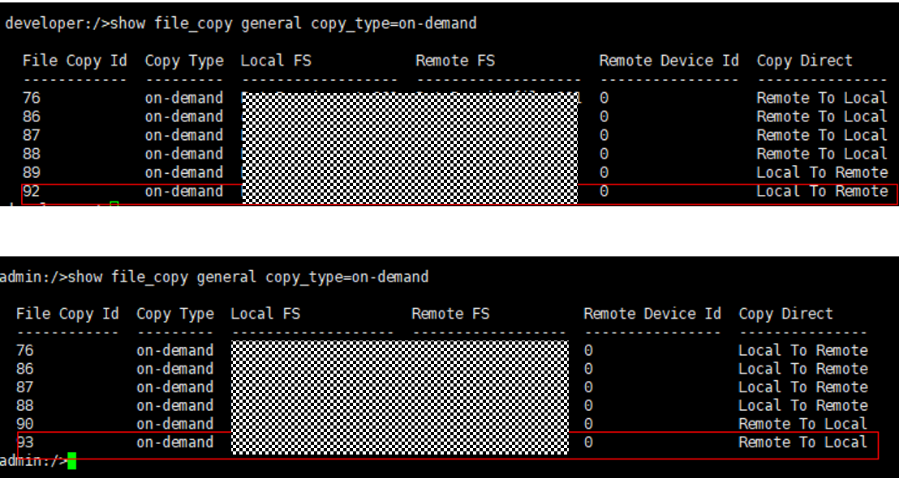

3.2.1.7 Perform Backups/Restores with Fan-out Targeted A.I.R

Objective | To verify that the backup system can configure fan-out (one-to-many) LSU replication; to verify that the backup system can perform backups/restores with fan-out targeted A.I.R. |

Networking | Networking diagram for verifying functions |

Prerequisites |

|

Procedure |

|

Expected Result |

|

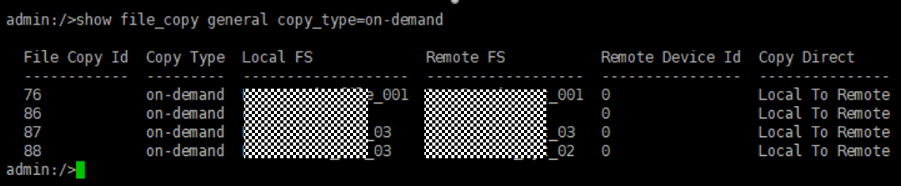

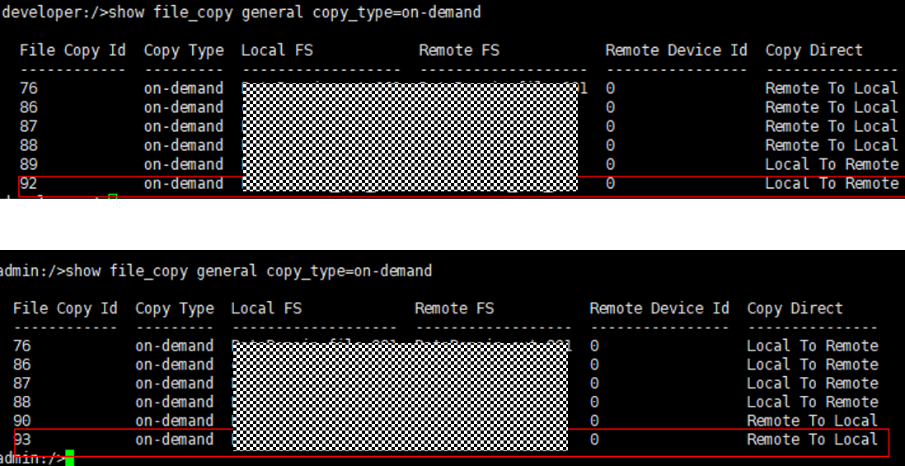

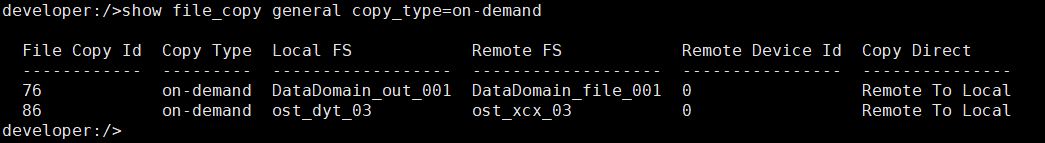

Test Result | 1. Log in to the Master Server as the system administrator.

2. Configure the file replication links between the source LSU and multiple destination LSUs (A->B, A->C, A->D, and A->E).

3. On the source master server, configure the replication SLP policy, select Replication, and select Target master server. //The configuration is successful.

4. Configure the storage unit and import policy on the destination end.

5. On the source end, configure a backup policy based on the replication SLP policy.

6. Set a scheduled backup task and perform the backup.

7. Execute the replication task when the replication period arrives. //The replication is successful. The copy is imported to the specified Master Server at the destination end.

8. Import and restore the secondary copy and verify data consistency. //The data is consistent.

|

Conclusion |

|

Remarks |

3.2.1.8 Perform Backups/Restores with Cascaded Targeted A.I.R

Objective | To verify that the backup system can configure cascaded LSU replication; to verify that the backup system can perform backups/restores with cascaded targeted A.I.R. |

Networking | Networking diagram for verifying functions |

Prerequisites |

|

Procedure |

|

Expected Result |

|

Test Result | 1. Log in to the Master Server as the system administrator.

2. Configure the file replication link relationship between the source LSU and the destination LSUs (A->B->C).

3. On the source master server, configure the replication SLP policy, select Replication, and select Target master server. //The configuration is successful.

4. Configure storage units and import policies on each destination end.

5. On the source end, configure a backup policy based on the replication SLP policy.

6. Set a scheduled backup task and perform the backup.

7. Execute the replication task when the replication period arrives. //The replication is successful. The copy is imported to the specified Master Server at the destination end.

8. Compare the primary/secondary data of A, B, and C, and observe the capacity change, link relationship, and bottom-layer resource usage. //The primary/secondary data and capacity are consistent, and the link relationship exists. |

Conclusion |

|

Remarks |
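The link relationships used in the targeted A.I.R. test cases (one-to-one A->B, fan-out A->B/C/D/E, cascaded A->B->C, and bi-directional A<->B) can be written down as directed edges, which gives a checklist of the sites where a copy should be imported and verified. The sketch below is purely illustrative: `replication_order` and the topology constants are hypothetical names, and it models only the link graph, not any NetBackup or OceanProtect behavior.

```python
from collections import deque


def replication_order(links, source):
    """Breadth-first list of sites downstream of `source` that should receive a copy."""
    downstream = {}
    for src, dst in links:
        downstream.setdefault(src, []).append(dst)

    order, seen, queue = [], {source}, deque([source])
    while queue:
        site = queue.popleft()
        for nxt in downstream.get(site, []):
            if nxt not in seen:  # guards against loops in bi-directional setups
                seen.add(nxt)
                order.append(nxt)
                queue.append(nxt)
    return order


# Topologies named in the test cases, expressed as (source, destination) pairs.
FAN_OUT = [("A", "B"), ("A", "C"), ("A", "D"), ("A", "E")]
CASCADE = [("A", "B"), ("B", "C")]
BIDIRECTIONAL = [("A", "B"), ("B", "A")]
```

For example, `replication_order(CASCADE, "A")` lists B before C, matching the order in which copies arrive in a cascaded setup, while `replication_order(FAN_OUT, "A")` lists every destination that needs an import and restore check.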

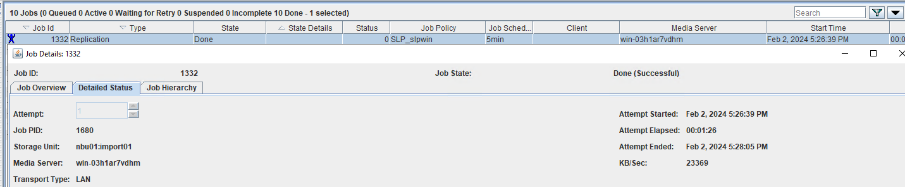

3.2.1.9 OST – Targeted A.I.R [ Backup – Duplication – Replication – Import from Replica]

Objective | To verify that the backup system can perform targeted A.I.R. in the following orders: [Backup – Duplication – Replication – Import from Replica] and [Backup – Duplication – Duplication] |

Networking | Networking diagram for verifying functions |

Prerequisites |

|

Procedure |

|

Expected Result |

|

Test Result | 1. Configure the SLP. Site A replicates data to site B within the domain, and then site B replicates data to site C within the domain.

2. Manually start the backup task and wait until the replication task is complete.

3. Import and restore data at sites B and C, and verify data consistency.

|

Conclusion |

|

Remarks |

3.2.1.10 OST – Targeted A.I.R [ Backup – Replication – Import from Replica]

Objective | To verify that the backup system can perform targeted A.I.R. in the following order: [Backup – Replication – Import from Replica] |

Networking | Networking diagram for verifying functions |

Prerequisites |

|

Procedure |

|

Expected Result |

|

Test Result | 1. Log in to the Master Server as the system administrator.

2. Configure the file replication link relationship between the Windows source LSU and the Linux target LSU (A->B).

3. On the source master server, configure the replication SLP policy, select Replication, and select Target master server. //The configuration is successful.

4. Configure the storage unit and import policy on each destination end. |

Conclusion |

|

Remarks |

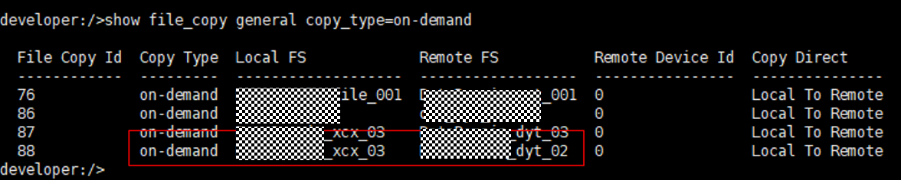

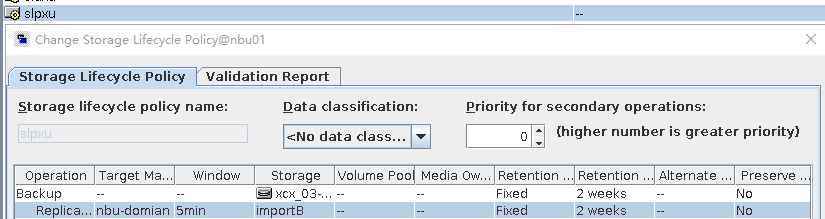

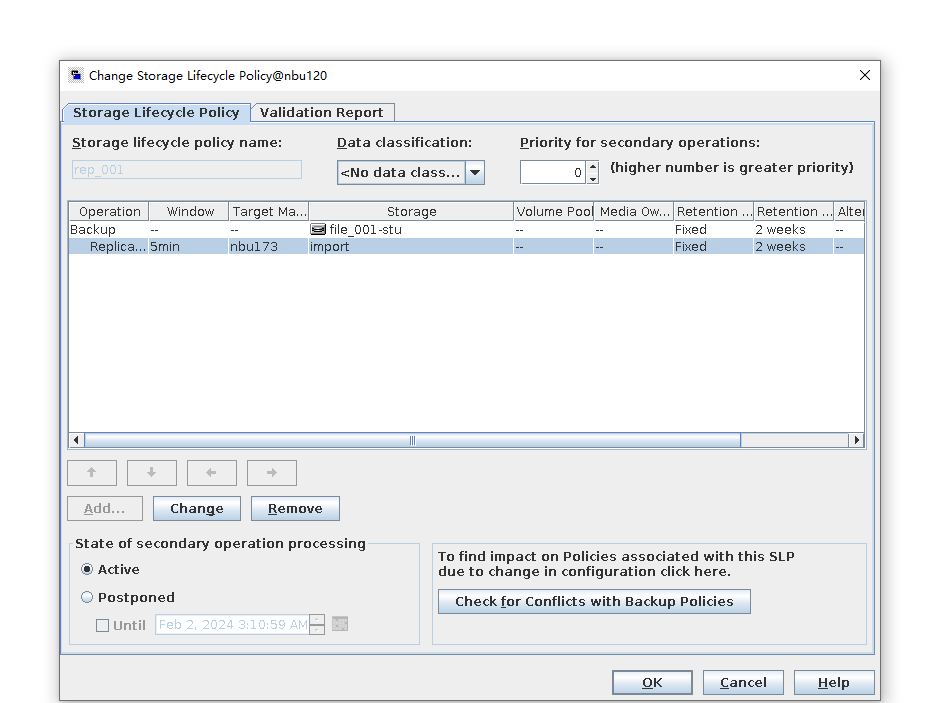

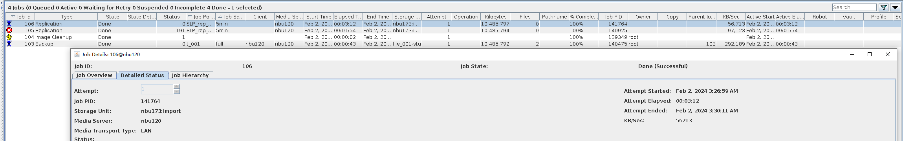

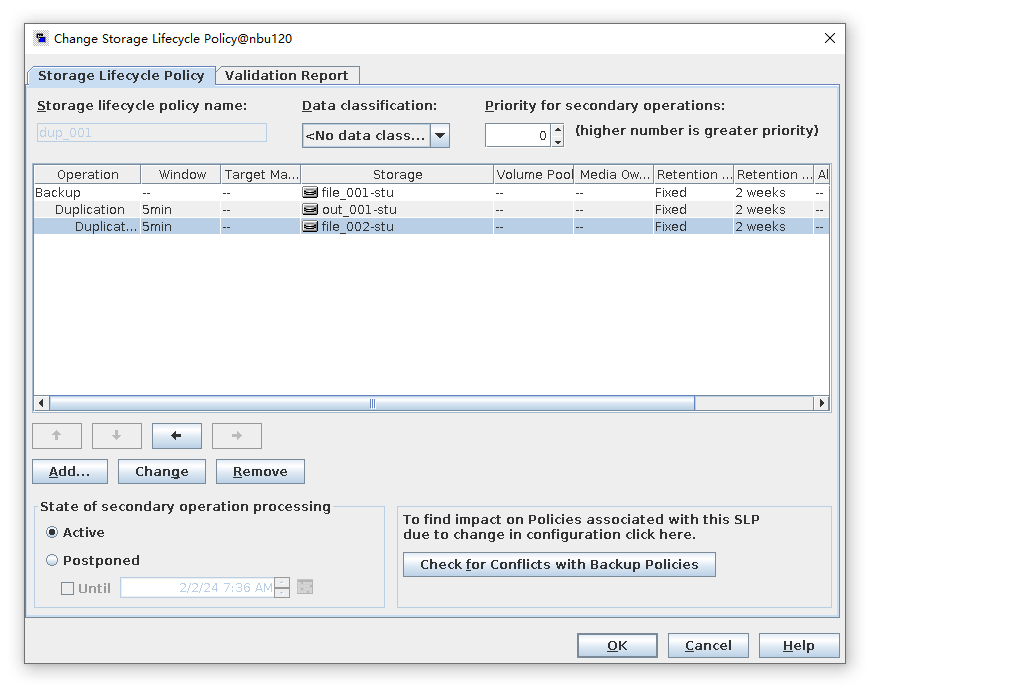

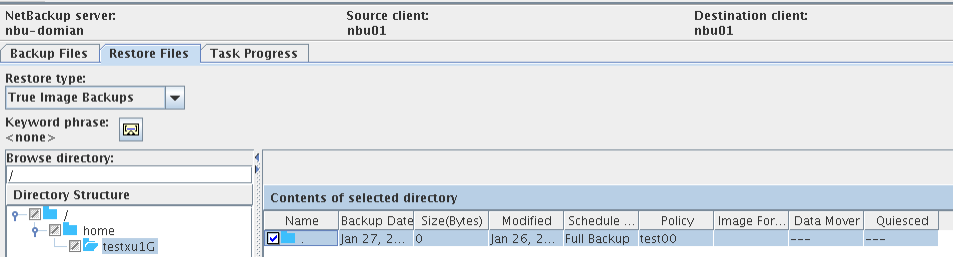

3.2.2 Optimized Duplication

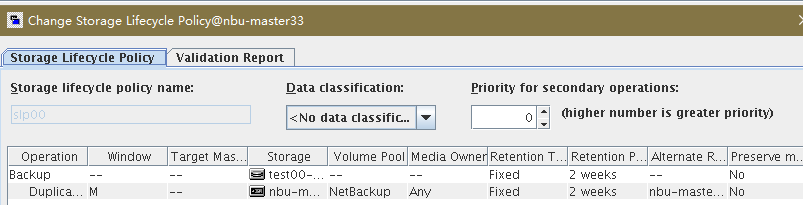

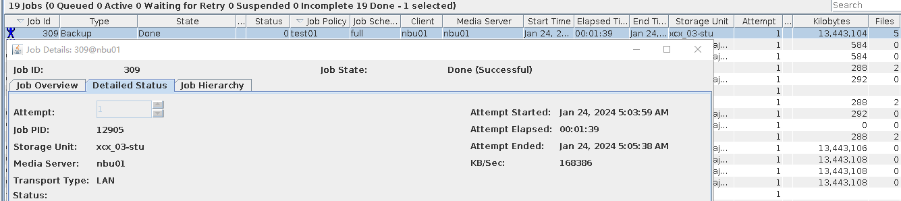

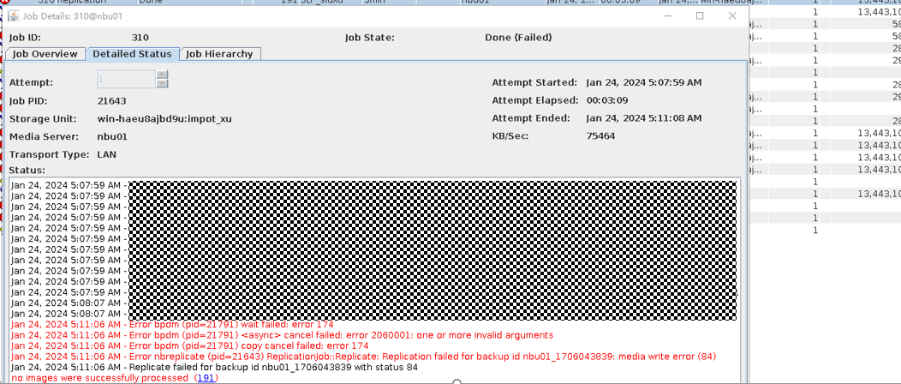

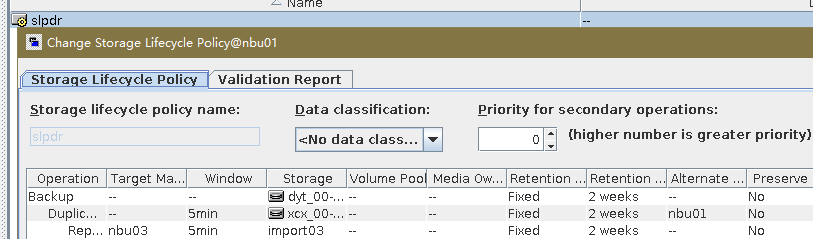

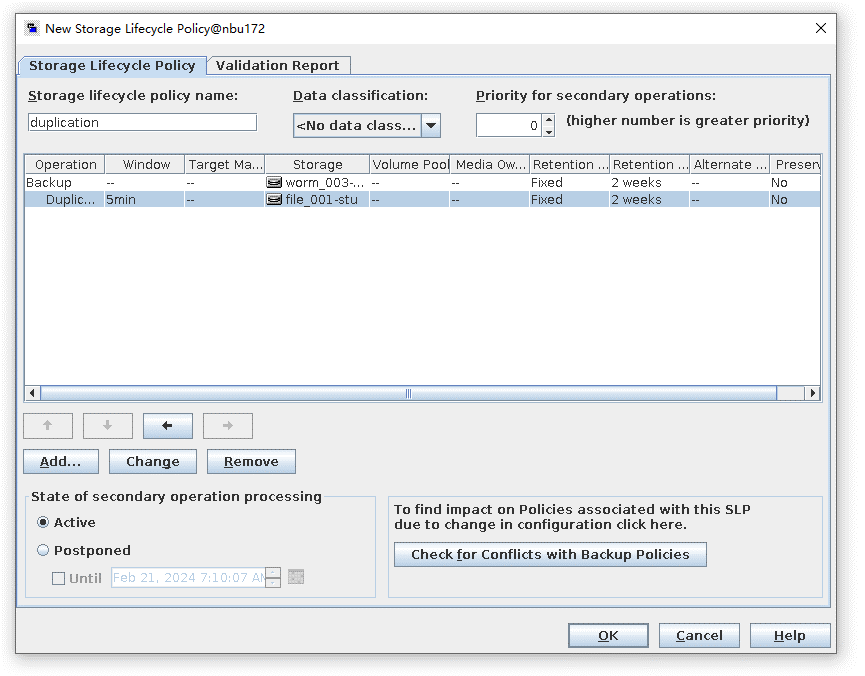

3.2.2.1 OST – Backup and Restore Using Optimized Duplication-Enabled Storage Lifecycle Policy

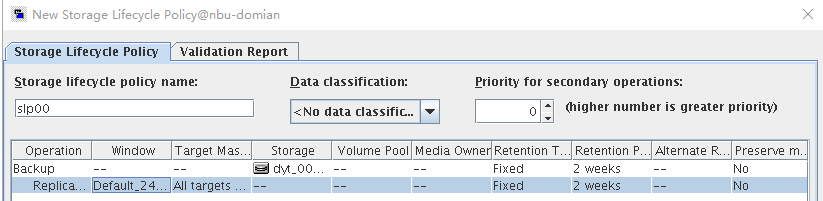

Objective | To verify that the backup system can back up using an optimized duplication-enabled storage lifecycle policy. To verify that the backup system can back up with true image restore (TIR) using an optimized duplication-enabled storage lifecycle policy. To verify that the backup system can restore an optimized duplicated image. To verify that the backup system can restore a TIR-enabled optimized duplicated image. |

Networking | Networking diagram for verifying functions |

Prerequisites |

|

Procedure |

|

Expected Result |

|

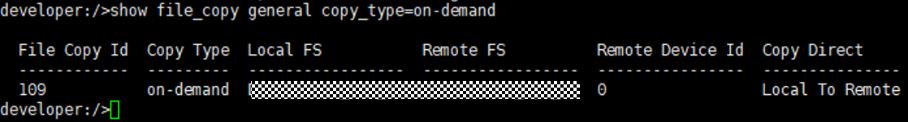

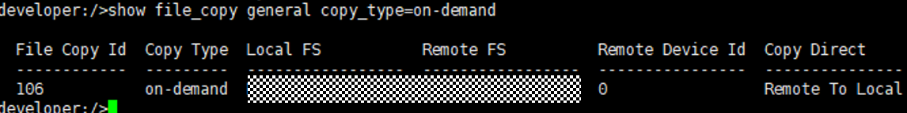

Test Result | 1. Log in to the Master Server as the system administrator.

2. Configure the file replication link relationship (A->B) between the source LSU and the destination LSU.

3. On the source master server, configure the replication SLP and select Common Replication. //The configuration is successful.

4. Configure storage units on each destination end.

5. On the source end, configure a backup policy based on the replication SLP (TIR is enabled for the backup policy).

6. Set a scheduled backup task and perform the backup.

7. When the replication period arrives, the replication task is executed. //The replication is successful. The copy is imported to the specified Master Server on the destination end.

8. Import a copy from the secondary end and use the TIR image to restore the data to verify data consistency. //Restored successfully. |

Conclusion |

|

Remarks |

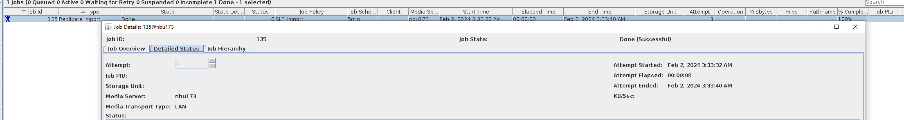

3.3 Accelerator

3.3.1 Accelerator

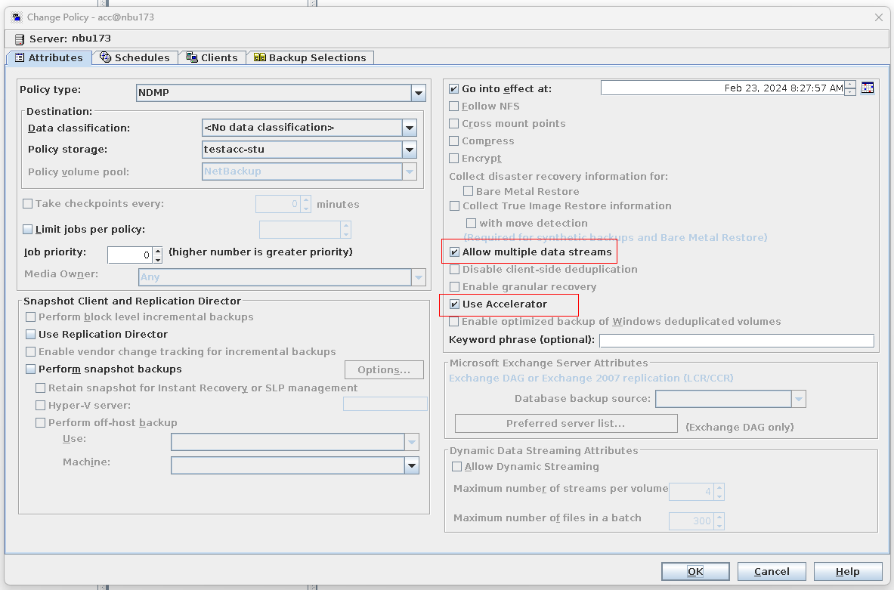

3.3.1.1 OST – Confirm STS Functionality and Performance with Accelerator

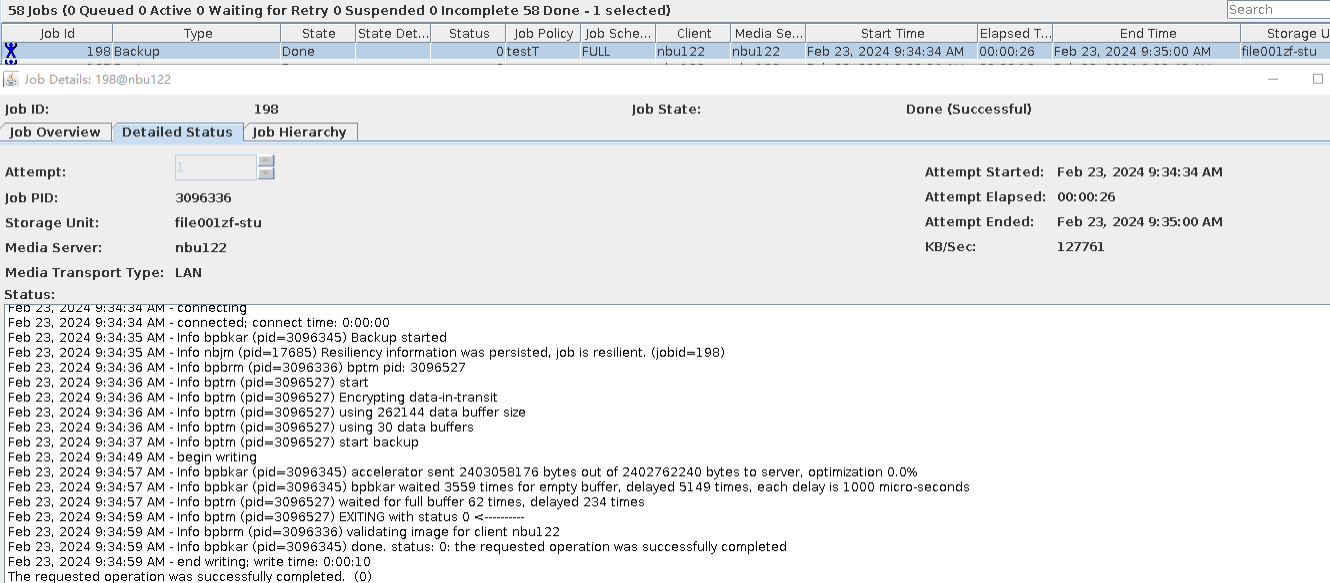

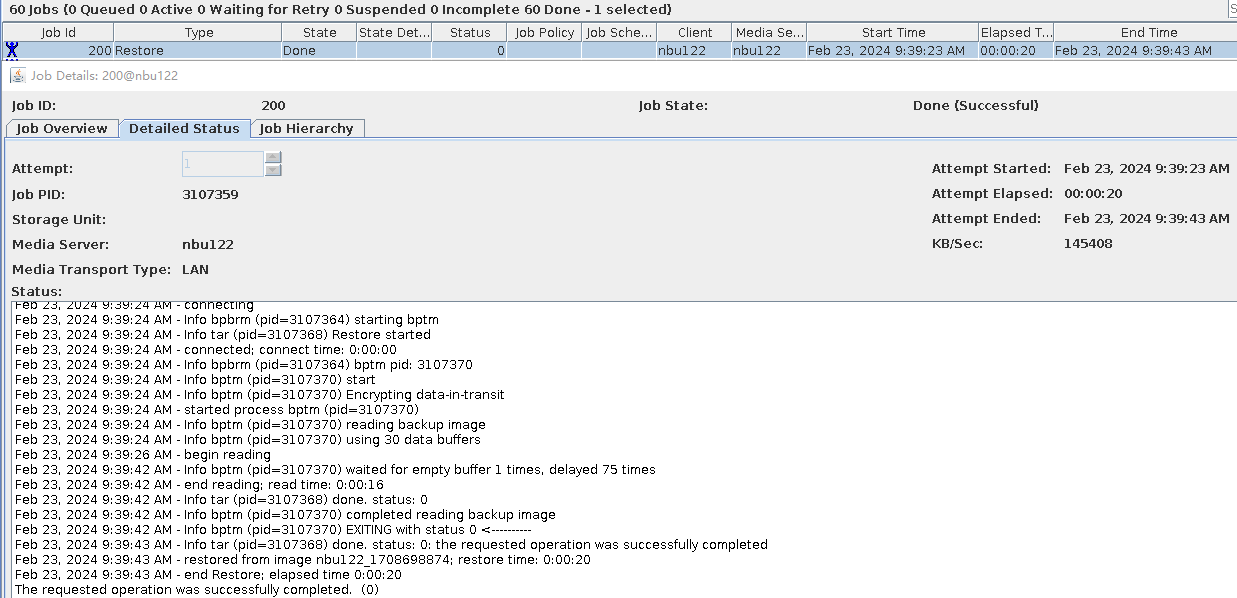

Objective | To verify that the backup system can confirm STS functionality and performance with accelerator, with accelerator and multistreaming, with accelerator and true image restore, and with accelerator for INC backups |

Networking | Networking diagram for verifying functions |

Prerequisites |

|

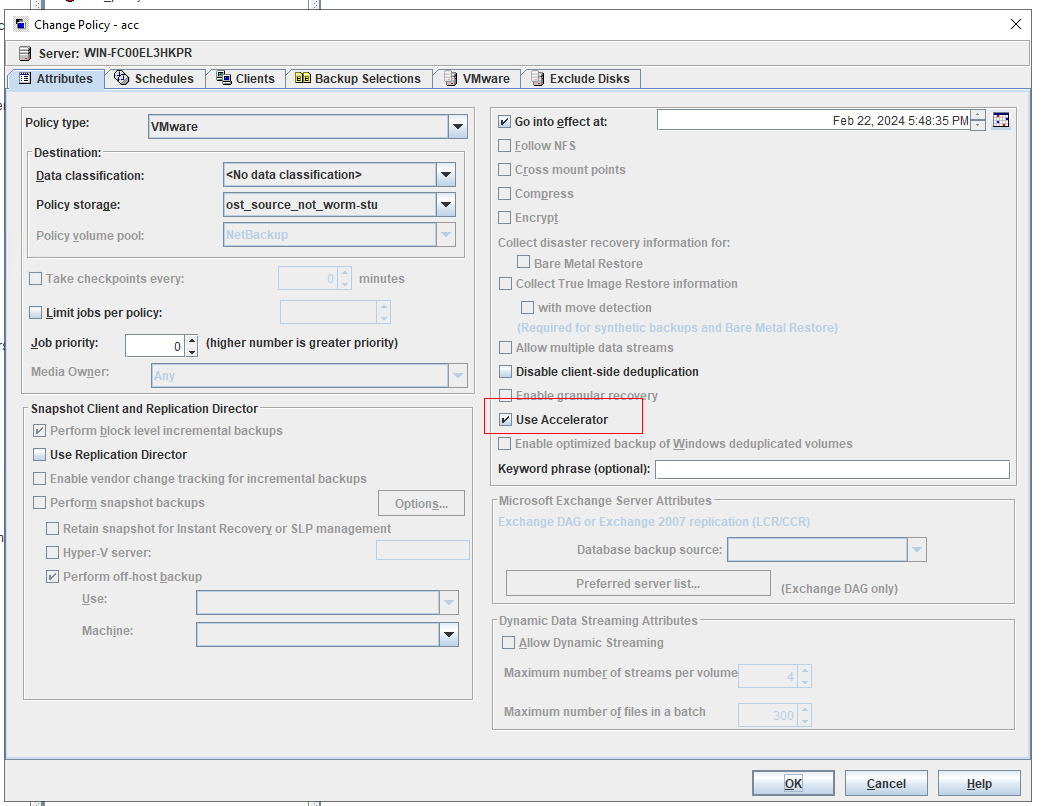

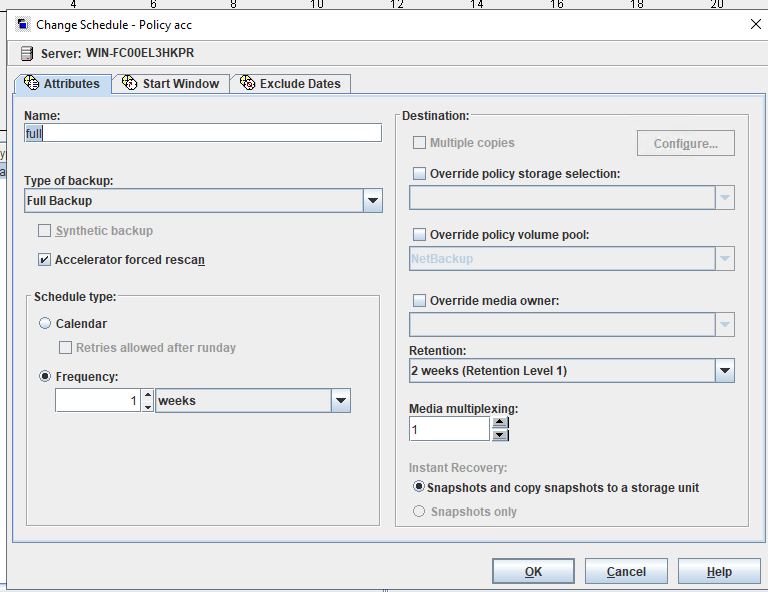

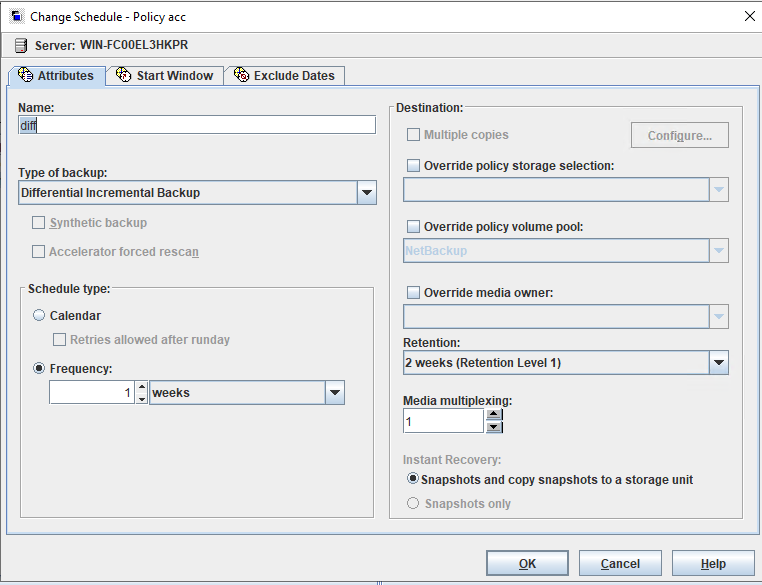

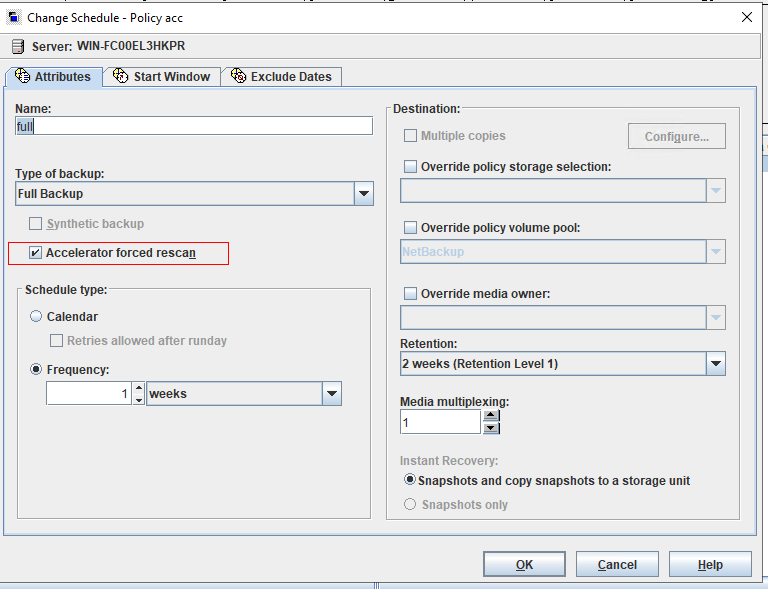

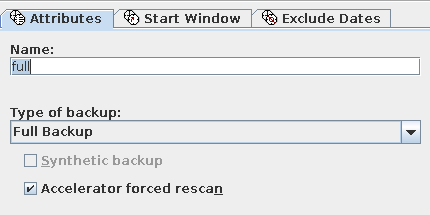

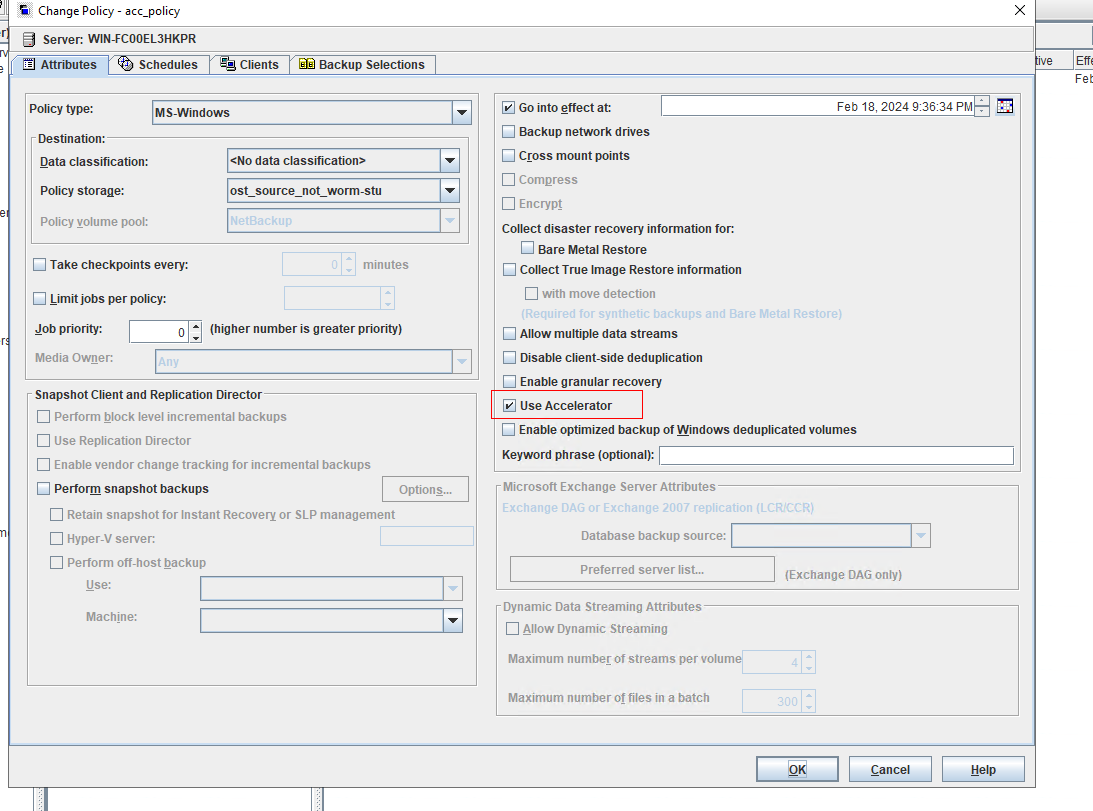

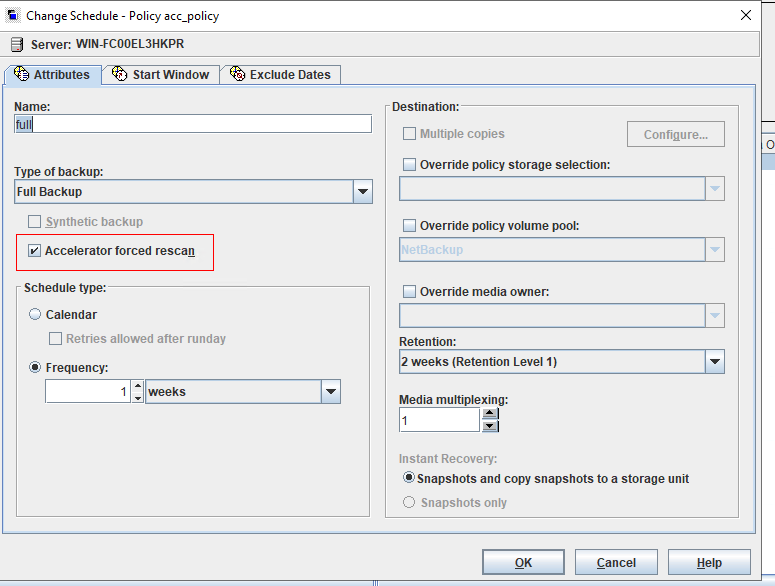

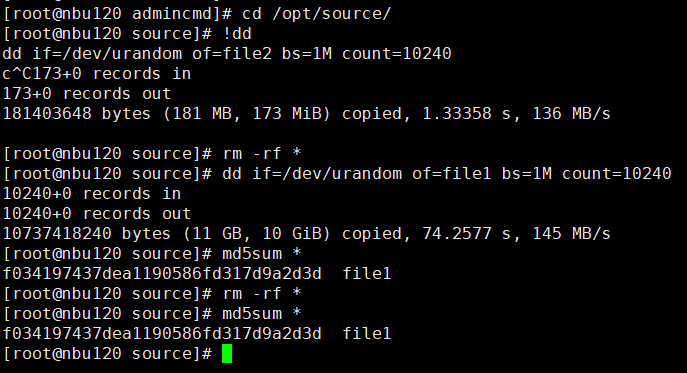

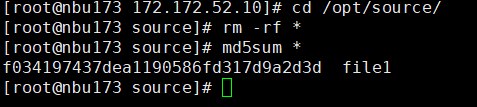

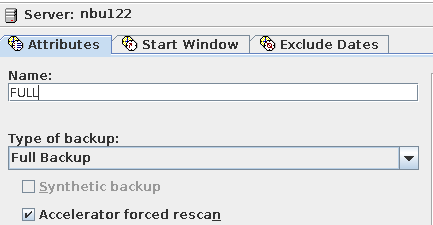

Procedure | 1. Create a storage server, disk pool, storage unit, and policy. Enable the accelerator, TIR, and multistreaming functions.

2. Insert the 1 GB file testfile_1 into the file system of the active node and perform a differential incremental backup.

3. Perform TIR restoration and verify the data consistency of testfile_1.

4. Insert the 1 GB file testfile_2 into the file system of the host and perform a synthetic full backup.

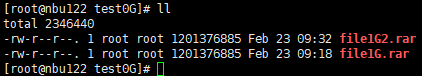

5. Perform standard restoration and verify the data consistency of testfile_1 and testfile_2. |

Expected Result |

|

Test Result | 1. The storage server, disk pool, and storage unit are created successfully.

2. The TIR incremental backup is successful.

3. The TIR data is restored successfully and the data is consistent.

4. The accelerated backup is successful.

5. The standard restoration is successful, and the data is consistent after the restoration. |

Conclusion |

|

Remarks |
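The data consistency checks in this case compare each test file before backup with the same file after restoration. One common way to do this is to compare cryptographic digests. The following is a minimal Python sketch under that assumption: the report does not state which comparison tool was used, and `sha256_of` and `is_consistent` are hypothetical helper names.

```python
import hashlib


def sha256_of(path, chunk_size=1024 * 1024):
    """Return the SHA-256 hex digest of a file, read in chunks to bound memory use."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        # iter() with a sentinel stops when read() returns an empty bytes object.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_consistent(original_path, restored_path):
    """Return True if the original and restored files have identical content."""
    return sha256_of(original_path) == sha256_of(restored_path)
```

For example, `is_consistent("testfile_1", "restore/testfile_1")` would compare the source file with its restored copy. The restore path here is a placeholder. Reading in chunks keeps memory use flat for the 1 GB test files.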

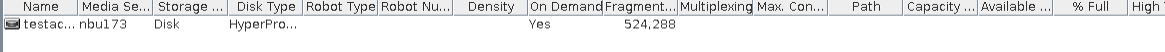

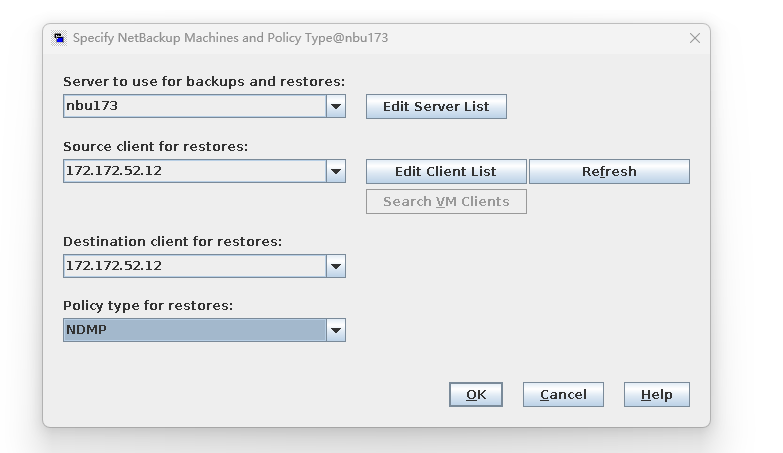

3.3.1.2 Confirm STS Functionality with Accelerator and Multistreaming for NDMP

Objective | To verify that the backup system can confirm STS functionality and performance with simple accelerator for NDMP. To verify that the backup system can confirm STS functionality with accelerator and multistreaming for NDMP. |

Networking | Networking diagram for verifying functions |

Prerequisites |

|

Procedure |

|

Expected Result |

|

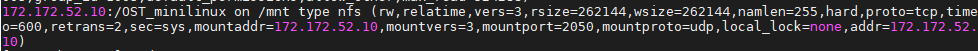

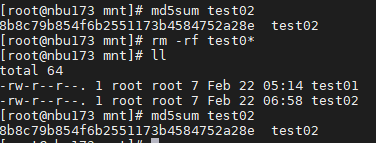

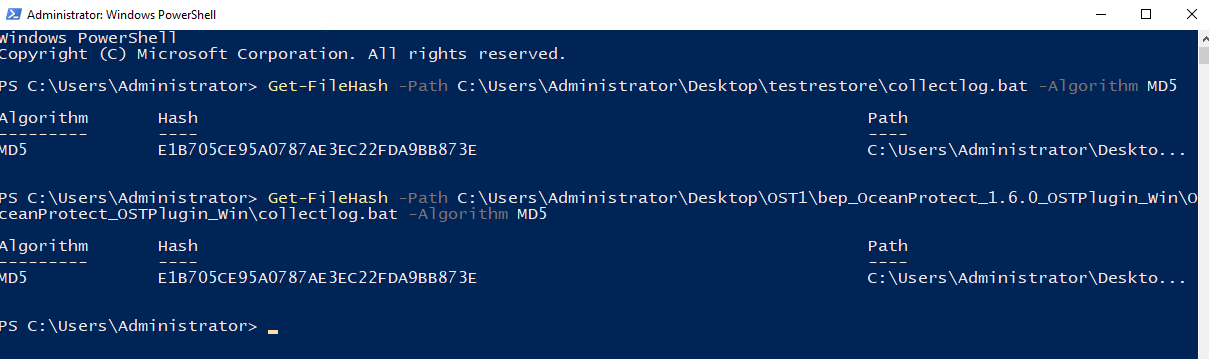

Test Result | 1. Create a storage server, disk pool, storage unit, and policy (enable the accelerator and the multistreaming function).

2. Insert data testfile_1 into the NDMP and perform incremental backup.

3. Insert data testfile_2 into the NDMP and perform the accelerated backup.

4. Restore data and verify the data consistency of testfile_1 and testfile_2. Mount the corresponding file system. //Test01 and Test02 are restored successfully. |

Conclusion |

|

Remarks |

3.3.1.3 Confirm STS Functionality with Accelerator and VMware

Objective | To verify that the backup system can confirm STS functionality with accelerator and VMware |

Networking | Networking diagram for verifying functions |

Prerequisites |

|

Procedure |

|

Expected Result |

|

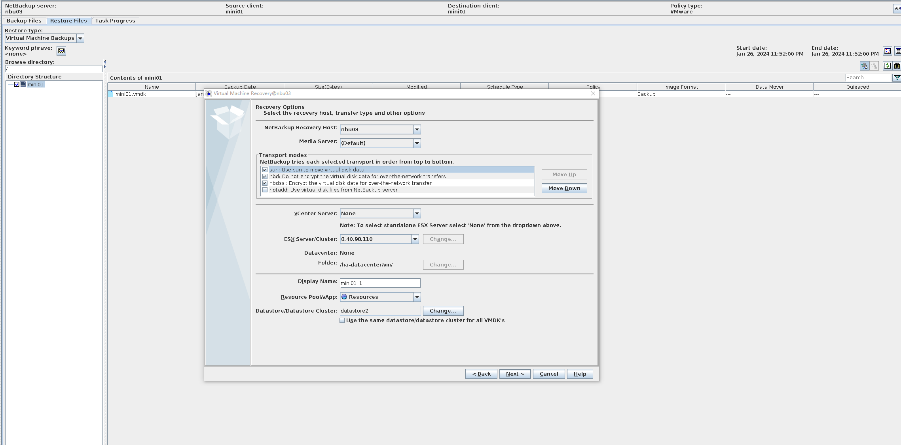

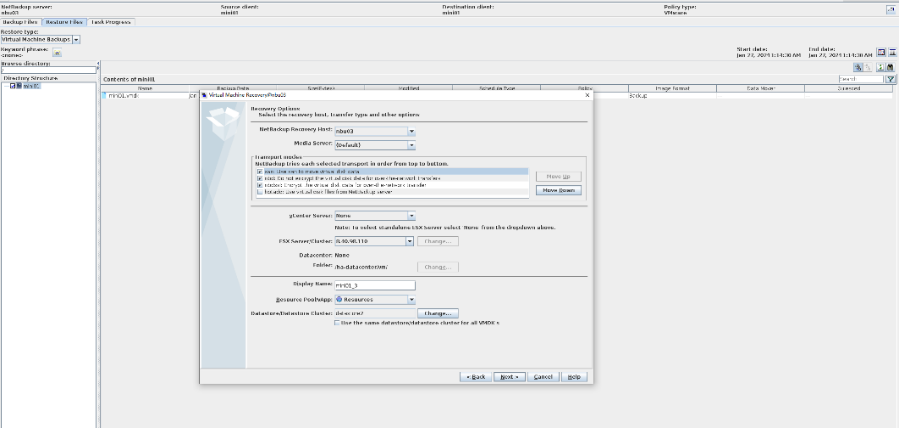

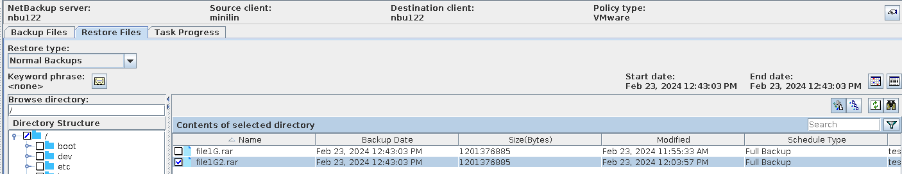

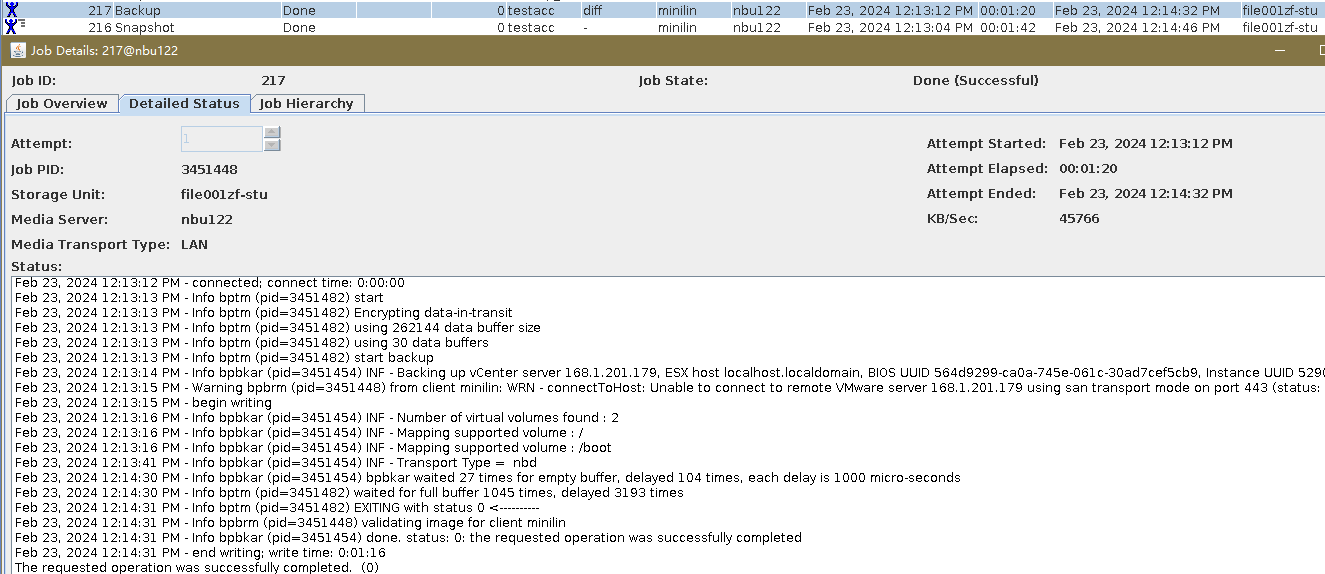

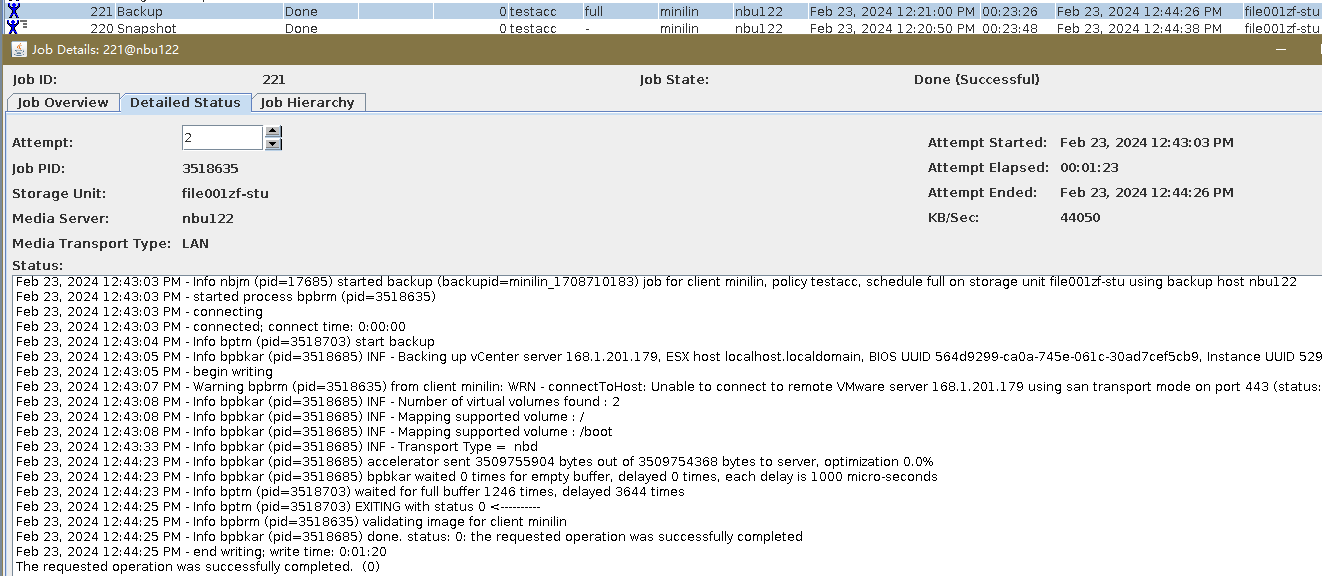

Test Result | 1. Create a storage server, disk pool, storage unit, and policy (enabling the accelerator).

2. Insert the 1 GB file testfile_1 into the VM and perform incremental backup.

3. Insert the 1 GB file testfile_2 into the VM and perform accelerated backup.

4. Restore data and verify the data consistency of testfile_1 and testfile_2.

|

Conclusion |

|

Remarks |

3.3.1.4 Confirm STS Functionality and Performance with Accelerator and GRT

Objective | To verify that the backup system can confirm STS functionality and performance with accelerator and GRT |

Networking | Networking diagram for verifying functions |

Prerequisites |

|

Procedure | 1. Create a storage server, disk pool, storage unit, and policy (enabling the accelerator).

2. Insert the 1 GB file testfile_1 into the VM and perform full backup.

3. Insert the 1 GB file testfile_2 into the VM and perform incremental backup.

4. Modify the 1 GB file testfile_2 on the VM and perform accelerated backup.

5. Perform granular restoration, restore only testfile_2, and verify data consistency. |

Expected Result |

|

Test Result | 1. The storage server, disk pool, and storage unit are created successfully.

2. The full backup is successful.

3. The differential incremental backup is successful.

4. The accelerated backup is successful.

5. Granular restoration is successful and the data is consistent. |

Conclusion |

|

Remarks |

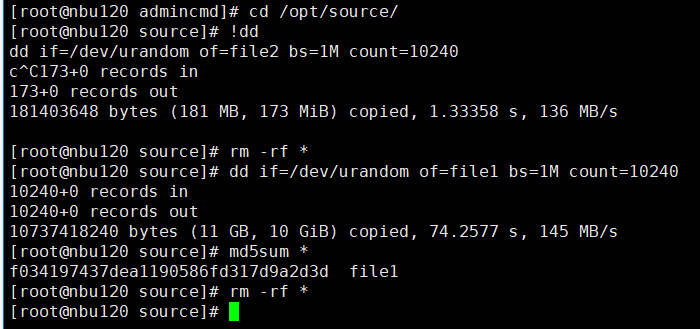

3.3.2 Optimized Synthetics

3.3.2.1 Optimized Synthetic Backup and Restore

Objective | To verify that the backup system can perform scale differential incremental, synthetic full backup, and optimized synthetic backup and restore |

Networking | Networking diagram for verifying functions |

Prerequisites |

|

Procedure |

|

Expected Result |

|

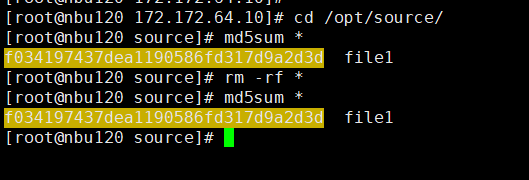

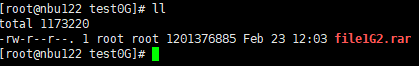

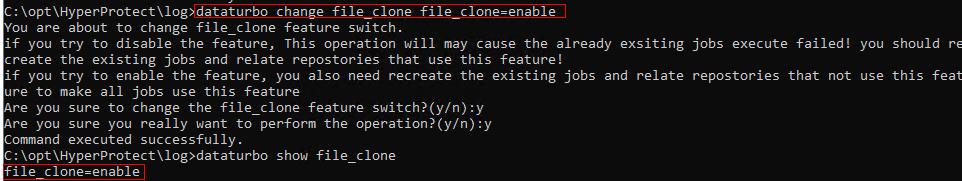

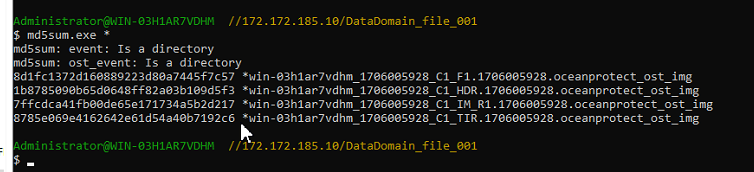

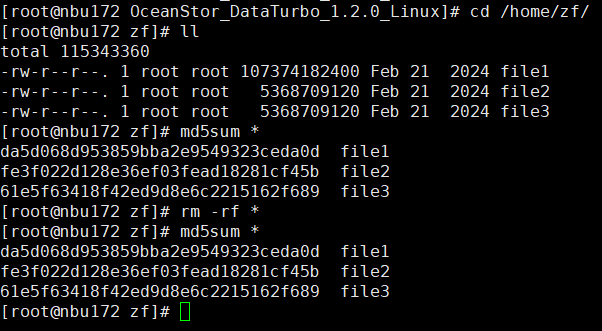

Test Result | 1. Create a storage server, disk pool, storage unit, and policy. Enable fileclone in the policy settings.

2. Insert the 1 GB file testfile_1 into the file system of the active node and perform incremental backup.

3. Insert the 1 GB file testfile_2 into the file system of the host and perform a synthetic full backup.

4. Restore data and verify the data consistency of testfile_1 and testfile_2. |

Conclusion |

|

Remarks |
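Conceptually, a synthetic full backup is assembled from an earlier full backup plus the incremental backups taken after it, rather than by reading all data from the client again. The following is a minimal Python sketch of that idea at the catalog level only. It is not how NetBackup or the OST plugin implements synthesis. `synthesize_full` is a hypothetical function, and each backup is modeled as a plain mapping from file name to a version marker, with `None` marking a deleted file.

```python
def synthesize_full(full_catalog, incremental_catalogs):
    """Overlay incrementals, oldest first, onto a full backup catalog.

    Each catalog maps a file name to a version marker. A value of None in an
    incremental means the file was deleted since the previous backup.
    """
    synthetic = dict(full_catalog)  # copy so the input catalog is not modified
    for incremental in incremental_catalogs:
        for name, version in incremental.items():
            if version is None:
                synthetic.pop(name, None)
            else:
                synthetic[name] = version
    return synthetic


# Placeholder catalogs that mirror the two files added in this test case.
full = {"existing.dat": "v1"}
incrementals = [{"testfile_1": "v1"}, {"testfile_2": "v1"}]
synthetic_full = synthesize_full(full, incrementals)
print(sorted(synthetic_full))
```

Restoring from the synthetic full should then yield both testfile_1 and testfile_2, which is what step 4 verifies.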

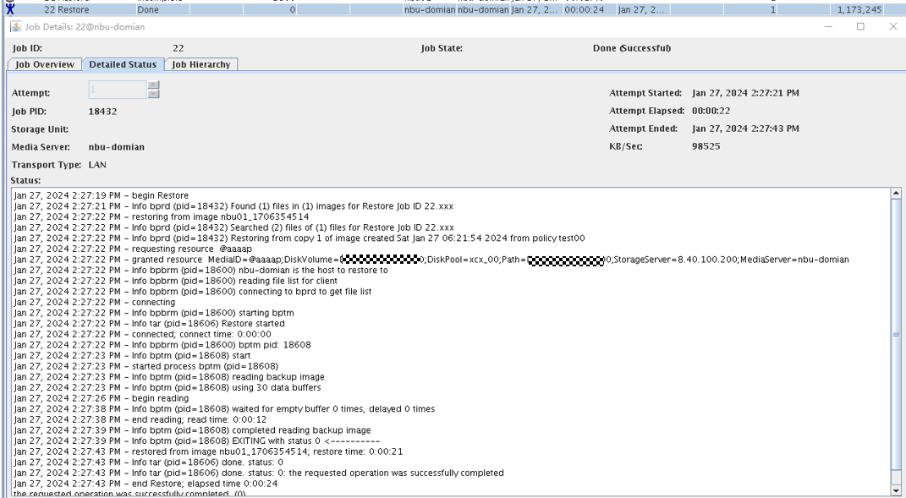

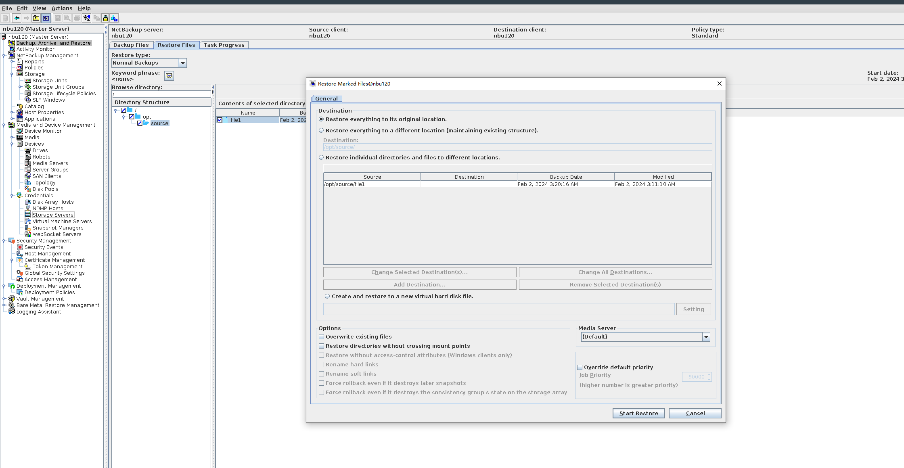

3.4 Recovery

3.4.1 Granular Recovery Technology

3.4.1.1 Optional GRT-Testing Media Server Configuration

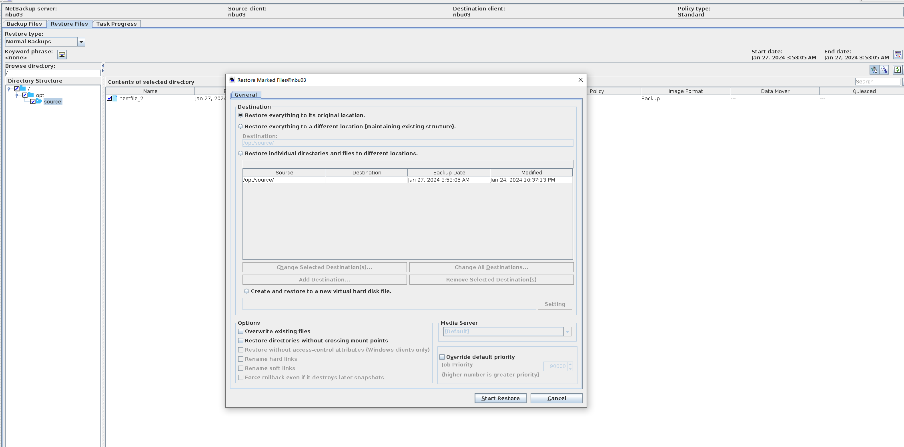

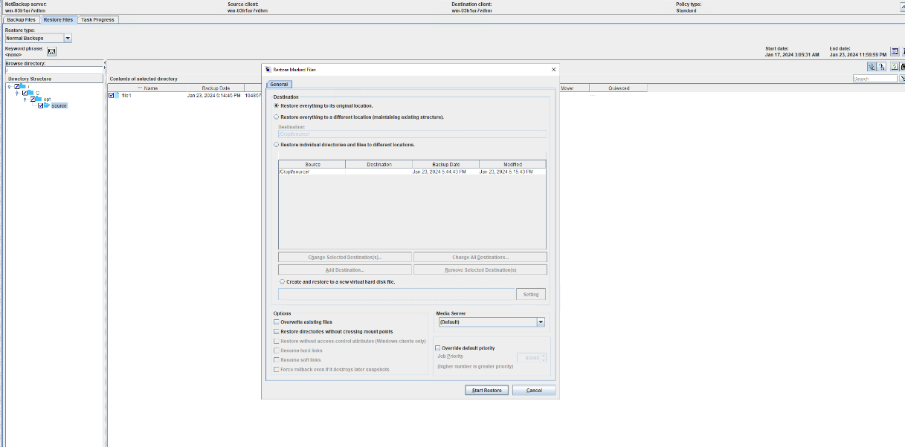

Objective | To verify that the backup system can perform selective restore of a file from an image and supports the optional GRT-testing media server configuration |

Networking | Networking diagram for verifying functions |

Prerequisites |

|

Procedure |

|

Expected Result |

|

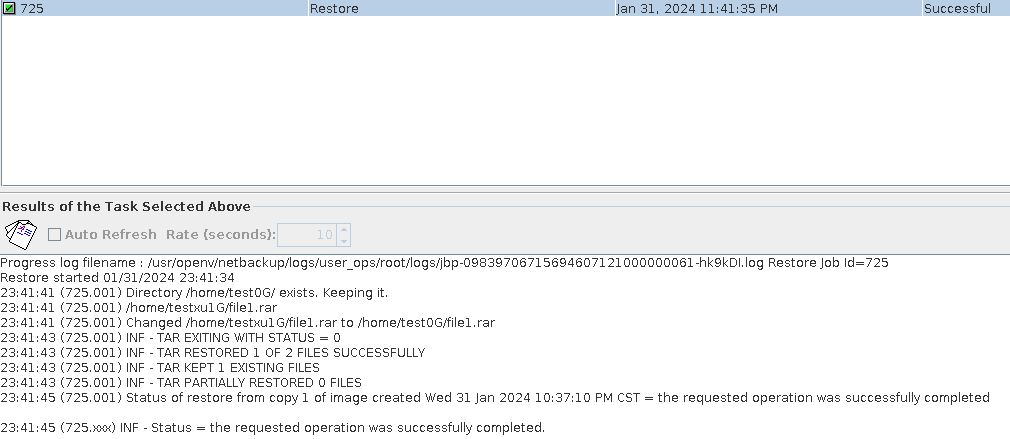

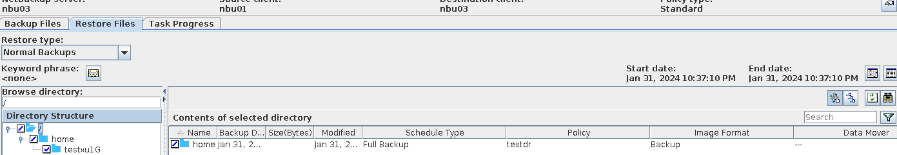

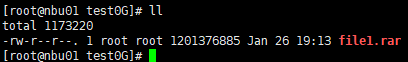

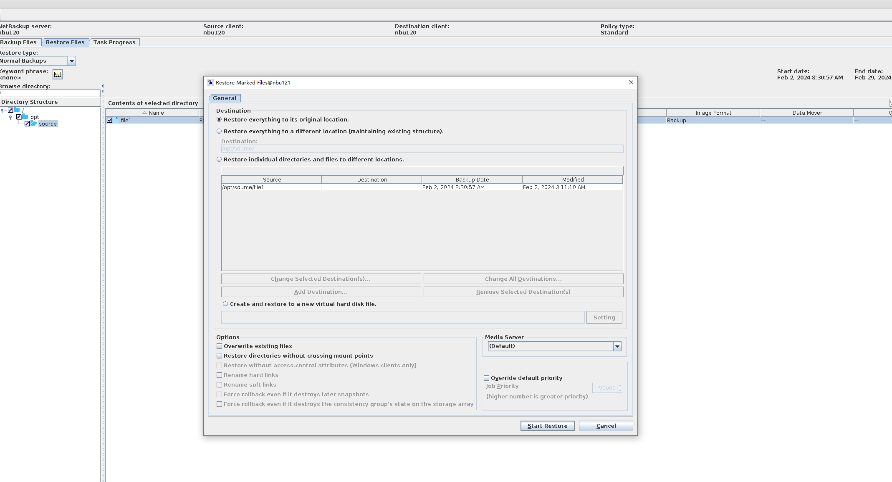

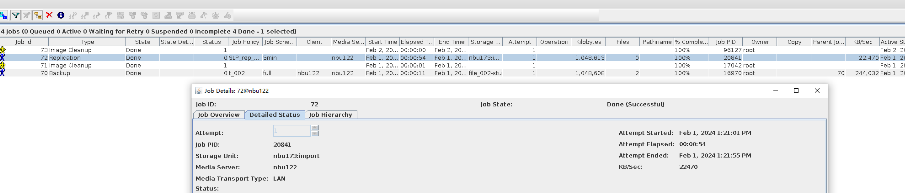

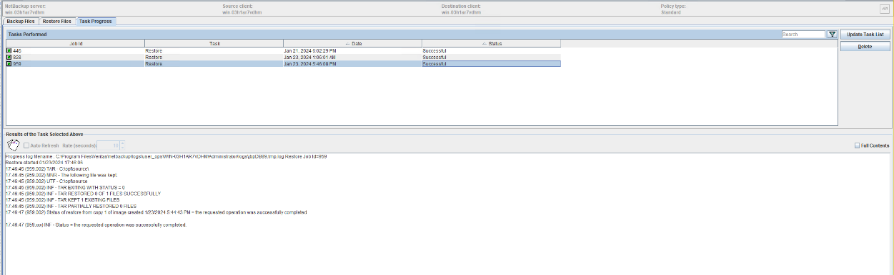

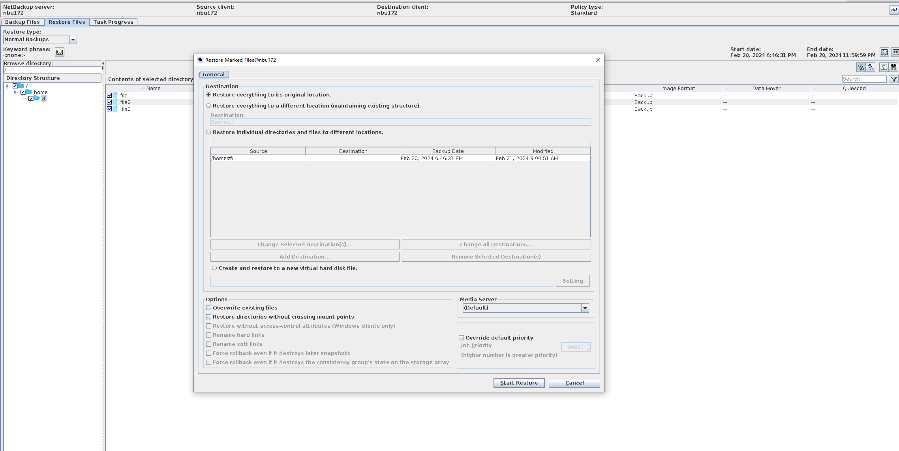

Test Result | 1. Create a storage server, disk pool, and storage unit.

2. Back up all files and restore them.

3. Perform differential incremental backup and restore files.

4. Perform permanent (forever) incremental backup and restore files. |

Conclusion |

|

Remarks |

3.5 Data Security

3.5.1 WORM

3.5.1.1 OST – WORM Optimized Duplication Tests

Objective | To verify that the backup system can perform WORM import. To verify that the backup system can perform WORM optimized duplication. |

Networking | Networking diagram for verifying functions |

Prerequisites |

|

Procedure |

|

Expected Result |

|

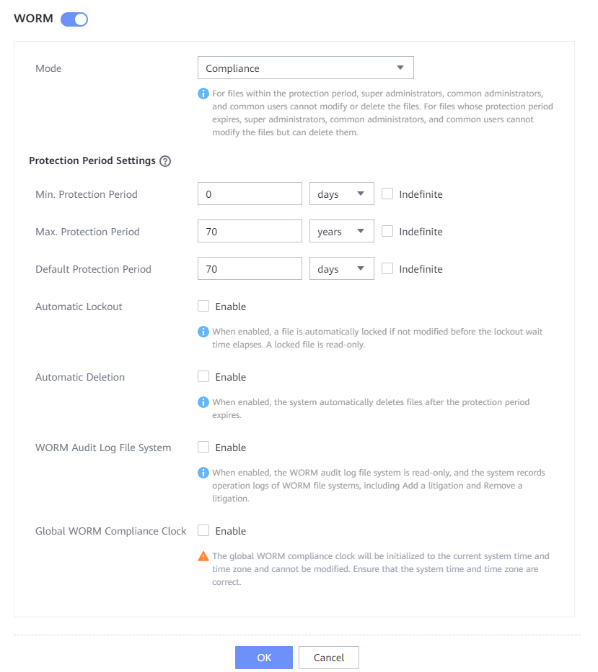

Test Result | 1. Log in to the Master Server as the system administrator.

2. Configure the file replication link relationship between the WORM source LSU and the non-WORM destination LSU (A -> B).

3. On the source master server, configure the replication SLP, select Replication, and select Target master server. //The configuration is successful.

4. Configure storage units on each destination end.

5. On the source end, configure a backup policy based on the replication SLP.

6. Set a scheduled backup task and perform the backup.

7. Execute the replication task when the replication period arrives. //The replication is successful. The copy is imported to the specified Master Server at the destination end.

8. Compare the data consistency between the primary and secondary copies on A and B, and observe the capacity change, link relationship, and bottom-layer resource usage. Originally, copy 1 is the primary; copy 2 is then set to primary. //The primary/secondary data and capacity are consistent, and the link relationship exists.

9. Check whether the copy on node B can be modified and deleted. //The copy on node B can be modified and deleted.

10. On the source end, configure a backup policy based on the replication SLP.

11. Set a scheduled backup task and perform the backup.

12. Execute the replication task when the replication period arrives. //The replication is successful. The copy is imported to the specified Master Server on the destination end.

13. Compare the data consistency between the primary and secondary copies on A and B, and observe the capacity change, link relationship, and bottom-layer resource usage. //The primary/secondary data and capacity are consistent, and the link relationship exists.

|

Conclusion |

|

Remarks |

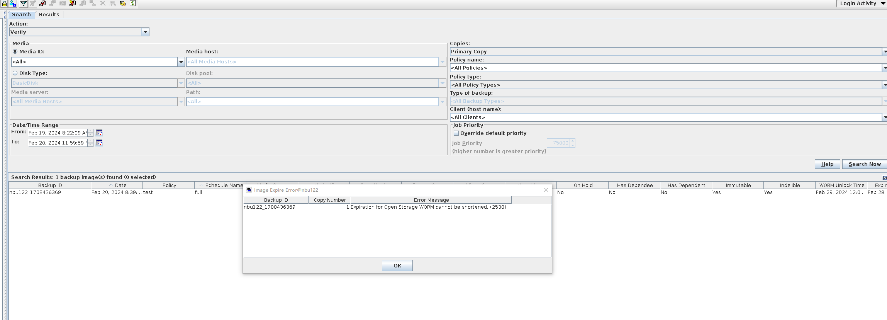

3.5.1.2 OST – WORM Image Expiration

Objective | To verify that the WORM image can expire normally |

Networking | Networking diagram for verifying functions |

Prerequisites |

|

Procedure |

|

Expected Result |

|

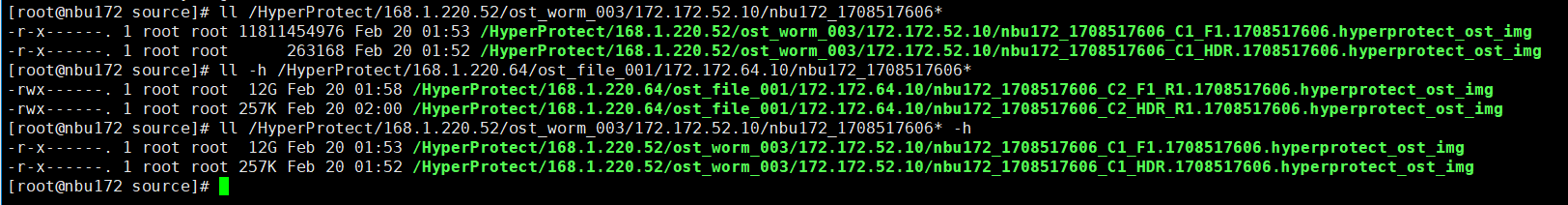

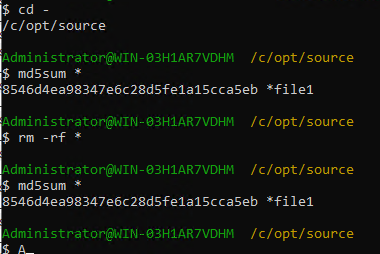

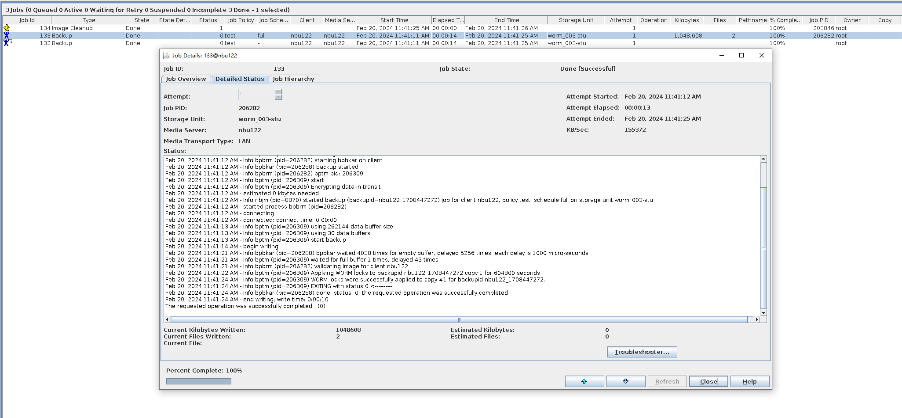

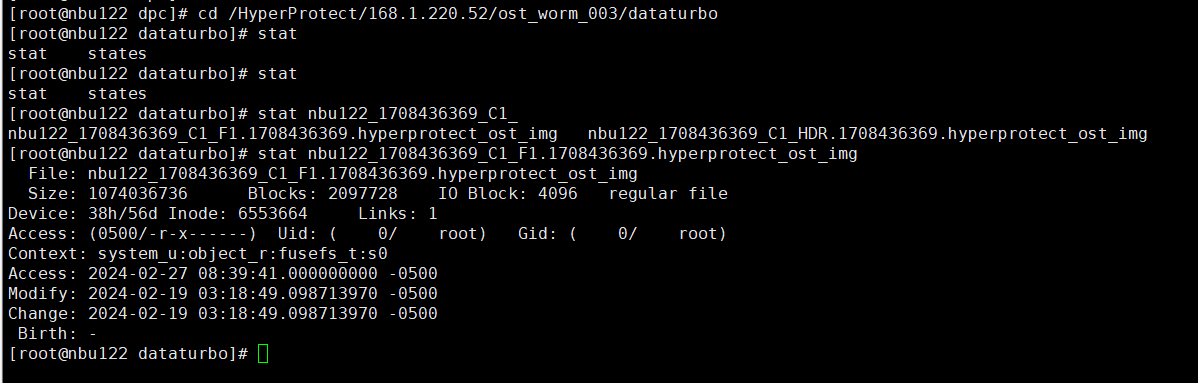

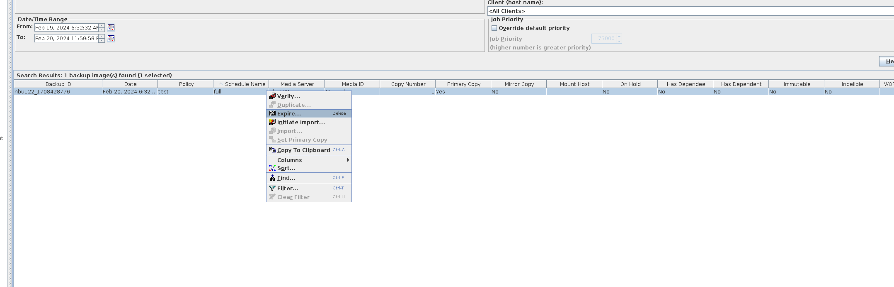

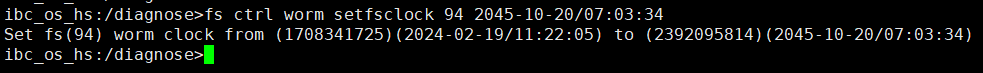

Test Result | 1. A regulation-level WORM file system is created on the storage.

2. Add storage servers, disk pools, and storage units to the NBU.

3. Create a backup task to back up the image to the WORM file system and set the protection period.

4. Modify the file after the protection period has ended: ① change the protection period; ② modify the file.

5. Delete the files after the protection period has ended. |

Conclusion |

|

Remarks |

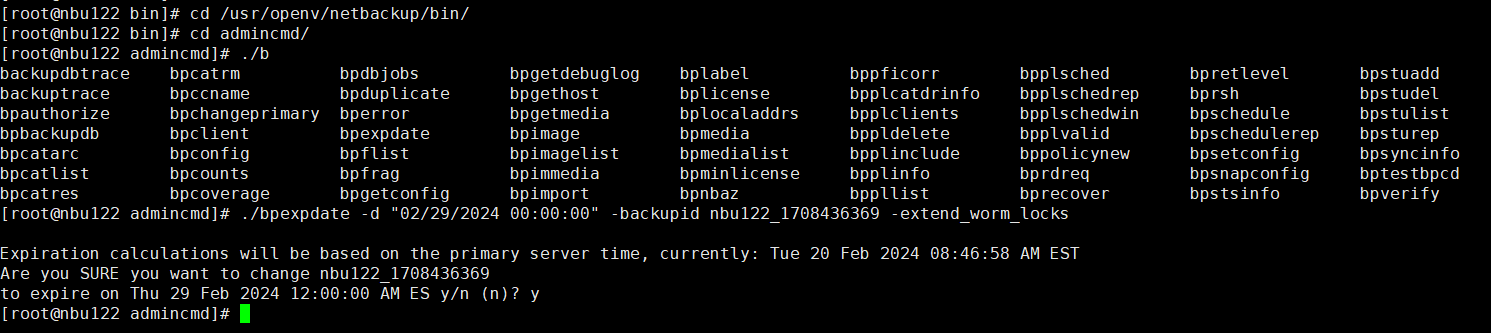

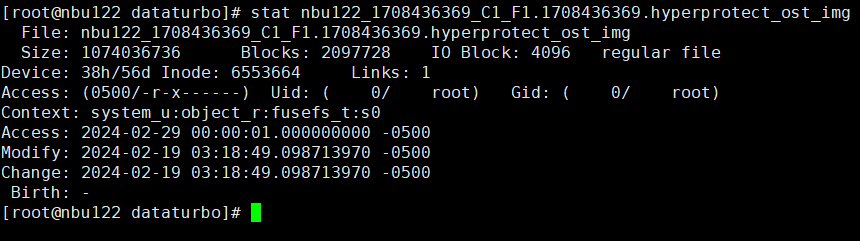

3.5.1.3 OST – WORM Extended Expiration

Objective | To verify that the backup system can extend WORM expiration |

Networking | Networking diagram for verifying functions |

Prerequisites |

|

Procedure |

|

Expected Result |

|

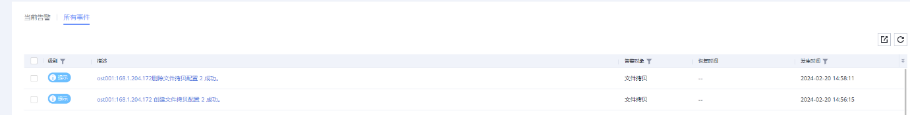

Test Result | 1. A regulation-level WORM file system has been created on the storage.

2. Add the storage server, disk pool, and storage unit on the NBU.

3. Create a backup task to back up the image to the WORM file system and set the protection period.

4. Extend the protection period of the image while it is still within the protection period. [Screenshots: before the change, after the change, deletion] |

Conclusion |

|

Remarks |
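The extension in step 4 follows the usual WORM rule that a protection period can be moved later but not earlier. The following is a minimal Python sketch of that rule as an assumption. It does not describe the checks that OceanProtect or NetBackup actually perform, and `extend_protection` and the dates are hypothetical.

```python
from datetime import datetime, timedelta


def extend_protection(current_expiry, requested_expiry, now):
    """Return the new expiry if the request is a valid extension, else raise ValueError.

    Assumed rules: the image must still be protected, and the new expiry must
    be later than the current one.
    """
    if now >= current_expiry:
        raise ValueError("protection period has already ended")
    if requested_expiry <= current_expiry:
        raise ValueError("protection period can only be extended, not shortened")
    return requested_expiry


# Placeholder dates, not values from the test run.
now = datetime(2024, 1, 1, 12, 0)
current_expiry = now + timedelta(days=2)
new_expiry = extend_protection(current_expiry, current_expiry + timedelta(days=3), now)
print(new_expiry.isoformat())
```

A request that moves the expiry earlier raises `ValueError` in this sketch, which mirrors the expectation that a WORM image cannot be released early.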

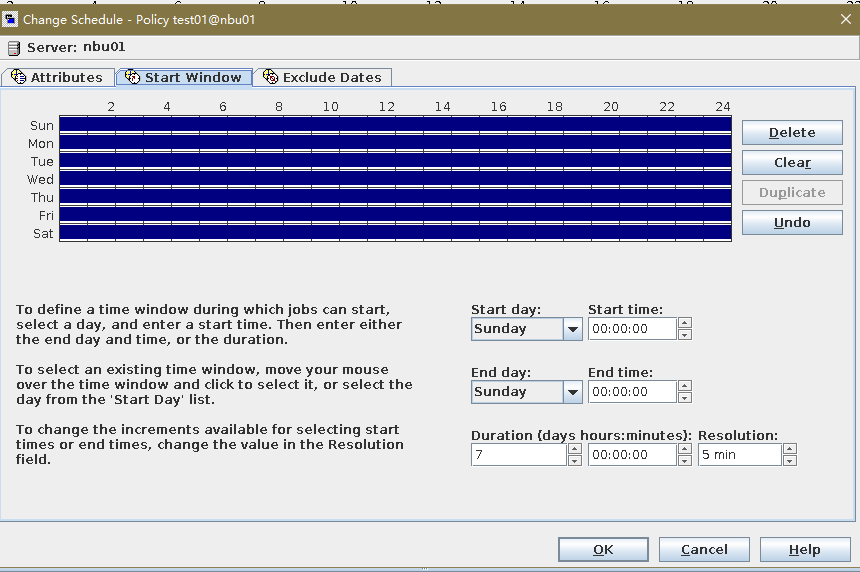

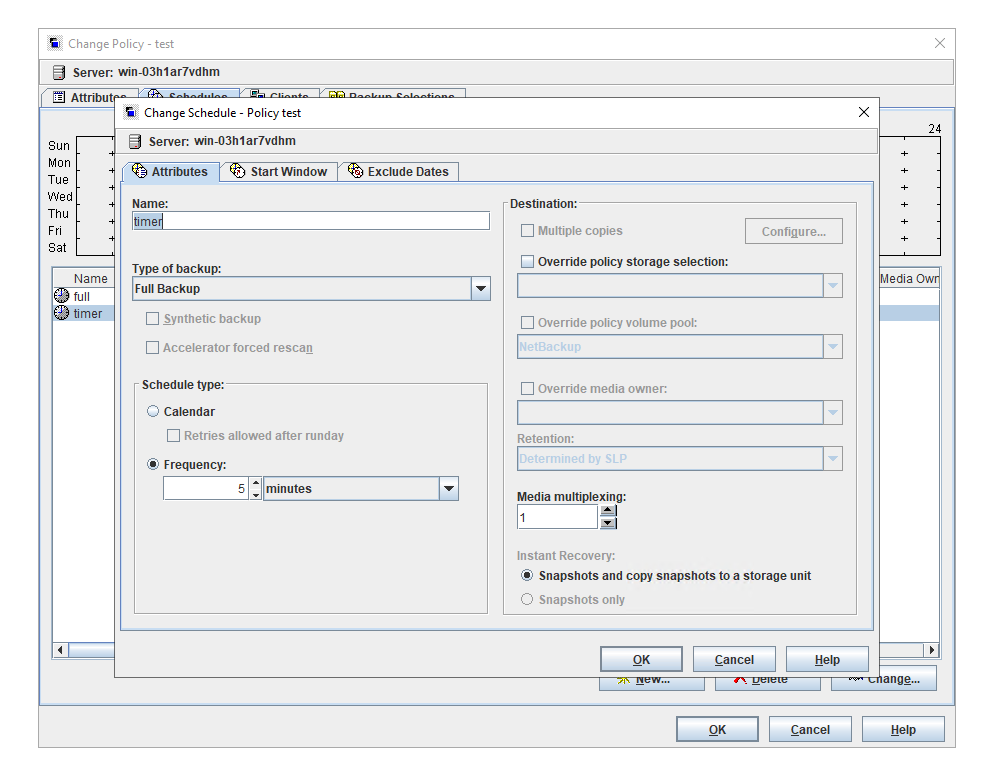

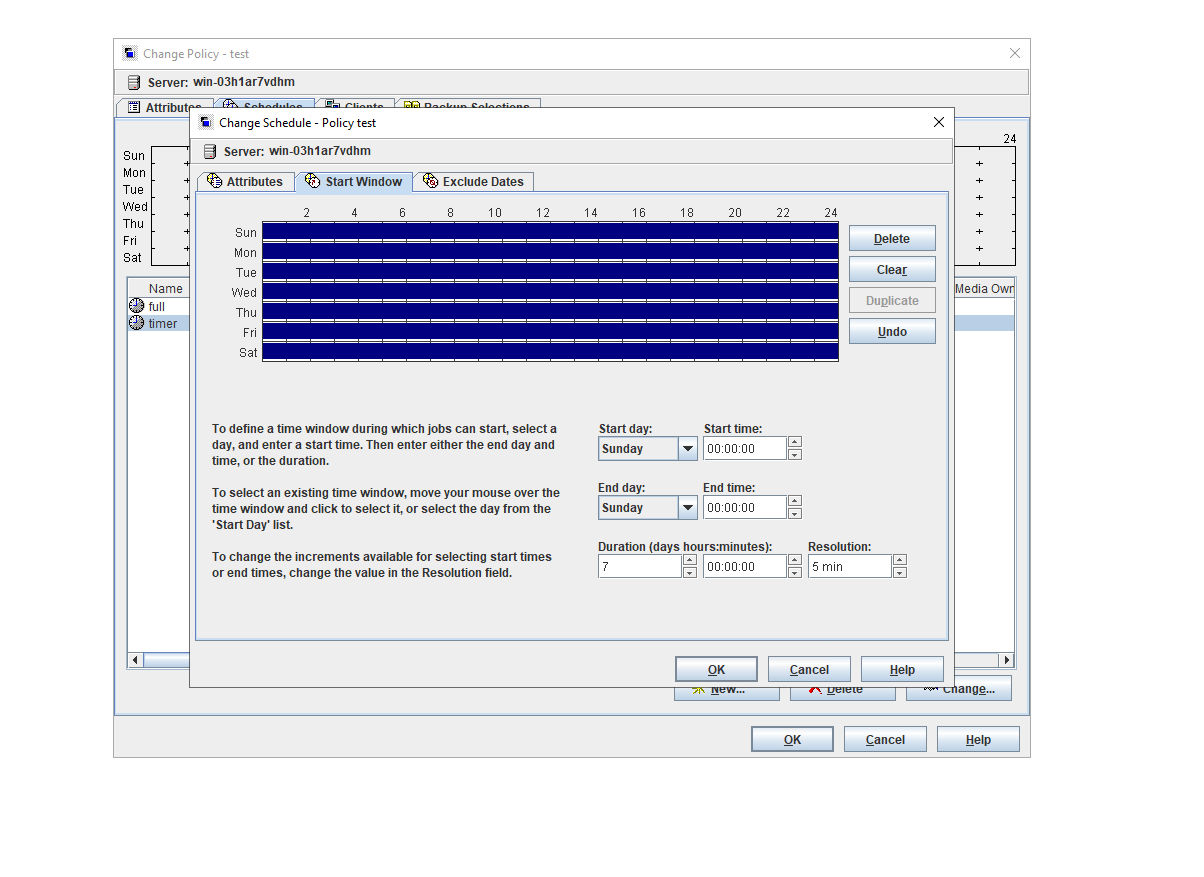

3.5.1.4 OST – WORM LSU Check Point Restart

Objective | To verify that the backup system can perform WORM LSU check point restart |

Networking | Networking diagram for verifying functions |

Prerequisites |

|

Procedure |

|

Expected Result |

|

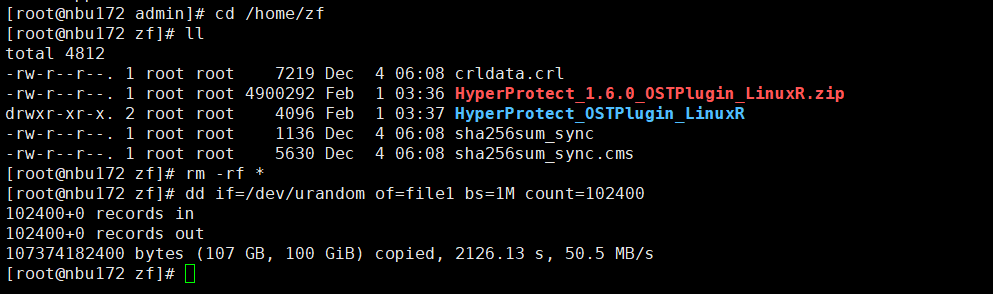

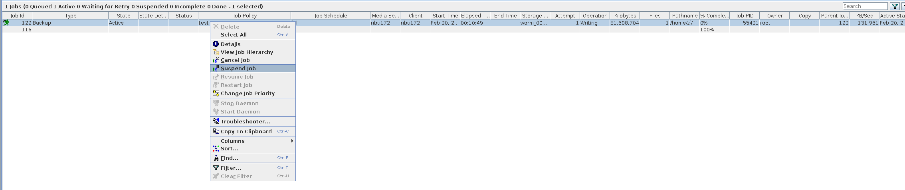

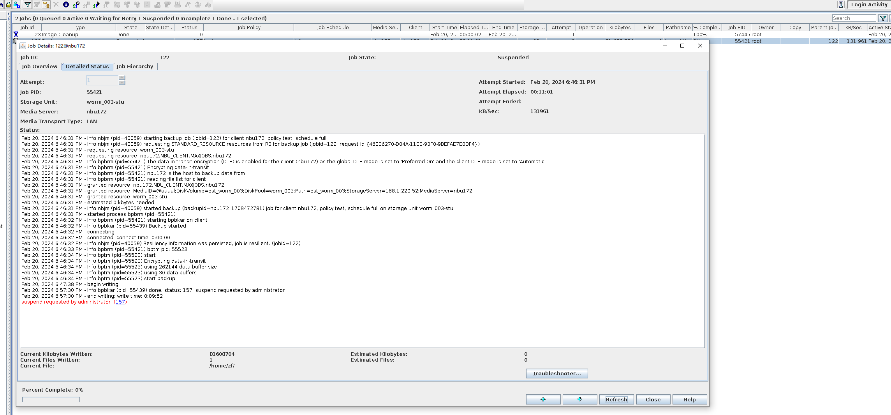

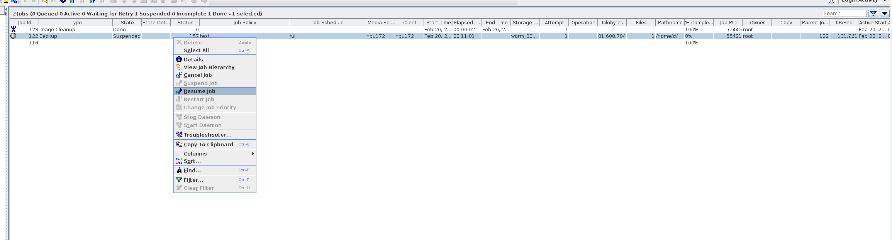

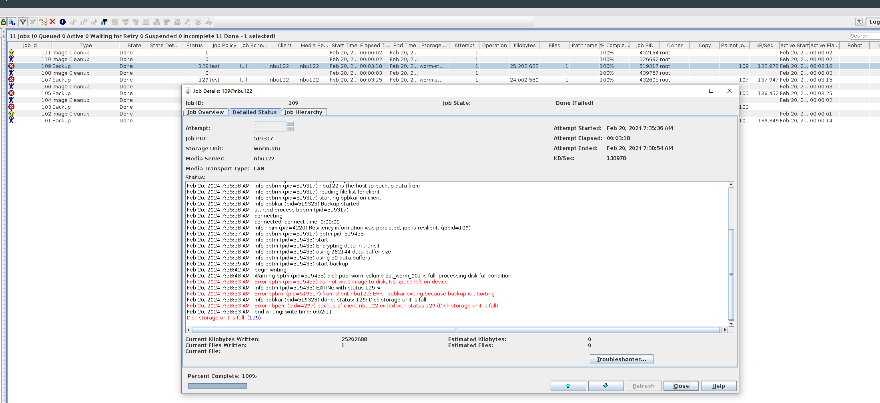

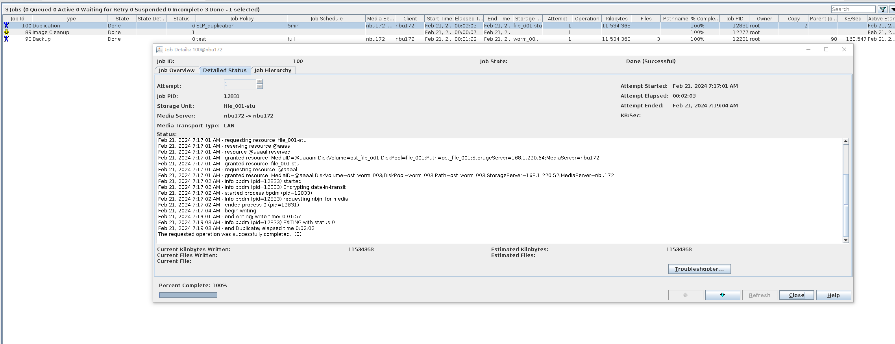

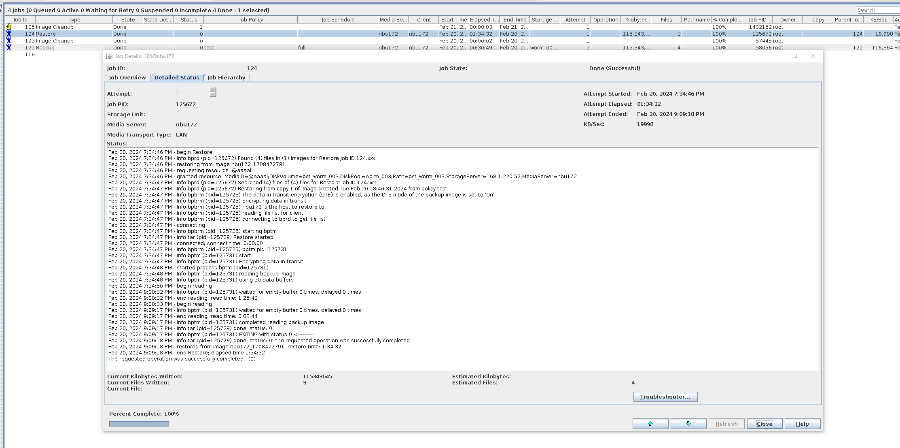

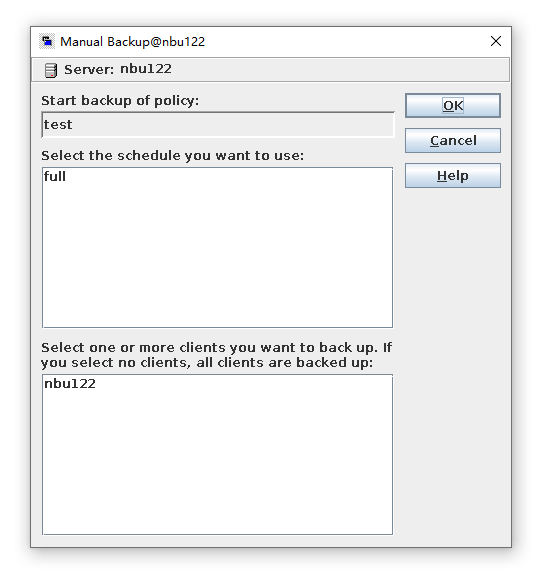

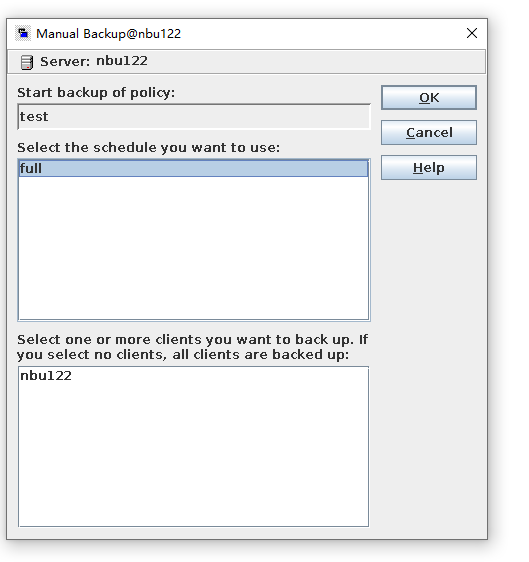

Test Result | 1. Create a storage server, disk pool, storage unit, and policy. Enable checkpoint restart and set the checkpoint interval to 5 minutes.

2. Insert the 100 GB file testfile_1 and perform full backup.

3. During the backup, the task is suspended for 10 minutes.

4. Pause the task for three minutes and resume the backup task. The backup is complete.

5. After the restoration, check data consistency. After the copy expires, verify space reclamation.

|

Conclusion |

|

Remarks |
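The idea behind checkpoint restart is that a resumed job continues from the last recorded checkpoint instead of starting over. The following is a minimal Python sketch of that behavior and not the NetBackup implementation. `run_backup` is a hypothetical function, the checkpoint is taken every few chunks rather than every 5 minutes, and the data is a small in-memory byte string instead of a 100 GB file.

```python
from typing import Optional


def run_backup(data: bytes, stored: bytearray, checkpoint: int, chunk_size: int,
               checkpoint_every: int, stop_after: Optional[int] = None) -> int:
    """Copy data[checkpoint:] into stored and return the offset to resume from.

    If stop_after chunks have been written and data remains, the job is treated
    as interrupted and the last checkpoint offset is returned.
    """
    # Anything written after the last checkpoint is discarded on resume.
    del stored[checkpoint:]
    offset = checkpoint
    chunks_written = 0
    while offset < len(data):
        if stop_after is not None and chunks_written == stop_after:
            return checkpoint  # interrupted: only checkpointed data is kept
        piece = data[offset:offset + chunk_size]
        stored.extend(piece)
        offset += len(piece)
        chunks_written += 1
        if chunks_written % checkpoint_every == 0:
            checkpoint = offset  # record progress
    return offset  # completed: the offset equals len(data)


data = bytes(range(10))
stored = bytearray()

# First attempt is interrupted after three chunks; progress is kept up to the checkpoint.
resume_from = run_backup(data, stored, checkpoint=0, chunk_size=2,
                         checkpoint_every=2, stop_after=3)

# Second attempt resumes from the checkpoint and runs to completion.
finished_at = run_backup(data, stored, checkpoint=resume_from, chunk_size=2,
                         checkpoint_every=2)
print(resume_from, finished_at, bytes(stored) == data)
```

The shorter the checkpoint interval, the less data has to be sent again after a resume, at the cost of recording progress more often.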

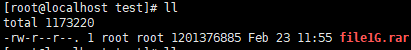

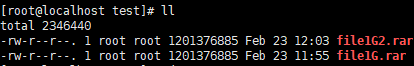

3.5.1.5 OST – WORM LSU Full Error

Objective | To verify that the backup system can raise WORM LSU full error |

Networking | Networking diagram for verifying functions |

Prerequisites |

|

Procedure |

|

Expected Result |

|

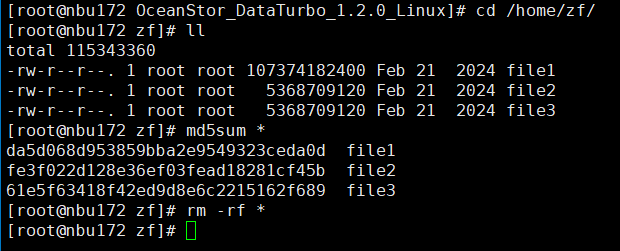

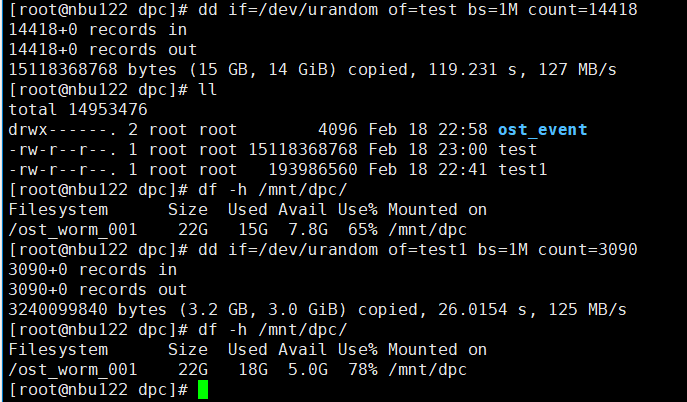

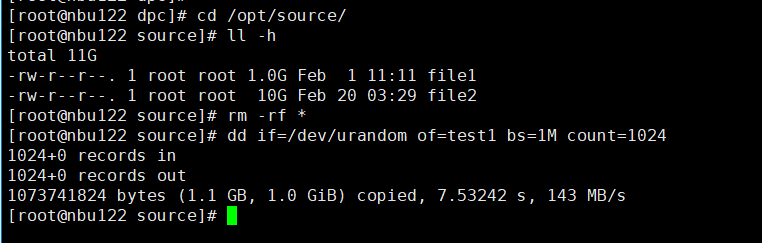

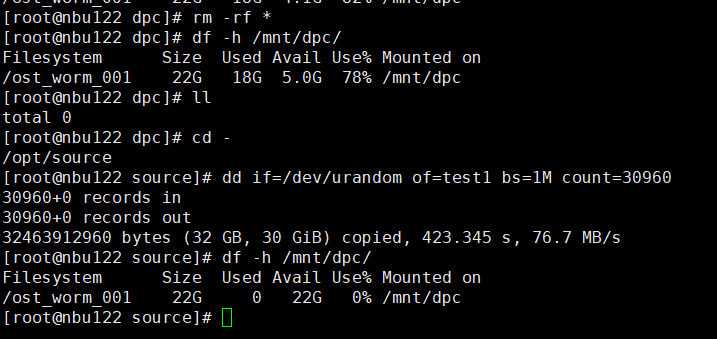

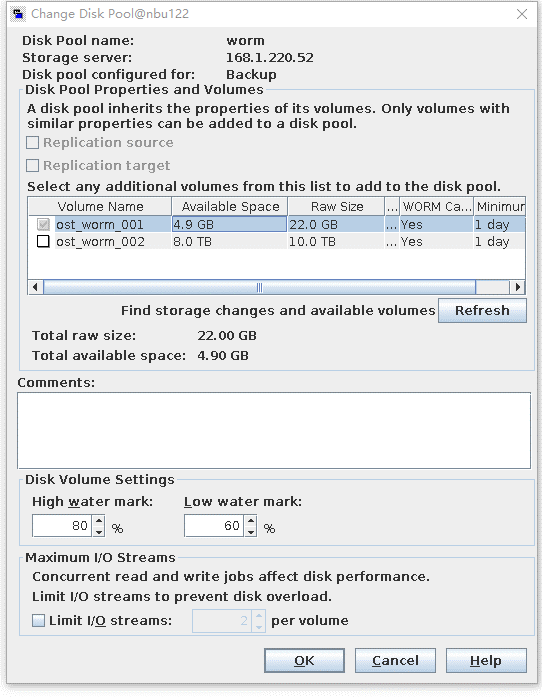

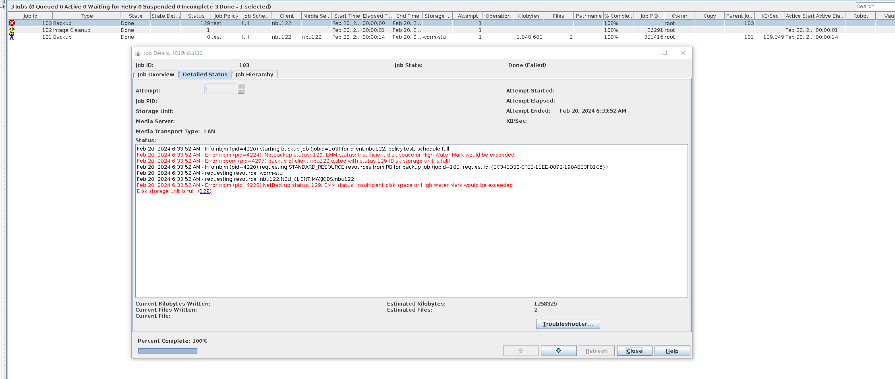

Test Result | 1. Use the WORM file system to create a storage server, disk pool, and storage unit, set the high watermark threshold to 80%, and pre-fill the LSU with data so that the used capacity reaches 80%.

2. Back up another group of data to the LSU so that the used capacity of the LSU exceeds 80% but stays below 100%.

3. Delete all copies and back up a group of data to the LSU again. The data size exceeds the total capacity of the LSU.

|

Conclusion |

|

Remarks |
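The three steps place the LSU in three different capacity states relative to the 80% high watermark and the total capacity. The following is a minimal Python sketch of that arithmetic only. `classify_backup` and the 10 TiB capacity are hypothetical, and the sketch does not model how NetBackup reacts in each state.

```python
def classify_backup(used_bytes, backup_bytes, capacity_bytes, high_watermark_percent=80):
    """Classify the LSU usage that would result from writing one more backup."""
    projected = used_bytes + backup_bytes
    if projected > capacity_bytes:
        return "exceeds_capacity"
    # Integer arithmetic avoids floating-point rounding at the threshold.
    if projected * 100 > capacity_bytes * high_watermark_percent:
        return "above_high_watermark"
    return "within_high_watermark"


TIB = 1024 ** 4
capacity = 10 * TIB  # placeholder LSU size, not the size used in the test

print(classify_backup(0, 8 * TIB, capacity))        # step 1: fill to exactly 80%
print(classify_backup(8 * TIB, 1 * TIB, capacity))  # step 2: between 80% and 100%
print(classify_backup(0, 11 * TIB, capacity))       # step 3: larger than the LSU
```

Only the third state corresponds to the LSU full error named in the case title.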

3.5.1.6 OST – WORM Indelibility and Immutability

Objective | To verify the WORM indelibility and immutability of the backup system |

Networking | Networking diagram for verifying functions |

Prerequisites |

|

Procedure |

|

Expected Result |

|

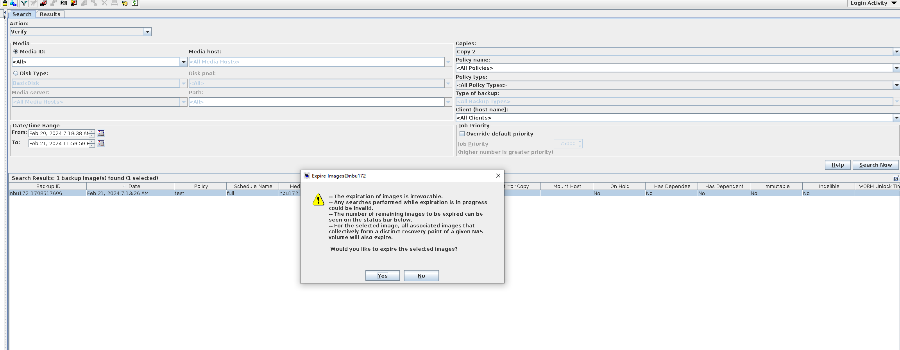

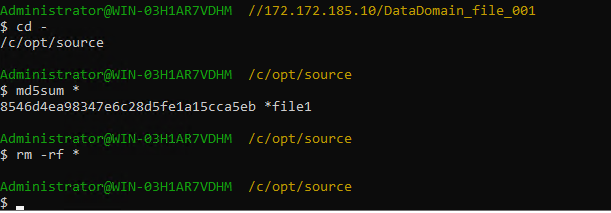

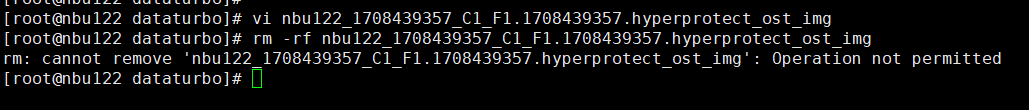

Test Result | 1. A regulation-level WORM file system has been created on the storage.

2. Add the storage server, disk pool, and storage unit on the NBU.

3. Create a backup task to back up the image to the WORM file system and set the protection period.

4. Attempt to expire the image within the protection period.

5. Attempt to modify and delete files within the protection period.

|

Conclusion |

|

Remarks |
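Step 5 amounts to trying to modify and delete a protected file and confirming that both attempts are refused. The following is a minimal Python sketch of such a probe, assuming the WORM file system is mounted on the host running the script and reports refusals as operating system errors. `probe_immutability` is a hypothetical helper and was not used in the test.

```python
import os


def probe_immutability(path):
    """Try to append to and delete a file, and report which attempts were refused.

    Warning: on an unprotected file both attempts succeed, so the file is
    modified and then removed. Only run this against disposable test data.
    """
    result = {"modify_blocked": False, "delete_blocked": False}
    try:
        with open(path, "ab") as handle:
            handle.write(b"x")  # attempt to change the content
    except OSError:
        result["modify_blocked"] = True
    try:
        os.remove(path)  # attempt to delete the file
    except OSError:
        result["delete_blocked"] = True
    return result
```

For a file inside its protection period, the expected outcome in this sketch is `{"modify_blocked": True, "delete_blocked": True}`.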

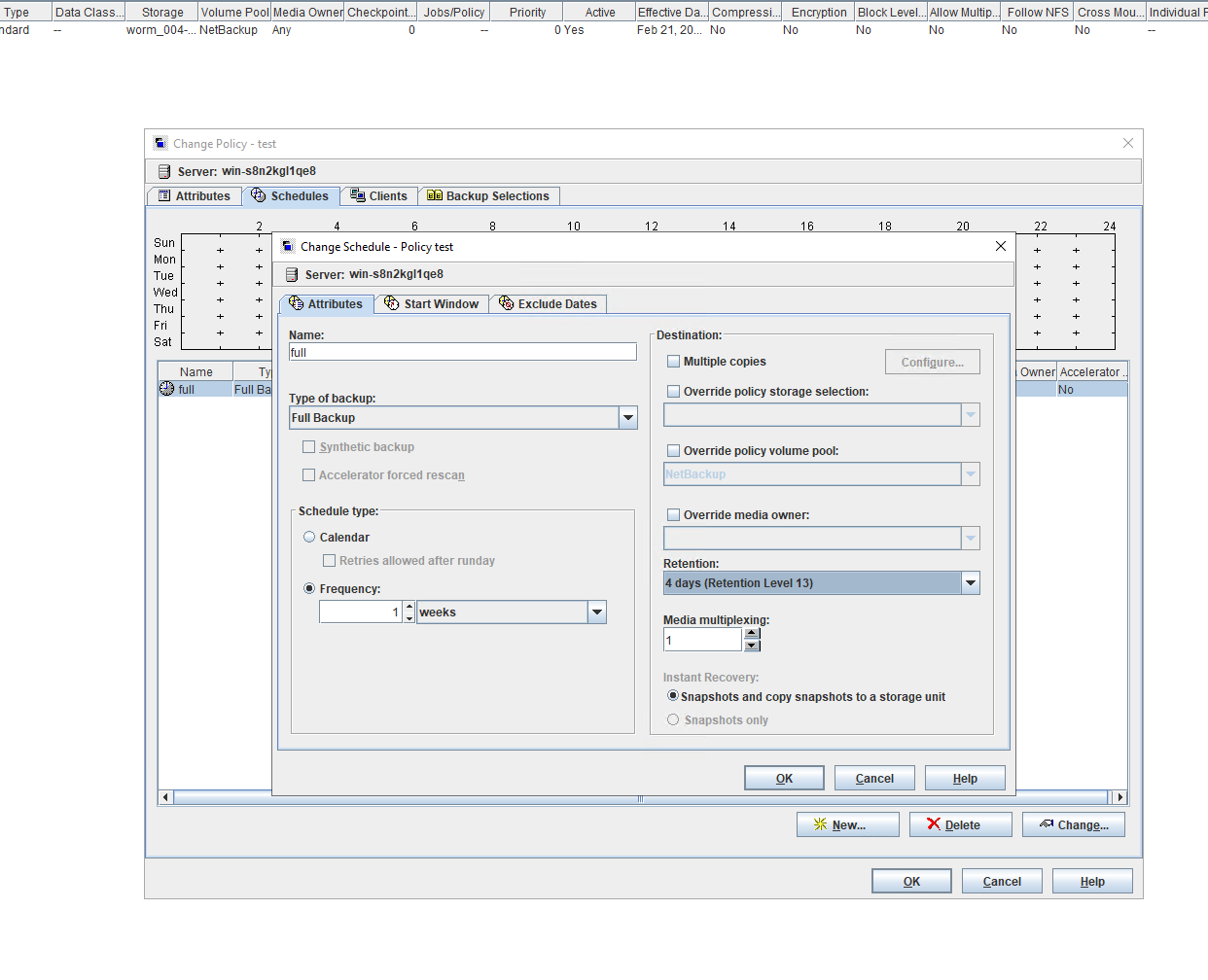

3.5.1.7 OST – WORM Retention Levels

Objective | To verify WORM retention levels of the backup system |

Networking | Networking diagram for verifying functions |

Prerequisites |

|

Procedure |

|

Expected Result |

|

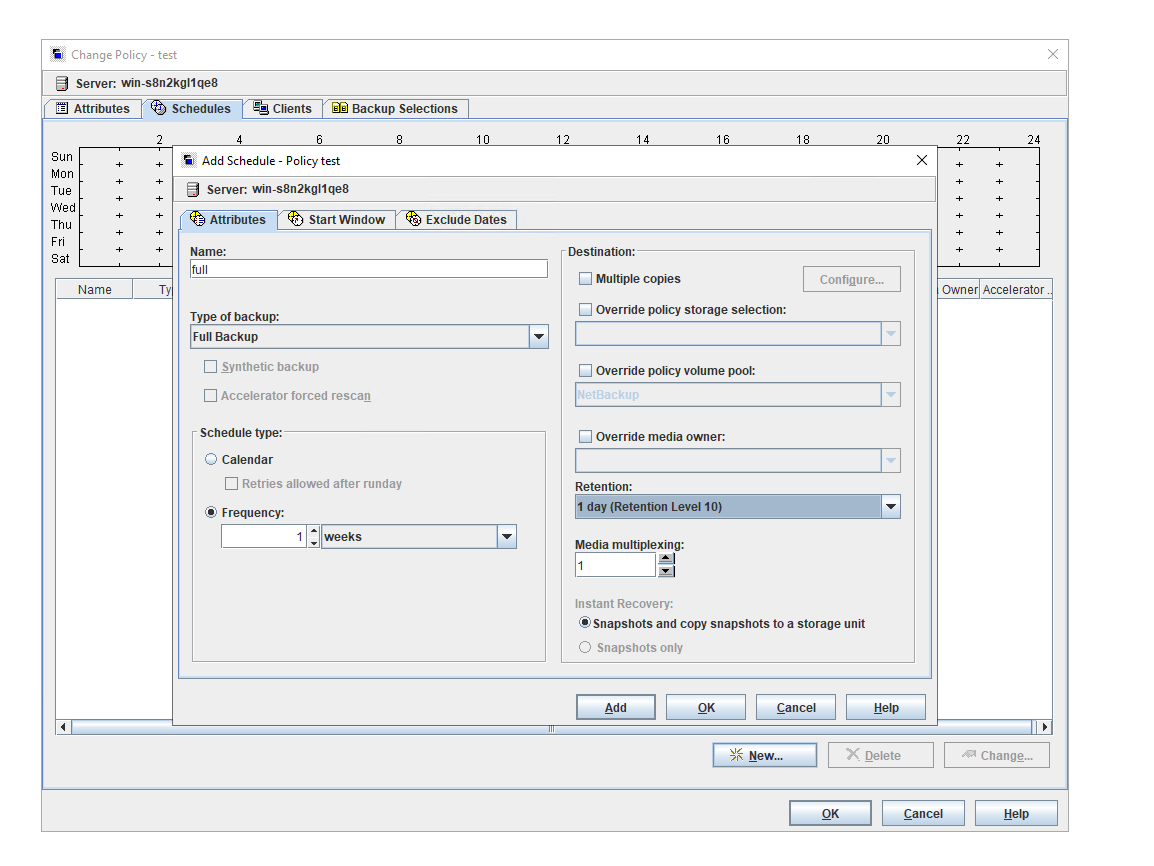

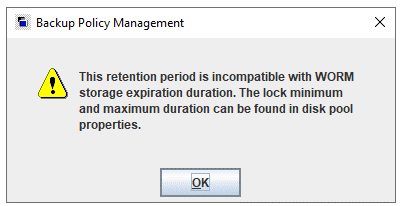

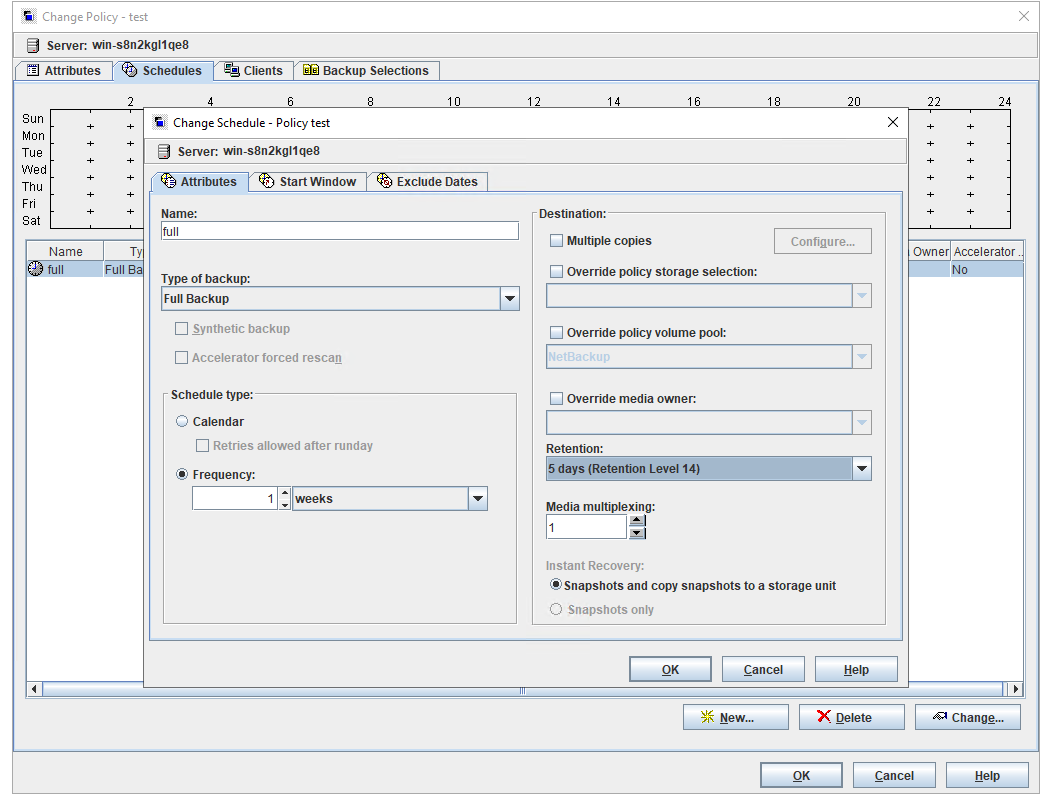

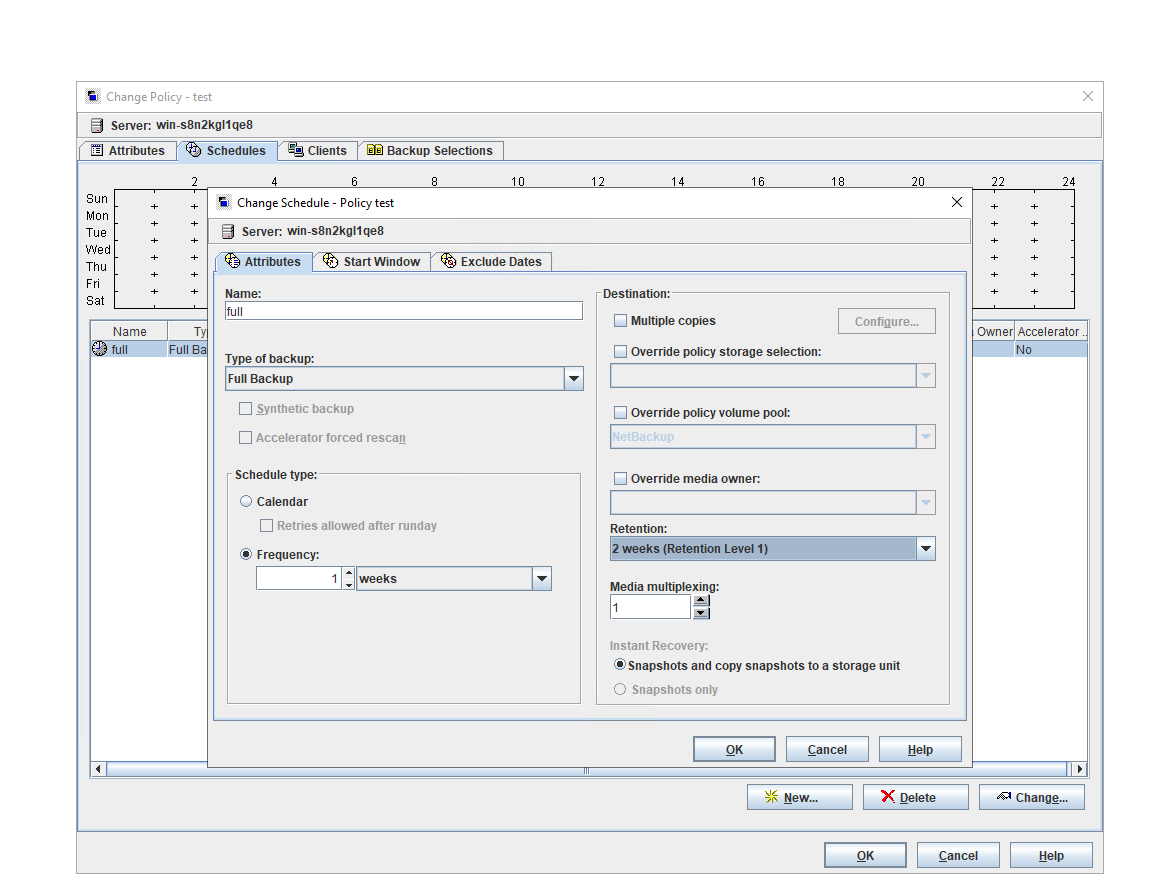

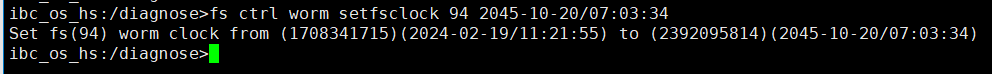

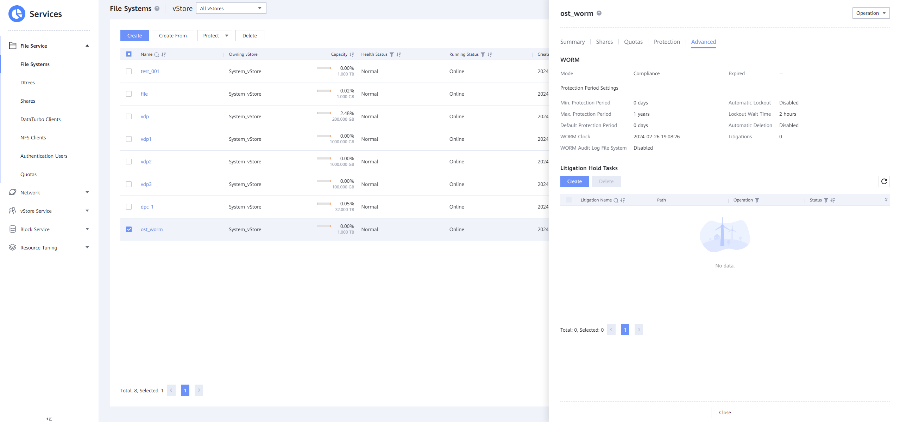

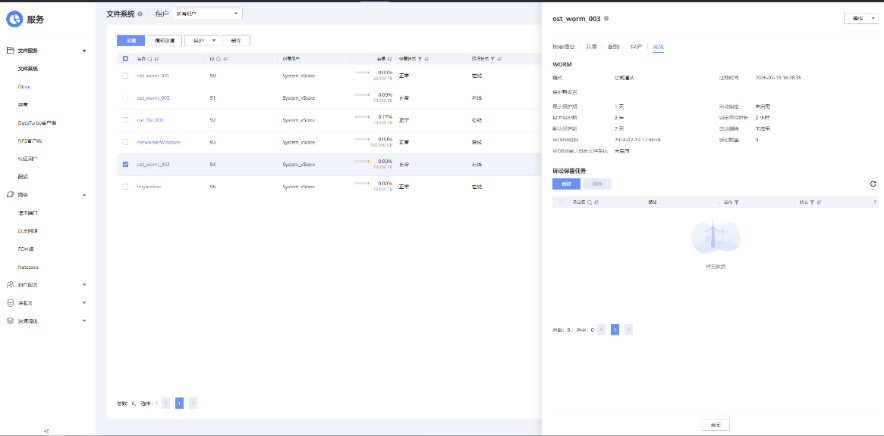

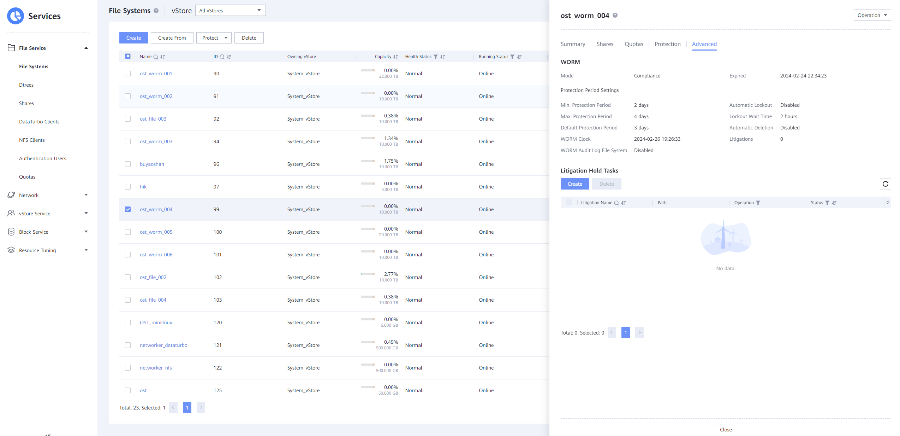

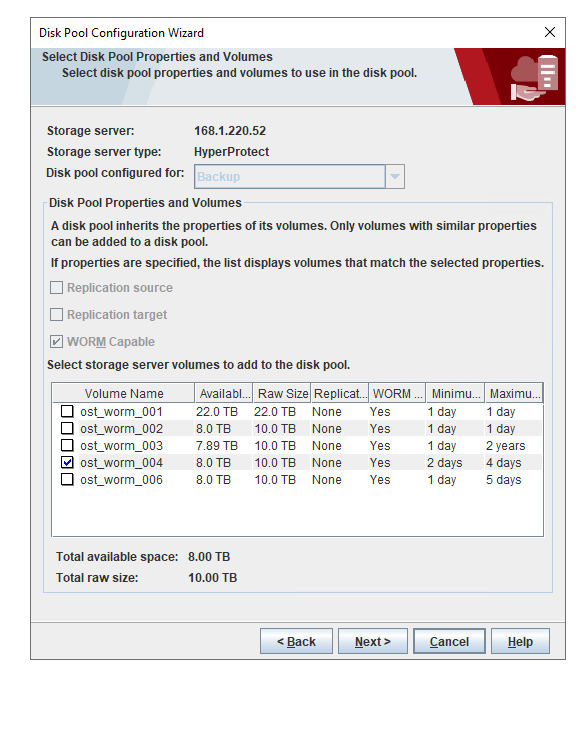

Test Result | 1. Create a WORM file system on the storage system and set the maximum and minimum protection periods to 4 days and 2 days, respectively. |

Conclusion |

|

Remarks |
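With a minimum protection period of 2 days and a maximum of 4 days, a requested lock period either falls inside that range or outside it. The following is a minimal Python sketch of the range check only. `within_retention_bounds` is a hypothetical helper, and the sketch makes no claim about whether the storage system rejects or adjusts an out-of-range request.

```python
def within_retention_bounds(requested_days, min_days=2, max_days=4):
    """Return True if the requested lock period lies within the configured range."""
    return min_days <= requested_days <= max_days


# 1 day is below the minimum, 3 days is inside the range, 5 days is above the maximum.
for days in (1, 3, 5):
    print(days, within_retention_bounds(days))
```

Values just outside each bound, such as 1 day and 5 days, are the useful probes because they exercise the minimum and the maximum separately.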

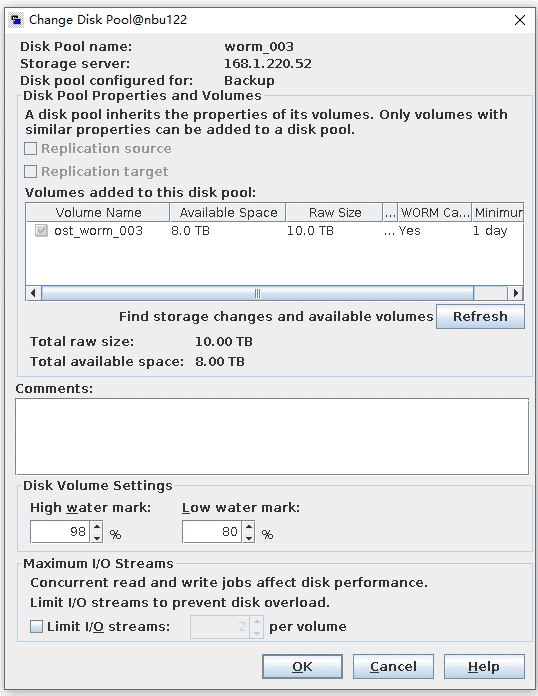

4. Verification Result

4.1 Test Result

Table 4-1 Result of each test case

Category | Case No. | Case Name | Test Result |

|---|---|---|---|

Basic Functions | 3.1.1 | Creation of Storage Server, Disk Pool and STU | Pass |

Basic Functions | 3.1.2 | Open Storage Backup, Import, Verify | Pass |

Basic Functions | 3.1.3 | | Pass |

Basic Functions | 3.1.4 | Cumulative Incremental Backup | Pass |

Basic Functions | 3.1.5 | Inline Tape Copy Backup and Restore | Pass |

Basic Functions | 3.1.6 | Policy Configuration for OpenStorage | Pass |

Basic Functions | 3.1.7 | Backup with TIR Move Detect | Pass |

Basic Functions | 3.1.8 | Backup with TIR No Move Detect | Pass |

Basic Functions | 3.1.9 | User Archive Backup | Pass |

Replication | 3.2.1.1 | Targeted Auto Image Replication | Pass |

Replication | 3.2.1.2 | Bi-directional Targeted A.I.R. Replication | Pass |

Replication | 3.2.1.3 | Inject NetBackup Failure During Targeted A.I.R. Replication | Pass |

Replication | 3.2.1.4 | Break Implementation Replication Link During Targeted A.I.R. Replication | Pass |

Replication | 3.2.1.5 | Targeted A.I.R. Replication when Target Data Storage is Full | Pass |

Replication | 3.2.1.6 | Trigger Multiple Events for A Single Targeted AIR Image (previous replication succeeded) | Pass |

Replication | 3.2.1.7 | Perform Backups/Restores with Fan-out Targeted A.I.R | Pass |

Replication | 3.2.1.8 | Perform Backups/Restores with Cascaded Targeted A.I.R | Pass |

Replication | 3.2.1.9 | OST – Targeted A.I.R [Backup – Duplication – Replication – Import from Replica] | Pass |

Replication | 3.2.1.10 | OST – Targeted A.I.R [Backup – Replication – Import from Replica] | Pass |

Replication | 3.2.2.1 | OST – Backup and Restore Using Optimized Duplication-Enabled Storage Lifecycle Policy | Pass |

Accelerator | 3.3.1.1 | OST – Confirm STS Functionality and Performance with Accelerator | Pass |

Accelerator | 3.3.1.2 | Confirm STS Functionality with Accelerator and Multistreaming for NDMP | Pass |

Accelerator | 3.3.1.3 | Confirm STS Functionality with Accelerator and VMware | Pass |

Accelerator | 3.3.1.4 | Confirm STS Functionality and Performance with Accelerator and GRT | Pass |

Accelerator | 3.3.2.1 | Optimized Synthetic Backup and Restore | Pass |

Recovery | 3.4.1.1 | Optional GRT-Testing Media Server Configuration | Pass |

Data Security | 3.5.1.1 | OST – WORM Optimized Duplication Tests | Pass |

Data Security | 3.5.1.2 | OST – WORM Image Expiration | Pass |

Data Security | 3.5.1.3 | OST – WORM Extended Expiration | Pass |

Data Security | 3.5.1.4 | OST – WORM LSU Check Point Restart | Pass |

Data Security | 3.5.1.5 | OST – WORM LSU Full Error | Pass |

Data Security | 3.5.1.6 | OST – WORM Indelibility and Immutability | Pass |

Data Security | 3.5.1.7 | OST – WORM Retention Levels | Pass |

4.2 Conclusion

According to the test results, OceanProtect backup storage supports the basic functions, targeted A.I.R, accelerator, granular recovery, WORM, optimized duplication, and optimized synthetic backup features of NetBackup OST. OceanProtect backup storage is compatible with the NetBackup OST plugin.

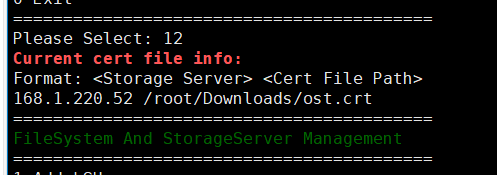

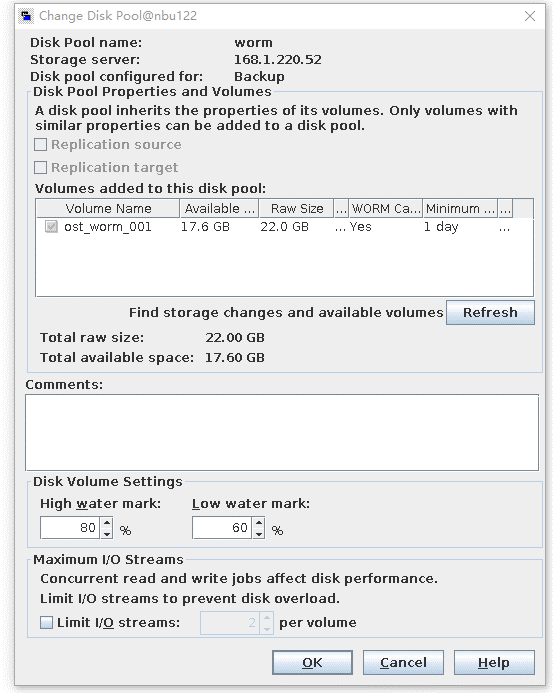

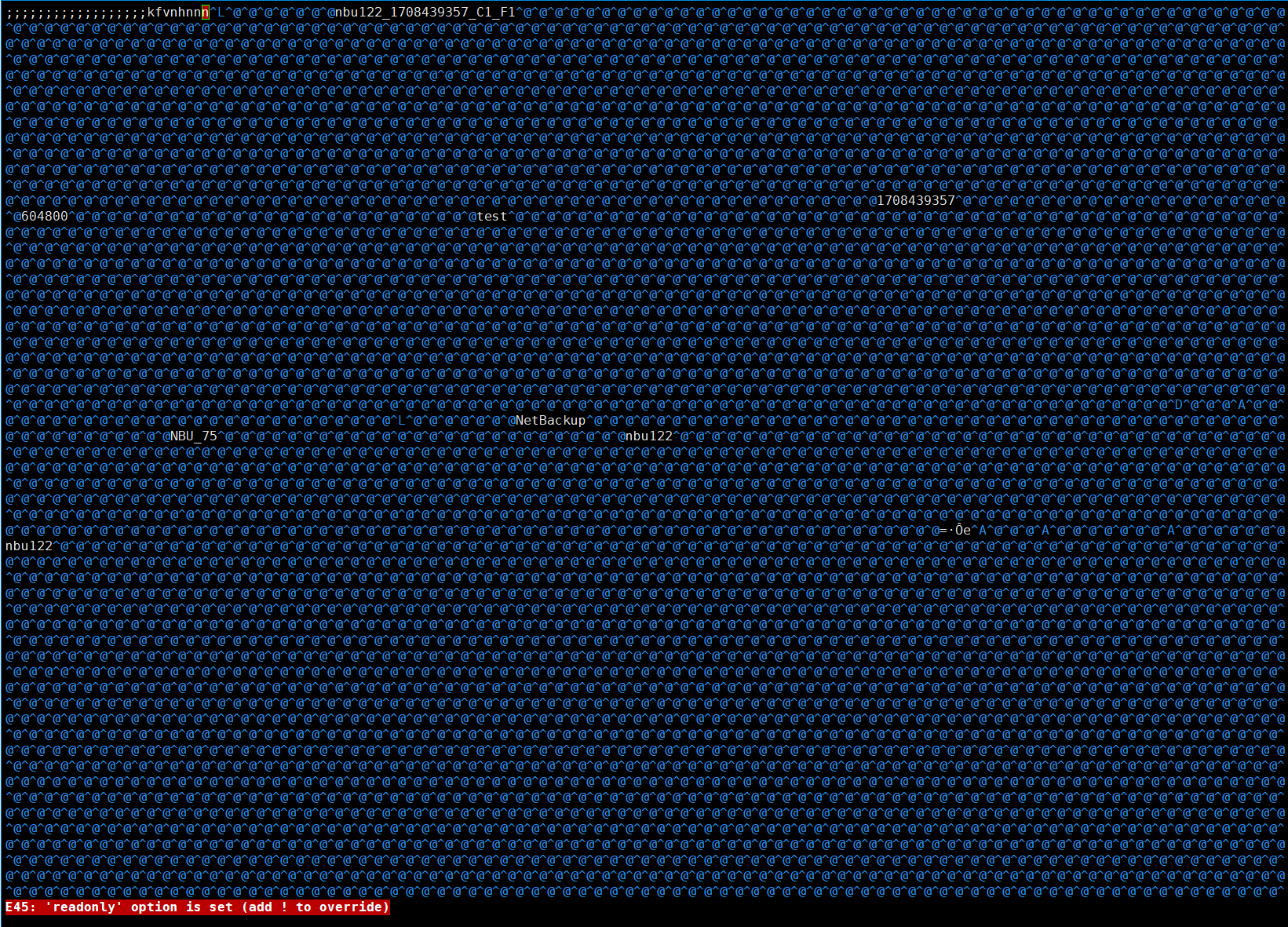

2. Add the storage server, WORM disk pool, and storage unit to the NBU.


3. Set the WORM lock period to 1 day and 5 days.
