Huawei OceanStor Dorado All-Flash Storage & Huawei OceanStor Hybrid Flash Storage and VMware ESXi 9.0

Interoperability Test Report

Axians Global

All Rights Reserved

Executive Summary

Axians Global (“Axians”) assessed the interoperability of Huawei OceanStor Dorado All-Flash Storage and Huawei OceanStor Hybrid Flash Storage (hereinafter “the storage”) with the VMware ESXi 9.0 operating system over FC and iSCSI.

In the assessment, Axians determined that the VMware ESXi 9.0 operating system will function with Huawei OceanStor Dorado All-Flash Storage and Huawei OceanStor Hybrid Flash Storage in the following scenarios:

Storage Tested | Protocol(s) Tested | Test Scenario | Result |

OceanStor Dorado All-Flash Storage & OceanStor Hybrid Flash Storage | FC and iSCSI | Basic Connectivity Test | Passed |

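OceanStor Dorado All-Flash Storage & OceanStor Hybrid Flash Storage | FC and iSCSI | Reliability Test | Passed |

OceanStor Dorado All-Flash Storage & OceanStor Hybrid Flash Storage | FC and iSCSI | Performance and Stability Test | Passed |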

1. Environment Configuration

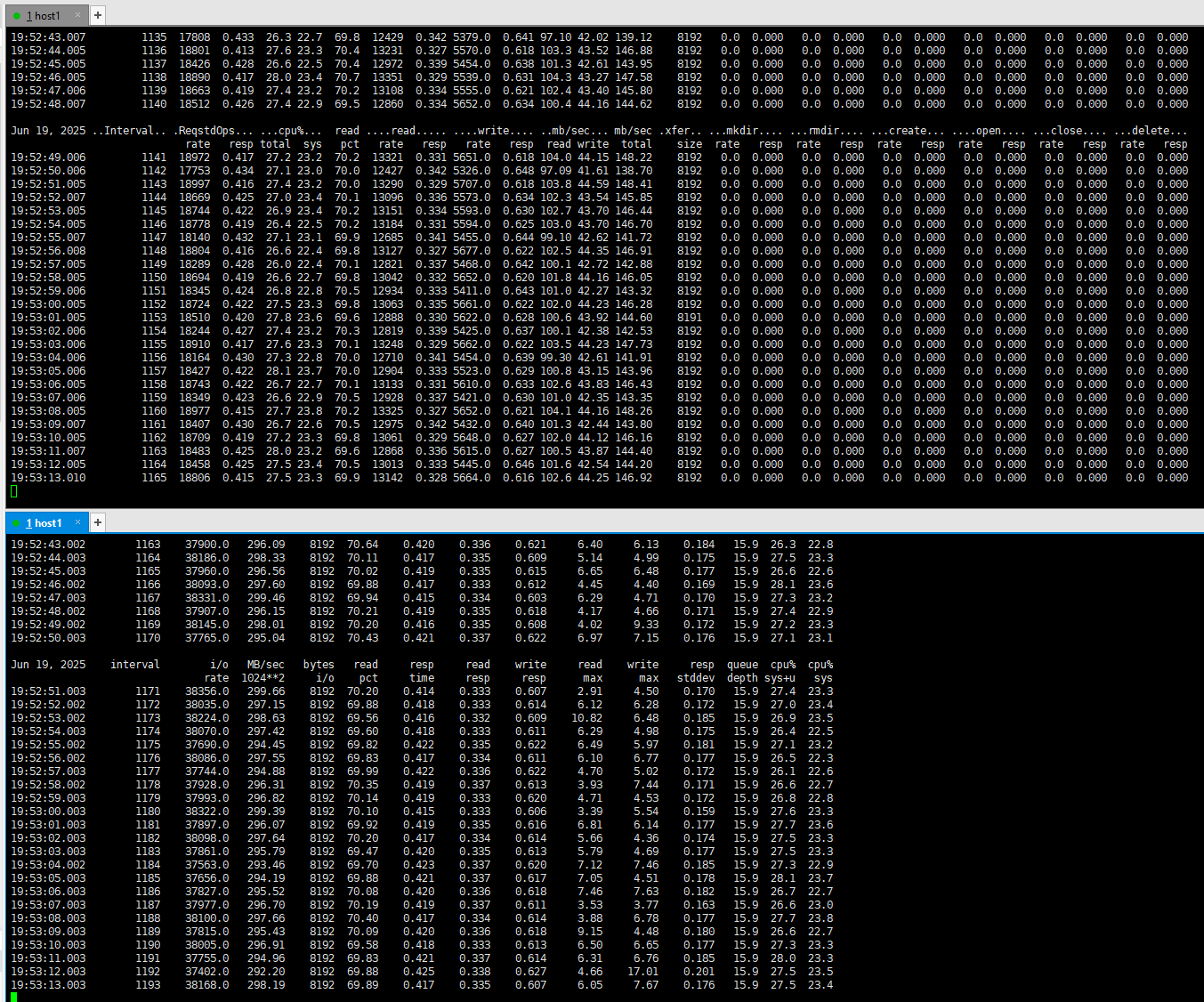

1.1 Networking Diagram

Figure 1.1 Storage and VMware ESXi Compatibility Test Networking

Networking description:

Both HBA ports of the host are connected to the switch.

Each storage controller is connected to the switch through 3 ports: 2 business ports and 1 replication link port.

Each host port on the switch is zoned with all storage business ports (2 zones, each containing one host port and 8 storage ports).

The replication link ports of the two storage systems are zoned correspondingly (2 zones, each containing one replication link port from each of the two storage systems).

Each controller has a network port configured with an IP to connect to the quorum server network.
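The zoning described above can be enumerated with a short sketch. All port names are hypothetical, since the report does not list WWPNs or port IDs. The layout of two controllers per storage system is an assumption inferred from the 8 business ports per zone, and the pairing of replication ports by controller is also assumed.

```python
from itertools import product

# Hypothetical names; the report does not list WWPNs or port IDs.
HOST_PORTS = ["host_hba_p0", "host_hba_p1"]
STORAGES = ["storage1", "storage2"]
CONTROLLERS = ["ctrlA", "ctrlB"]      # assumption: 2 controllers per storage system
BUSINESS_PORTS_PER_CONTROLLER = 2     # stated in the networking description


def business_ports():
    """Return every storage business port across both storage systems."""
    return [
        f"{storage}_{ctrl}_p{idx}"
        for storage, ctrl in product(STORAGES, CONTROLLERS)
        for idx in range(BUSINESS_PORTS_PER_CONTROLLER)
    ]


def host_zones():
    """One zone per host port: that host port plus all business ports."""
    ports = business_ports()
    return {f"zone_{host}": [host] + ports for host in HOST_PORTS}


def replication_zones():
    """Assumed pairing: the same controller position on each storage system."""
    return {
        f"zone_rep_{ctrl}": [f"{storage}_{ctrl}_rep" for storage in STORAGES]
        for ctrl in CONTROLLERS
    }
```

Under these assumptions, `host_zones()` yields 2 zones of 9 members each (1 host port and 8 storage ports), and `replication_zones()` yields 2 zones of 2 members each.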

1.2 Hardware and Software Configuration

1.2.1 Storage Configuration

Table 1-1 Storage Configuration Table

Name | Description | Quantity |

Storage | OceanStor Dorado All-Flash Storage | 2 |

Storage | OceanStor Hybrid Flash Storage | 2 |

1.2.2 Other Hardware Configuration

Table 1-2 Hardware Configuration

Name | Description | Quantity | Usage |

VMware ESXi Server | | 2 | Host Server |

Fibre Channel Switch | Brocade G610 32G Fibre Channel Switch | 1 | Service Network Switch |

Ethernet Switch | Huawei CE6850 Ethernet Switch | 1 | Service Network Switch |

1.2.3 Test Software and Tools

Table 1-3 Test Software and Tool List

Software Name | Description | Version |

VMware ESXi | VMware Virtualization Platform | 9.0 |

Vdbench | IO Test Tool | 50407 |

Red Hat Enterprise Linux | Virtual Machine | 8.8 |

2. Basic Connectivity Test

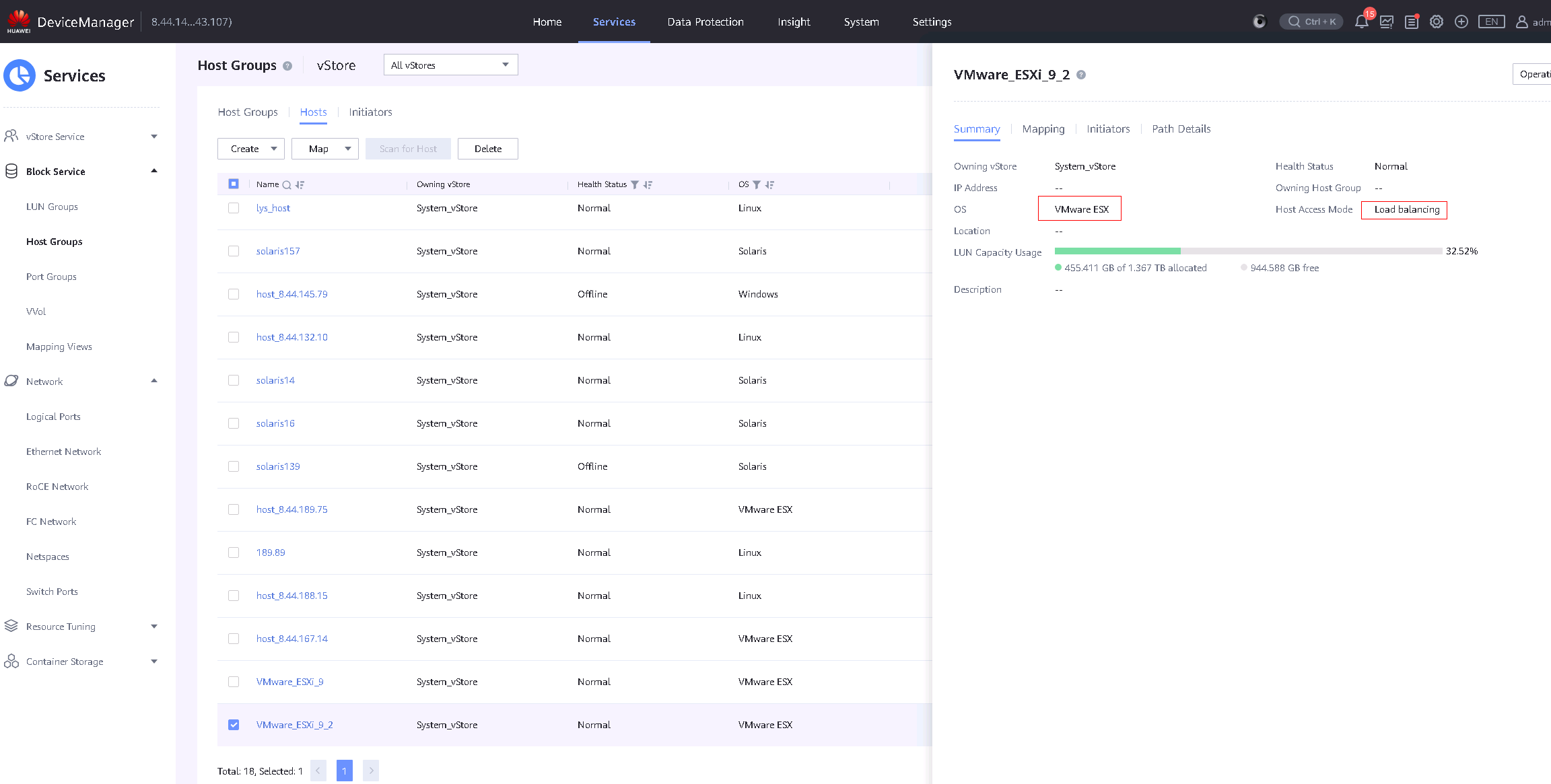

2.1 Port Mapping

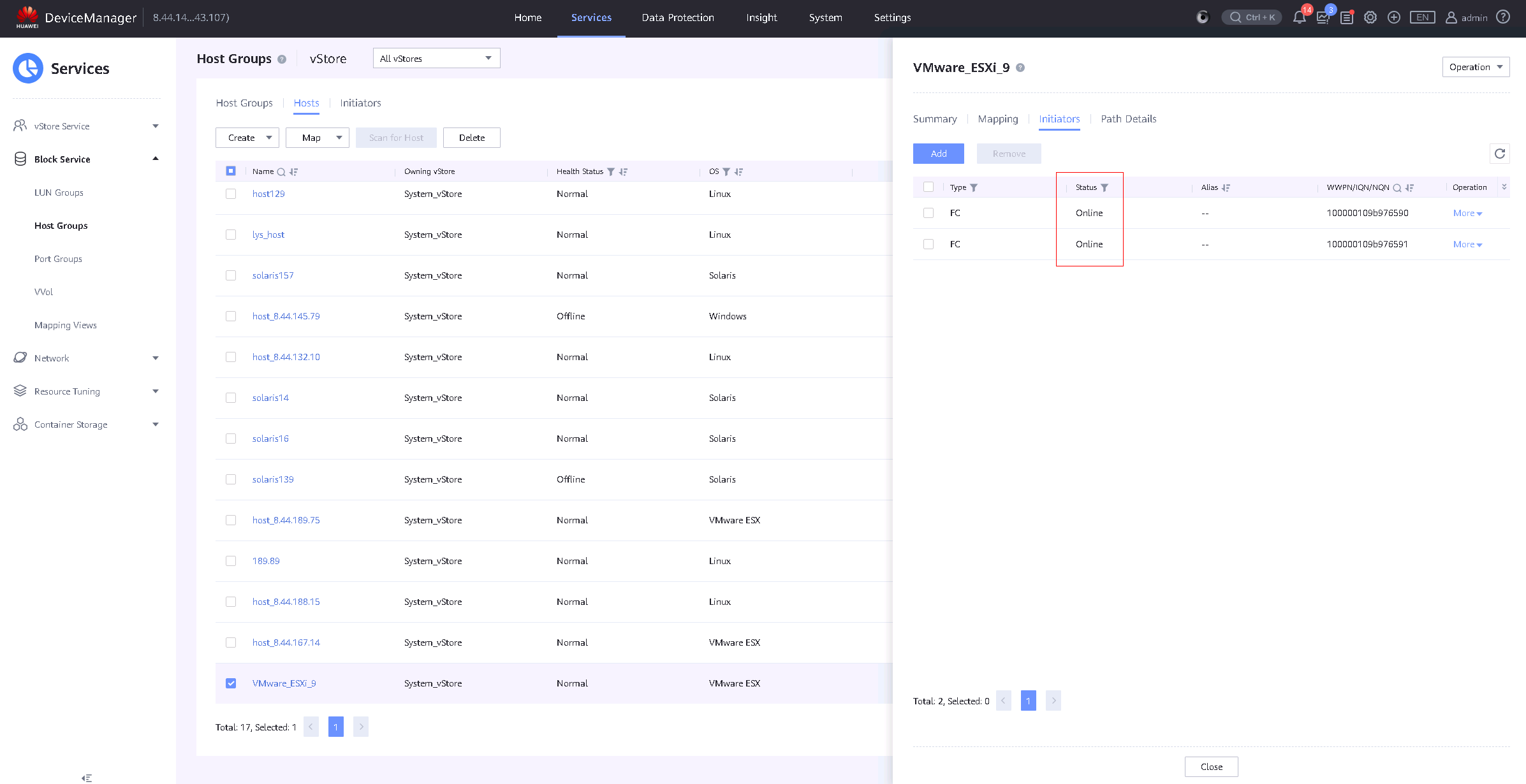

Test Purpose | To Verify That the Host Initiator Status is Online |

Test Networking | Storage and VMware ESXi Compatibility Test Networking |

Prerequisites | 1.The network has been correctly set up according to the corresponding scenario and the physical links are functioning properly. 2.The host system is operating normally. 3.The storage system is operating normally. |

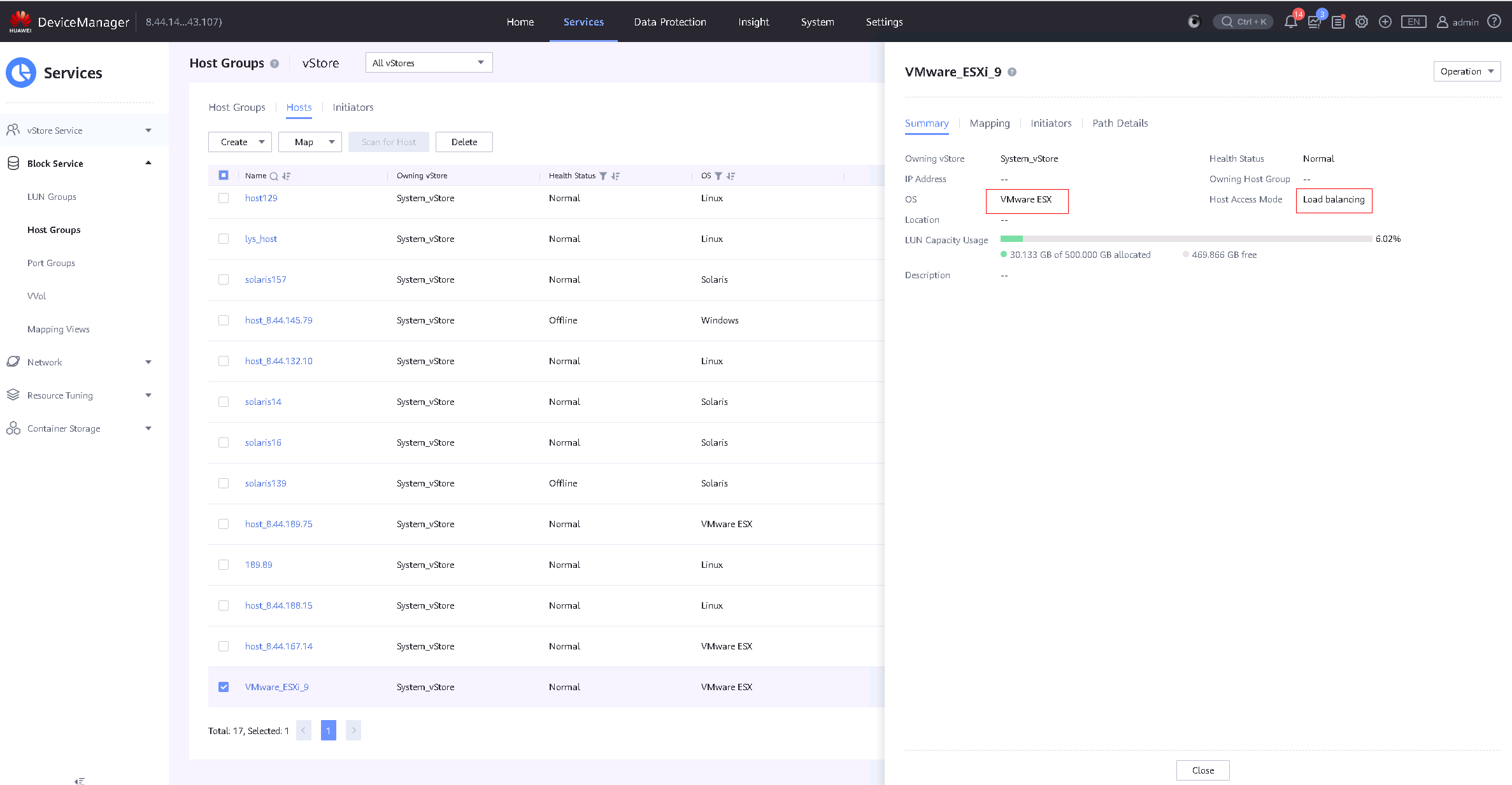

Test Procedure | 1.Log into the storage management system, add the host initiator to the corresponding host, and configure the host mode to load balancing. 2.Observe the initiator connection status. |

Expected Result | 1.In step 1, the host initiator is added successfully and host mode is configured correctly. 2.In step 2, the host initiator status is online. |

Test Record |

1.In step 1, the host initiator is added successfully and host mode is configured correctly.

2.In step 2, the host initiator status is online.

|

Test Conclusion | Passed |

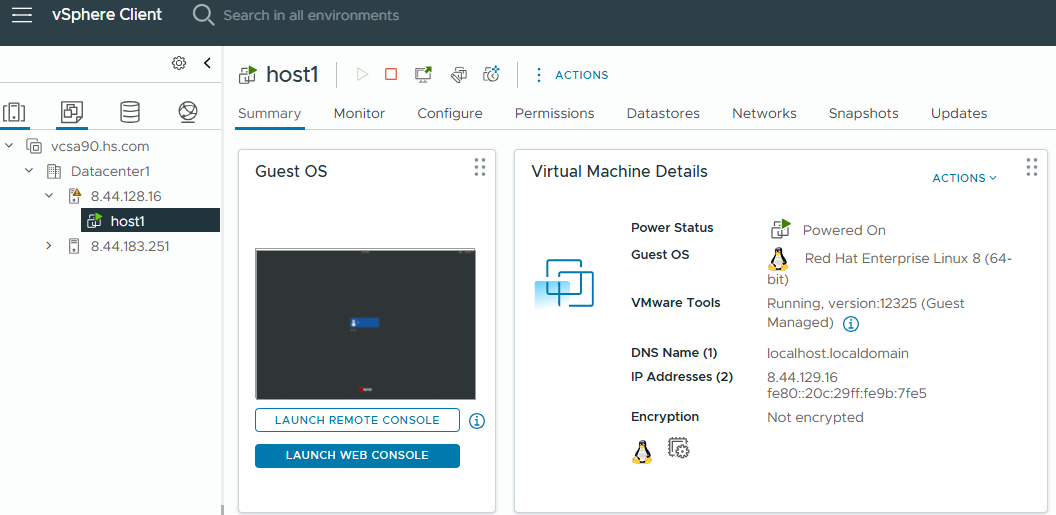

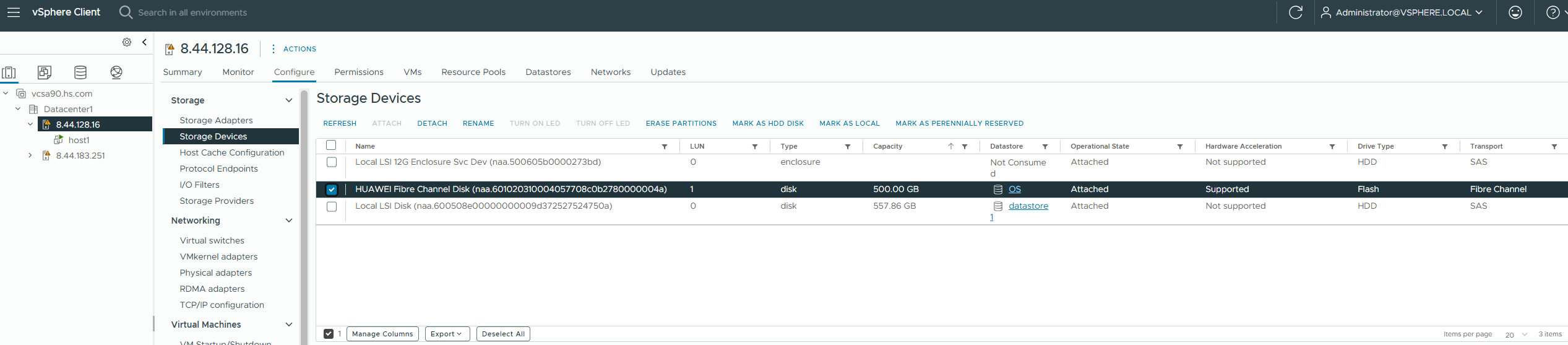

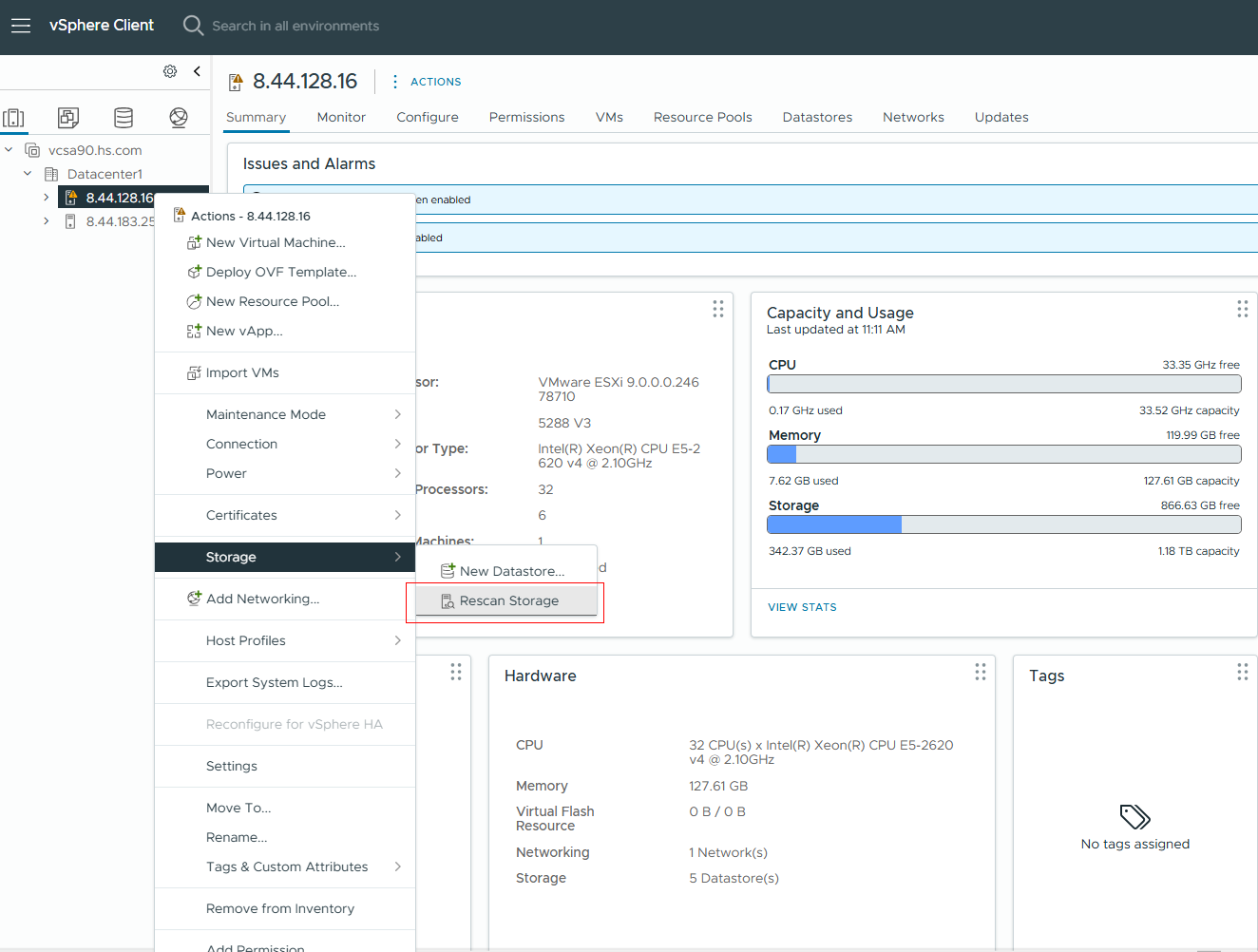

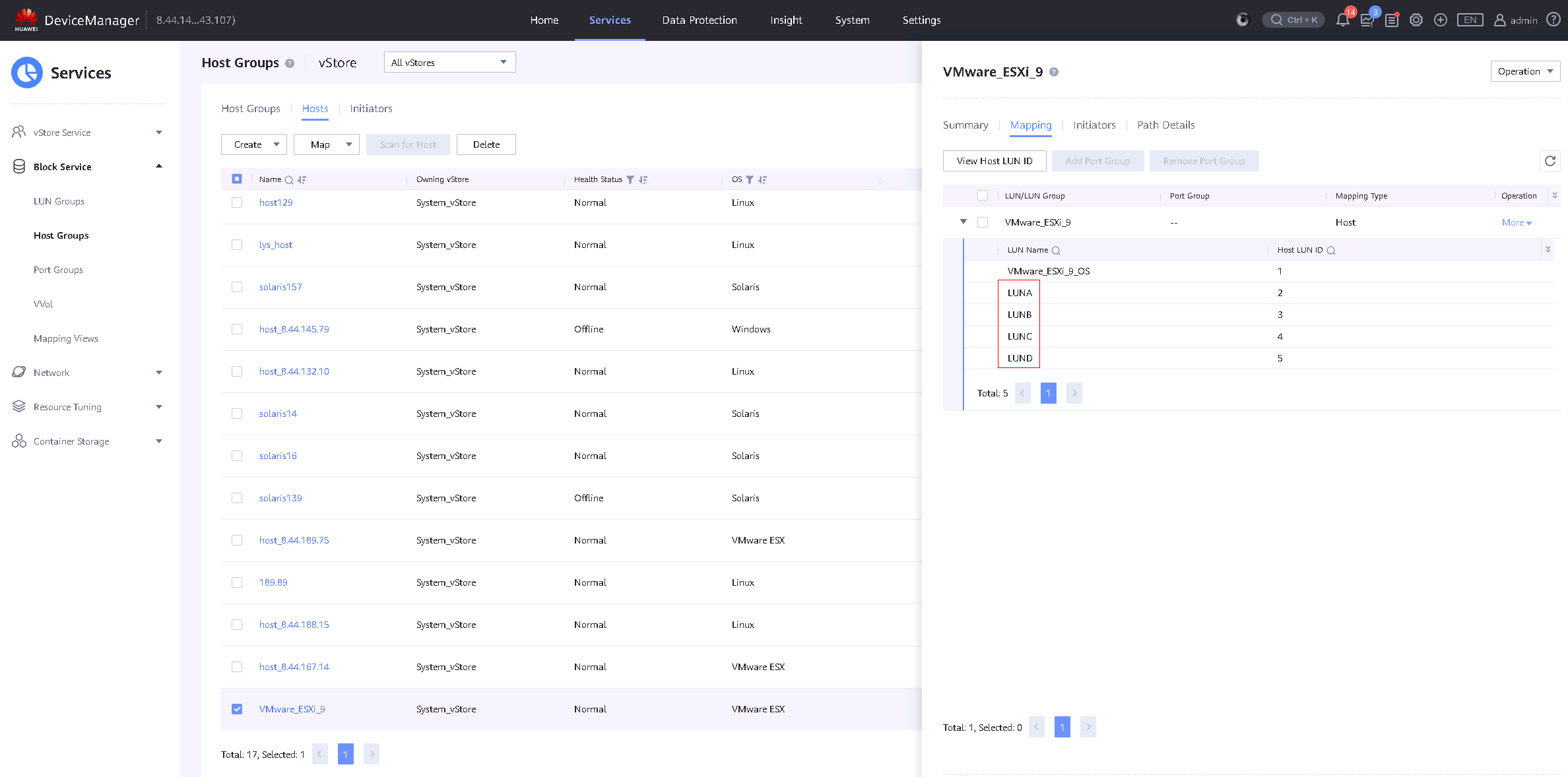

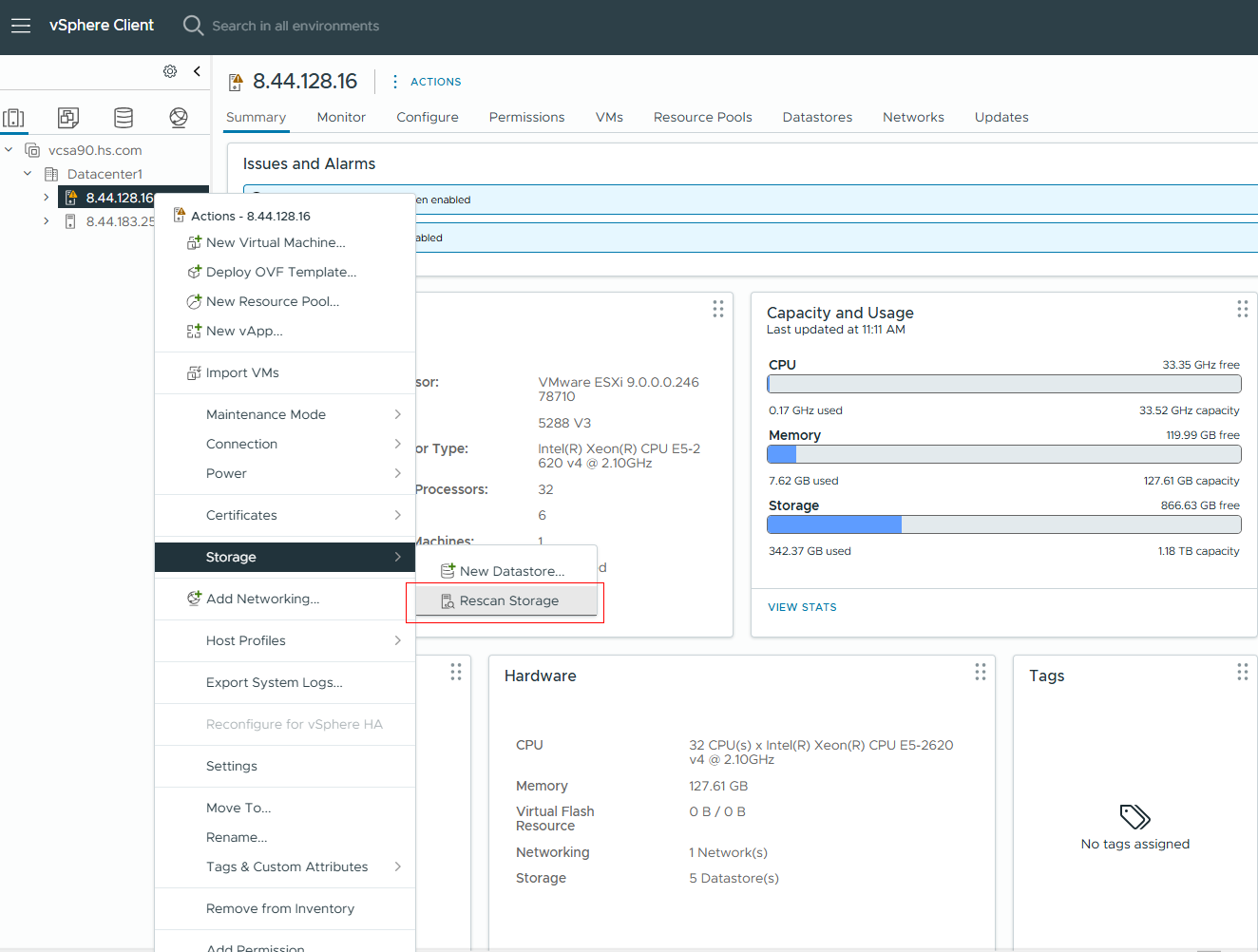

2.2 Virtual Machine Deployment

Test Purpose | To Verify That the Virtual Machine Could be Deployed on the LUN Mapped from Storage |

Test Networking | Storage and VMware ESXi Compatibility Test Networking |

Prerequisites | 1.The physical link is functioning properly. 2.The host system is operating normally. 3.The storage system is functioning properly. |

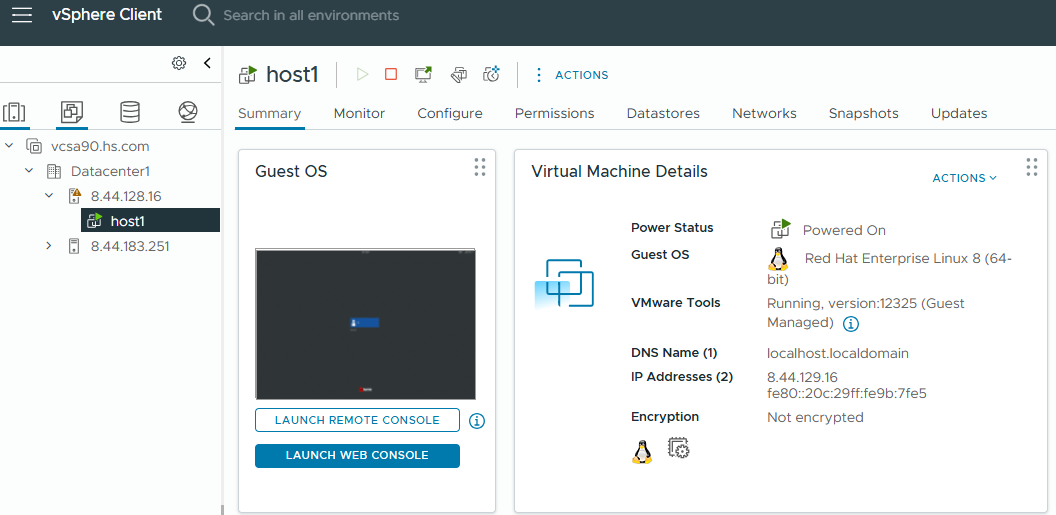

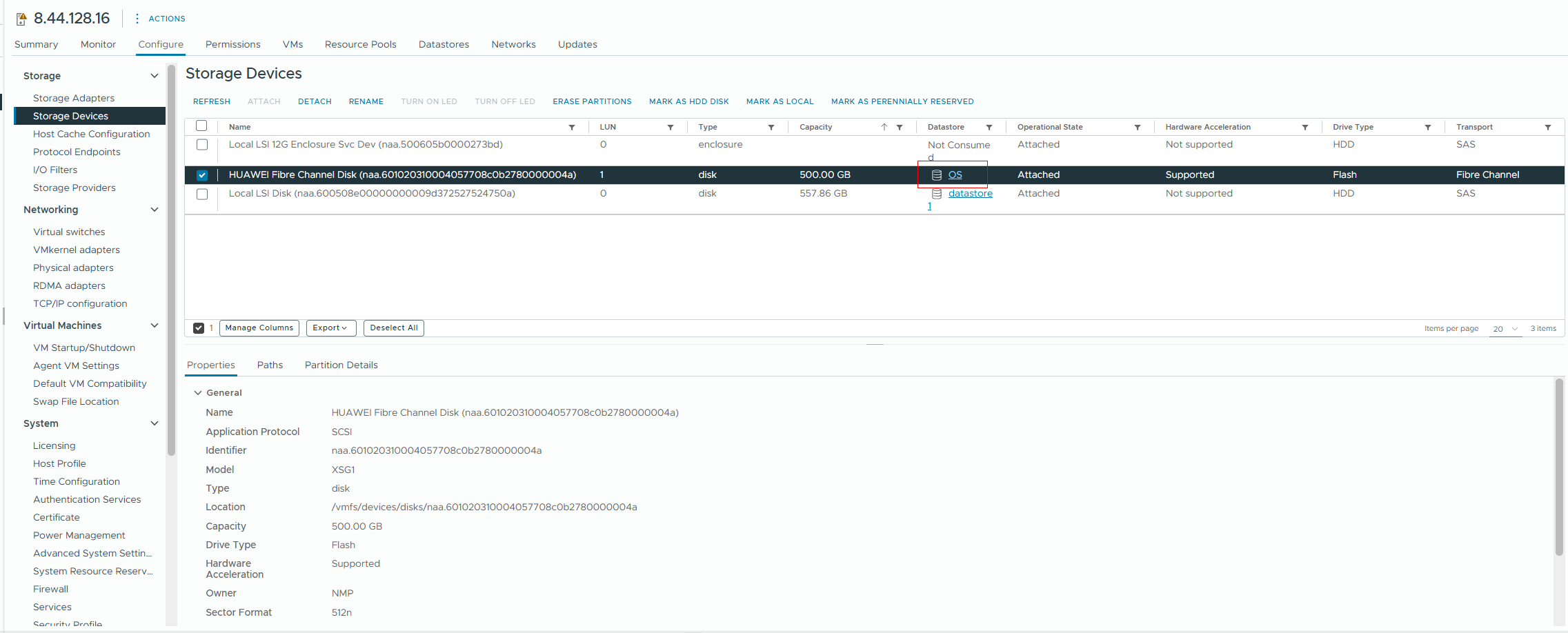

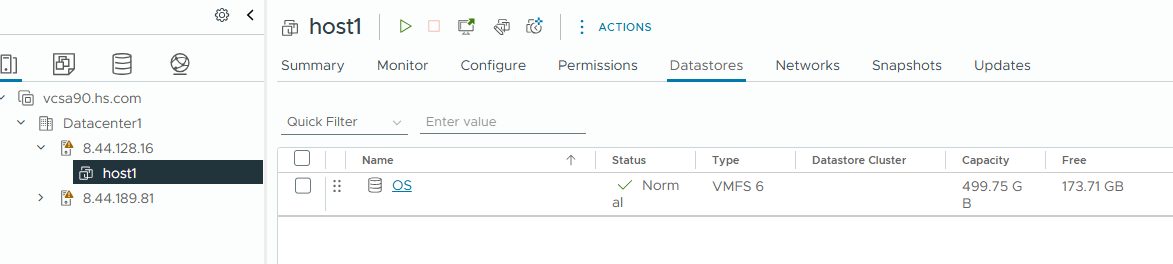

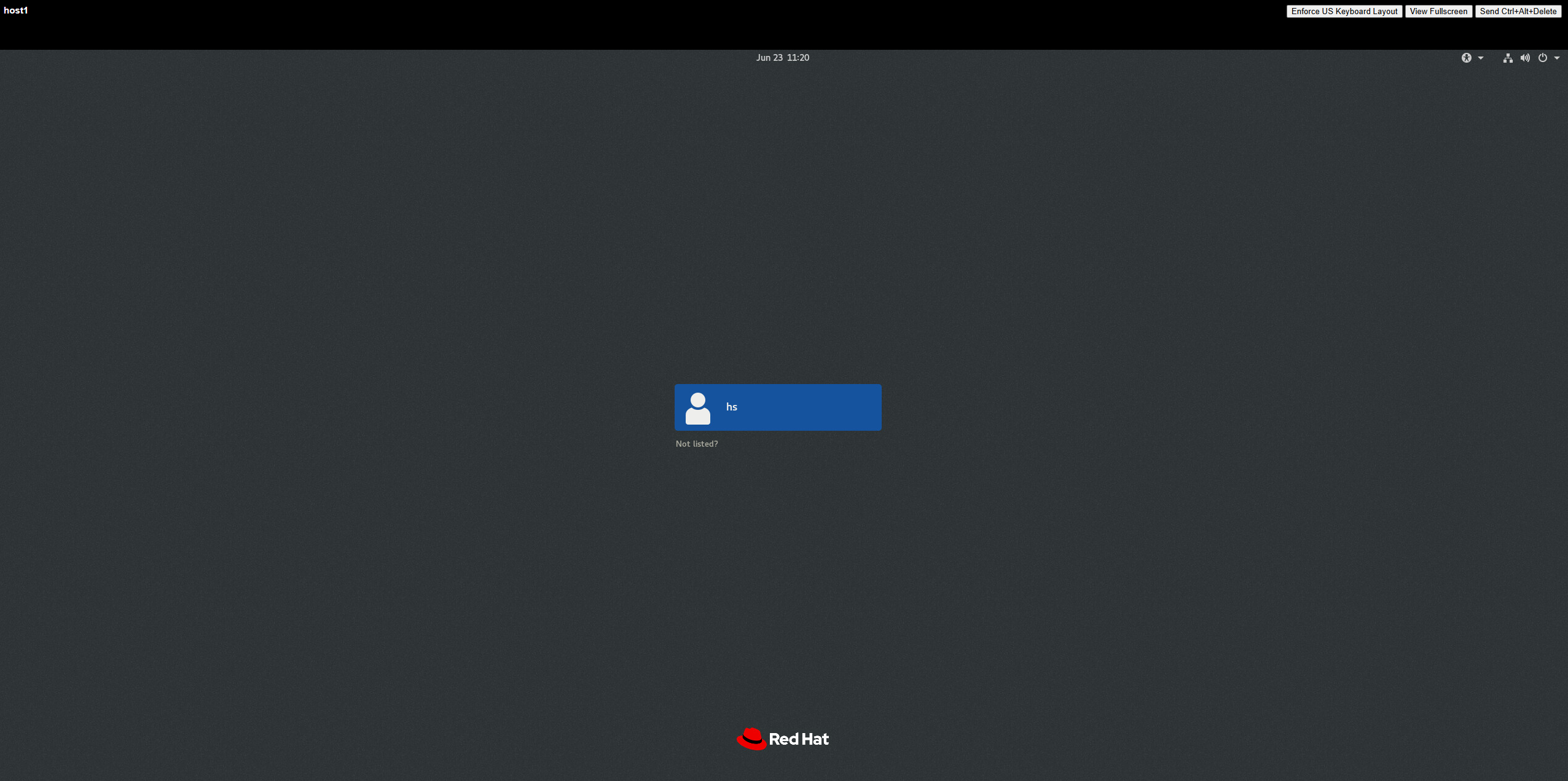

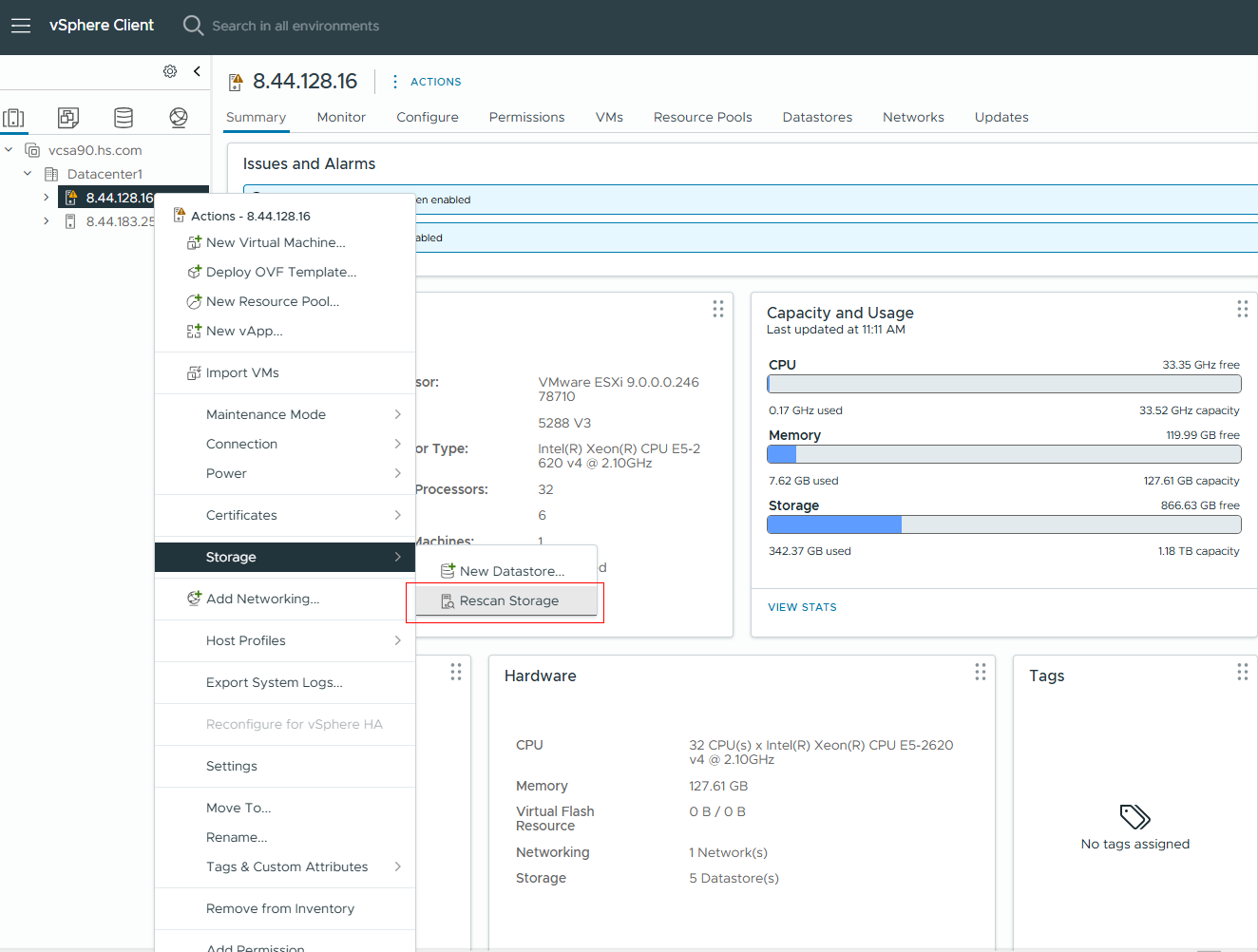

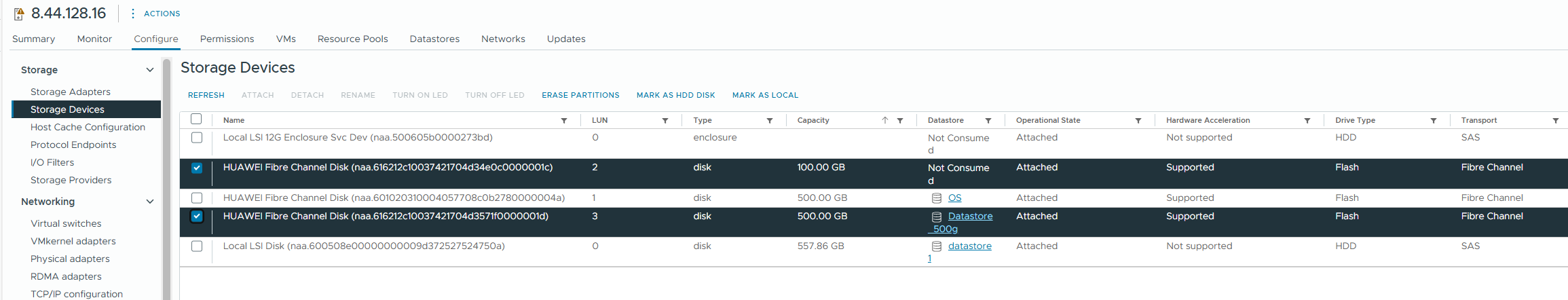

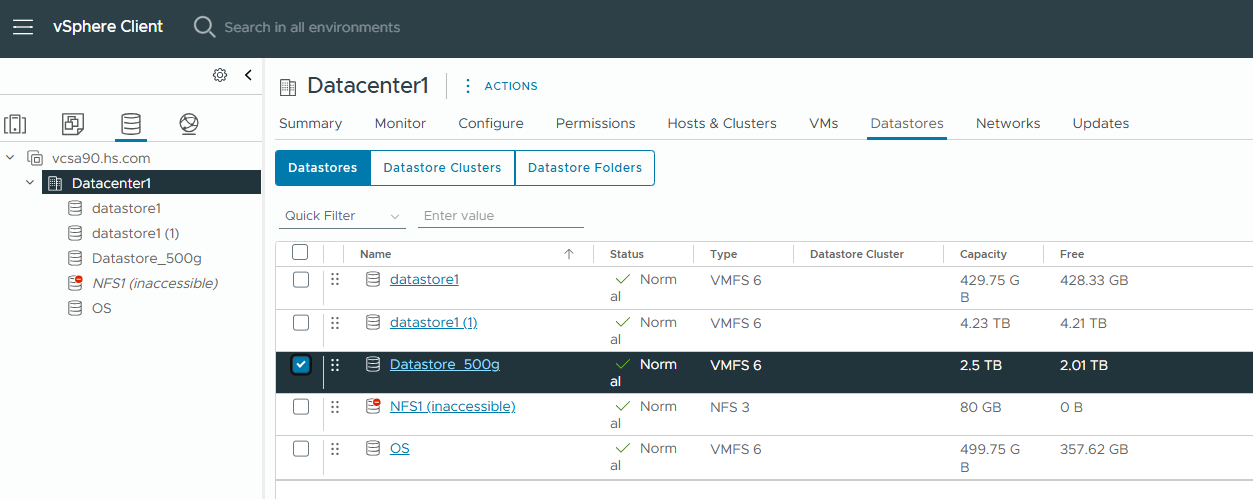

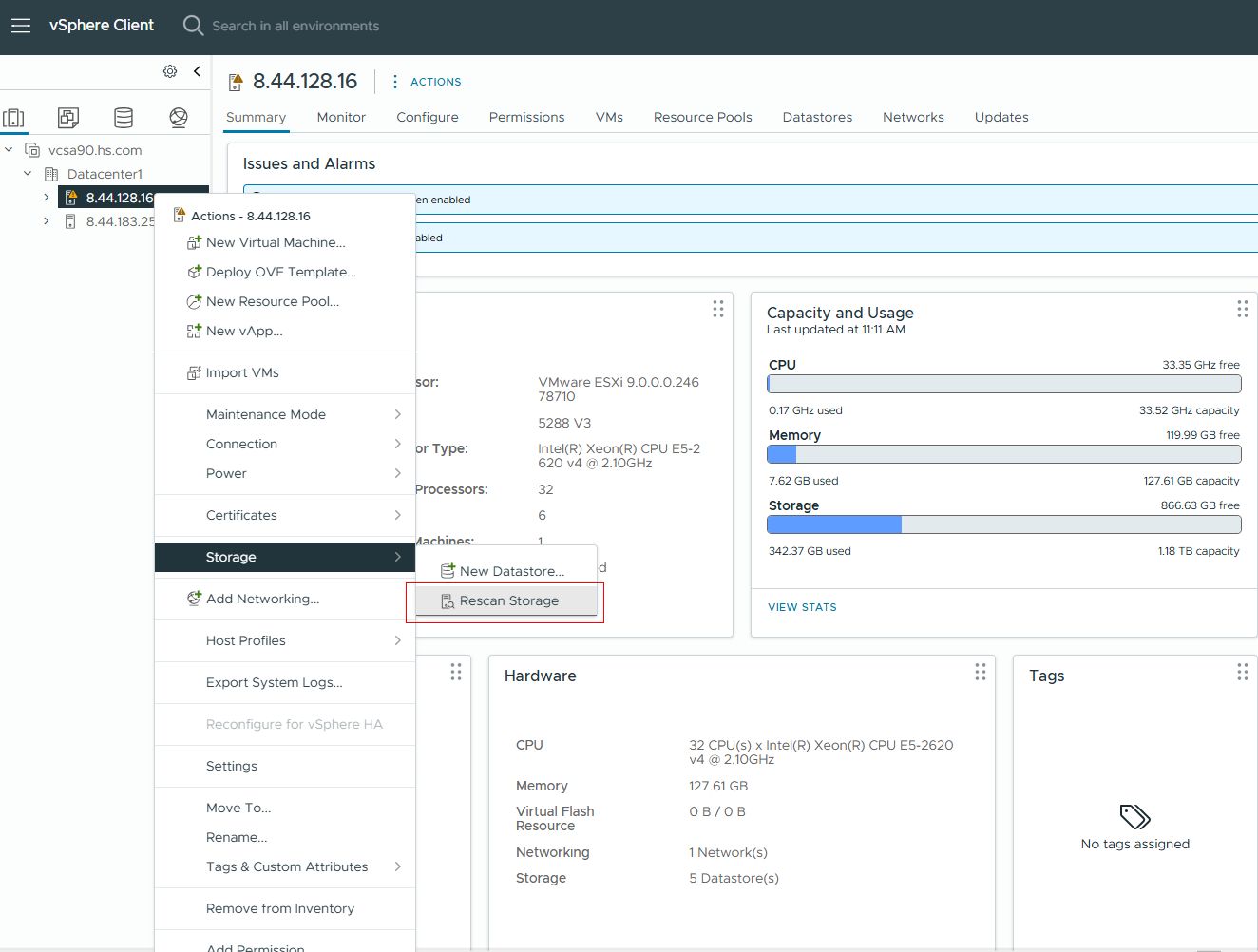

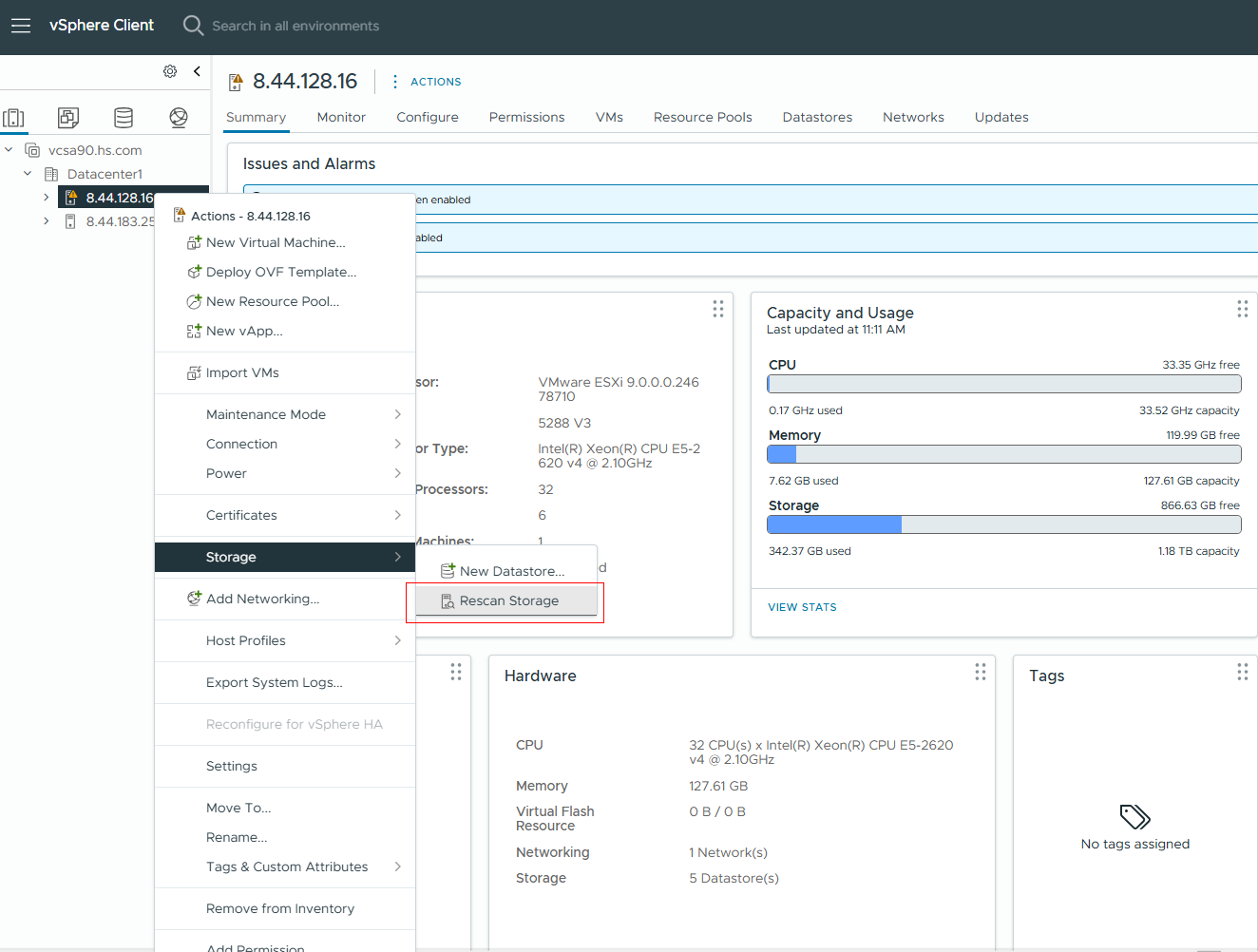

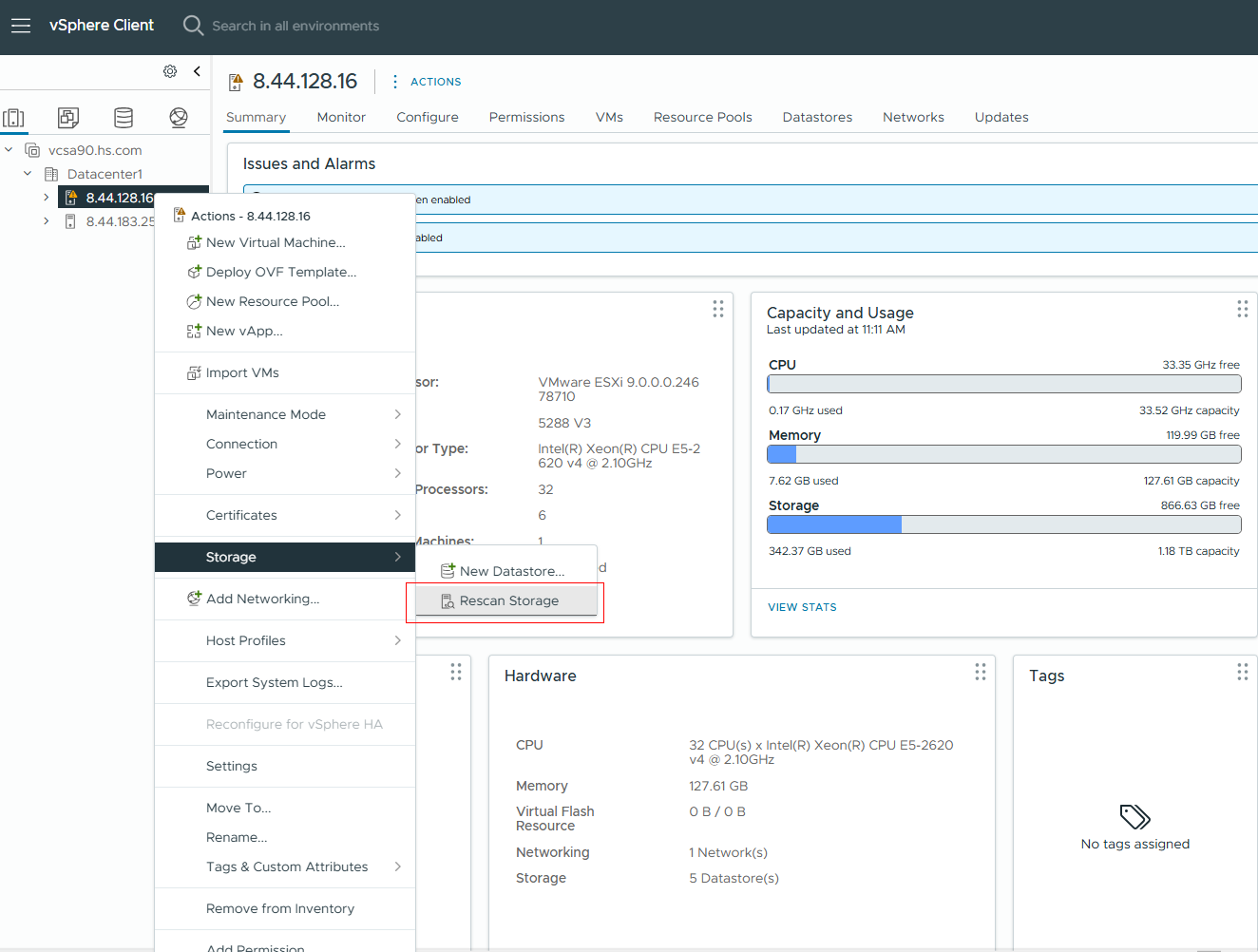

Test Procedure | 1.Log into the storage management system and map a 500GB LUN from the storage to the host. 2.Log into vSphere vCenter, perform a « Rescan Storage » to scan the LUNs, and create a datastore on the LUN. 3.Log into the VMware ESXi host management interface through vSphere vCenter. 4.In the device tree, select the VMware ESXi host on which the virtual machine will be created. 5.Right-click the VMware ESXi host, click « New Virtual Machine », and follow the wizard to create the virtual machine on the datastore created in step 2. 6.Install the operating system on the newly created virtual machine. |

Expected Result | 1.In step 2, the datastore is created successfully. 2.In step 5, the virtual machine is created successfully. 3.In step 6, the installation of the guest operating system (GOS) is completed successfully. |

Test Record | 1.LUN mapping is successful, and the datastore can be created.

2.The virtual machine was created successfully.

3.The operating system was installed successfully.

|

Test Conclusion | Passed |

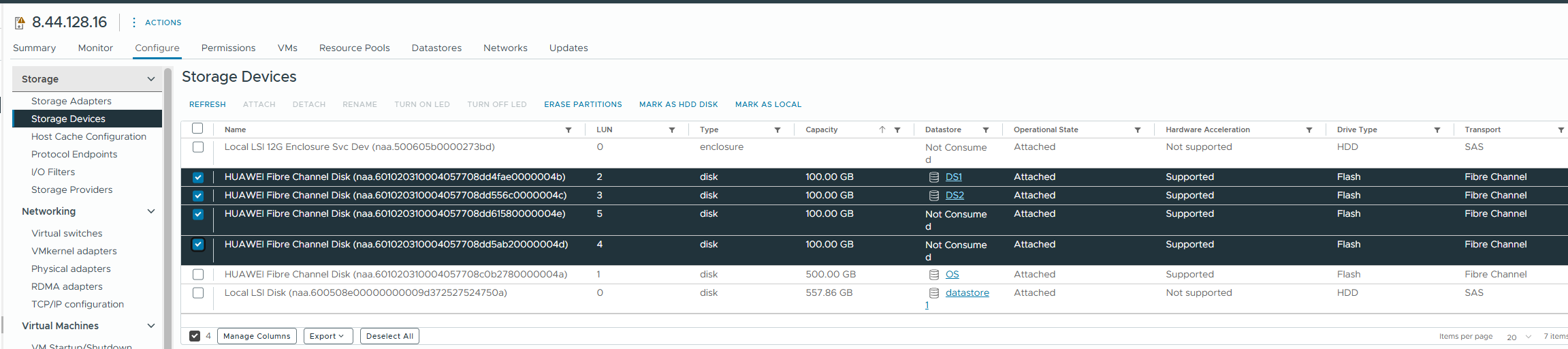

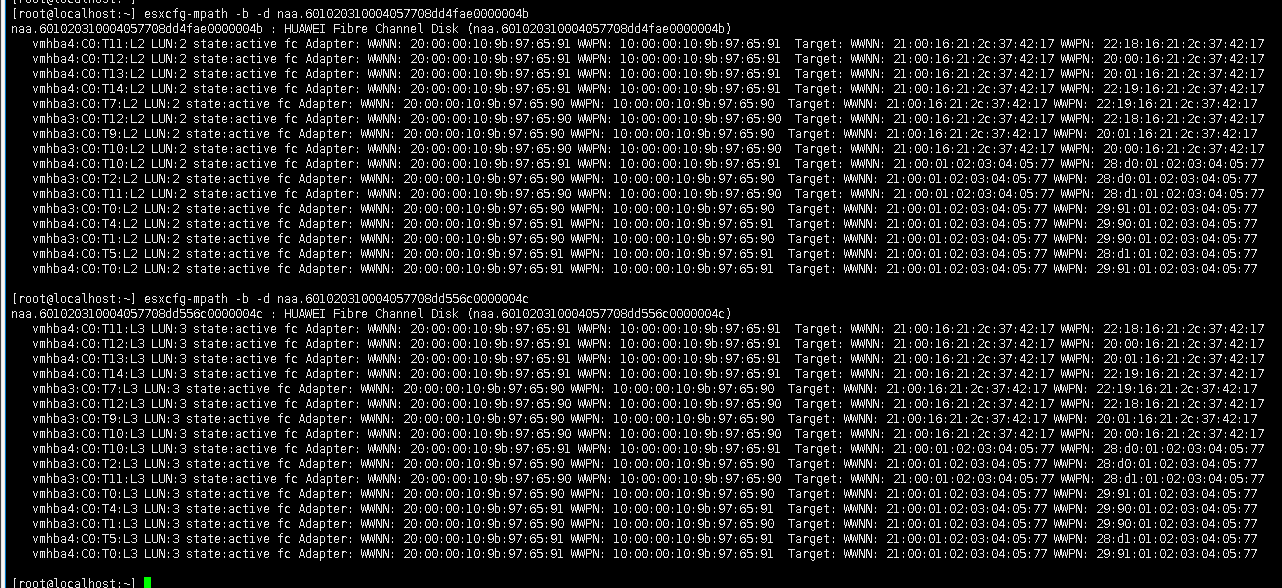

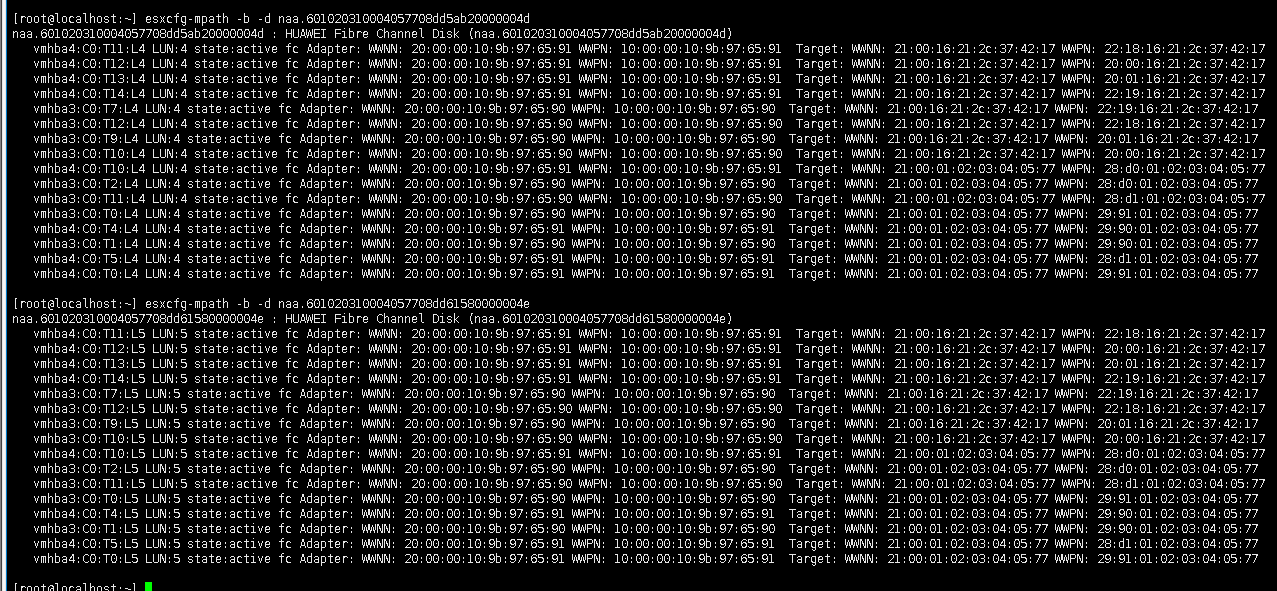

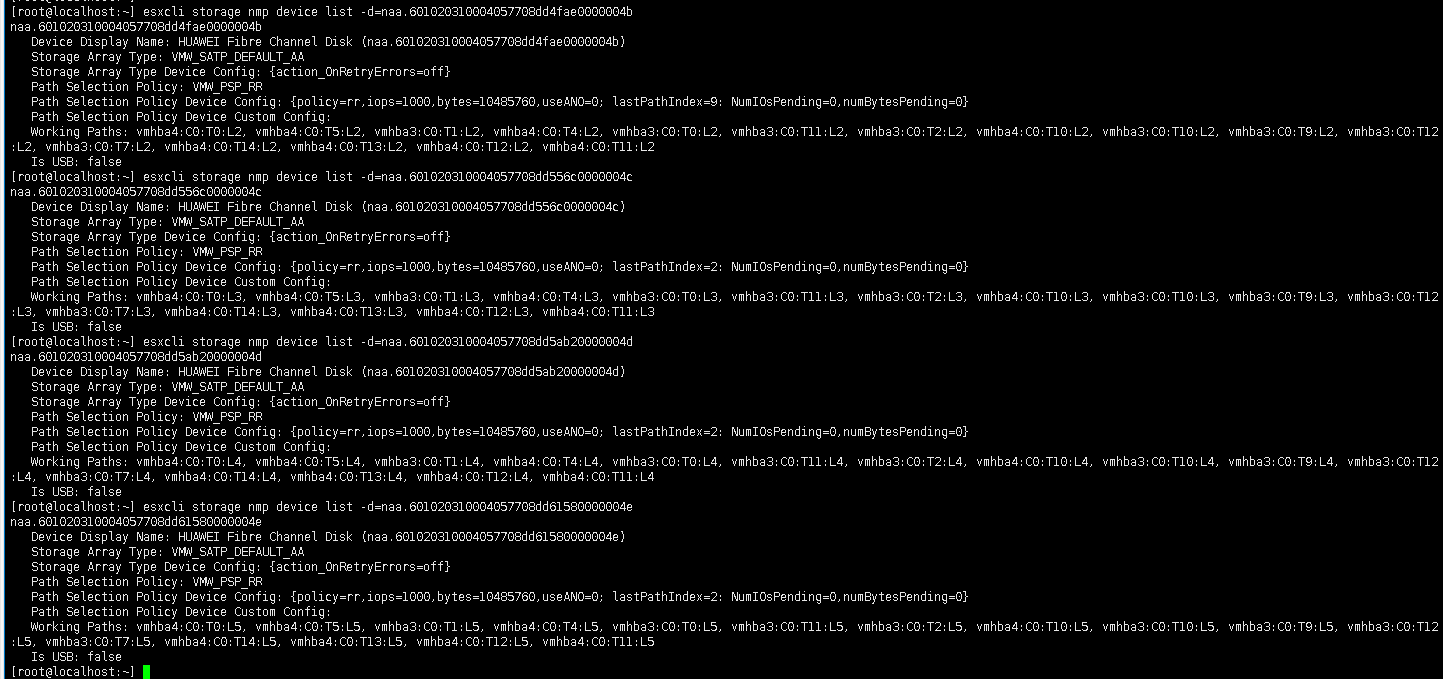

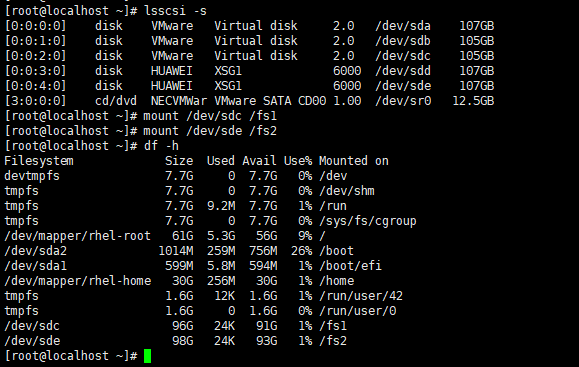

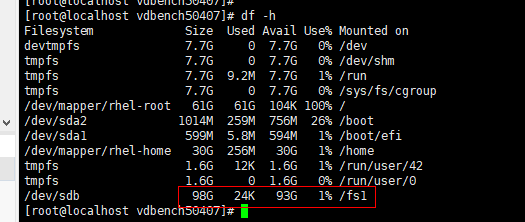

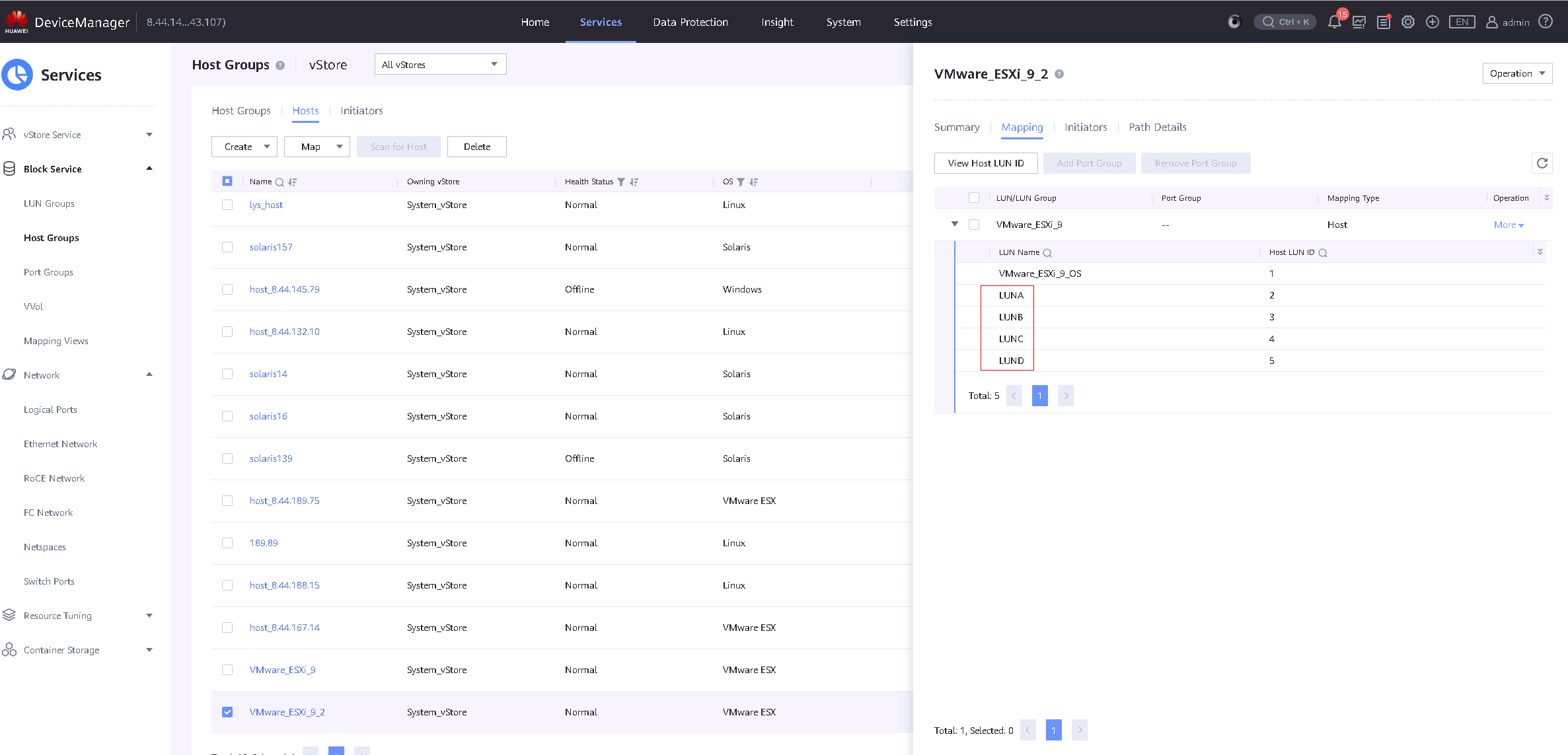

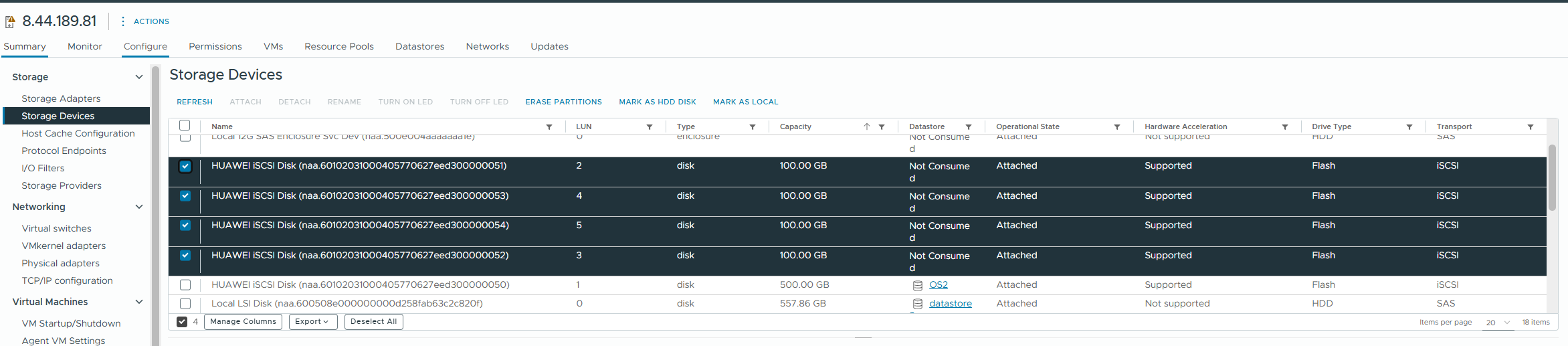

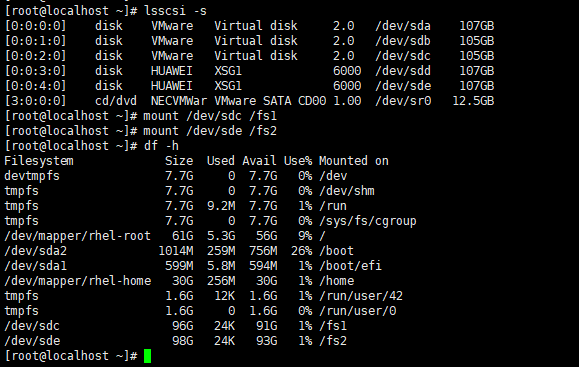

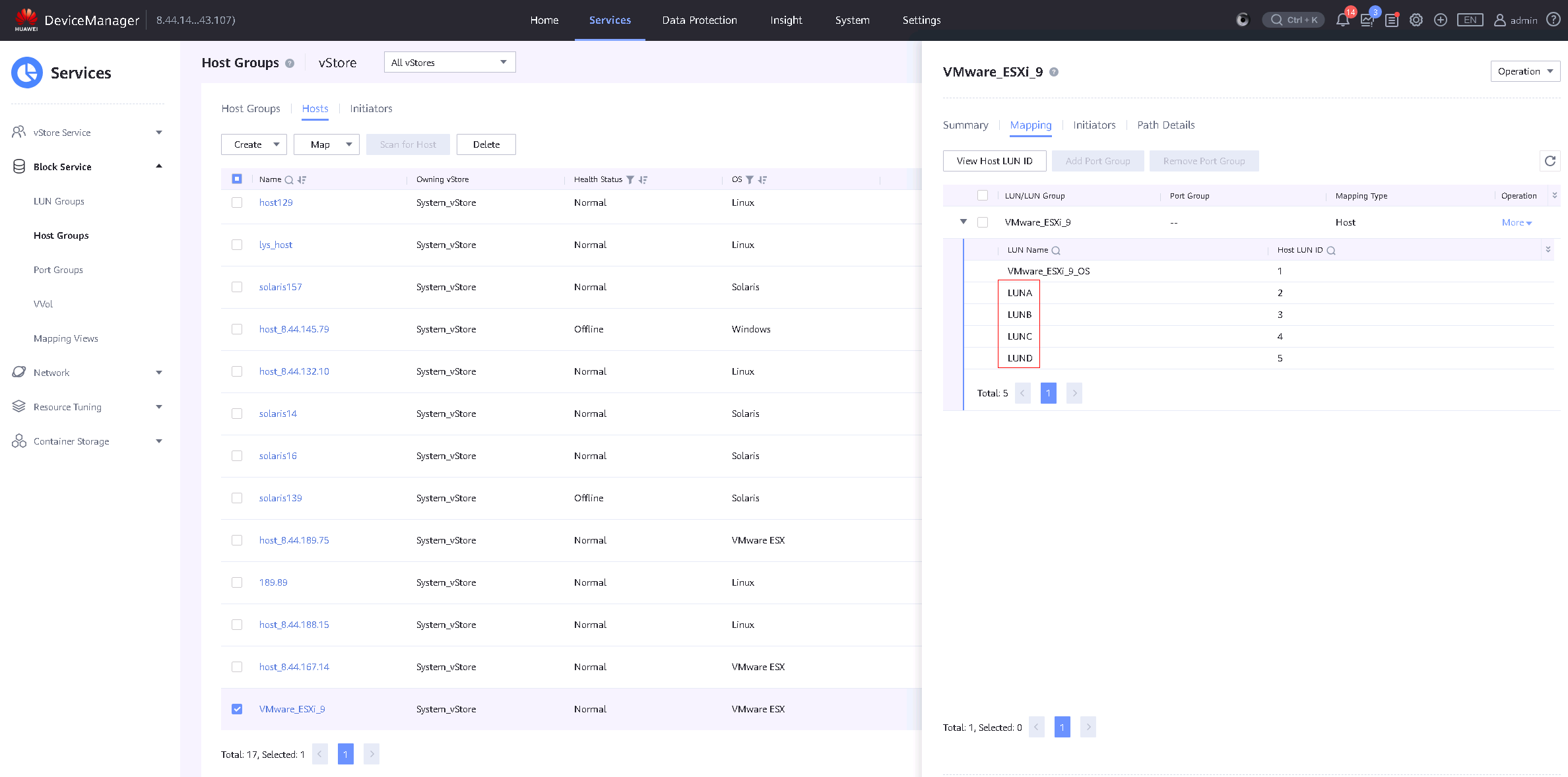

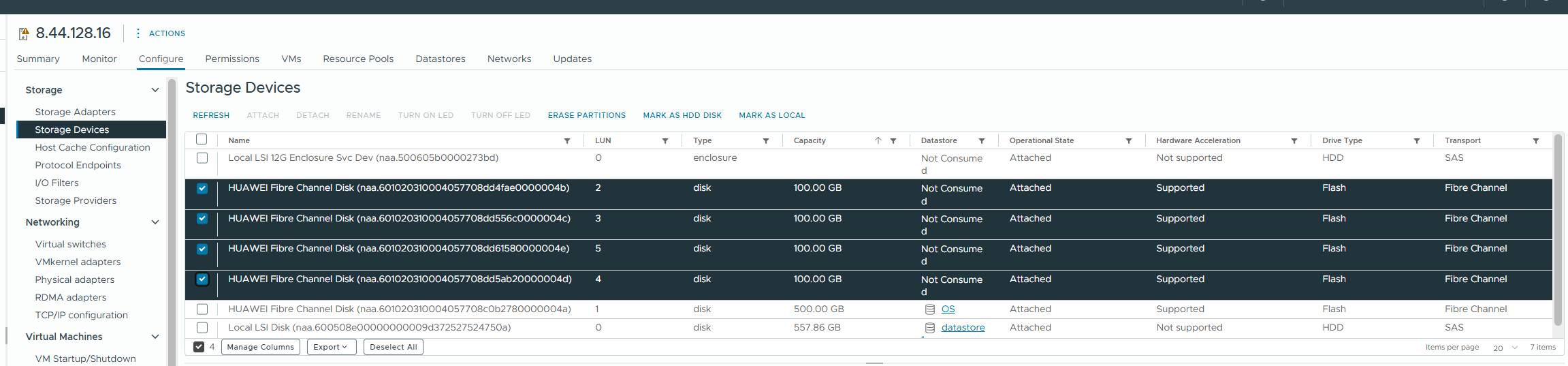

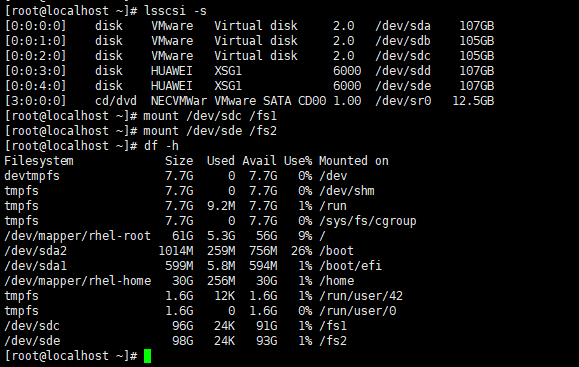

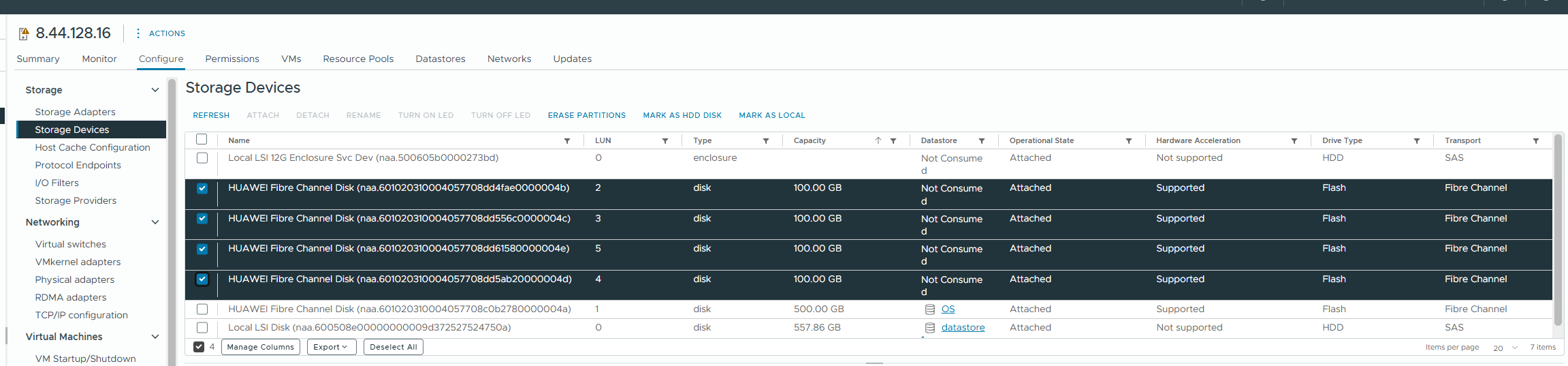

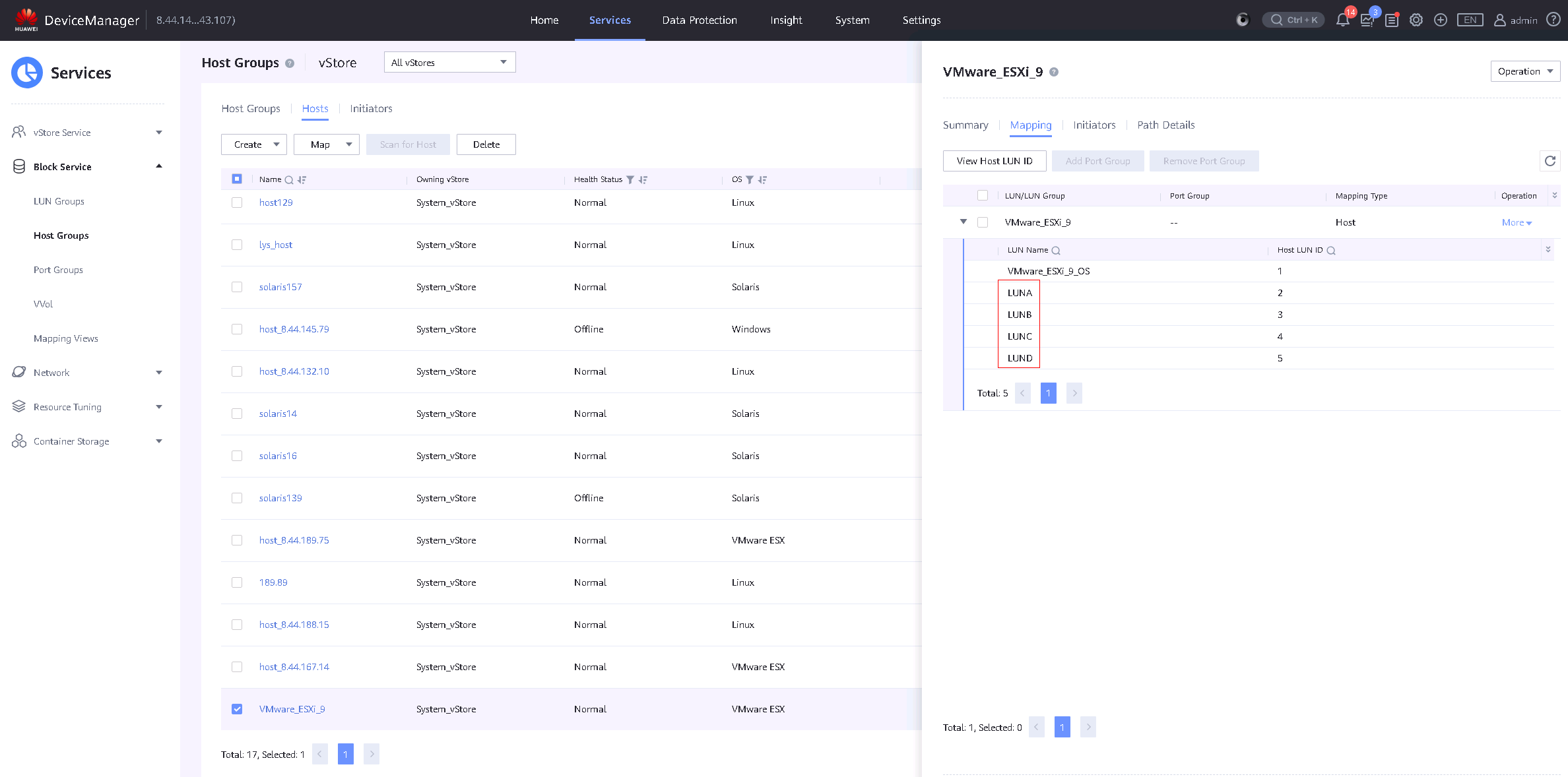

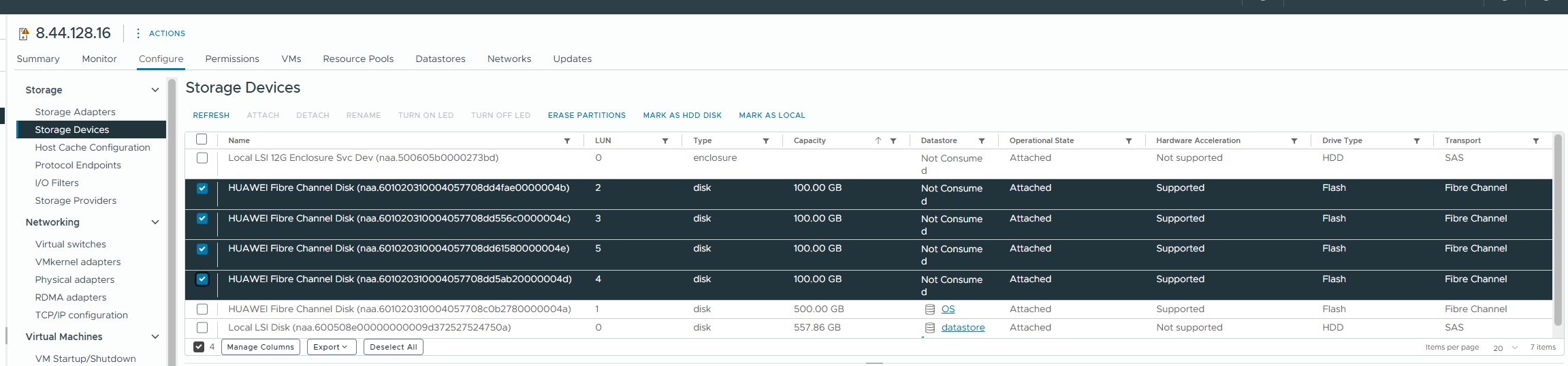

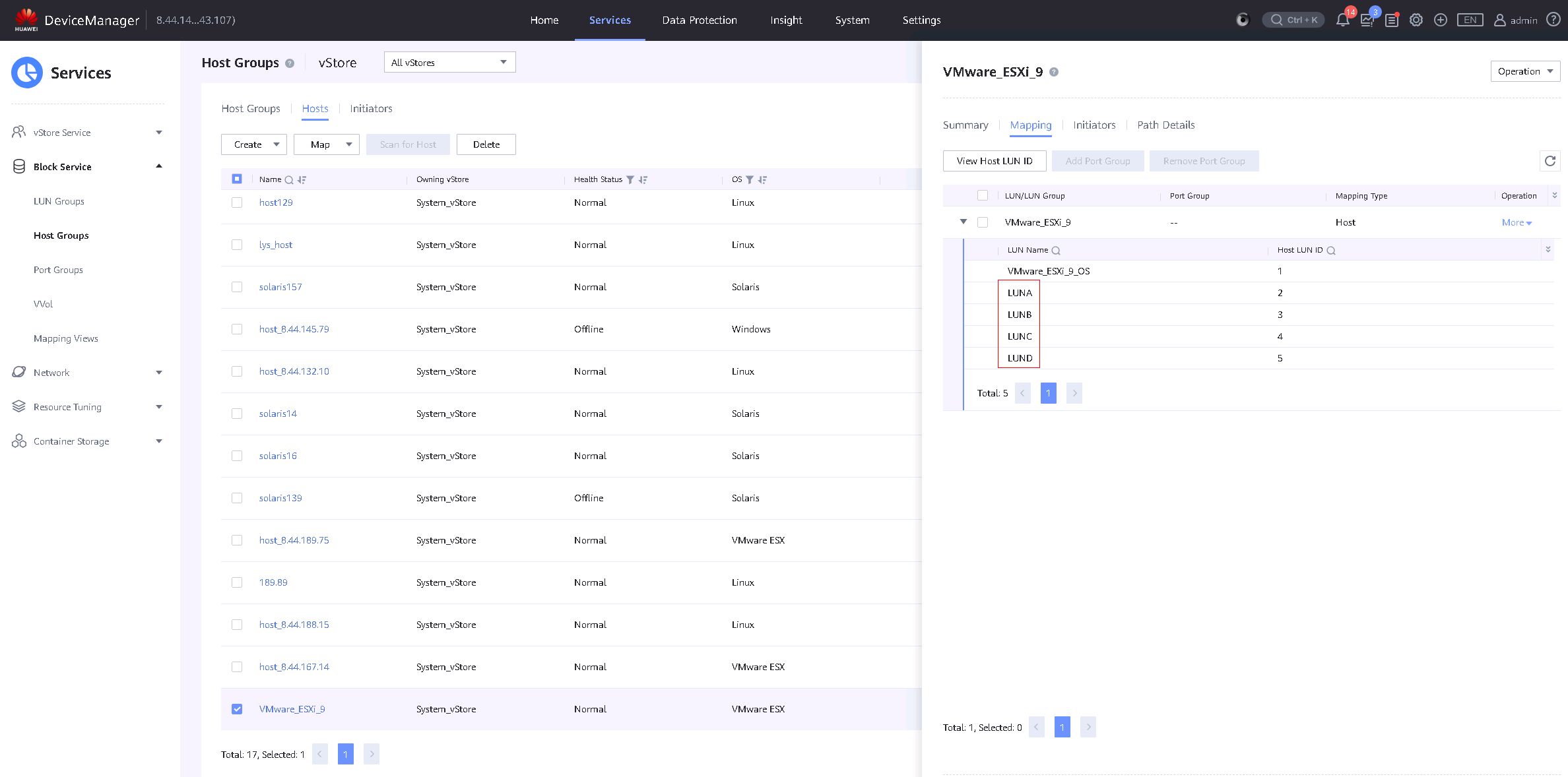

2.3 LUN Read/Write Operations

Test Purpose | To Verify That the Virtual Machine is able to Perform Read/Write Operations on the LUN Mapped from Storage |

Test Networking | Storage and VMware ESXi Compatibility Test Networking |

Prerequisites | 1.The network has been correctly set up according to the corresponding scenario and the physical links are functioning properly. 2.The host system is operating normally. 3.The storage system is functioning properly. 4.Multipath has been properly installed and configured. 5.The LUN mapping is normal. 6.The system disk used by the test virtual machine is created on the datastore established on the mapped LUN. |

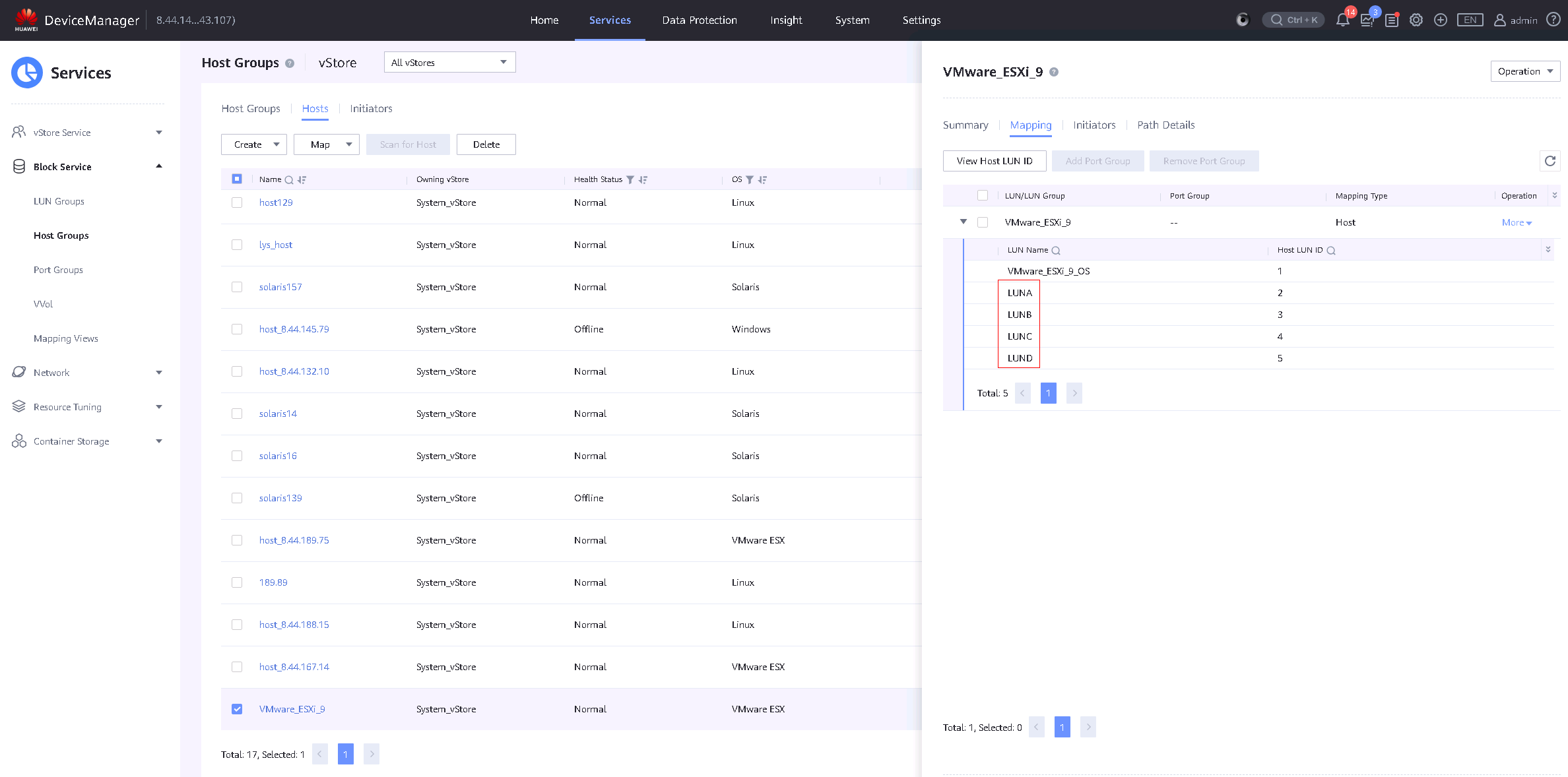

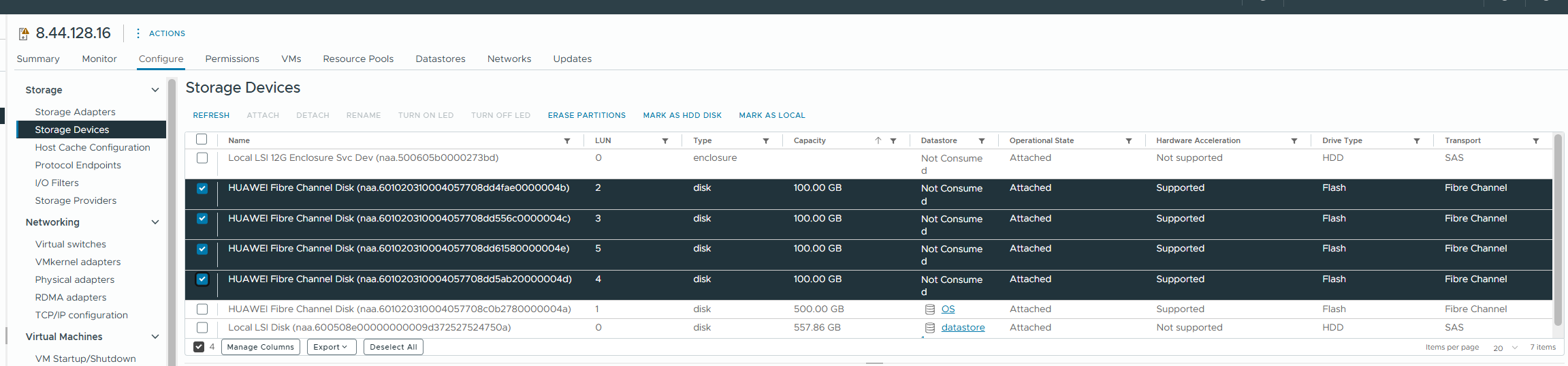

Test Procedure | 1.Log into the storage management system, create the mappings, and add four 100GB LUNs to the host. 2.Log into vSphere vCenter and perform a « Rescan Storage » to scan the LUNs and view disk information. 3.Create datastores on LUN A and LUN B, and add them to the VM as « Virtual Disks »; add LUN C and LUN D to the VM as « RDM Disks ». 4.In the VM, perform raw disk read/write operations on LUN A and LUN C using vdbench, and observe the I/O performance. On LUN B and LUN D, create file systems, perform file system read/write operations using vdbench, and observe the I/O performance. |

Expected Result | 1.In step 2, the host is able to detect the four mapped LUNs. 2.In step 4, the VM is able to perform read/write operations on all four disks without issues. |

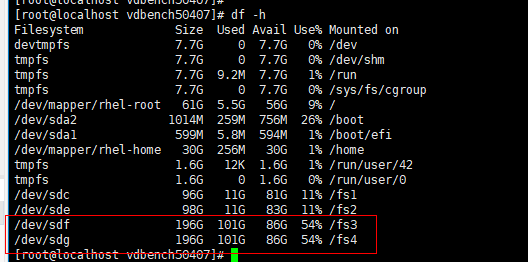

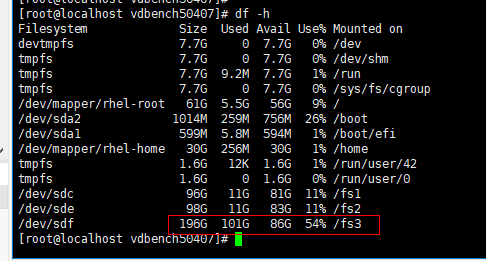

Test Record |

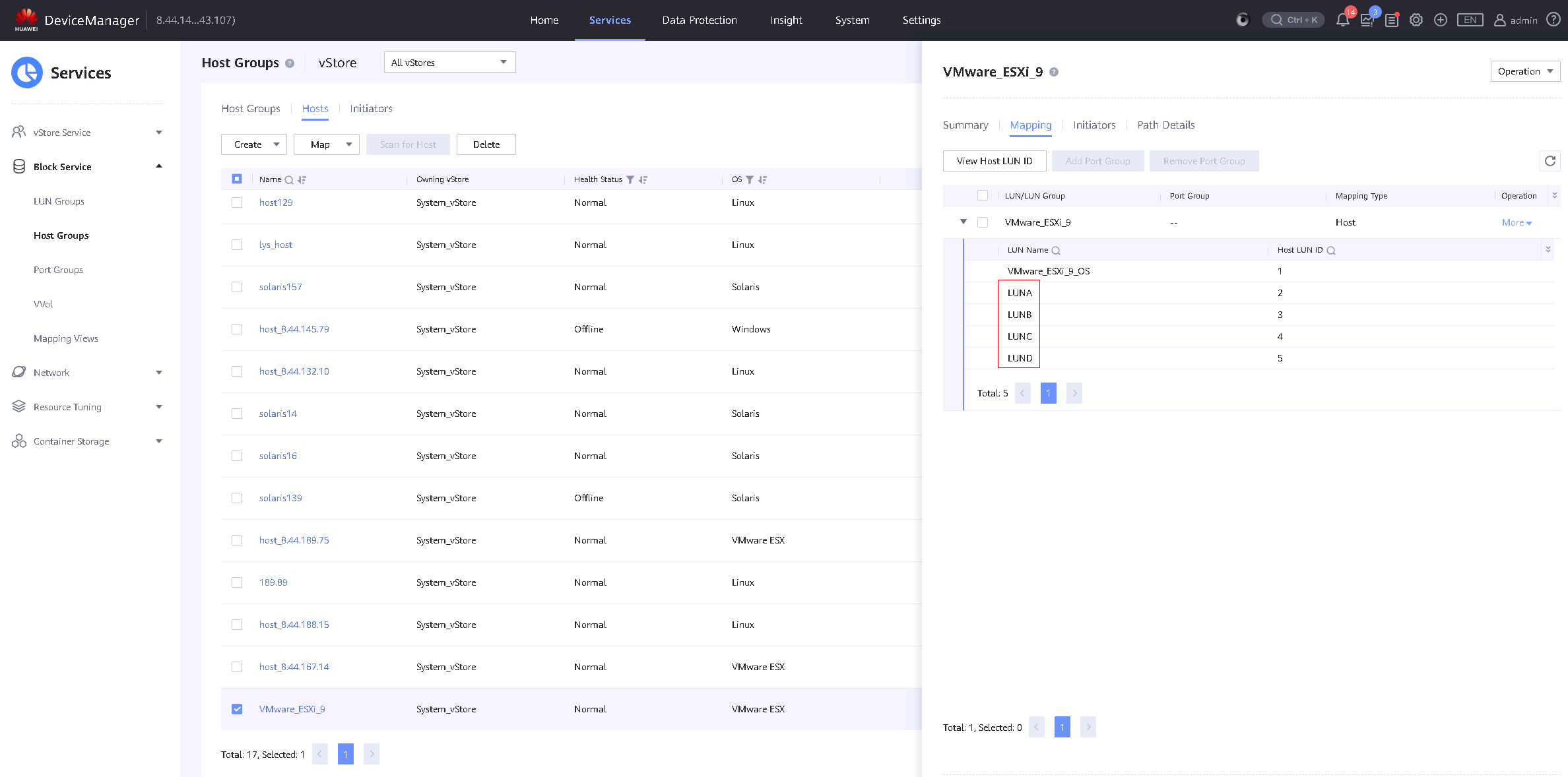

1.Log into the storage management system, create the mappings, and add four 100GB LUNs to the host.

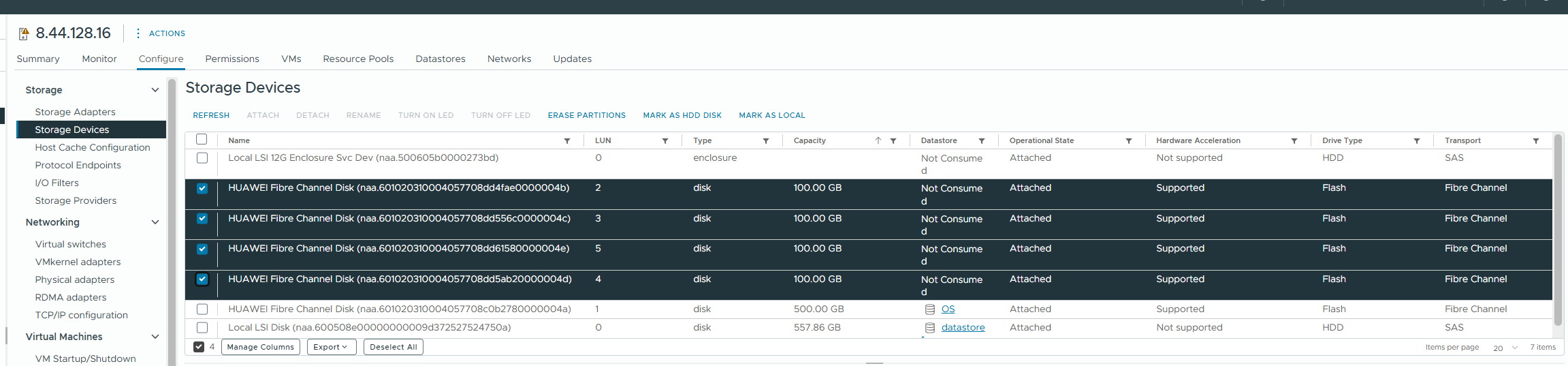

2.Log into vSphere vCenter and perform a « Rescan Storage » to scan the LUNs and view the disk information.

3.Create datastores on LUN A and LUN B, and add them to the VM as « Virtual Disks »; add LUN C and LUN D to the VM as « RDM Disks. »

4.In the VM, perform raw disk read/write operations on LUN A and LUN C using vdbench, and observe the I/O performance. In the VM, create file systems on LUN B and LUN D, and perform file system read/write operations using vdbench, observing the I/O performance.

|

Test Conclusion | Passed |
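The raw disk read/write step relies on a vdbench parameter file, which the report does not reproduce. The following is a minimal sketch of how such a file could be generated. The device paths, transfer size, read percentage, and run time are illustrative assumptions, not the values used in the test.

```python
def raw_disk_params(devices, xfersize="8k", rdpct=70, elapsed=600):
    """Build a vdbench parameter file for raw-disk I/O.

    devices: guest block device paths (hypothetical, e.g. /dev/sdb).
    The workload values are placeholders; the report does not state them.
    """
    lines = []
    # One storage definition (sd) per raw device, opened with direct I/O.
    for index, device in enumerate(devices, start=1):
        lines.append(f"sd=sd{index},lun={device},openflags=o_direct")
    # One workload definition (wd) applied to every sd, fully random access.
    lines.append(f"wd=wd1,sd=sd*,xfersize={xfersize},rdpct={rdpct},seekpct=100")
    # One run definition (rd) at maximum I/O rate with per-second reporting.
    lines.append(f"rd=rd1,wd=wd1,iorate=max,elapsed={elapsed},interval=1")
    return "\n".join(lines) + "\n"
```

The returned text can be written to a file and passed to vdbench with its `-f` option. For example, `raw_disk_params(["/dev/sdb", "/dev/sdd"])` would cover two raw disks, assuming those are the guest device names for LUN A and LUN C.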

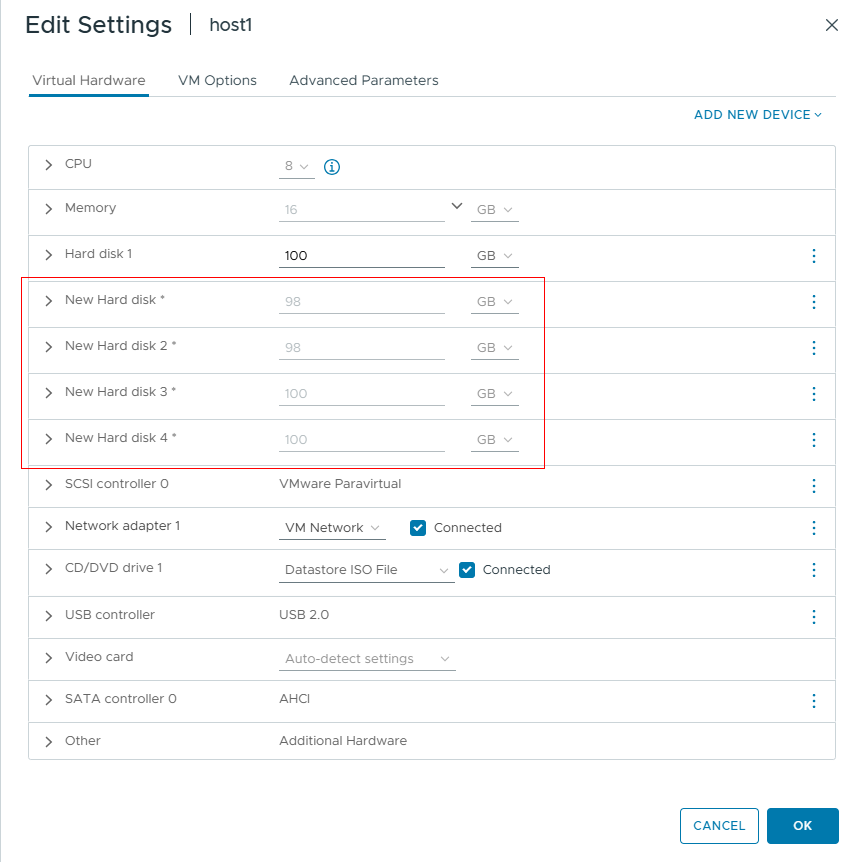

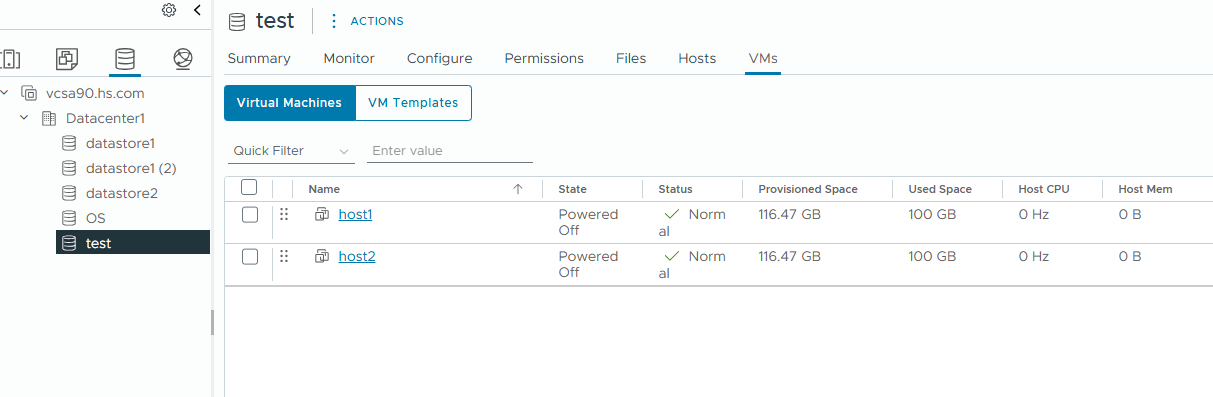

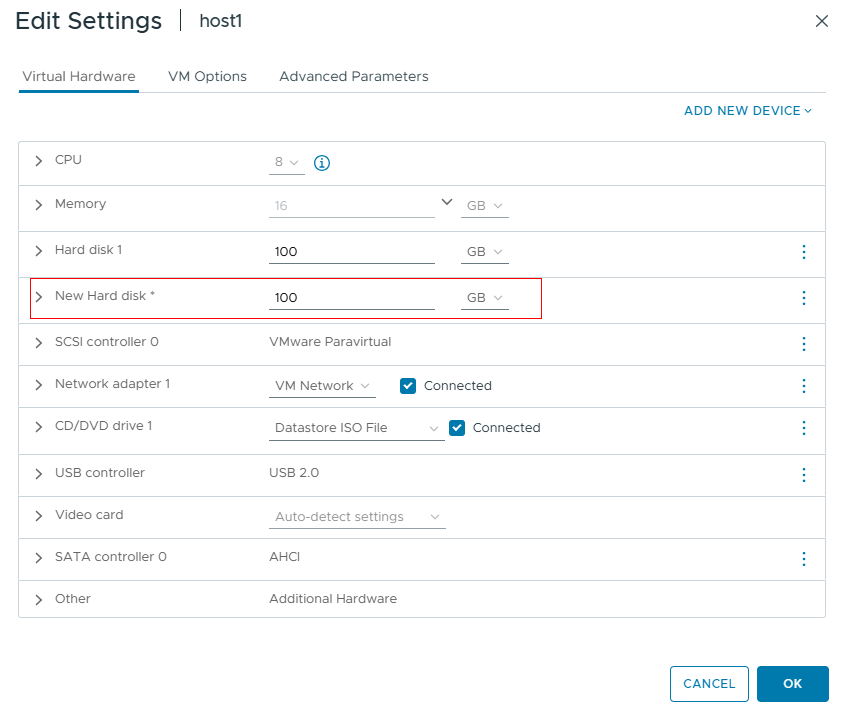

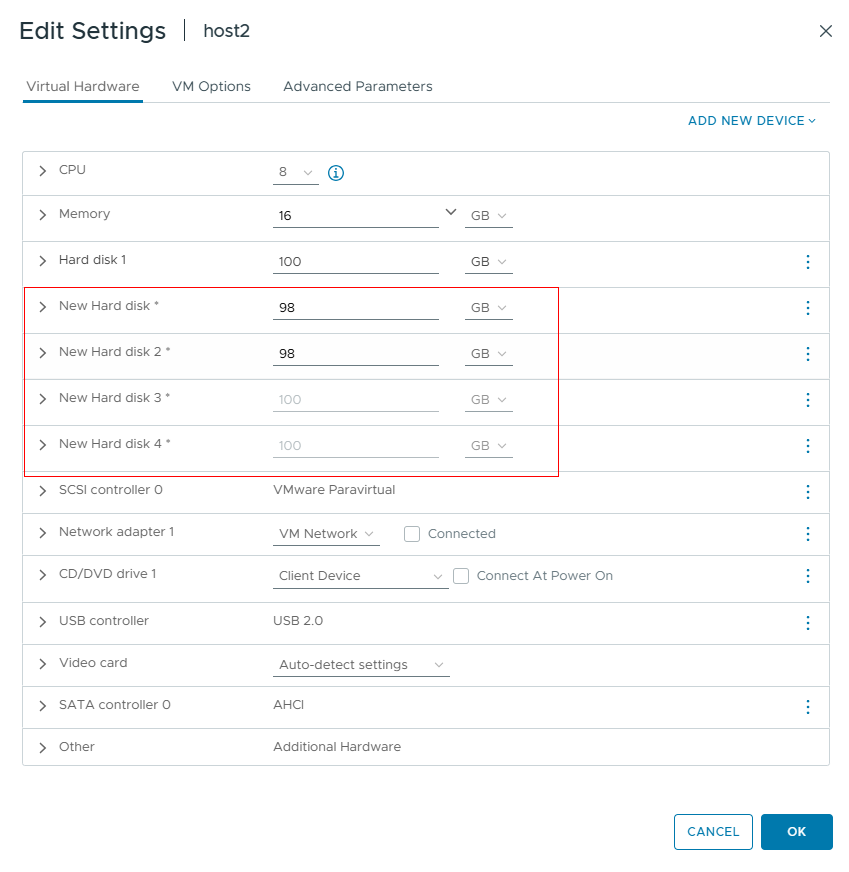

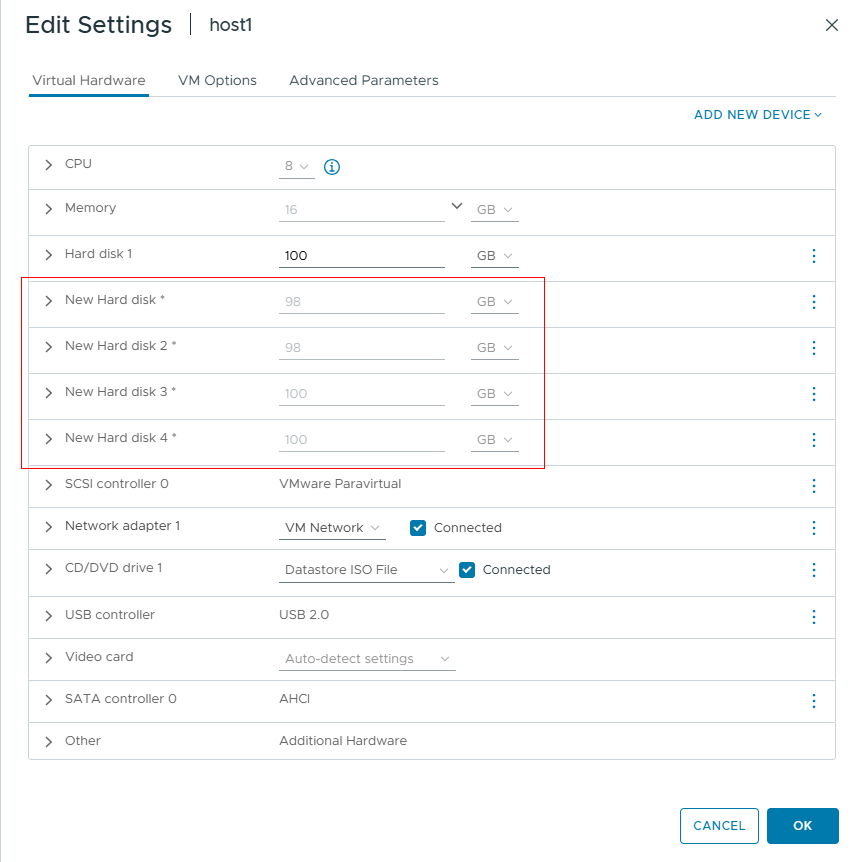

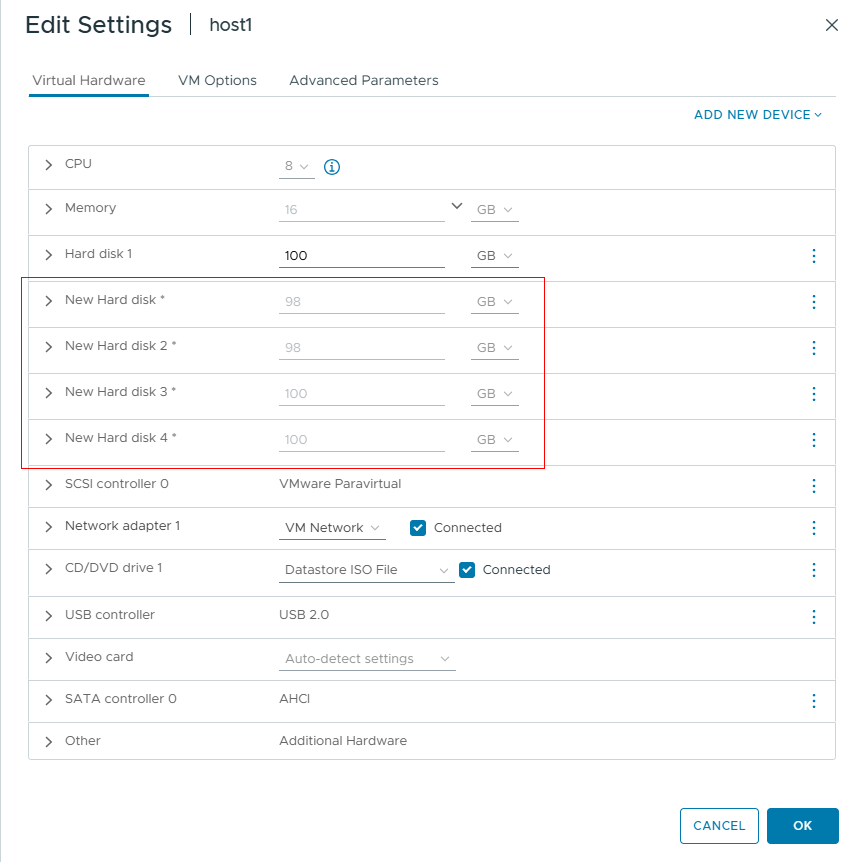

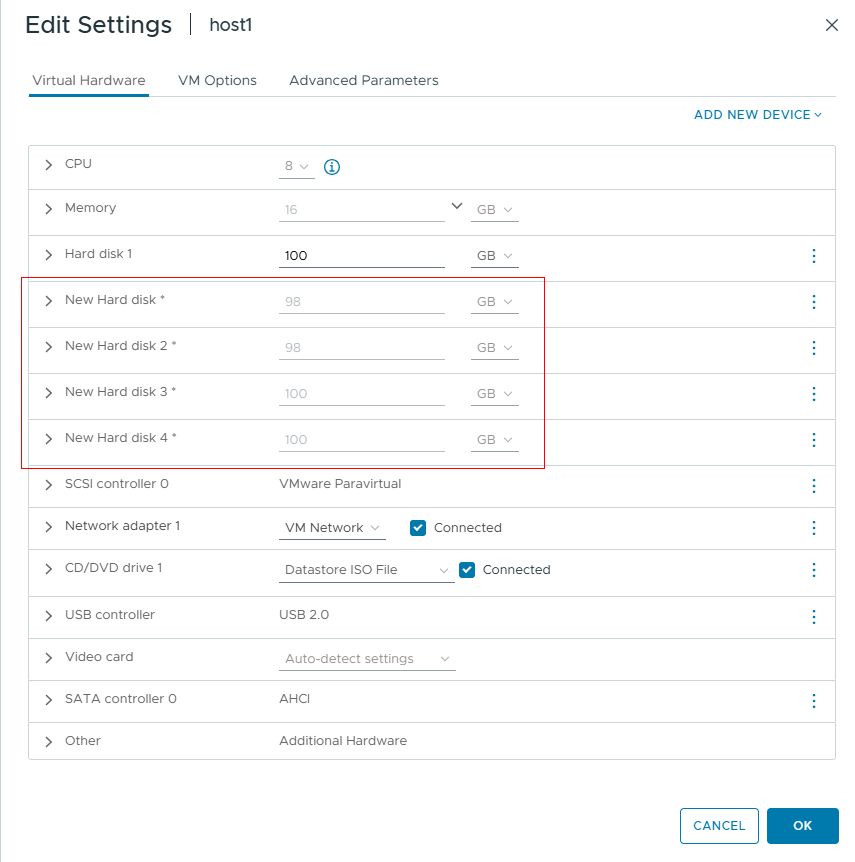

2.4 Online Addition of Virtual Disks to Virtual Machine

Test Purpose | To Verify That Virtual Disks Could be Added to Virtual Machine Online |

Test Networking | Storage and VMware ESXi Compatibility Test Networking |

Prerequisites | 1.The physical connection is functioning properly. 2.The external storage and VMware ESXi systems are operating normally. 3.The port mapping is correct. 4.The system disk used by the test virtual machine is located on the datastore created from the mapped LUN. 5.The virtual machine has been created, and operating systems have been installed. 6.The external storage has been created and the LUN for testing has been mapped to the VMware ESXi host. |

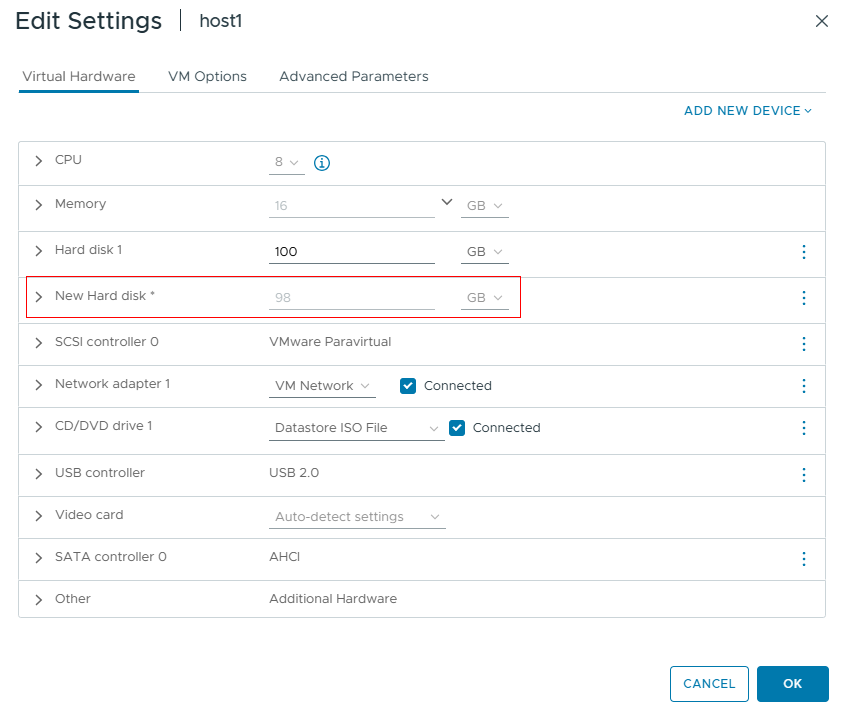

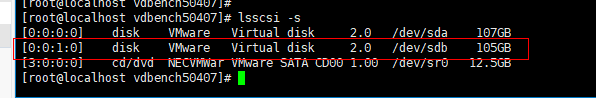

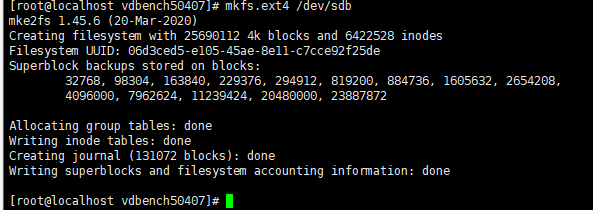

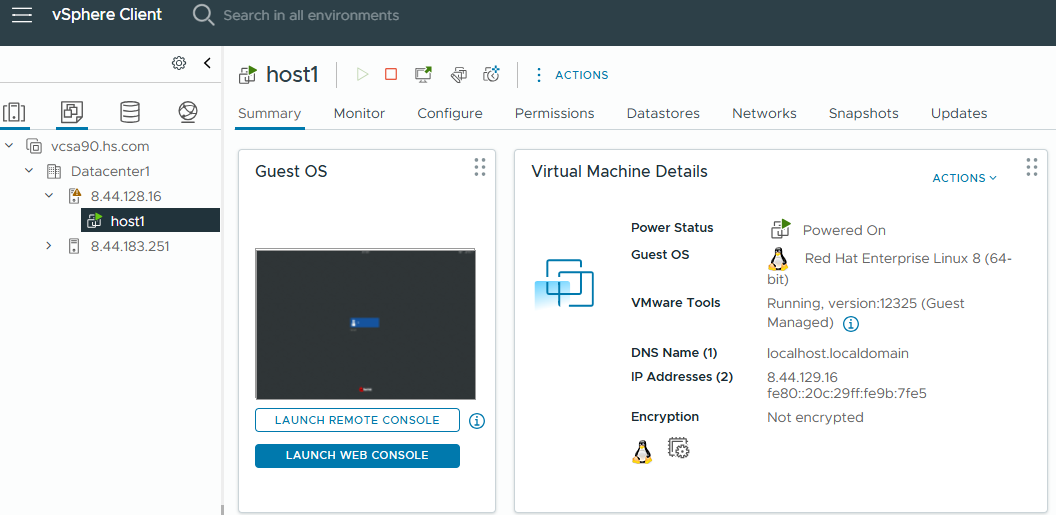

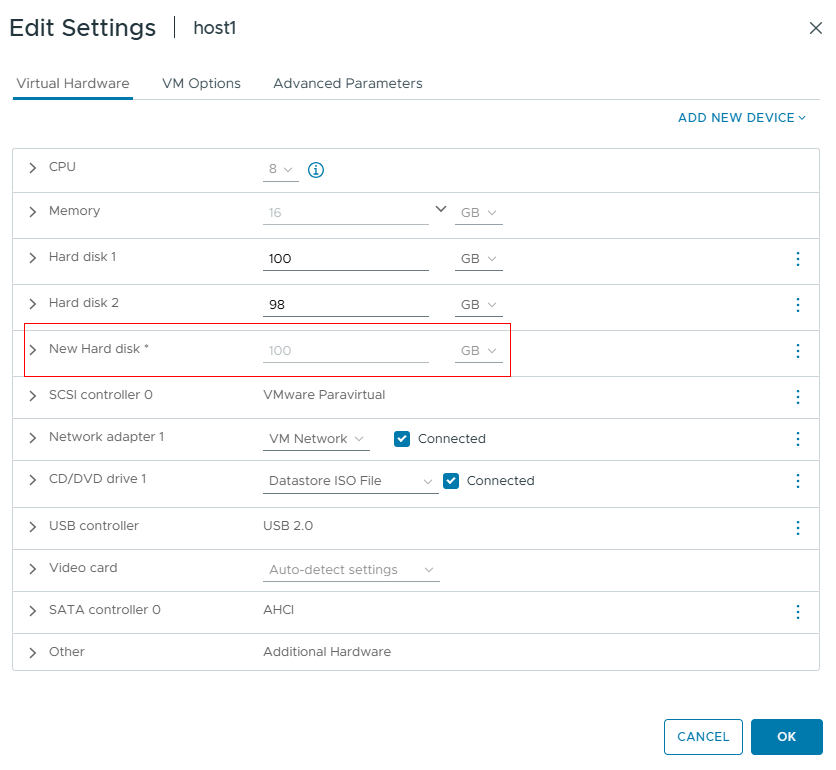

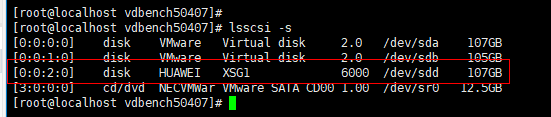

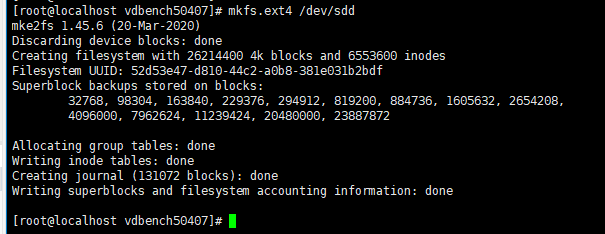

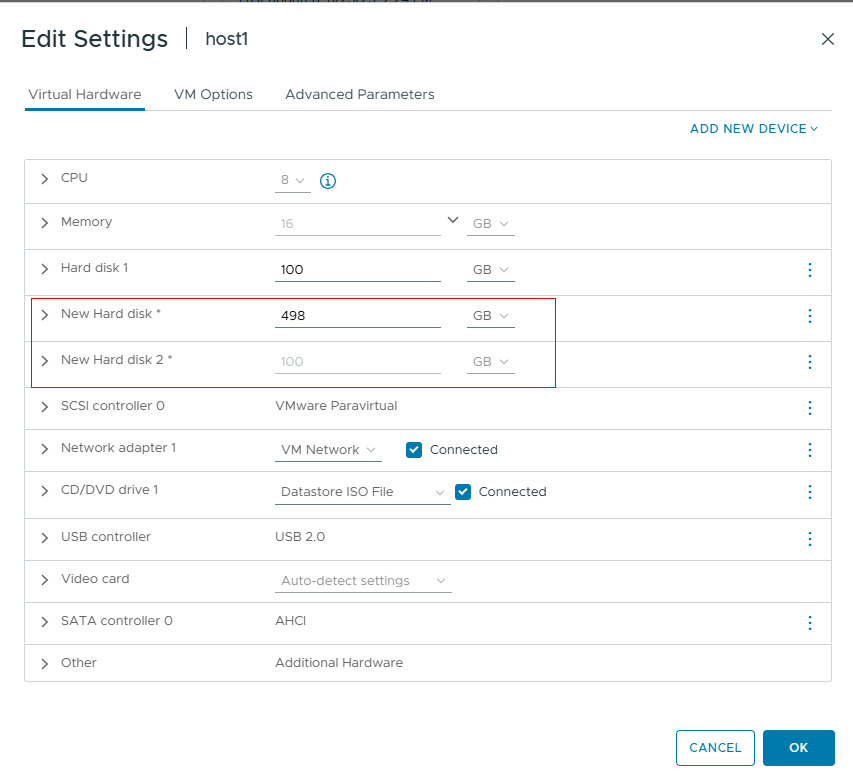

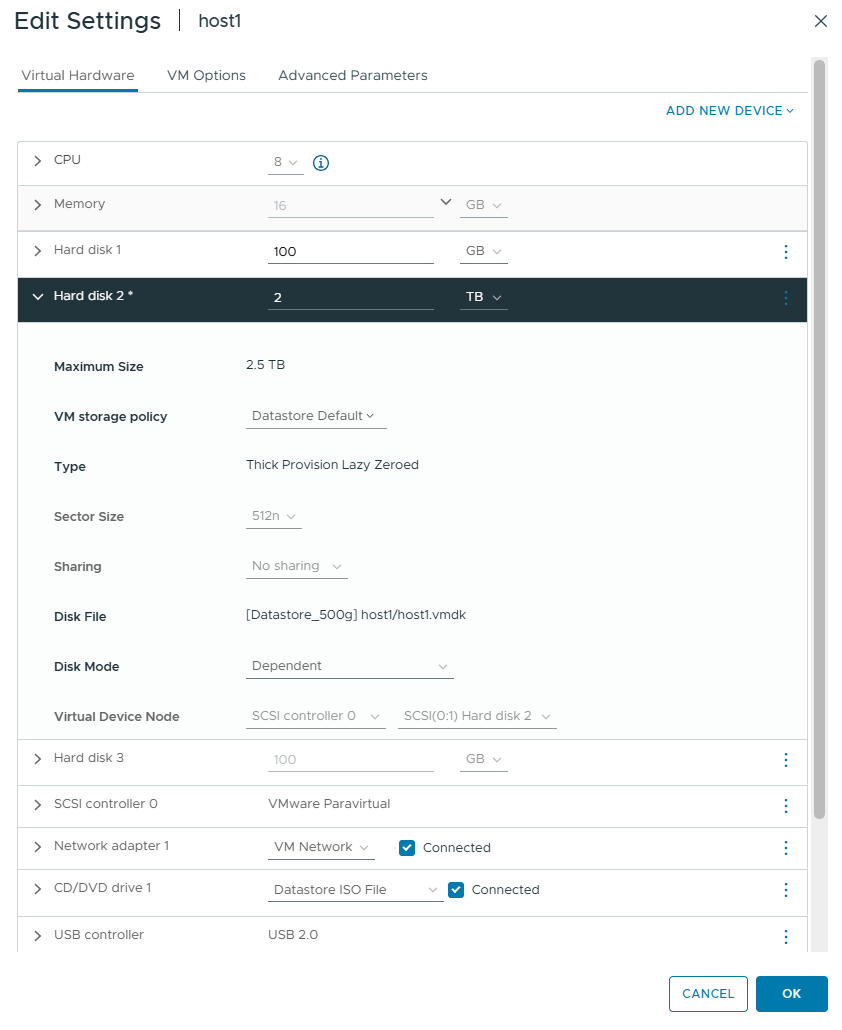

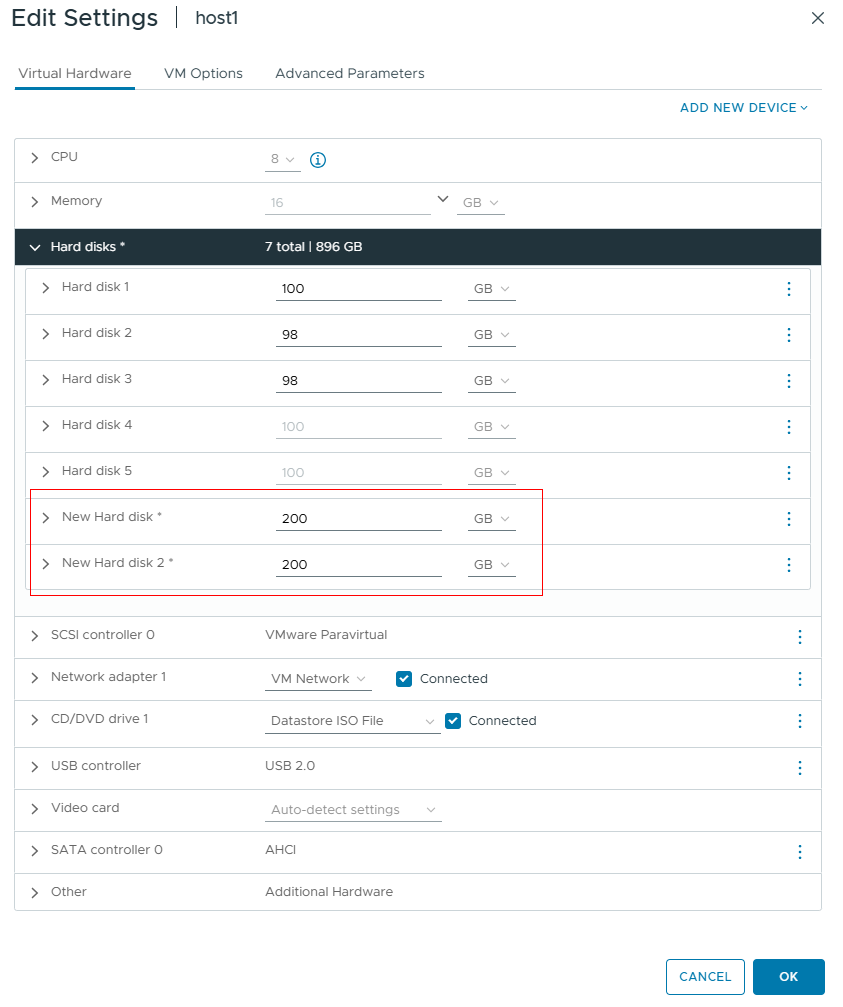

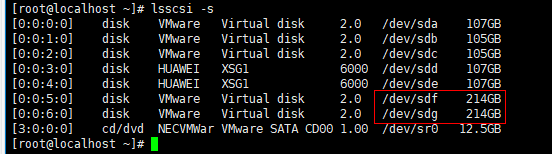

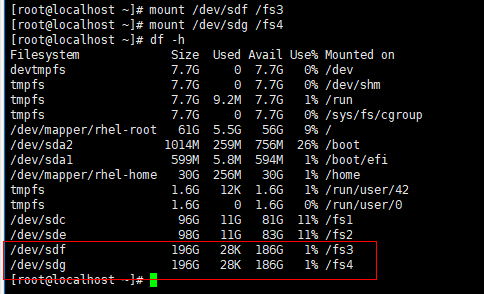

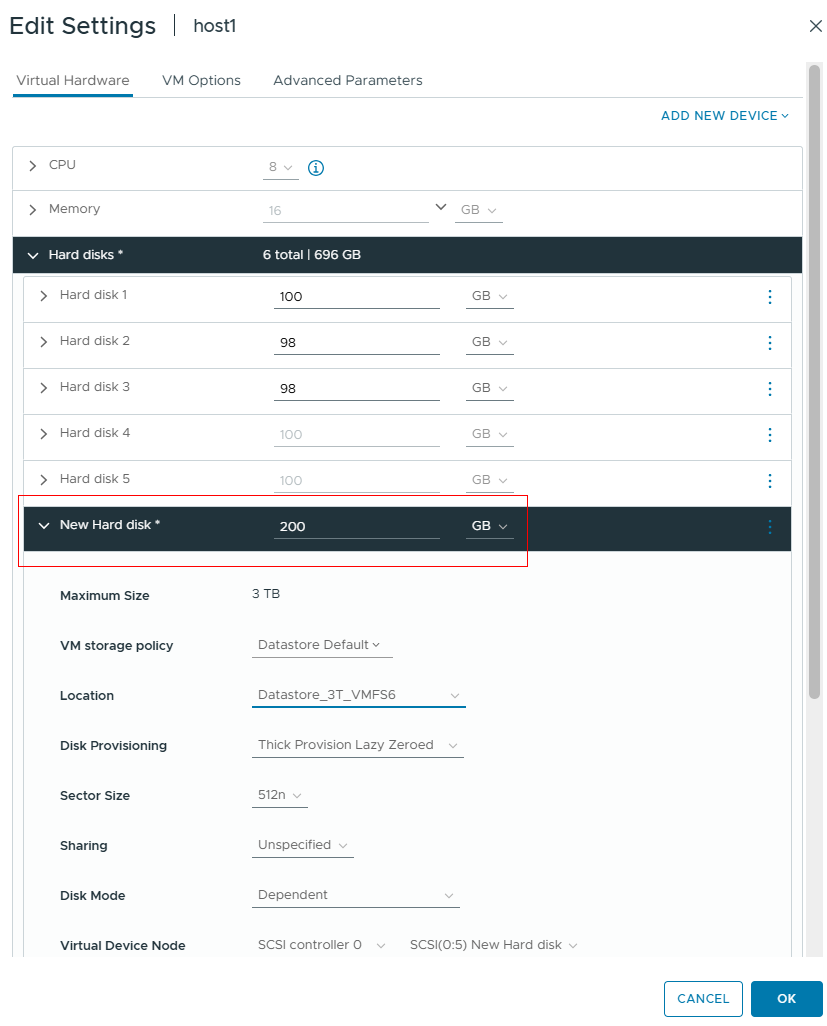

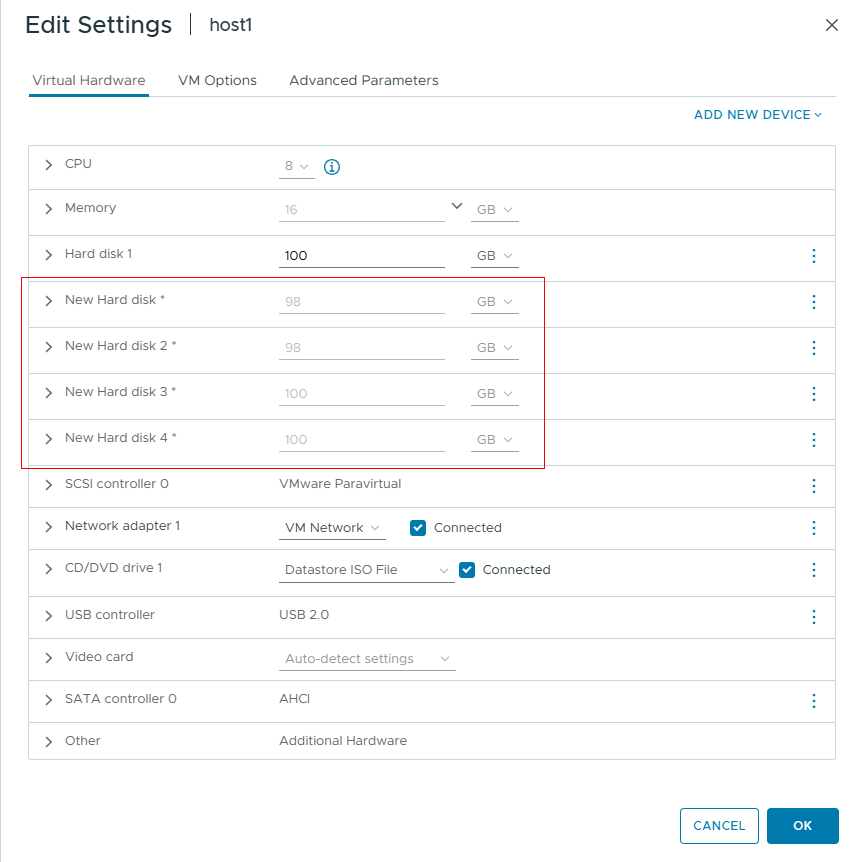

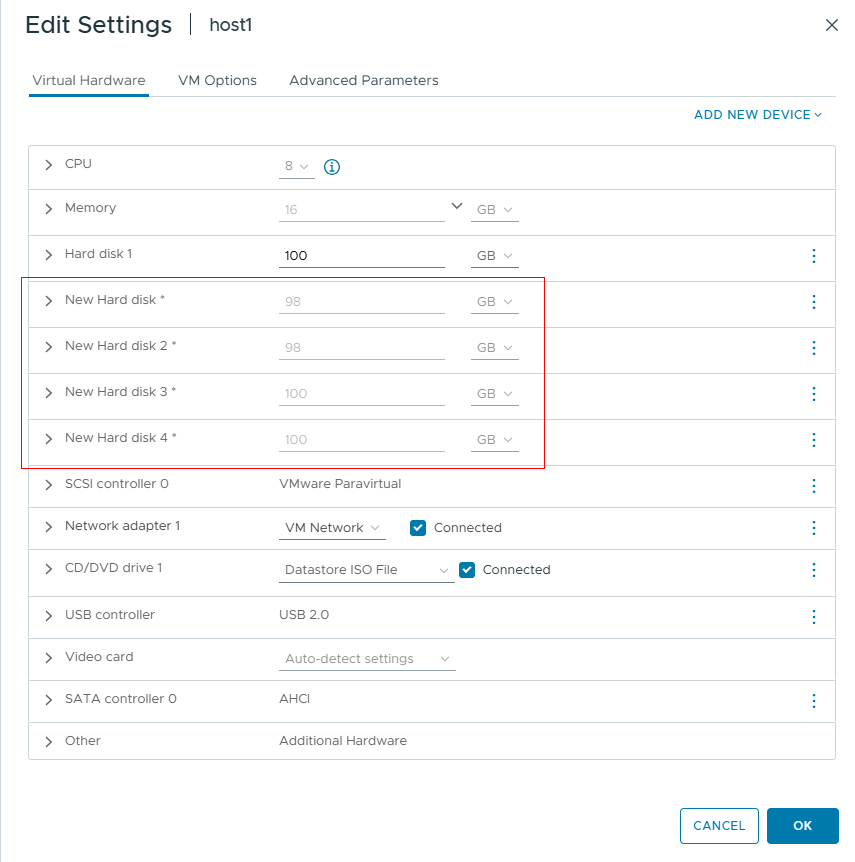

Test Procedure | 1.Log into the VMware ESXi host management interface through vSphere vCenter and start the virtual machine. 2.Select the virtual machine to be tested, execute « Edit Settings » → « ADD NEW DEVICE » → choose the device type as « Hard Disk » → select the disk size, location and other parameters, then click « OK » to complete the creation of the disk. 3.Log into the virtual machine and use the device management tools to scan/view the disk devices. 4.Initialize and format the newly added virtual disk. |

Expected Result | 1.In step 3, the new disk added to the virtual machine is detected. 2.In step 4, the initialization and formatting of the virtual disk are completed successfully, with no system errors. |

Test Record | 1.Start the Virtual Machine

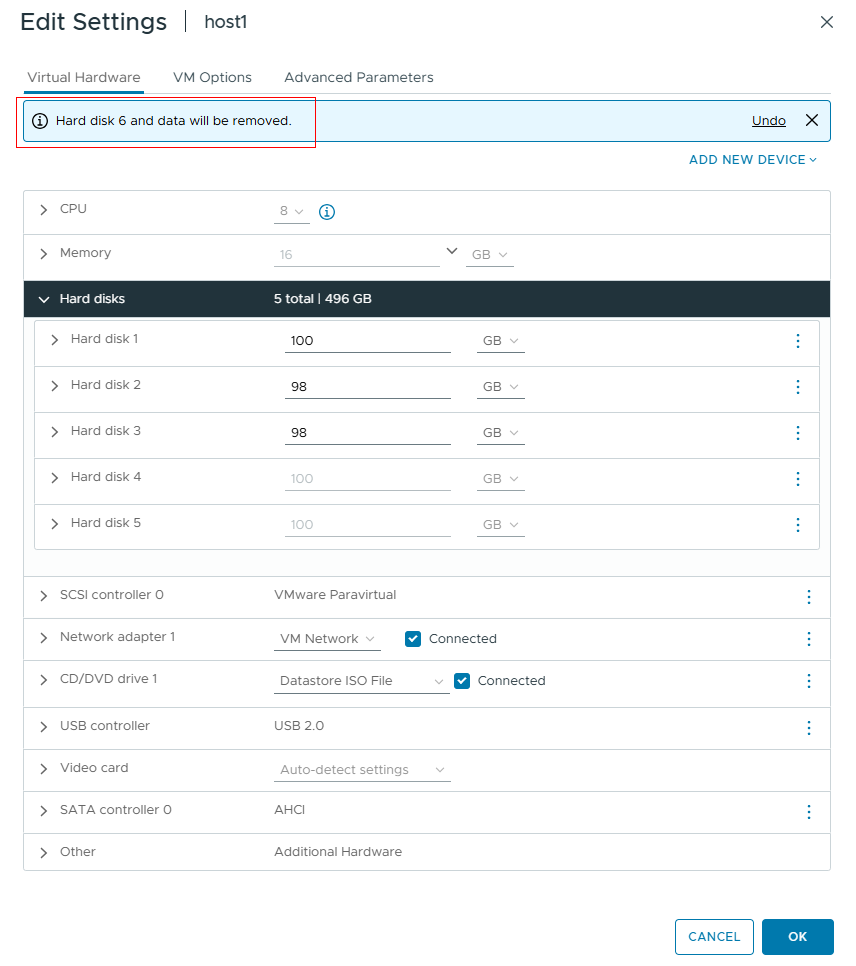

2.Add a virtual hard disk to the virtual machine.

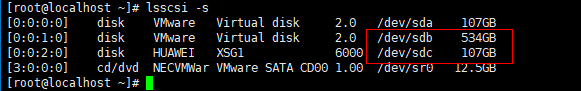

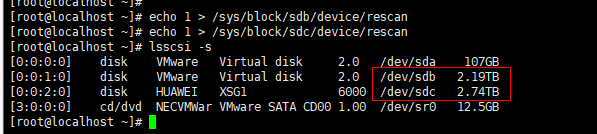

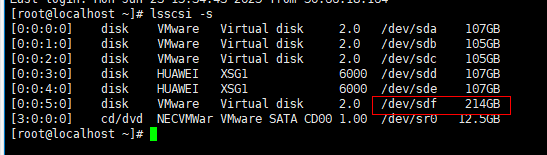

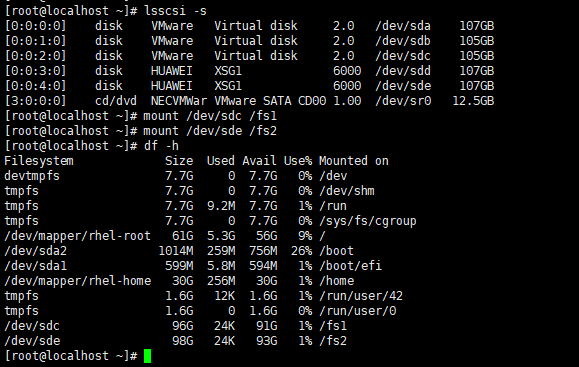

3.View Disks in the Virtual Machine

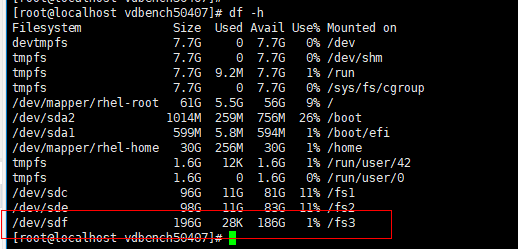

4.Format the Disk

|

Test Conclusion | Passed |

2.5 Online Addition of RDM Disk Mapping to Virtual Machine

Test Purpose | To Verify That RDM Disks Could be Added to Virtual Machine Online |

Test Networking | Storage and VMware ESXi Compatibility Test Networking |

Prerequisites | 1.The physical connection is functioning properly. 2.The external storage and VMware ESXi systems are operating normally. 3.The port mapping is correct. 4.The system disk used by the test virtual machine is located on the datastore created from the mapped LUN. 5.The virtual machine has been created, and operating systems have been installed. 6.The external storage has created and mapped the LUN for testing to the VMware ESXi host. |

Test Procedure | 1.Log into the VMware ESXi host management interface through vSphere vCenter and start the virtual machine. 2.Select the virtual machine to be tested, execute « Edit Settings » → « ADD NEW DEVICE » → choose the device type as « RDM Disk », then follow the wizard to complete the creation of the disk. 3.Log into the virtual machine and use the device management tools to scan/view the disk devices. 4.Initialize and format the newly added RDM disk. |

Expected Result | 1.In step 3, the new disk added to the virtual machine is detected. 2.In step 4, the initialization and formatting of the RDM disk are completed successfully, with no system errors. |

Test Record | 1.Start the virtual machine.

2.Add an RDM Disk to the virtual machine.

3.View Disks in the Virtual Machine

4.Format the Disk

|

Test Conclusion | Passed |

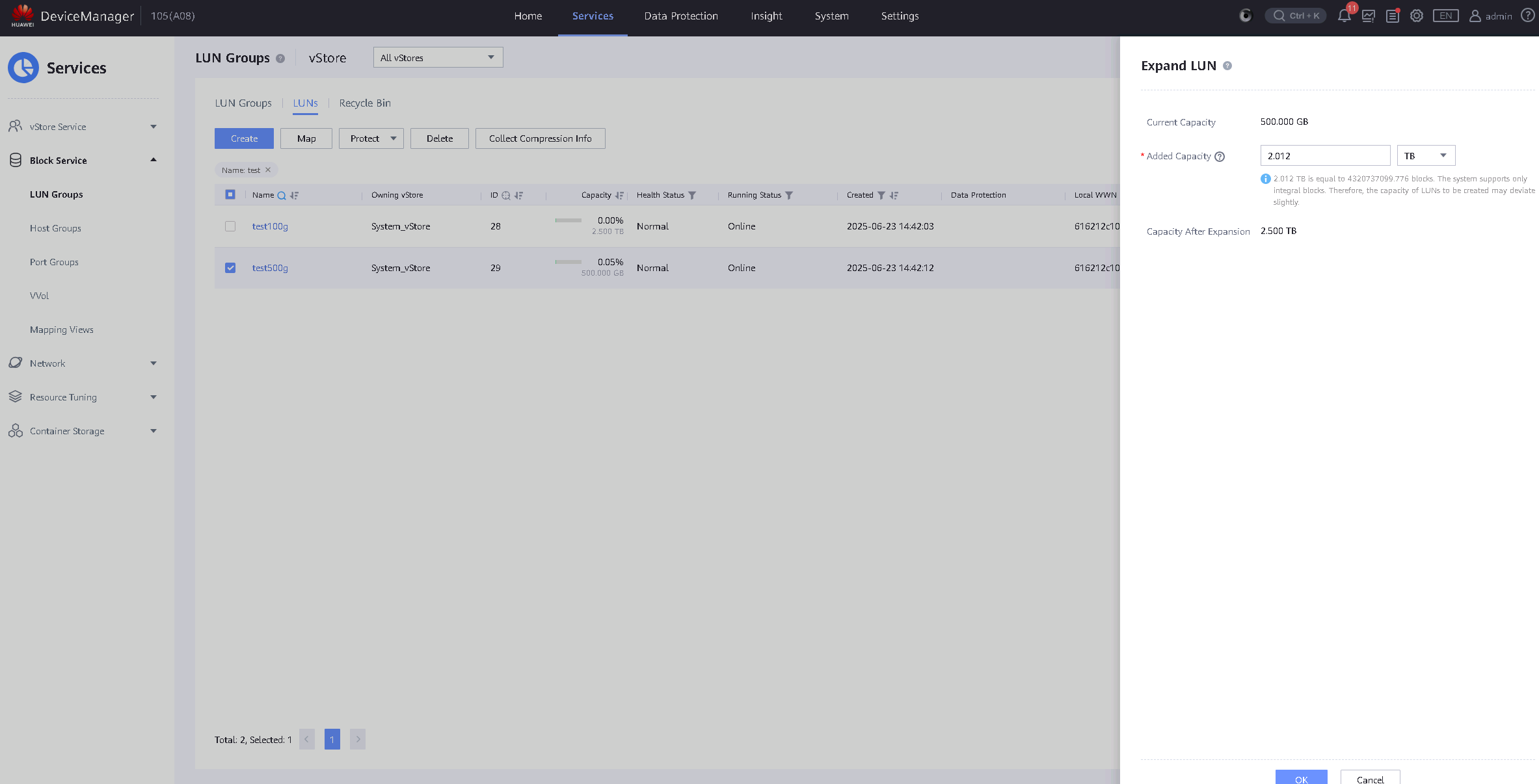

2.6 LUN Expansion

Test Purpose | To Verify That the LUN Mapped to Host Could be Expanded |

Test Networking | Storage and VMware ESXi Compatibility Test Networking |

Prerequisites | 1.The network has been correctly set up according to the corresponding scenario and the physical links are functioning properly. 2.The host system is operating normally. 3.The storage system is functioning normally. 4.Multipath has been properly installed and configured. |

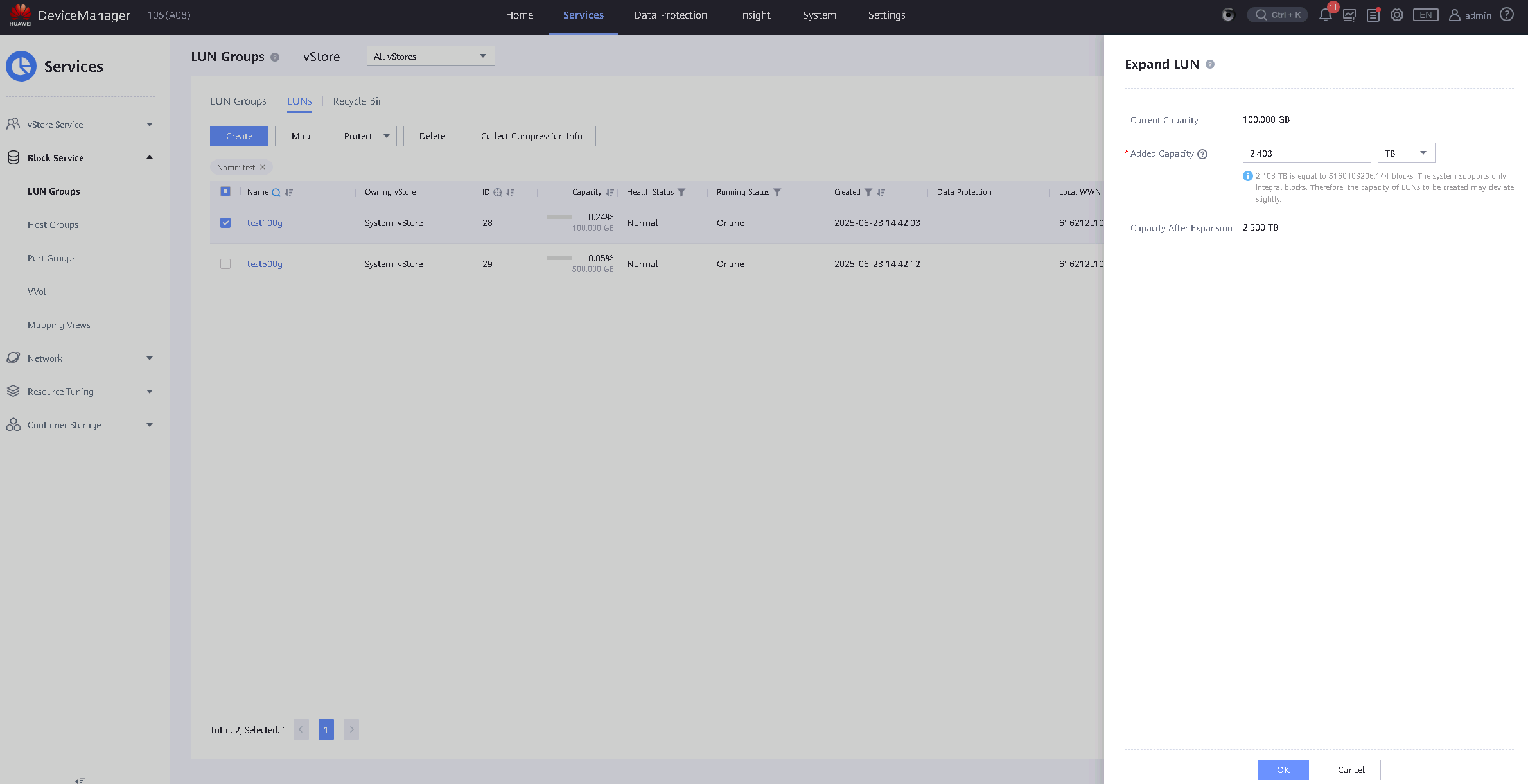

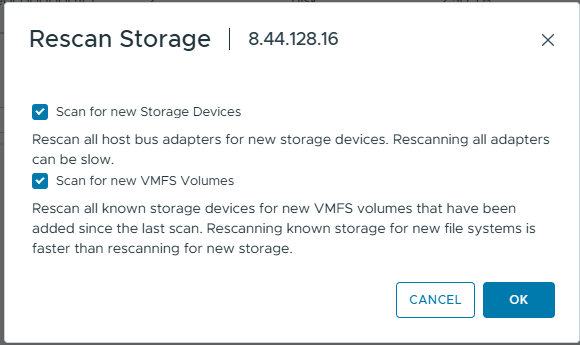

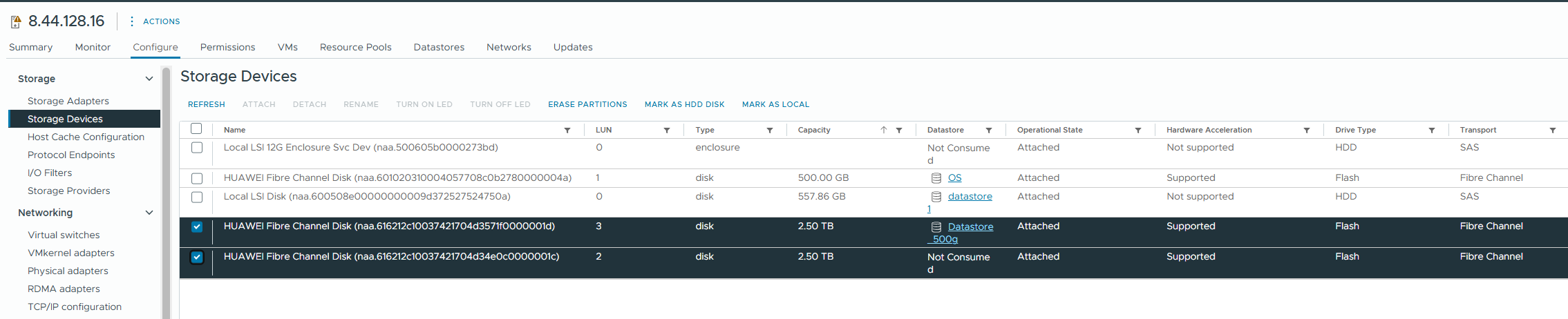

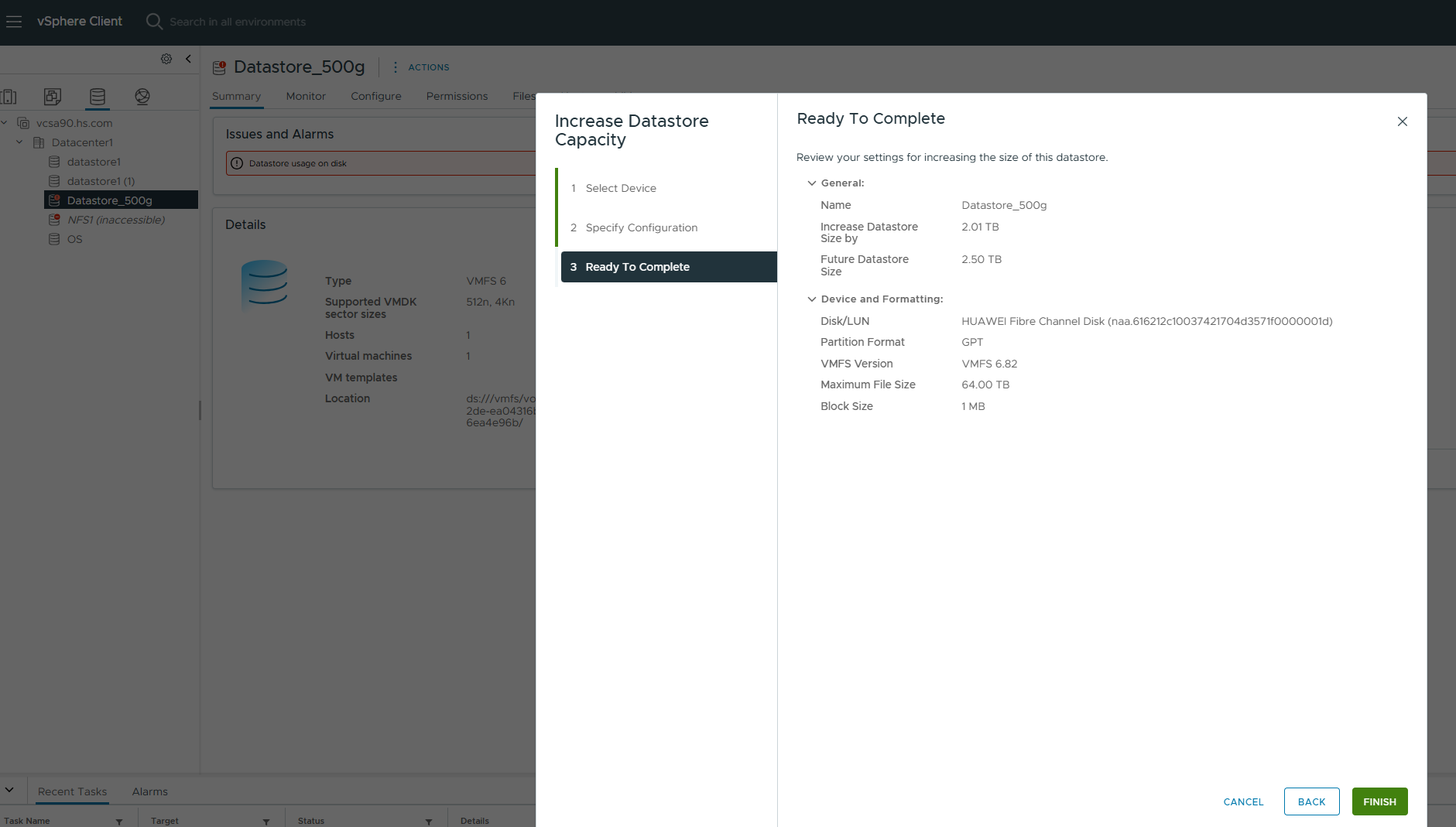

Test Procedure | 1.Log into the storage array management system and create two LUNs with sizes of 100GB and 500GB. 2.Map the LUNs to the corresponding host or host group. 3.Log into the host, scan the mapped LUNs, and check whether the LUN mapping status, number, and capacity information are correct. Also, create a datastore on the 500GB LUN. 4.Create a virtual disk that uses the full capacity of the datastore and add it to the virtual machine. Map the other LUN to the virtual machine as an RDM disk. 5.Scan the disks in the virtual machine, and perform read/write operations on the two detected disks. 6.Log into the storage array management system and expand both LUNs to 2.5TB. 7.Log into the host, scan the mapped LUNs, and check the LUN mapping status, number, and capacity information. Also, expand the created datastore to its full capacity. 8.Edit the virtual machine configuration and expand the capacity of the datastore disk to 2TB. 9.Scan the disks in the virtual machine, observe the status of the business operations, and check the LUN capacity. |

Expected Result | 1.In step 3, the scanned LUN information is correct, and the datastore could be created successfully. 2.In step 5, the virtual machine scans and detects both disks successfully, and read/write operations are functioning normally. 3.In step 6, the expansion of the LUNs in the storage array is successful. 4.In step 7, after scanning, the capacity of both LUNs is correctly updated, and the datastore expands successfully. 5.In step 8, the datastore disk capacity is successfully expanded. 6.In step 9, the LUN capacities in the virtual machine are correctly refreshed, and I/O operations are unaffected. |

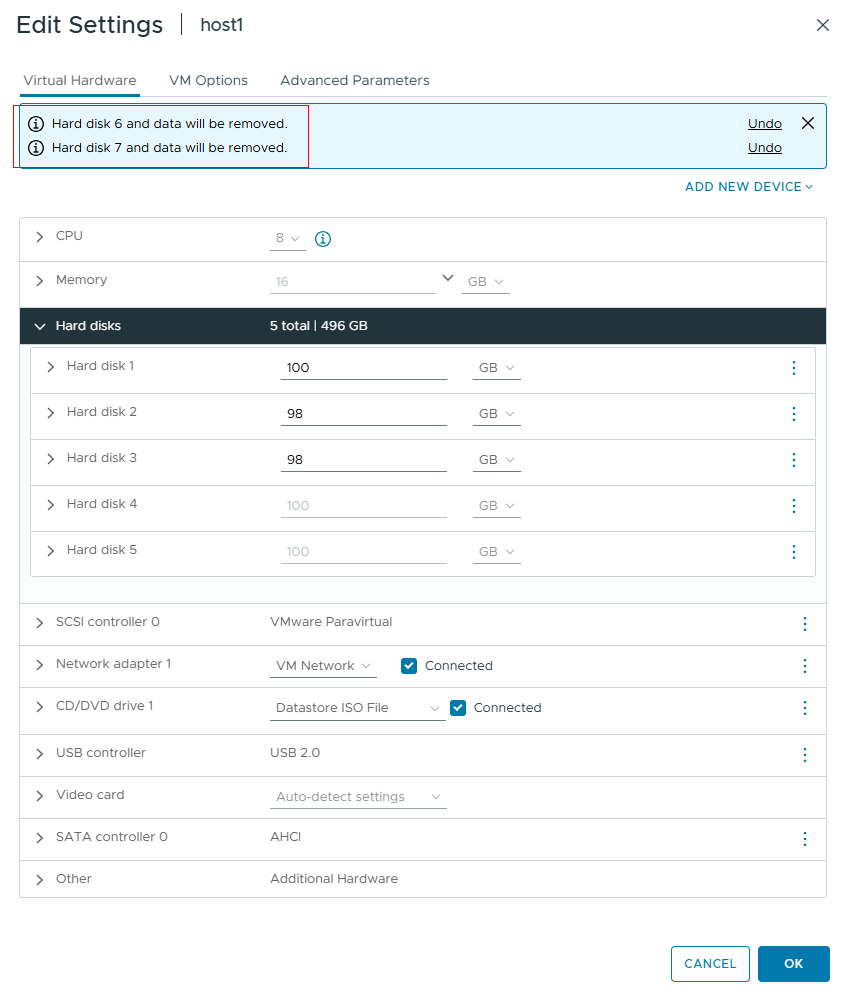

Test Record | 1.LUN mapping is successful, and the datastore can be created.

2.Add RDM Disk and Virtual Disk to the Virtual Machine.

3.View Disks in the Virtual Machine and Perform Read/Write Operations on the Disks.

4.Expand the LUN to 2.5TB.

5.Log into the host, scan the mapped LUN, and expand the created datastore.

6.Edit the virtual machine configuration and expand the datastore disk capacity to 2TB.

7.Scan the disks in the virtual machine and observe the business operations and LUN capacity.

|

Test Conclusion | Passed |
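The capacity check in the last step can be sketched for a Linux guest as follows. On Linux, `/sys/block/<device>/size` reports the device size in 512-byte units. The device name, the 1% tolerance, and the reading of "TB" as binary terabytes (TiB) are assumptions, not statements from the report.

```python
from pathlib import Path

SECTOR_BYTES = 512  # /sys/block/<dev>/size is expressed in 512-byte units


def sectors_to_tib(sectors):
    """Convert a 512-byte sector count to TiB."""
    return sectors * SECTOR_BYTES / 1024**4


def device_tib(device, sysfs_root="/sys/block"):
    """Read the current size of a guest block device (hypothetical name, e.g. 'sdb')."""
    sectors = int(Path(sysfs_root, device, "size").read_text().strip())
    return sectors_to_tib(sectors)


def capacity_matches(actual_tib, expected_tib, tolerance=0.01):
    """True if the measured capacity is within the assumed relative tolerance."""
    return abs(actual_tib - expected_tib) <= expected_tib * tolerance
```

After the expansion, `capacity_matches(device_tib("sdb"), 2.0)` would be expected to hold for the datastore disk and `capacity_matches(device_tib("sdc"), 2.5)` for the RDM disk, assuming those device names.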

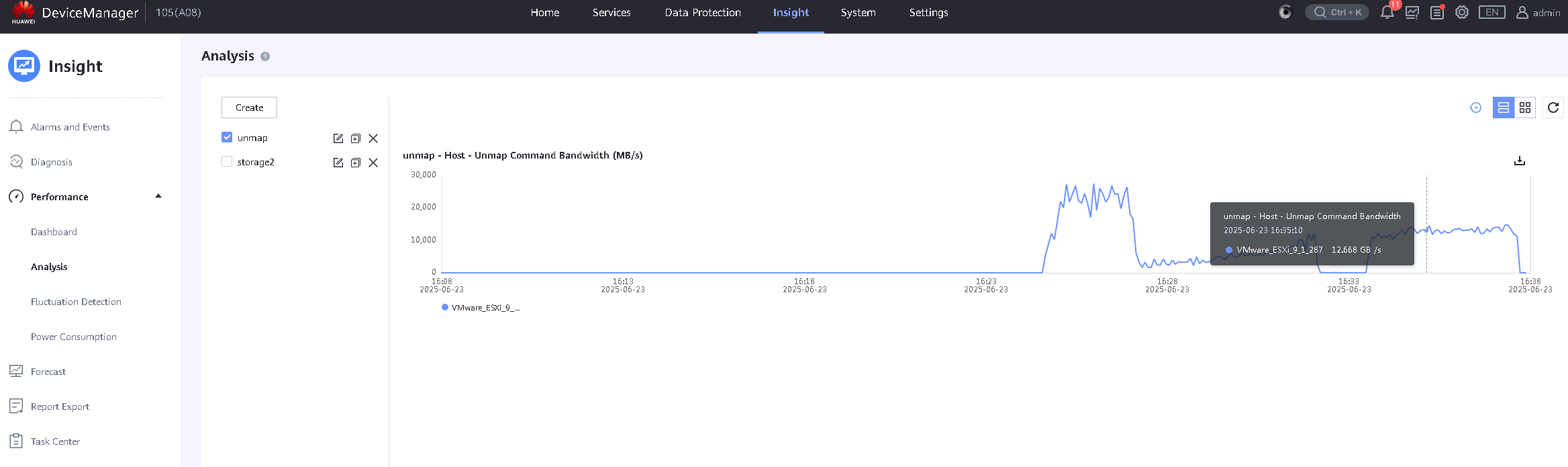

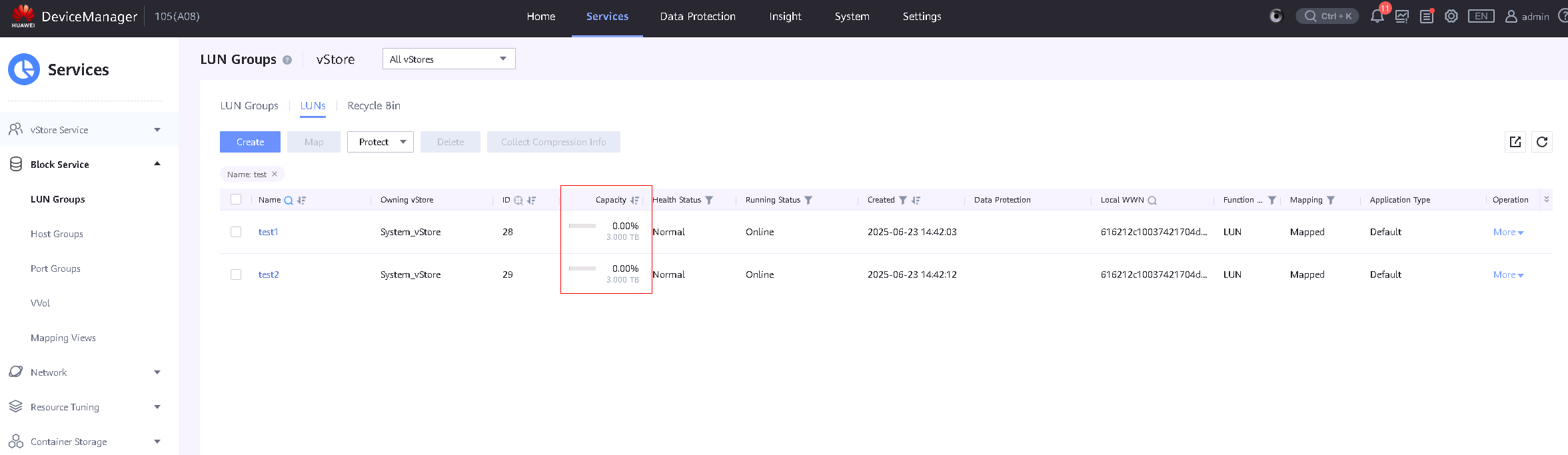

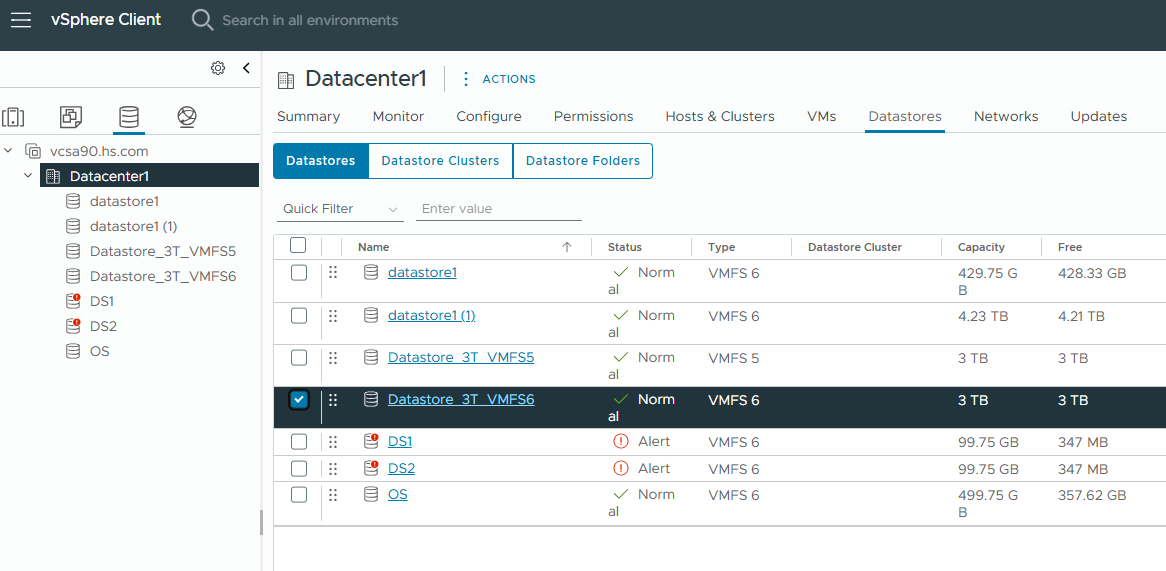

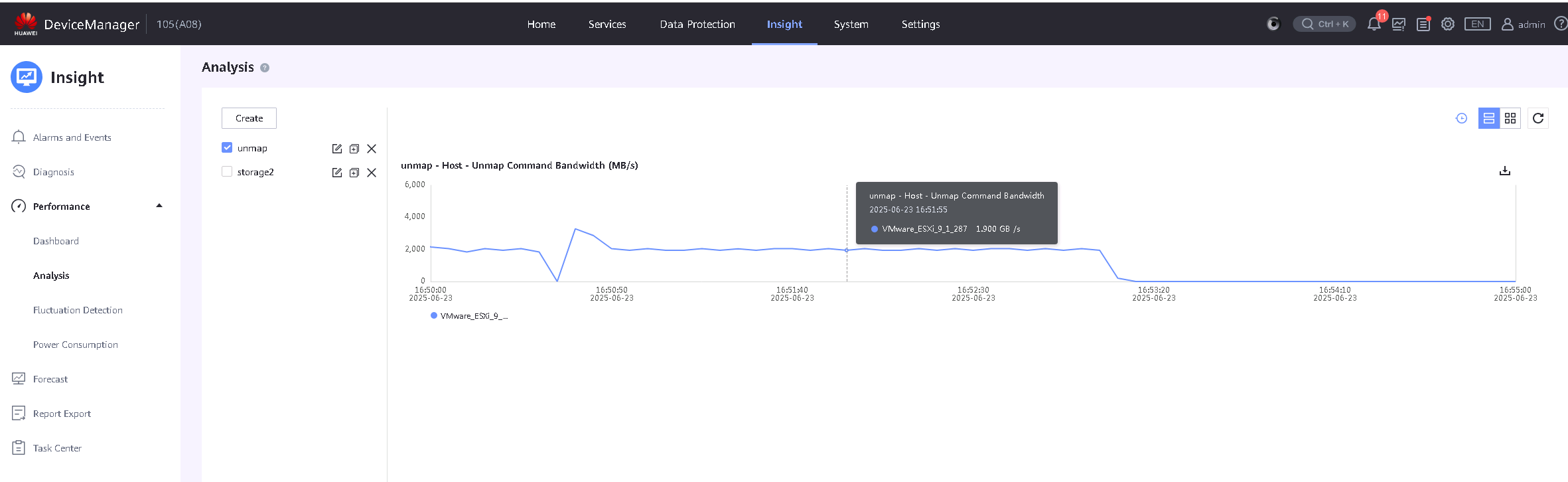

2.7 Manual ThinLUN Space Reclamation

Test Purpose | To Verify That Manual Space Reclamation is Successful |

Test Networking | Storage and VMware ESXi Compatibility Test Networking |

Prerequisites | 1.The network has been correctly set up according to the corresponding scenario and the physical links are functioning properly. 2.The host system is operating normally. 3.The storage system is functioning normally. 4.Multipath has been properly installed and configured. 5.Map four 100GB LUNs from the storage to the host, perform file system read/write operations and raw disk read/write operations as background I/O on the VM. |

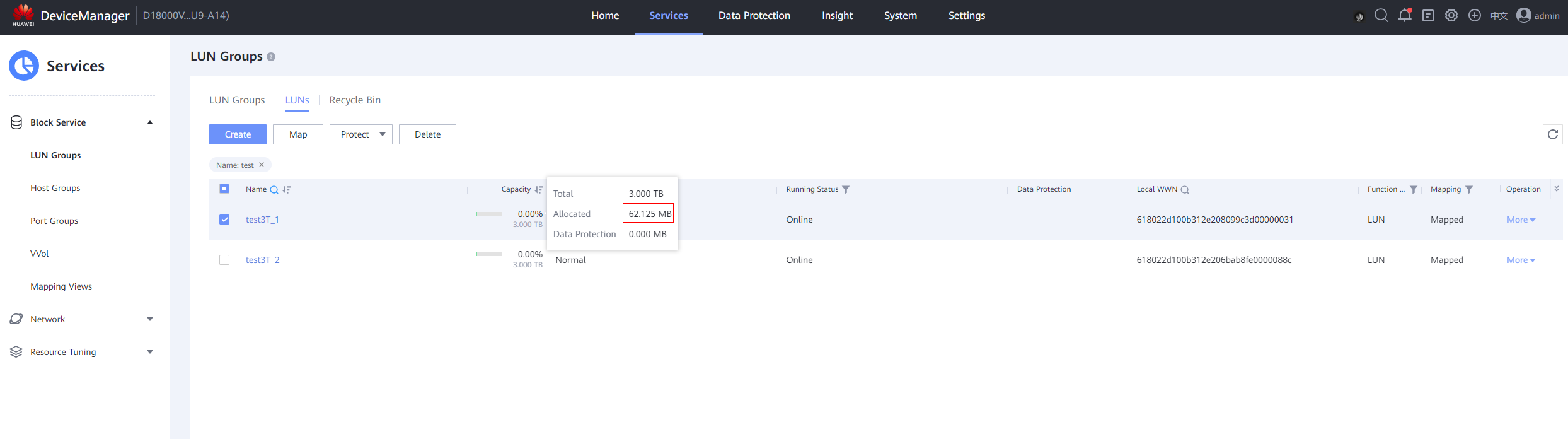

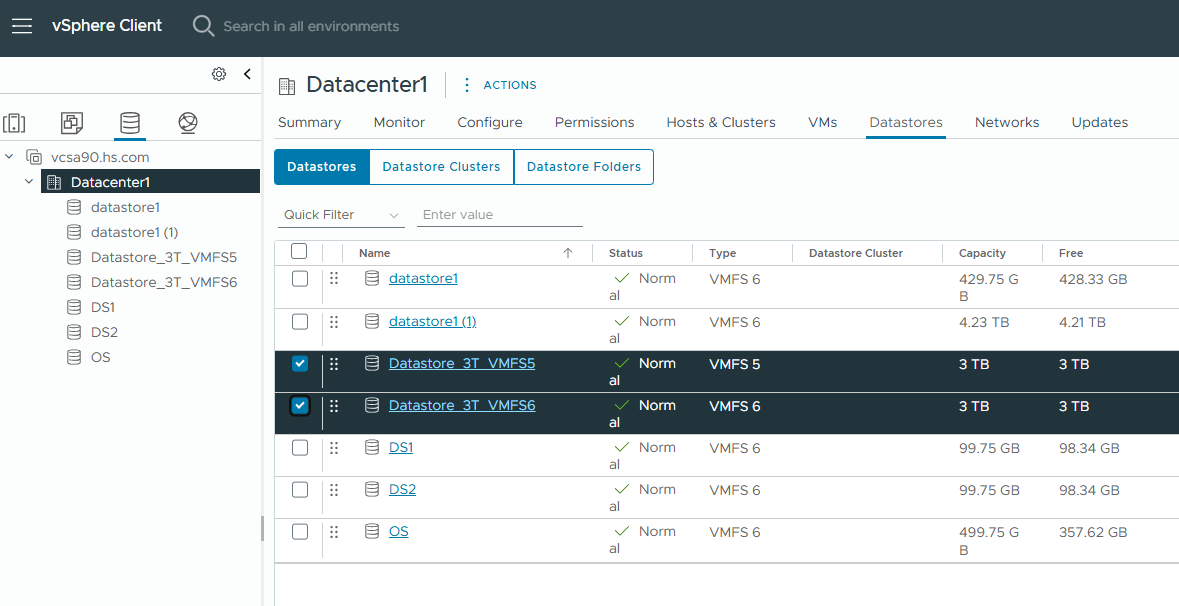

Test Procedure | 1.Map two 3TB LUNs from the storage to the host. 2.Format the two LUNs as VMFS6 and VMFS5 datastores. 3.Create a virtual disk (disk format is not limited) from each of the VMFS6 and VMFS5 datastores and add it to the VM. 4.Write random data to the virtual disk in the VM. 5.Check the used space of the two Thin LUNs. 6.Remove device and data of the two disks added to the VM. 7.For the two datastores, run the following command to manually reclaim space: esxcli storage vmfs unmap -l Volume_Label or esxcli storage vmfs unmap -u Volume_UUID. 8.Wait 10 to 30 minutes, check the used space of the two Thin LUNs, and monitor the unmap traffic. |

Expected Result | 1.In step 5, the used space has grown as expected. 2.In step 7, space reclamation completes normally. 3.In step 8, the used space of the Thin LUNs is reduced, unmap traffic can be monitored in performance monitoring, and background I/O is unaffected. |

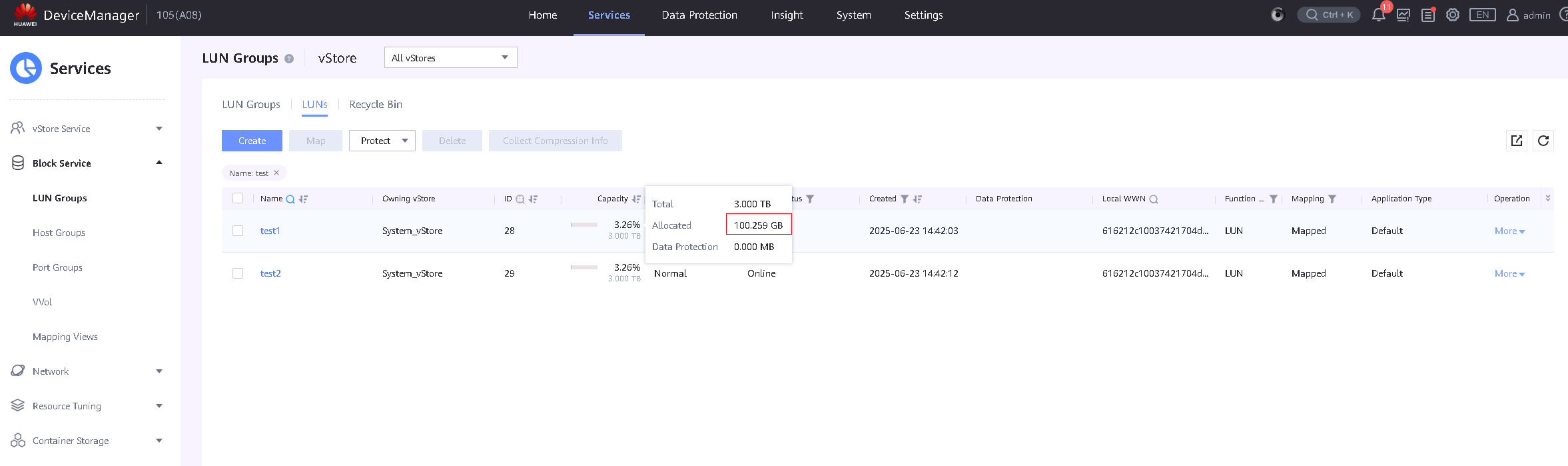

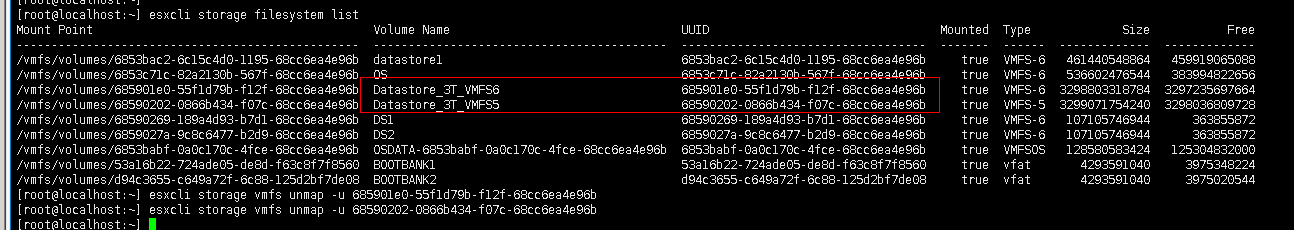

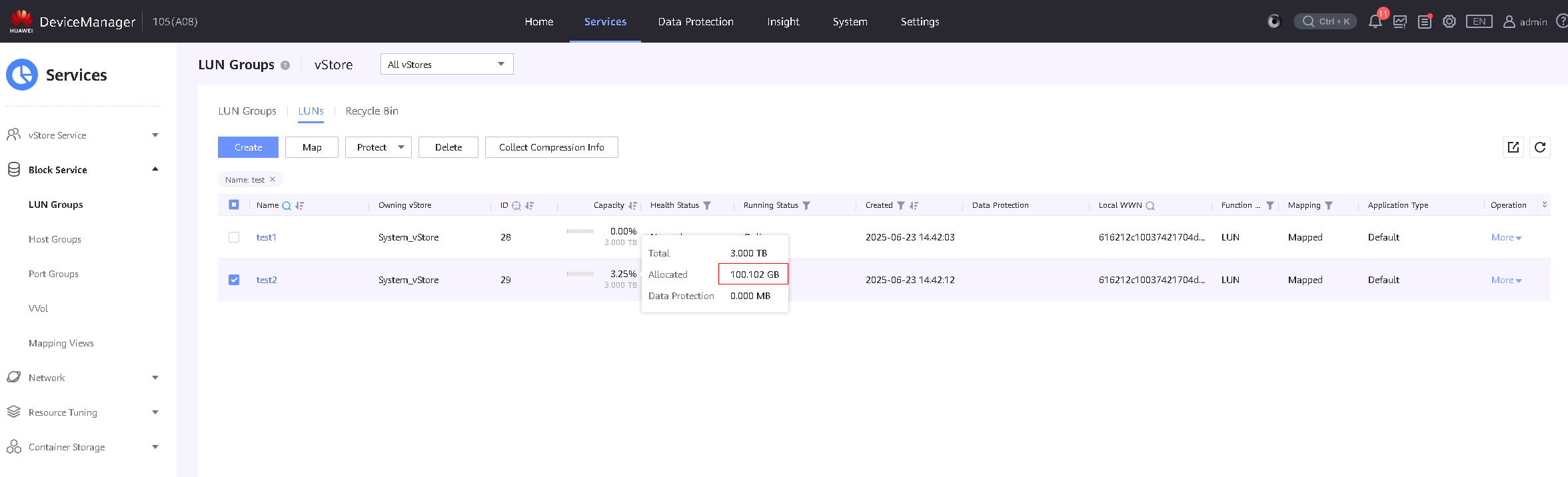

Test Record | 1.Map two 3TB LUNs from the storage to the host and format the two LUNs as VMFS6 and VMFS5 datastores.

2.Create a virtual disk from each of the VMFS6 and VMFS5 datastores and add it to the VM.

3.Write random data to the virtual disk in the VM.

4.Check the used space of the two Thin LUNs.

5.Remove device and data of the two disks added to the VM.

6.For the two datastores, run the following command to manually reclaim space: esxcli storage vmfs unmap -l Volume_Label or esxcli storage vmfs unmap -u Volume_UUID.

7.Wait for a while, check the used space of the two Thin LUNs, and monitor the unmap traffic. Also check the background I/O.

|

Test Conclusion | Passed |
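The manual reclamation command in this test case accepts either a volume label or a volume UUID. A minimal sketch of a helper that builds the argument list for one datastore follows. The helper name and the datastore name in the usage note are hypothetical, and running the command on the ESXi host (for example over SSH) is left out.

```python
def unmap_command(label=None, uuid=None):
    """Build the esxcli argument list for manual VMFS space reclamation.

    Exactly one of label or uuid must be given, mirroring the two
    command forms quoted in the test procedure.
    """
    if (label is None) == (uuid is None):
        raise ValueError("pass exactly one of label or uuid")
    command = ["esxcli", "storage", "vmfs", "unmap"]
    if label is not None:
        command += ["-l", label]
    else:
        command += ["-u", uuid]
    return command
```

For a datastore labelled `ds_vmfs6` (a hypothetical name), `" ".join(unmap_command(label="ds_vmfs6"))` gives `esxcli storage vmfs unmap -l ds_vmfs6`.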

2.8 Automatic ThinLUN Space Reclamation by Deleting Virtual Disk

Test Purpose | To Verify That Automatic Space Reclamation is Successful |

Test Networking | Storage and VMware ESXi Compatibility Test Networking |

Prerequisites | 1.The network has been correctly set up according to the corresponding scenario and the physical links are functioning properly. 2.The host system is operating normally. 3.The storage system is functioning normally. 4.Multipath has been properly installed and configured. 5.Map four 100GB LUNs from the storage to the host, perform file system read/write operations and raw disk read/write operations as background I/O on the VM. |

Test Procedure | 1.Map a 3TB LUN from the storage to the host. 2.Format the LUN as a VMFS6 datastore and enable auto unmap. 3.Create a virtual disk (disk format is not limited) from the VMFS6 datastore and add it to the VM. 4.Write random data to the virtual disk in the VM. 5.Check the used space of the Thin LUN. 6.Remove the device and data of the disk added to the VM. 7.Wait 10 to 30 minutes, check the used space of the Thin LUN, and monitor the unmap traffic on the storage. |

Expected Result | 1.In step 5, the used space has grown as expected. 2.In step 7, the used space of the Thin LUN is reduced, unmap traffic can be monitored in performance monitoring, and background I/O is unaffected. |

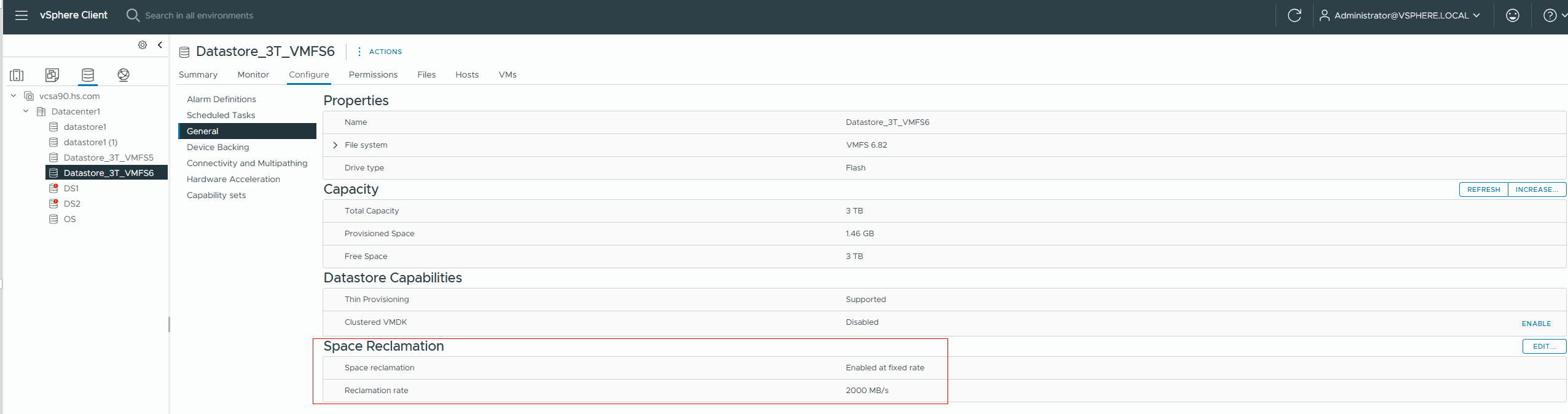

Test Record | 1.Format the LUN as VMFS6 datastore and enable auto unmap.

2.Create a virtual disk from the VMFS6 datastore and add it to the VM.

3.Write random data to the virtual disk in the VM.

4.Check the used space of the Thin LUN.

5.Remove the device and data of the disk added to the VM.

6.Wait for a while, check the used space of the Thin LUN, and monitor the unmap traffic. Also check the background I/O.

|

Test Conclusion | Passed |
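Because automatic reclamation runs in the background, the final step is a wait-and-check loop. The sketch below polls a caller-supplied function until the used space drops below the pre-deletion baseline or a timeout expires. `read_used_gb` is a hypothetical callable, since the report does not name a storage API for reading Thin LUN usage. The 30-minute default follows the upper bound of the wait in the procedure.

```python
import time


def wait_for_reclaim(read_used_gb, baseline_gb, timeout_s=1800, interval_s=60,
                     sleep=time.sleep, clock=time.monotonic):
    """Poll until the Thin LUN used space falls below baseline_gb.

    read_used_gb: hypothetical callable returning the current used space in GB.
    Returns the first reading below the baseline, or raises TimeoutError.
    """
    deadline = clock() + timeout_s
    while True:
        used = read_used_gb()
        if used < baseline_gb:
            return used
        if clock() >= deadline:
            raise TimeoutError(f"used space still {used} GB after {timeout_s} s")
        sleep(interval_s)
```

The `sleep` and `clock` parameters exist only so the loop can be exercised without real waiting.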

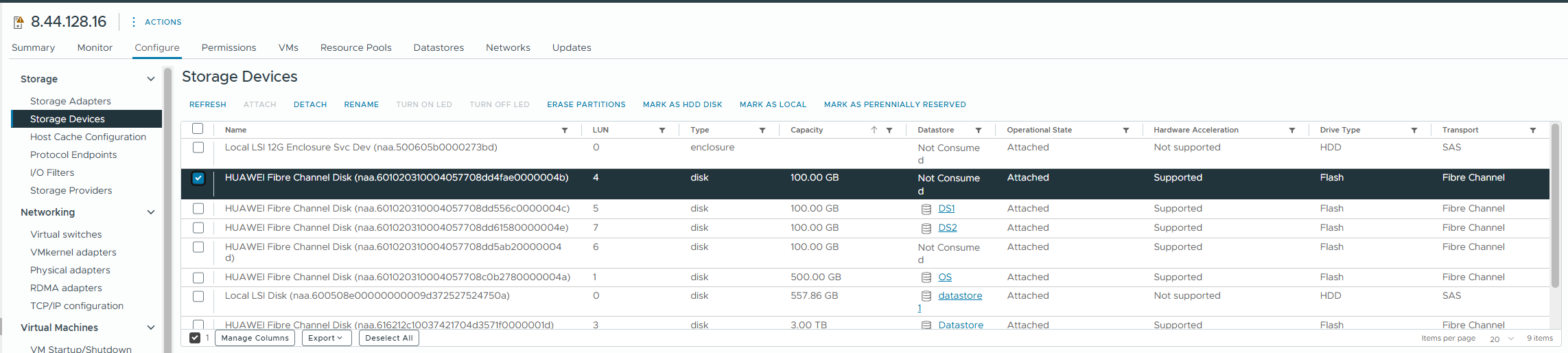

2.9 VAAI Status Check

Test Purpose | To Check the Support for VAAI Primitives on the Storage |

Test Networking | Storage and VMware ESXi Compatibility Test Networking |

Prerequisites | 1.The network is correctly configured according to the network diagram, and the physical links are normal. 2.Host system is functioning properly. 3.Storage system is functioning properly. 4.Multipath is correctly installed and configured. 5.LUN mapping is correct. |

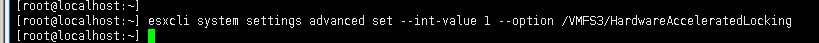

Test Procedure | 1.Log into the storage management system and create mappings, adding a 100GB LUN to the host. 2.Log into vSphere vCenter and execute « Rescan Storage » to scan the LUNs and view disk information. 3.In the VMware ESXi host, run the command: esxcli storage core device vaai status get -d [naa ID]. Check ATS Status, Clone Status, Zero Status, and Delete Status. |

Expected Result | 1.In step 3, ATS Status, Clone Status, Zero Status and Delete Status return values all show supported. |

Test Record | 1.Map a 100GB LUN to the host.

2.Check VAAI status.

|

Test Conclusion | Passed |
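The status check can be automated by parsing the text returned by the `vaai status get` command. The sketch below assumes the output lists each field as `Name: value` on its own line. The sample text in the usage note is illustrative and uses a placeholder device ID, not output captured during the test.

```python
VAAI_FIELDS = ("ATS Status", "Clone Status", "Zero Status", "Delete Status")


def parse_vaai_status(text):
    """Extract the four VAAI status fields from command output text."""
    status = {}
    for line in text.splitlines():
        key, separator, value = line.strip().partition(":")
        if separator and key in VAAI_FIELDS:
            status[key] = value.strip()
    return status


def all_supported(status):
    """True only if every expected field is present and reads 'supported'."""
    return all(status.get(field) == "supported" for field in VAAI_FIELDS)
```

For example, given the illustrative text `"naa.EXAMPLE\n   ATS Status: supported\n   Clone Status: supported\n   Zero Status: supported\n   Delete Status: supported\n"`, `all_supported(parse_vaai_status(text))` evaluates to `True`. A missing or `unsupported` field makes it `False`.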

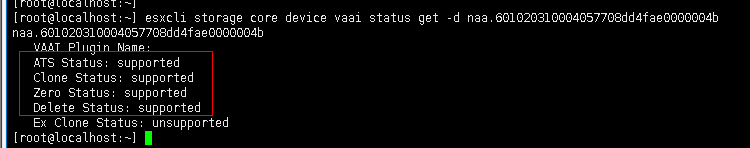

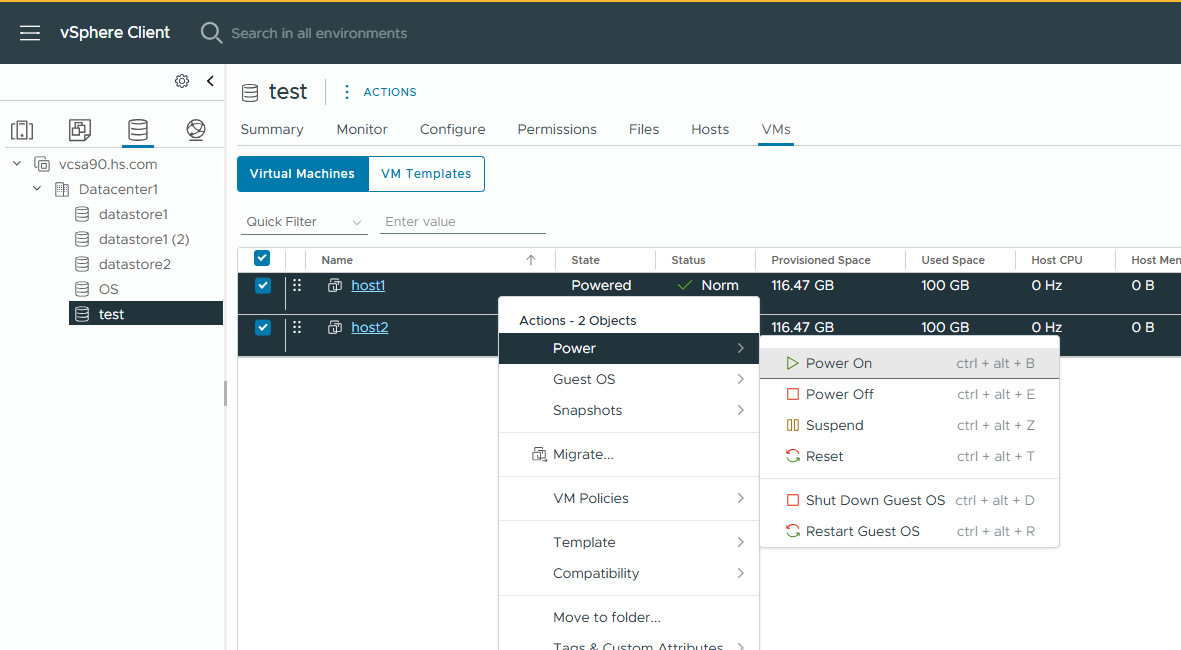

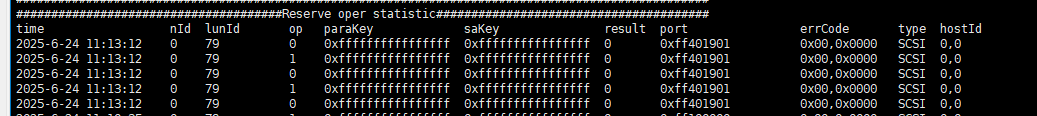

2.10 Verification of VAAI ATS

Test Purpose | To Verify That VAAI ATS is Supported on the Storage |

Test Networking | Storage and VMware ESXi Compatibility Test Networking |

Prerequisites | 1.The network is correctly configured according to the network diagram, and the physical links are normal. 2.Host system is functioning properly. 3.Storage system is functioning properly. 4.Multipath is correctly installed and configured. 5.LUN mapping is correct. 6.The system disk used by the test VM is created on the datastore of the storage-mapped LUN. 7.Two ESXi hosts are added to vCenter cluster normally. |

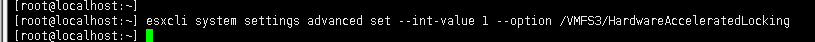

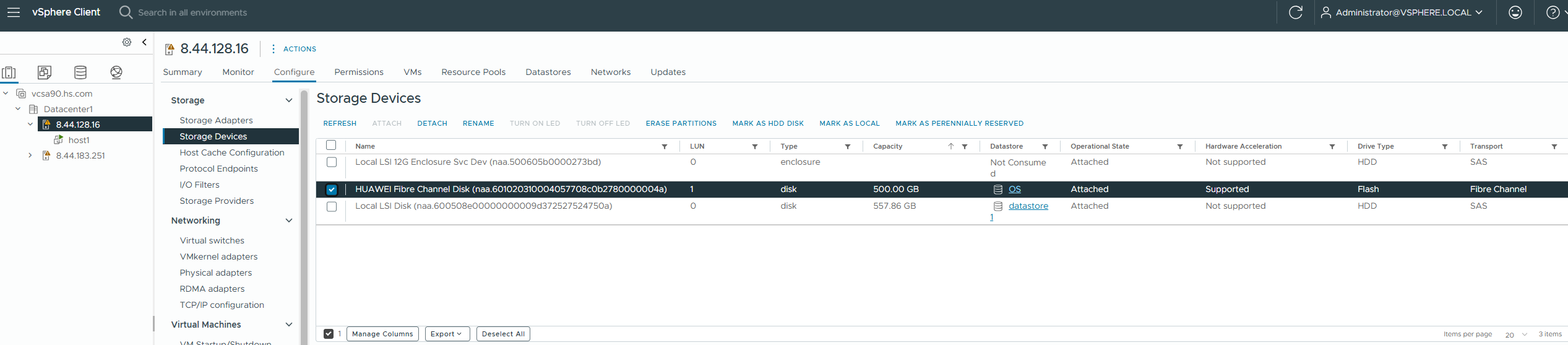

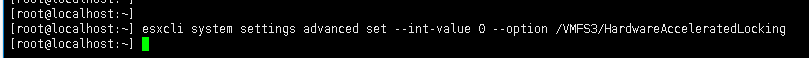

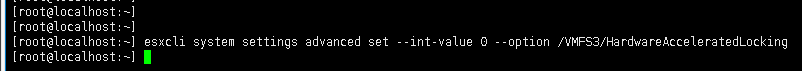

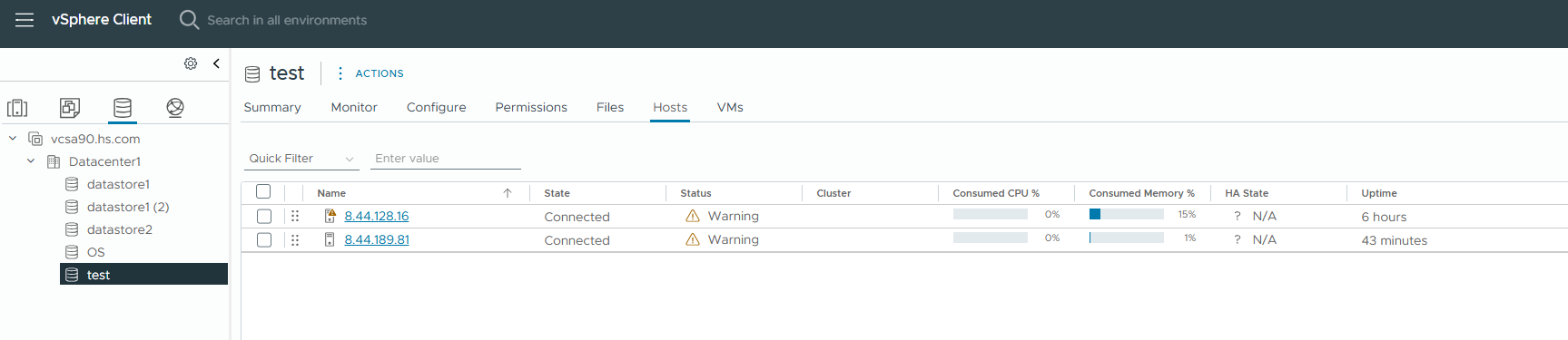

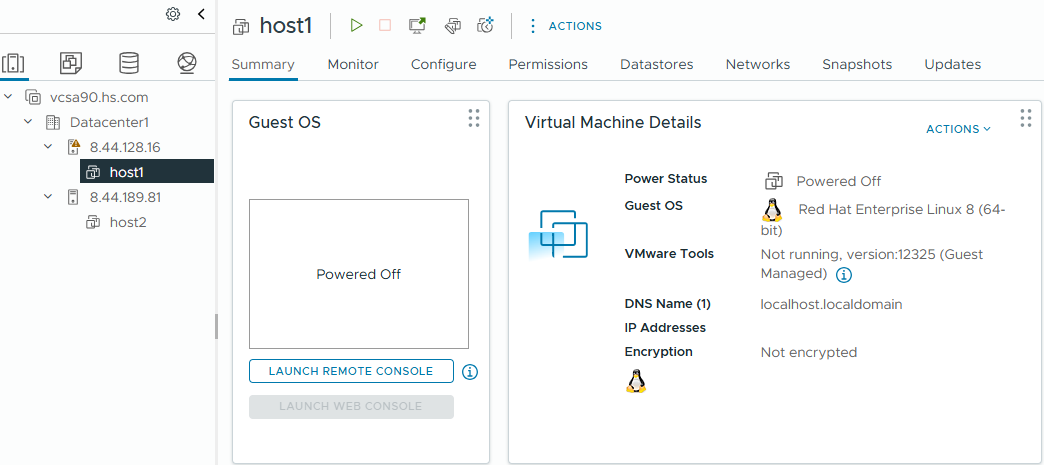

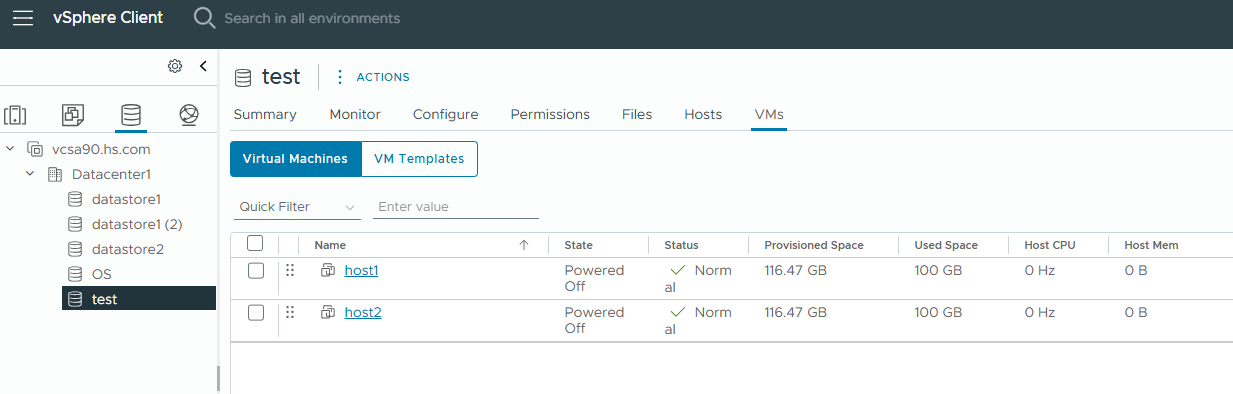

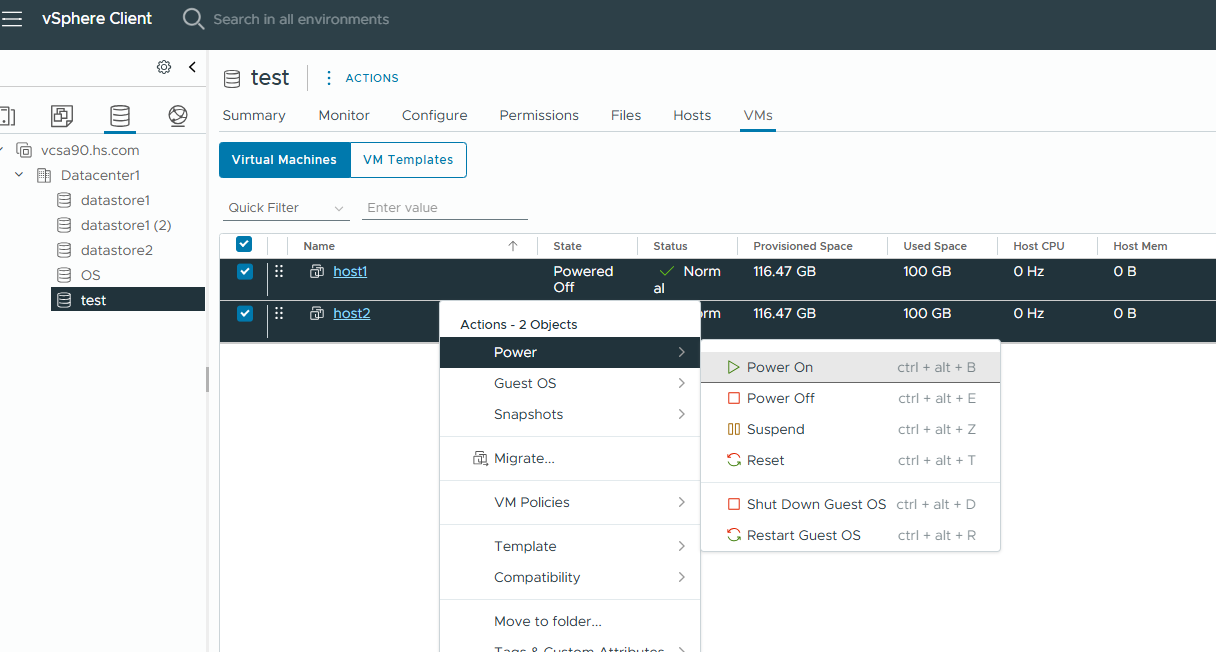

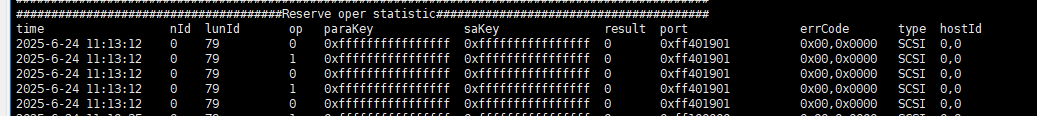

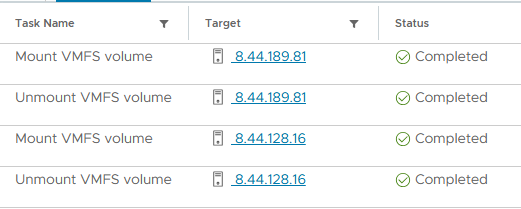

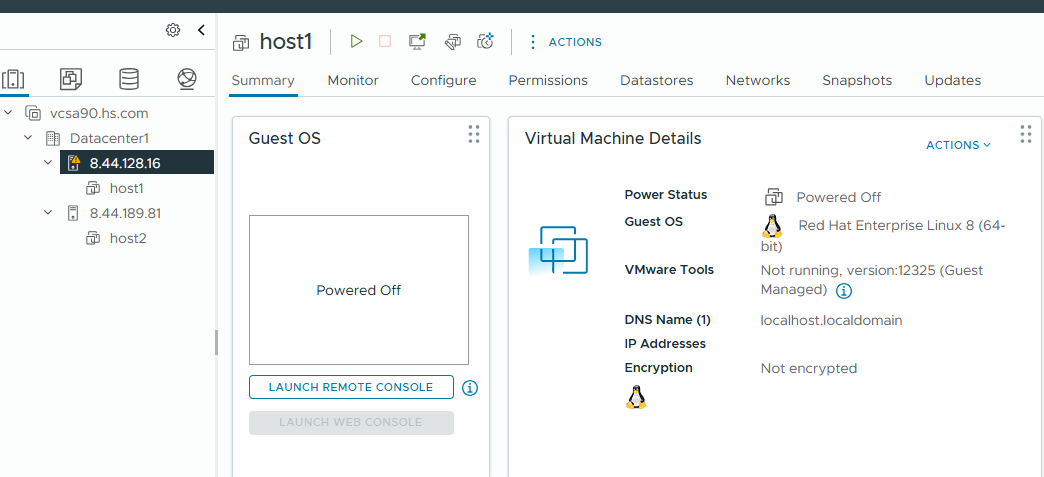

Test Procedure | 1.Log into the storage management system and create mappings, adding a 500GB LUN to the host. 2.Log into vSphere vCenter and execute « Rescan Storage » to scan the LUNs and view disk information. 3.Run the following command on both ESXi hosts to disable VAAI ATS: esxcli system settings advanced set --int-value 0 --option /VMFS3/HardwareAcceleratedLocking. 4.Create a VMFS6 datastore on the LUN and share it between the two ESXi hosts. 5.Create two virtual machines on the datastore created in step 4, one on ESXi host 1 and the other on ESXi host 2. 6.Power on all virtual machines and check the reservation information on the storage. 7.Run the following command on both ESXi hosts to enable VAAI ATS: esxcli system settings advanced set --int-value 1 --option /VMFS3/HardwareAcceleratedLocking. 8.Unmount and remount the datastore on each ESXi host, one at a time. 9.Create two virtual machines on the datastore created in step 4, one on ESXi host 1 and the other on ESXi host 2. 10.Power on all virtual machines and check the reservation information on the storage. |

Expected Result | 1.In step 3, VAAI ATS is disabled successfully. 2.In step 6, the virtual machines start normally, and the SCSI reservation information can be viewed on the storage. 3.In step 7, VAAI ATS is enabled successfully. 4.In step 8, the datastore is successfully unmounted and remounted. 5.In step 10, the virtual machines start normally, and there is no new SCSI reservation information on the storage. |

Test Record | 1.Run the following command on both ESXi hosts to disable VAAI ATS: esxcli system settings advanced set --int-value 0 --option /VMFS3/HardwareAcceleratedLocking.

2.Create a VMFS6 datastore on the LUN, and the datastore is shared by two ESXi hosts.

3.Create two virtual machines, one belonging to ESXi host 1 and the other belonging to ESXi host 2, and all virtual machines are located in the datastore.

4.Power on all virtual machines and check the reservation information on the storage.

5.Run the following command on both ESXi hosts to enable VAAI ATS: esxcli system settings advanced set --int-value 1 --option /VMFS3/HardwareAcceleratedLocking.

6.Unmount and mount the datastore on all ESXi hosts one by one.

7.Create two virtual machines, one belonging to ESXi host 1 and the other belonging to ESXi host 2, and all virtual machines are located in the datastore created in step 2.

8.Power on all virtual machines and check the reservation information on the storage.

|

Test Conclusion | Passed |
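Since the ATS setting must be changed on both ESXi hosts, the disable and enable steps can be expressed as a small plan of per-host commands. This is a sketch only: the host names are hypothetical, and executing the commands on each host is left out.

```python
ATS_OPTION = "/VMFS3/HardwareAcceleratedLocking"


def set_advanced_int(option, value):
    """Build the esxcli argument list that sets an integer advanced option."""
    return ["esxcli", "system", "settings", "advanced", "set",
            "--int-value", str(value), "--option", option]


def ats_plan(hosts, enabled):
    """Return (host, command) pairs that enable or disable VAAI ATS on every host."""
    command = set_advanced_int(ATS_OPTION, 1 if enabled else 0)
    return [(host, command) for host in hosts]
```

With hypothetical hosts `esxi-1` and `esxi-2`, `ats_plan(["esxi-1", "esxi-2"], enabled=False)` returns the disable command once per host, and `enabled=True` returns the matching enable commands.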

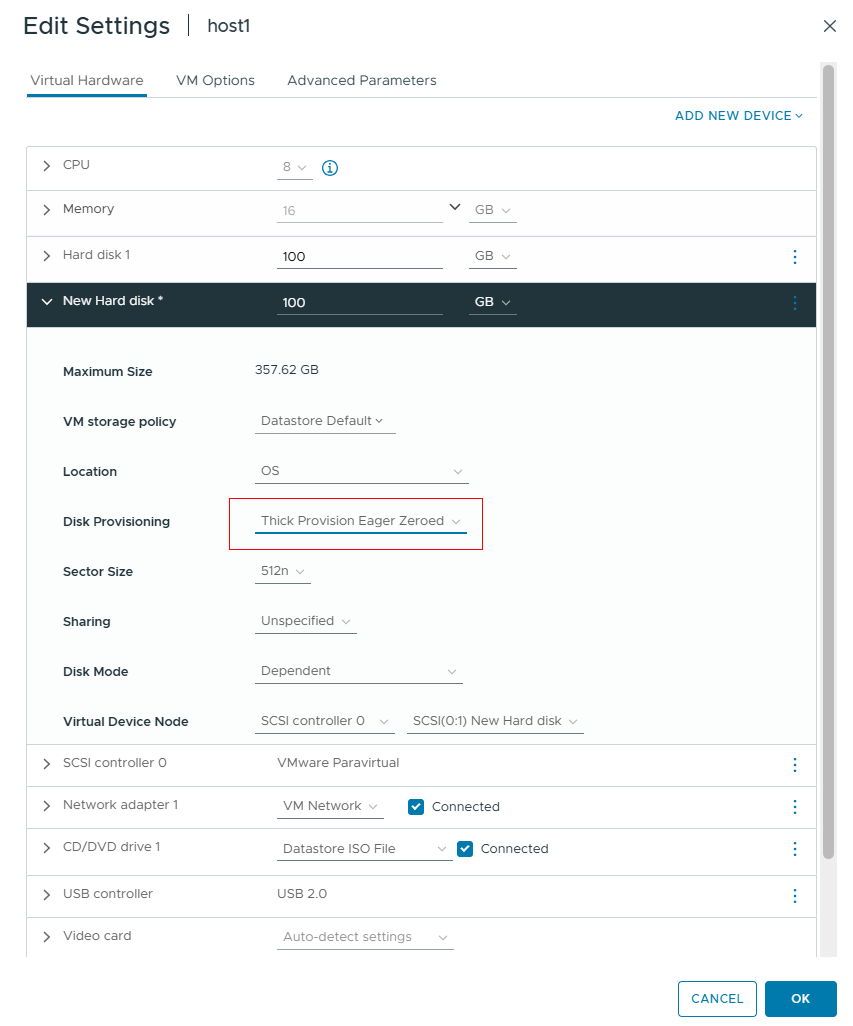

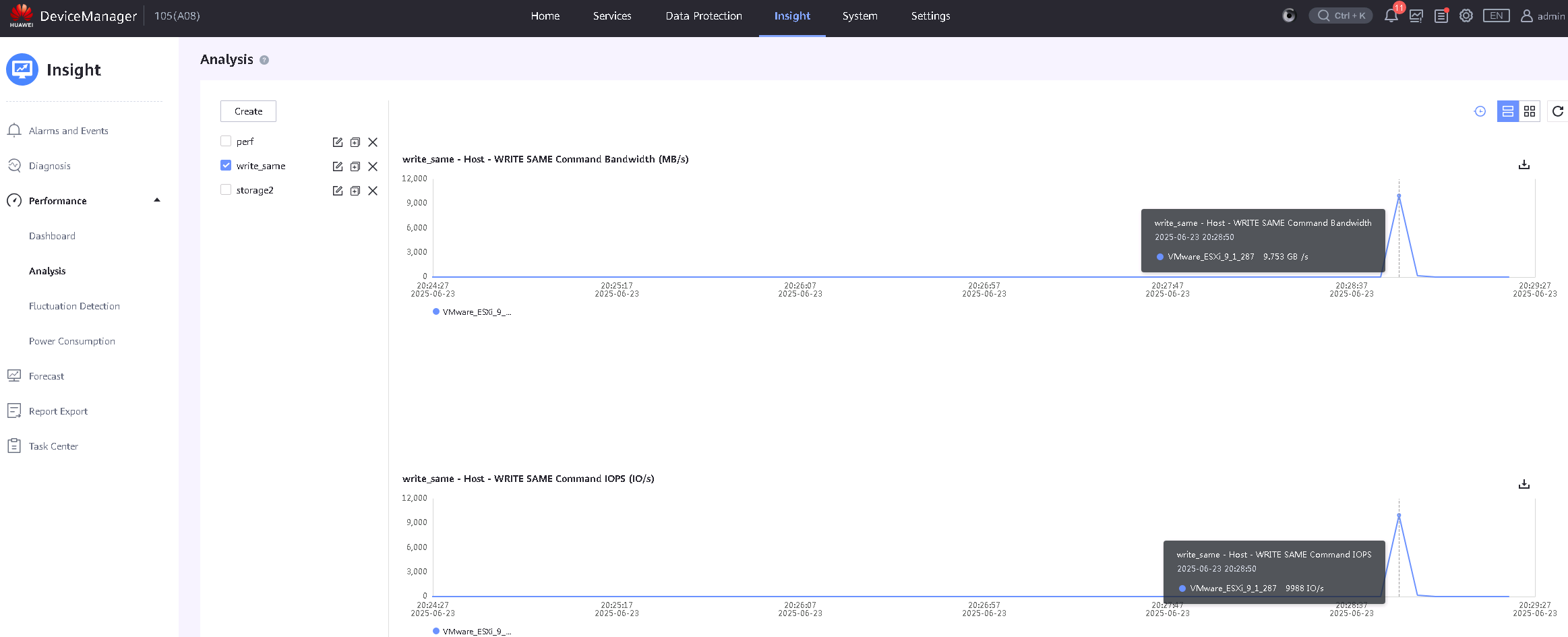

2.11 Verification of VAAI Block Zero

Test Purpose | To Verify That VAAI Block Zero is Supported on the Storage |

Test Networking | Storage and VMware ESXi Compatibility Test Networking |

Prerequisites | 1.The network is correctly configured according to the network diagram, and the physical links are normal. 2.Host system is functioning properly. 3.Storage system is functioning properly. 4.Multipath is correctly installed and configured. 5.LUN mapping is correct. 6.The system disk used by the test VM is created on the datastore of the storage-mapped LUN. |

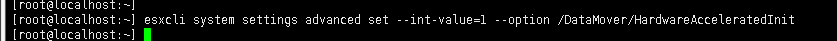

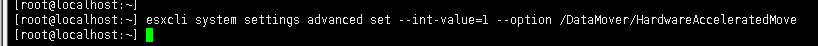

Test Procedure | 1.Log into the storage management system and create mappings, adding a 500GB LUN to the host. 2.Log into vSphere vCenter and execute « Rescan Storage » to scan the LUNs and view disk information. 3.Create a VMFS6 datastore on the LUN. 4.Run the following command on the VMware ESXi host to enable VAAI Block Zero: esxcli system settings advanced set --int-value=1 --option /DataMover/HardwareAcceleratedInit. 5.Create a virtual disk from the VMFS6 datastore and add it to the VM, selecting thick provision eager zeroed as the disk provisioning mode. Monitor the write same traffic on the storage. |

Expected Result | 1.In step 4, VAAI Block Zero is enabled successfully. 2.In step 5, the thick provision eager zeroed disk is created successfully, and write same traffic can be monitored in performance monitoring. |

Test Record |

1.Create a VMFS6 datastore on the LUN.

2.Run the following command on the VMware ESXi host to enable VAAI Block Zero: esxcli system settings advanced set --int-value=1 --option /DataMover/HardwareAcceleratedInit.

3.Create a virtual disk from the VMFS6 datastore and add it to the VM, selecting thick provision eager zeroed as the disk provisioning mode. Monitor the write same traffic on the storage.

|

Test Conclusion | Passed |

2.12 Verification of VAAI Full Copy

Test Purpose | To Verify That VAAI Full Copy is Supported on the Storage |

Test Networking | Storage and VMware ESXi Compatibility Test Networking |

Prerequisites | 1.The network is correctly configured according to the network diagram, and the physical links are normal. 2.Host system is functioning properly. 3.Storage system is functioning properly. 4.Multipath is correctly installed and configured. 5.LUN mapping is correct. 6.The system disk used by the test VM is created on the datastore of the storage-mapped LUN. |

Test Procedure | 1.Log into the storage management system and create mappings, adding a 500GB LUN to the host. 2.Log into vSphere vCenter and execute « Rescan Storage » to scan the LUNs and view disk information. 3.Create a VMFS6 datastore on the LUN. 4.Create a virtual machine on the datastore and install the operating system on the newly created virtual machine. Create a virtual disk from the datastore and add it to the VM. Copy data to fill the disk. 5.Run the following command on the VMware ESXi host to enable VAAI Full Copy: esxcli system settings advanced set --int-value=1 --option /DataMover/HardwareAcceleratedMove. 6.Clone the virtual machine. The cloned virtual machine is located on the same datastore as the original virtual machine. Monitor the full copy traffic on the storage. |

Expected Result | 1.In step 5, VAAI Full Copy is enabled successfully. 2.In step 6, the virtual machine is cloned successfully, and full copy traffic can be monitored in performance monitoring. |

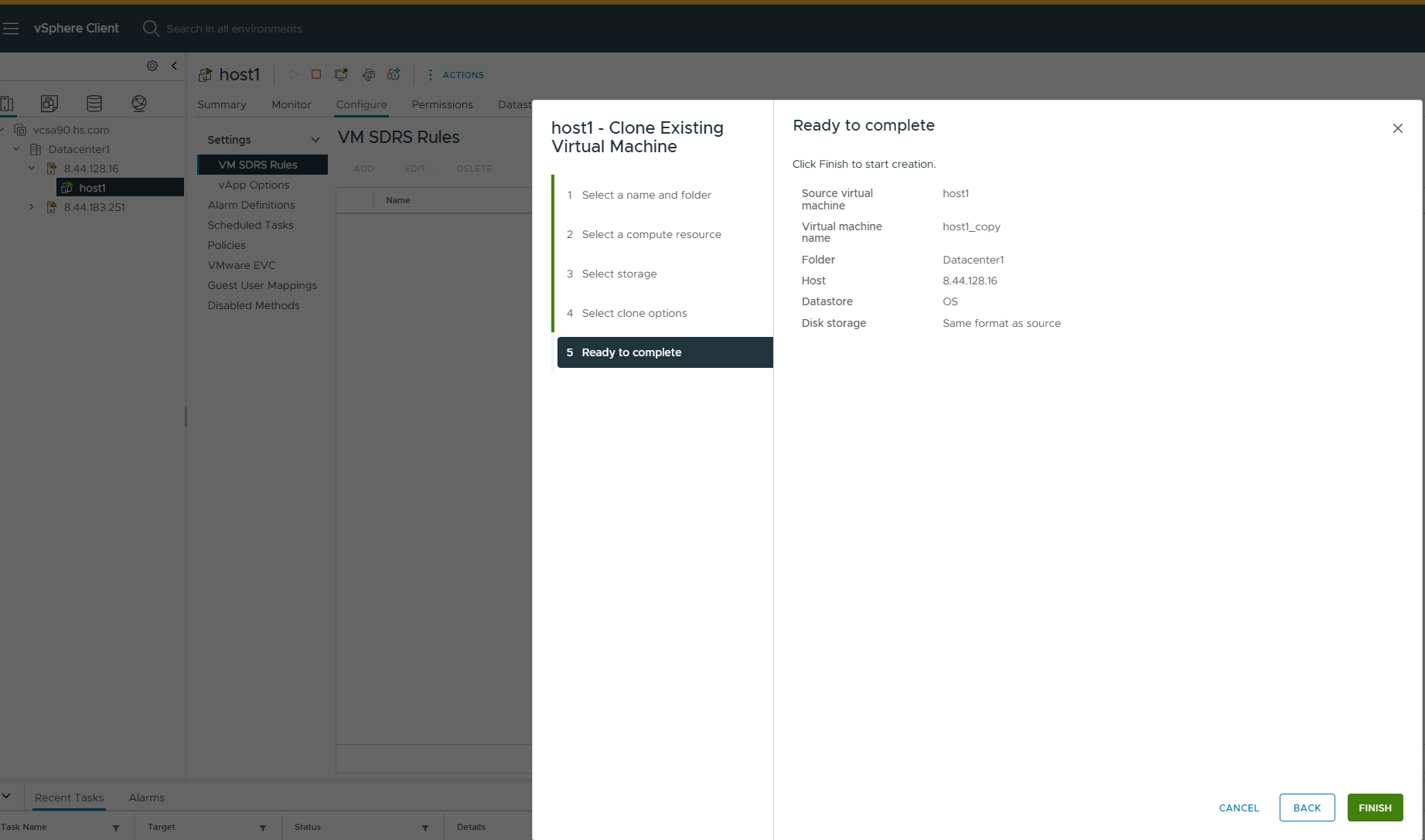

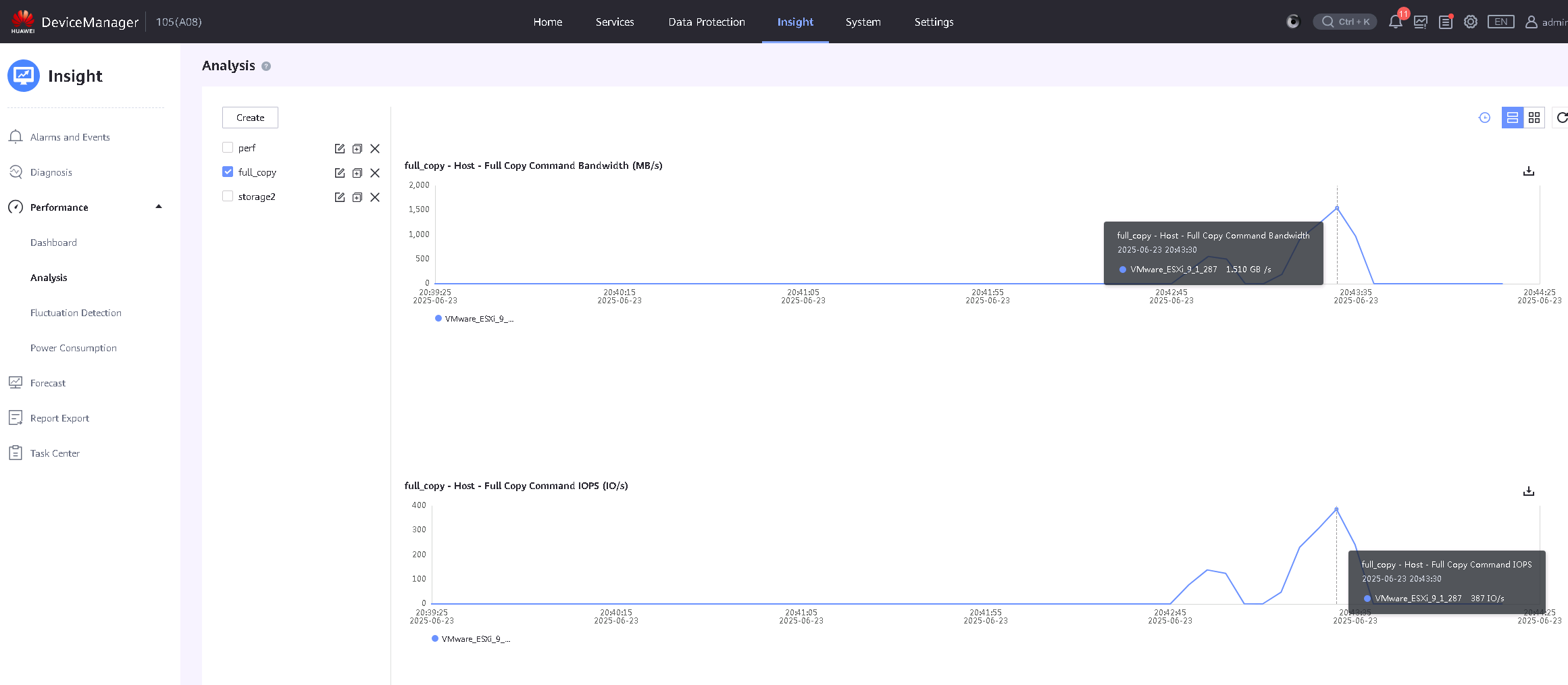

Test Record | 1.Create a VMFS6 datastore on the LUN.

2.Create a virtual machine on the datastore and install the operating system on the newly created virtual machine. Create a virtual disk from the datastore and add it to the VM. Use vdbench to fill the disk with data.

3.Run the following command on the VMware ESXi host to enable VAAI Full Copy: esxcli system settings advanced set --int-value=1 --option /DataMover/HardwareAcceleratedMove.

4.Clone the virtual machine. The cloned virtual machine is located on the same datastore as the original virtual machine. Monitor the full copy traffic on the storage.

|

Test Conclusion | Passed |
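One way to confirm that a DataMover option was enabled is to read the setting back with `esxcli system settings advanced list -o <option>` and check its integer value. The sketch below parses such output. The assumed `Int Value: <n>` field layout should be verified against the actual host output, and the sample text in the usage note is illustrative rather than captured from the test.

```python
def parse_int_value(text):
    """Return the current integer value from advanced-setting list output.

    Matches the 'Int Value' field exactly so that 'Default Int Value'
    is not picked up by mistake.
    """
    for line in text.splitlines():
        key, separator, value = line.strip().partition(":")
        if separator and key == "Int Value":
            return int(value.strip())
    raise ValueError("no 'Int Value' field found")
```

For the illustrative text `"   Path: /DataMover/HardwareAcceleratedMove\n   Int Value: 1\n   Default Int Value: 1\n"`, `parse_int_value(text)` returns `1`, which corresponds to the option being enabled.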

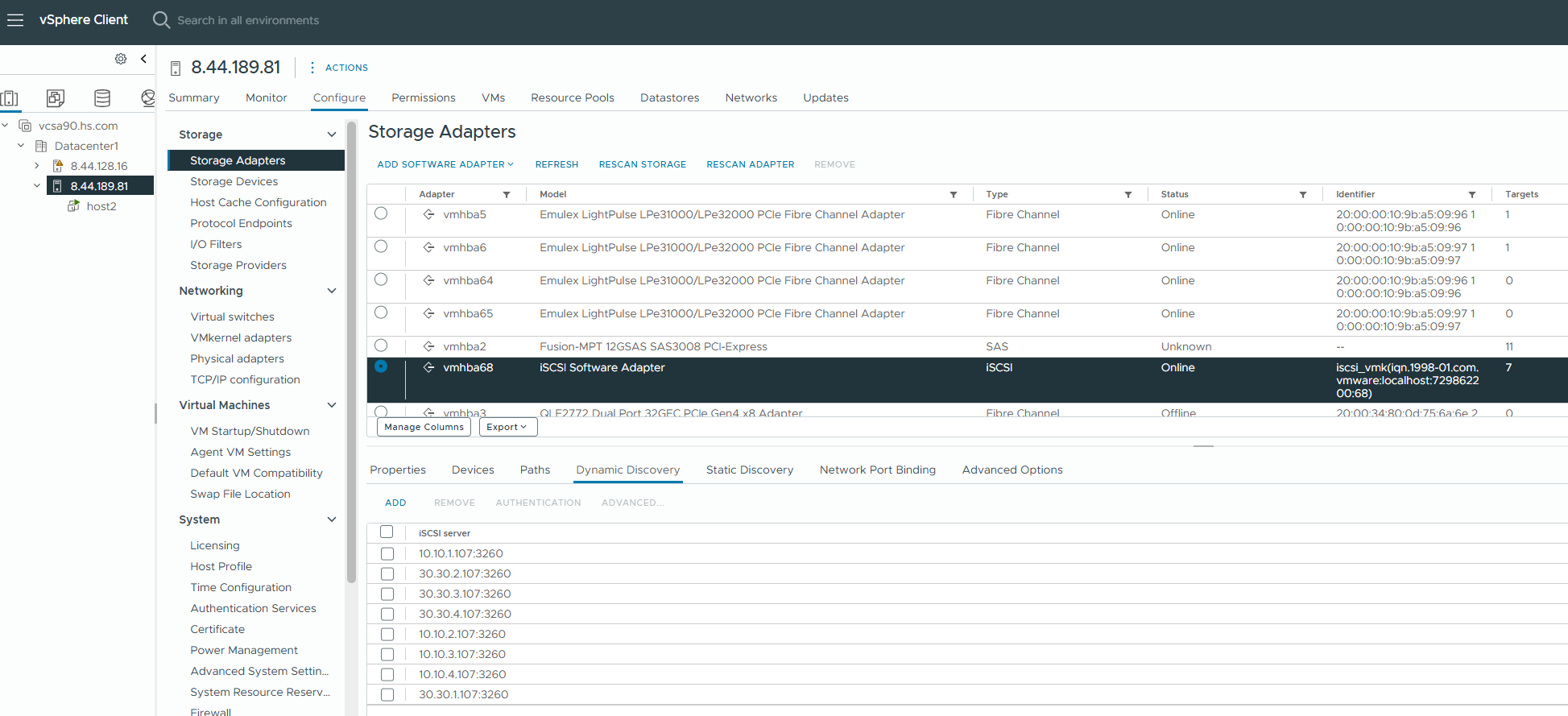

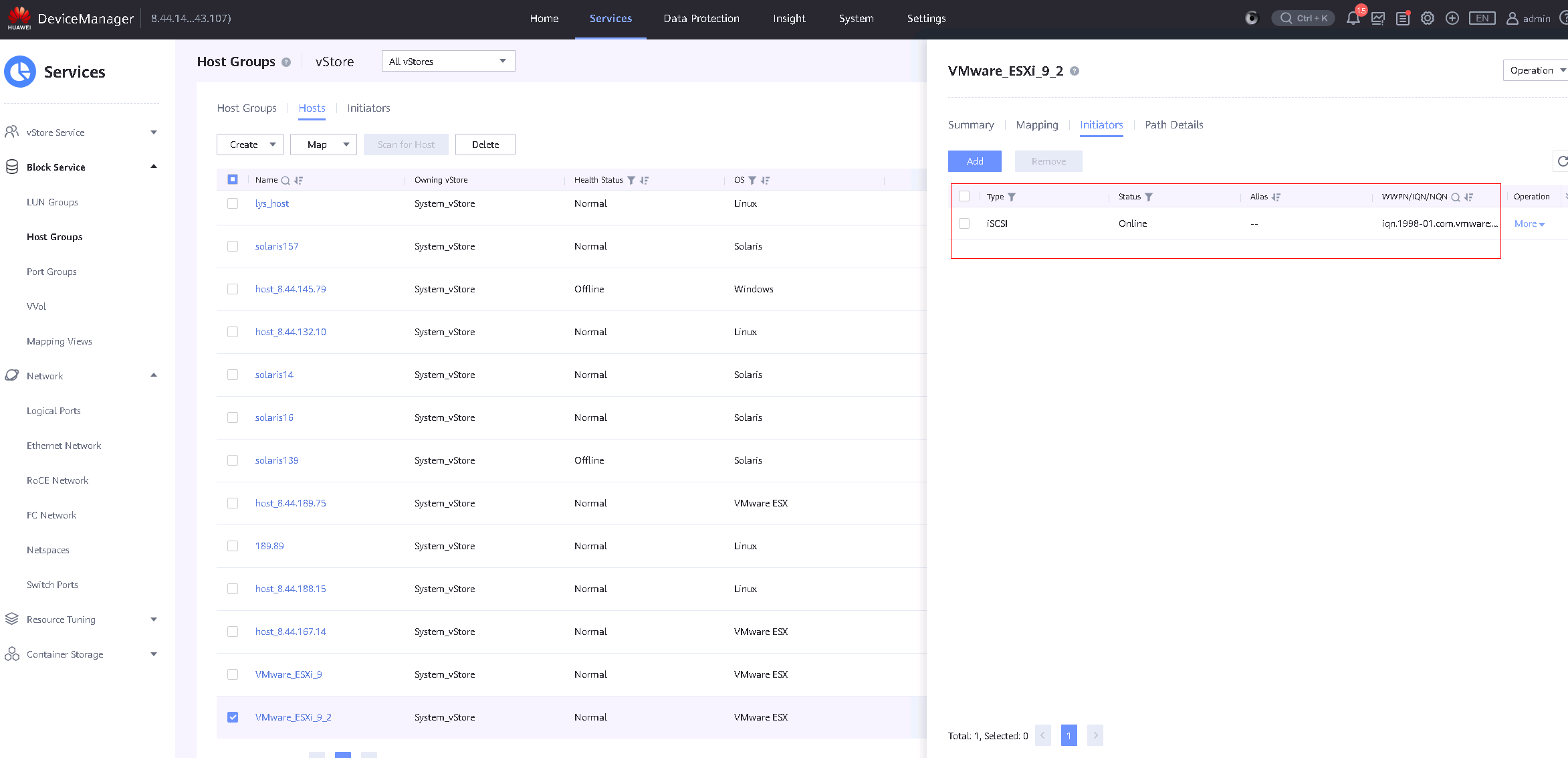

2.13 iSCSI Connection and LUN Mapping

Test Purpose | To Verify That iSCSI Connection Could be Established and the Virtual Machine is able to Perform Read/Write Operations on the LUN Mapped from Storage |

Test Networking | Storage and VMware ESXi Compatibility Test Networking |

Prerequisites | 1.The network has been correctly set up according to the corresponding scenario and the physical links are functioning properly. 2.The host system is operating normally. 3.The storage system is operating normally. |

Test Procedure | 1.Establish iSCSI connections between the host and storage. 2.Log into the storage management system, add the host initiator to the corresponding host, and configure the host mode to load balancing. 3.Observe the initiator connection status. 4.Log into the storage management system, create the mappings, and add four 100GB LUNs to the host. 5.Log into vSphere vCenter and perform a « Rescan Storage » to scan the LUNs and view disk information. 6.Create datastores on LUN A and LUN B, and add them to the VM as « Virtual Disks »; add LUN C and LUN D to the VM as « RDM Disks ». 7.In the VM, perform raw disk read/write operations on LUN A and LUN C using vdbench, and observe the I/O performance. On LUN B and LUN D, create file systems, perform file system read/write operations using vdbench, and observe the I/O performance. |

Expected Result | 1.In step 5, the host is able to detect the four mapped LUNs. 2.In step 7, the VM is able to perform read/write operations on all four disks without issues. |

Test Record | 1.Establish iSCSI connections between the host and storage.

2.Log into the storage management system, add the host initiator to the corresponding host, and configure the host mode correctly according to the test scenario.

3.Observe whether the initiator connection status is online.

4.Log into the storage management system, create the mappings, and add four 100GB LUNs to the host.

5.Log into vSphere vCenter and perform a « Rescan Storage » to scan the LUNs and view disk information.

6.Create datastores on LUN A and LUN B, and add them to the VM as « Virtual Disks »; add LUN C and LUN D to the VM as « RDM Disks. »

7.In the VM, perform raw disk read/write operations on LUN A and LUN C using vdbench, and observe the I/O performance. On LUN B and LUN D, create file systems, perform file system read/write operations using vdbench, and observe the I/O performance.

|

Test Conclusion | Passed |
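The report does not state how the iSCSI connections were established. As one possible approach, the sketch below builds the esxcli commands for a software iSCSI adapter using dynamic (SendTargets) discovery. The use of a software adapter, the adapter name, and the portal addresses are all assumptions for illustration.

```python
def iscsi_setup_commands(adapter, portals):
    """Build esxcli commands for software iSCSI with SendTargets discovery.

    adapter: hypothetical software iSCSI adapter name, e.g. 'vmhba64'.
    portals: storage iSCSI portal addresses as 'ip:port' strings (assumed).
    """
    # Enable the software iSCSI initiator on the host.
    commands = [["esxcli", "iscsi", "software", "set", "--enabled=true"]]
    # Register each storage portal as a dynamic discovery target.
    for portal in portals:
        commands.append([
            "esxcli", "iscsi", "adapter", "discovery", "sendtarget", "add",
            f"--adapter={adapter}", f"--address={portal}",
        ])
    # Rescan all adapters so that newly mapped LUNs are detected.
    commands.append(["esxcli", "storage", "core", "adapter", "rescan", "--all"])
    return commands
```

Calling `iscsi_setup_commands("vmhba64", ["192.0.2.10:3260", "192.0.2.11:3260"])` produces four commands: one enable, two discovery additions, and one rescan. The addresses are taken from a documentation-only range.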

3. Reliability Test

3.1 Storage Replication Link Failure and Recovery

Test Purpose | To Verify That Business is Normal in the Storage Replication Link Failure Scenario |

Test Networking | Storage and VMware ESXi Compatibility Test Networking |

Prerequisites | 1.The network is correctly configured according to the network diagram, and the physical links are normal. 2.Host system is functioning properly. 3.Storage system is functioning properly. 4.Multipath is correctly installed and configured. 5.LUN mapping is correct. 6.The storage HyperMetro is configured correctly. 7.The system disk used by the test VM is created on the datastore of the storage-mapped LUN. |

Test Procedure | 1.Log into the storage management system and create mappings, adding four 100GB HyperMetro LUNs to the host. 2.Log into vSphere vCenter and execute « Rescan Storage » to scan the LUNs and view disk information. 3.Create datastores on LUN A and LUN B, and add them to the VM as « Virtual Disks »; add LUN C and LUN D to the VM as « RDM Disks ». 4.In the VM, perform raw disk read/write operations on LUN A and LUN C using vdbench, and observe the I/O performance. In the VM, create file systems on LUN B and LUN D, perform file system read/write operations using vdbench, and observe the I/O performance. 5.Set the preferred site of the HyperMetro pairs to storage 1, then unplug all replication link cables between the storage systems and observe the I/O performance. 6.Plug the cables back in, wait for HyperMetro pair synchronization to complete, and observe the I/O performance. 7.Set the preferred site of the HyperMetro pairs to storage 2, then unplug all replication link cables between the storage systems and observe the I/O performance. 8.Plug the cables back in, wait for HyperMetro pair synchronization to complete, and observe the I/O performance. |

Expected Result | 1.In step 2, the host is able to scan and detect the four mapped LUNs. 2.In step 4, I/O read/write operations are normal. 3.In step 5, I/O is uninterrupted, the reset time is within the specification range, and I/O is balanced across all remaining paths of the LUN. 4.In step 6, there is no reset, the faulted path status recovers to normal, and I/O resumes balanced across all paths of the LUN. 5.In step 7, I/O is uninterrupted, the reset time is within the specification range, and I/O is balanced across all remaining paths of the LUN. 6.In step 8, there is no reset, the faulted path status recovers to normal, and I/O resumes balanced across all paths of the LUN. |

Test Record | 1.Log into the storage management system, create mappings, and add four 100GB LUNs to the host.

2.Log into vSphere vCenter and execute « Rescan Storage » to scan the LUNs and view disk information.

3.Create datastores on LUN A and LUN B, and add them to the VM as « Virtual Disks »; add LUN C and LUN D to the VM as « RDM Disks ».

4.Perform vdbench raw disk read/write tests on LUN A and LUN C within the VM, and observe the I/O; create file systems on LUN B and LUN D within the VM, and perform vdbench file system read/write tests, observing the I/O.

5.Set the preferred site of the HyperMetro pairs to storage 1, then unplug all replication link cables between the two storage systems and observe the I/O performance.

6.Plug the cables back in, then wait for the HyperMetro pair synchronization to complete and observe the I/O performance.

7.Set the preferred site of the HyperMetro pairs to storage 2, then unplug all replication link cables between the two storage systems and observe the I/O performance.

8.Plug the cables back in, then wait for the HyperMetro pair synchronization to complete and observe the I/O performance.

|
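The "I/O is uninterrupted" and "reset time is within the specification range" criteria can be checked mechanically from per-interval I/O rates. The following is only a minimal sketch: it assumes the rates have already been extracted into a list (one value per reporting interval), and the function names and the 30-second limit are hypothetical placeholders, not values taken from this report or from any product specification.

```python
from typing import Sequence


def longest_stall_seconds(iops_samples: Sequence[float], interval_s: float = 1.0) -> float:
    """Return the longest run of consecutive zero-I/O intervals, in seconds."""
    longest = 0
    current = 0
    for rate in iops_samples:
        if rate <= 0:
            # Still inside a stall: extend the current run.
            current += 1
            longest = max(longest, current)
        else:
            # I/O resumed: the run ends here.
            current = 0
    return longest * interval_s


def io_within_spec(iops_samples: Sequence[float],
                   interval_s: float = 1.0,
                   max_stall_s: float = 30.0) -> bool:
    """True if no stall exceeds max_stall_s (hypothetical limit, adjust to the real spec)."""
    return longest_stall_seconds(iops_samples, interval_s) <= max_stall_s


if __name__ == "__main__":
    # Illustrative samples only: a three-interval pause during a link failure.
    samples = [5200, 5100, 0, 0, 0, 4900, 5050]
    print(longest_stall_seconds(samples))  # 3.0
    print(io_within_spec(samples))         # True
```

A stall that never ends (samples remain at zero until the end of the run) is reported as a long stall rather than as a separate "interrupted" state, so the limit should be chosen shorter than the observation window.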

3.2 Storage HyperMetro Pair Pause and Synchronize

Test Purpose | To Verify That Business is Normal in the Storage HyperMetro Pairs Pause and Synchronize Scenario |

Test Networking | Storage and VMware ESXi Compatibility Test Networking |

Prerequisites | 1.The network is correctly configured according to the network diagram, and the physical links are normal. 2.Host system is functioning properly. 3.Storage system is functioning properly. 4.Multipath is correctly installed and configured. 5.LUN mapping is correct. 6.The storage HyperMetro is configured correctly. 7.The system disk used by the test VM is created on the datastore of the storage-mapped LUN. |

Test Procedure | 1.Log into the storage management system and create mappings, adding four 100GB HyperMetro LUNs to the host. 2.Log into vSphere vCenter and execute « Rescan Storage » to scan the LUNs and view disk information. 3.Create datastores on LUN A and LUN B, and add them to the VM as « Virtual Disks »; add LUN C and LUN D to the VM as « RDM Disks ». 4.In the VM, perform raw disk read/write operations on LUN A and LUN C using vdbench, and observe the I/O performance. In the VM, create file systems on LUN B and LUN D, and perform file system read/write operations using vdbench, observing the I/O performance. 5.Pause the preferred site of HyperMetro pairs and observe the I/O performance. 6.Synchronize HyperMetro pairs and observe the I/O performance. 7.Pause the non-preferred site of HyperMetro pairs and observe the I/O performance. 8.Synchronize HyperMetro pairs and observe the I/O performance. |

Expected Result | 1.In step 2, the host is able to scan and detect the four mapped LUNs. 2.In step 4, I/O read/write operations are normal. 3.In step 5, I/O is uninterrupted, the reset time is within the specification range, and I/O is balanced across all remaining paths of the LUN. 4.In step 6, there is no reset, the faulted path status recovers to normal, and I/O resumes balanced across all paths of the LUN. 5.In step 7, I/O is uninterrupted, the reset time is within the specification range, and I/O is balanced across all remaining paths of the LUN. 6.In step 8, there is no reset, the faulted path status recovers to normal, and I/O resumes balanced across all paths of the LUN. |

Test Record | 1.Log into the storage management system, create mappings, and add four 100GB LUNs to the host.

2.Log into vSphere vCenter and execute « Rescan Storage » to scan the LUNs and view disk information.

3.Create datastores on LUN A and LUN B, and add them to the VM as « Virtual Disks »; add LUN C and LUN D to the VM as « RDM Disks ».

4.Perform vdbench raw disk read/write tests on LUN A and LUN C within the VM, and observe the I/O; create file systems on LUN B and LUN D within the VM, and perform vdbench file system read/write tests, observing the I/O.

5.Pause the preferred site of HyperMetro pairs and observe the I/O performance.

6.Synchronize HyperMetro pairs and observe the I/O performance.

7.Pause the non-preferred site of HyperMetro pairs and observe the I/O performance.

8.Synchronize HyperMetro pairs and observe the I/O performance.

|

Test Conclusion | Passed |

3.3 One Site of HyperMetro Storage Abnormal Power-Off and Recovery

Test Purpose | To Verify That Business is Normal in the One Site of HyperMetro Storage Abnormal Power-Off Scenario |

Test Networking | Storage and VMware ESXi Compatibility Test Networking |

Prerequisites | 1.The network is correctly configured according to the network diagram, and the physical links are normal. 2.Host system is functioning properly. 3.Storage system is functioning properly. 4.Multipath is correctly installed and configured. 5.LUN mapping is correct. 6.The storage HyperMetro is configured correctly. 7.The system disk used by the test VM is created on the datastore of the storage-mapped LUN. |

Test Procedure | 1.Log into the storage management system and create mappings, adding four 100GB HyperMetro LUNs to the host. 2.Log into vSphere vCenter and execute « Rescan Storage » to scan the LUNs and view disk information. 3.Create datastores on LUN A and LUN B, and add them to the VM as « Virtual Disks »; add LUN C and LUN D to the VM as « RDM Disks ». 4.In the VM, perform raw disk read/write operations on LUN A and LUN C using vdbench, and observe the I/O performance. In the VM, create file systems on LUN B and LUN D, and perform file system read/write operations using vdbench, observing the I/O performance. 5.Remove the power supply of the preferred site, then wait for the BBU to discharge and observe the I/O performance. 6.Power on the storage and observe the I/O performance. 7.Remove the power supply of the non-preferred site, then wait for the BBU to discharge and observe the I/O performance. 8.Power on the storage and observe the I/O performance. |

Expected Result | 1.In step 2, the host is able to scan and detect the four mapped LUNs. 2.In step 4, I/O read/write operations are normal. 3.In step 5, I/O is uninterrupted, the reset time is within the specification range, and I/O is balanced across all remaining paths of the LUN. 4.In step 6, there is no reset, the faulted path status recovers to normal, and I/O resumes balanced across all paths of the LUN. 5.In step 7, I/O is uninterrupted, the reset time is within the specification range, and I/O is balanced across all remaining paths of the LUN. 6.In step 8, there is no reset, the faulted path status recovers to normal, and I/O resumes balanced across all paths of the LUN. |

Test Record | 1.Log into the storage management system, create mappings, and add four 100GB LUNs to the host.

2.Log into vSphere vCenter and execute « Rescan Storage » to scan the LUNs and view disk information.

3.Create datastores on LUN A and LUN B, and add them to the VM as « Virtual Disks »; add LUN C and LUN D to the VM as « RDM Disks ».

4.Perform vdbench raw disk read/write tests on LUN A and LUN C within the VM, and observe the I/O; create file systems on LUN B and LUN D within the VM, and perform vdbench file system read/write tests, observing the I/O.

5.Remove the power supply of the preferred site, then wait for the BBU to discharge and observe the I/O performance.

6.Power on the storage and observe the I/O performance.

7.Remove the power supply of the non-preferred site, then wait for the BBU to discharge and observe the I/O performance.

8.Power on the storage and observe the I/O performance.

|

Test Conclusion | Passed |

3.4 Storage Interface Card Failure and Recovery

Test Purpose | To Verify That Business is Normal in the Interface Card Failure Scenario |

Test Networking | Storage and VMware ESXi Compatibility Test Networking |

Prerequisites | 1.The network is correctly configured according to the network diagram, and the physical links are normal. 2.Host system is functioning properly. 3.Storage system is functioning properly. 4.Multipath is correctly installed and configured. 5.LUN mapping is correct. 6.The storage HyperMetro is configured correctly. 7.The system disk used by the test VM is created on the datastore of the storage-mapped LUN. |

Test Procedure | 1.Log into the storage management system and create mappings, adding four 100GB HyperMetro LUNs to the host. 2.Log into vSphere vCenter and execute « Rescan Storage » to scan the LUNs and view disk information. 3.Create datastores on LUN A and LUN B, and add them to the VM as « Virtual Disks »; add LUN C and LUN D to the VM as « RDM Disks ». 4.In the VM, perform raw disk read/write operations on LUN A and LUN C using vdbench, and observe the I/O performance. In the VM, create file systems on LUN B and LUN D, and perform file system read/write operations using vdbench, observing the I/O performance. 5.Unplug the interface card of Controller A and observe the I/O performance. 6.Reinsert the interface card and observe the I/O performance. |

Expected Result | 1.In step 2, the host is able to scan and detect the four mapped LUNs. 2.In step 4, I/O read/write operations are normal. 3.In step 5, I/O is uninterrupted, the reset time is within the specification range, and I/O is balanced across all remaining paths of the LUN. 4.In step 6, there is no reset, the faulted path status recovers to normal, and I/O resumes balanced across all paths of the LUN. |

Test Record | 1.Log into the storage management system, create mappings, and add four 100GB LUNs to the host.

2.Log into vSphere vCenter and execute « Rescan Storage » to scan the LUNs and view disk information.

3.Create datastores on LUN A and LUN B, and add them to the VM as « Virtual Disks »; add LUN C and LUN D to the VM as « RDM Disks ».

4.Perform vdbench raw disk read/write tests on LUN A and LUN C within the VM, and observe the I/O; create file systems on LUN B and LUN D within the VM, and perform vdbench file system read/write tests, observing the I/O.

5.Unplug the interface card of Controller A and observe the I/O performance.

6.Reinsert the interface card and observe the I/O performance.

|

Test Conclusion | Passed |
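The "I/O is balanced across all remaining paths" criterion can be expressed as a simple deviation check. The sketch below assumes per-path I/O counts for one LUN have already been collected by whatever monitoring tool is in use; the path names, the function name, and the 10% tolerance are hypothetical and are not taken from this report.

```python
from typing import Mapping


def path_imbalance(io_per_path: Mapping[str, int]) -> float:
    """Return the largest relative deviation of any path from the mean I/O count."""
    if not io_per_path:
        raise ValueError("no paths supplied")
    counts = list(io_per_path.values())
    mean = sum(counts) / len(counts)
    if mean == 0:
        raise ValueError("no I/O recorded on any path")
    return max(abs(count - mean) for count in counts) / mean


def is_balanced(io_per_path: Mapping[str, int], tolerance: float = 0.10) -> bool:
    """True if every path is within the tolerance of the mean (hypothetical 10% default)."""
    return path_imbalance(io_per_path) <= tolerance


if __name__ == "__main__":
    # Illustrative counts for the four paths that remain after one interface card is removed.
    remaining = {
        "path-1": 1000,
        "path-2": 1020,
        "path-3": 980,
        "path-4": 1000,
    }
    print(path_imbalance(remaining))  # 0.02
    print(is_balanced(remaining))     # True
```

Only paths that are expected to carry I/O should be passed in; a failed path left in the mapping with a count of zero would be reported as a large imbalance.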

3.5 Single Controller Failure and Recovery

Test Purpose | To Verify That Business is Normal in the Controller Failure Scenario |

Test Networking | Storage and VMware ESXi Compatibility Test Networking |

Prerequisites | 1.The network has been correctly set up according to the corresponding scenario, and the physical connections are functioning properly. 2.The host system is operating normally. 3.The storage system is functioning normally. 4.Multipath has been properly installed and configured. 5.LUN mapping is normal. 6.The storage HyperMetro is configured correctly. 7.The system disk used by the test VM is created on the datastore of the storage-mapped LUN. |

Test Procedure | 1.Log into the storage management system and create mappings, adding four 100GB HyperMetro LUNs to the host. 2.Log into vSphere vCenter and execute « Rescan Storage » to scan the LUNs and view disk information. 3.Create datastores on LUN A and LUN B, and add them to the VM as « Virtual Disks »; add LUN C and LUN D to the VM as « RDM Disks ». 4.In the VM, perform raw disk read/write operations on LUN A and LUN C using vdbench, and observe the I/O performance. In the VM, create file systems on LUN B and LUN D, and perform file system read/write operations using vdbench, observing the I/O performance. 5.Unplug Controller A and observe the I/O performance. 6.Reinsert the controller. Wait for Controller A to recover and observe the I/O performance. |

Expected Result | 1.In step 2, the host is able to scan and detect the four mapped LUNs. 2.In step 4, I/O read/write operations are normal. 3.In step 5, I/O is uninterrupted, the reset time is within the specification range, and I/O is balanced across all remaining paths of the LUN. 4.In step 6, there is no reset, the faulted path status recovers to normal, and I/O resumes balanced across all paths of the LUN. |

Test Record | 1.Log into the storage management system and create mappings, adding four 100GB LUNs to the host.

2.Log into vSphere vCenter and execute « Rescan Storage » to scan the LUNs and view disk information.

3.Create datastores on LUN A and LUN B, and add them to the VM as « Virtual Disks »; add LUN C and LUN D to the VM as « RDM Disks ».

4.Perform raw disk read/write operations on LUN A and LUN C in the VM using vdbench and observe the I/O. Create file systems on LUN B and LUN D in the VM, and perform file system read/write operations using vdbench, observing the I/O.

5.Unplug Controller A and observe the I/O performance.

6.Reinsert the controller. Wait for Controller A to recover and observe the I/O performance.

|

Test Conclusion | Passed |

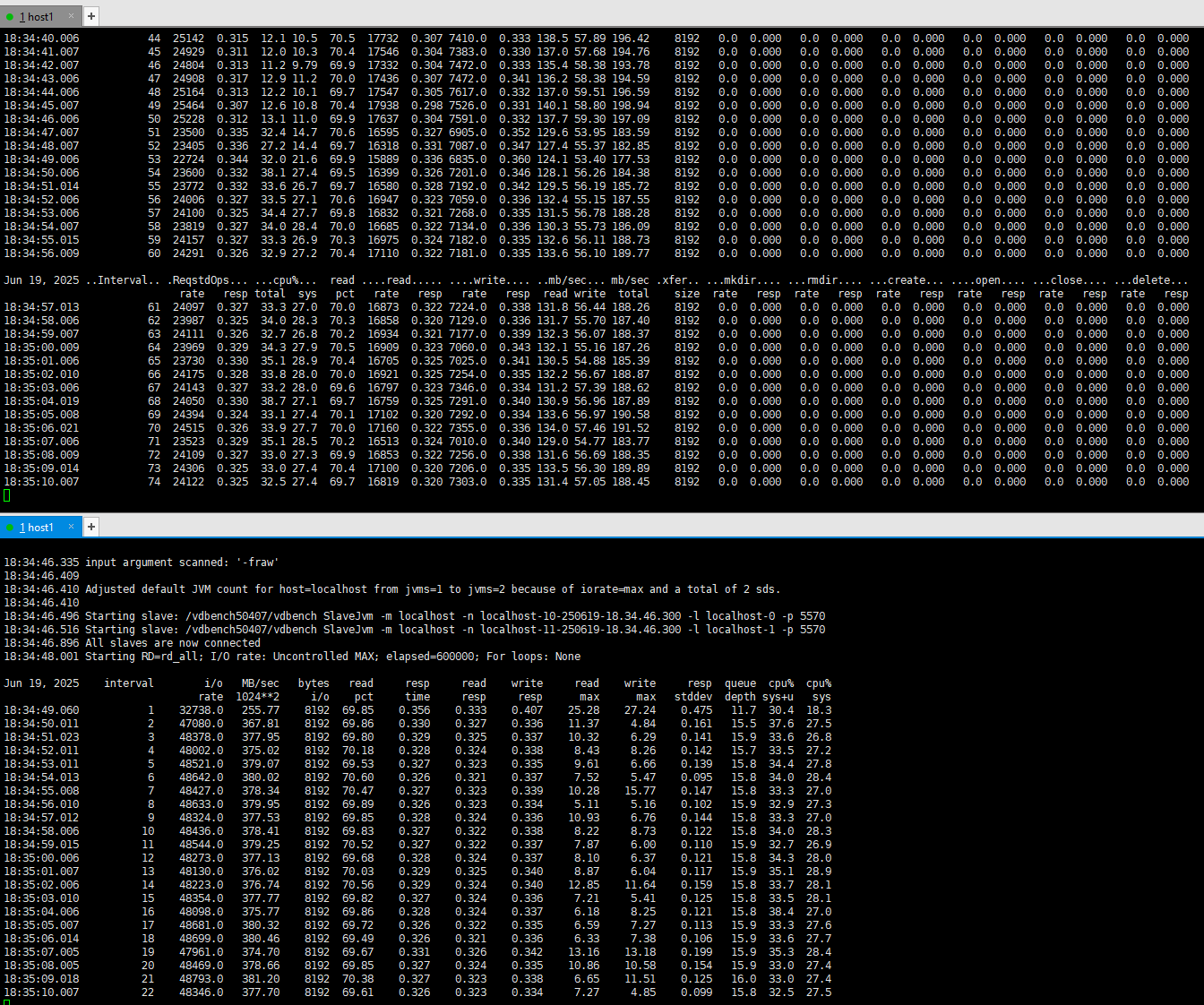

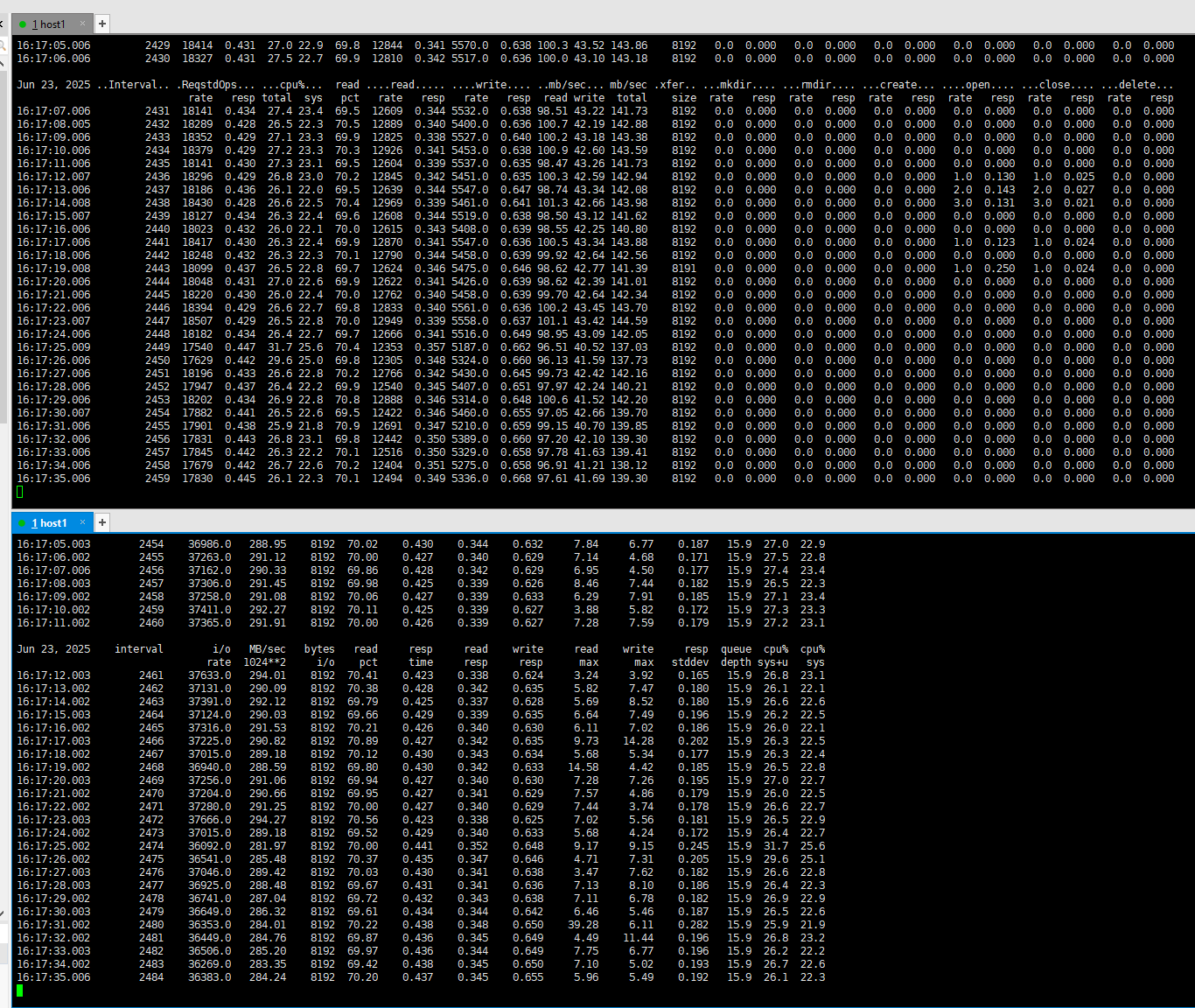

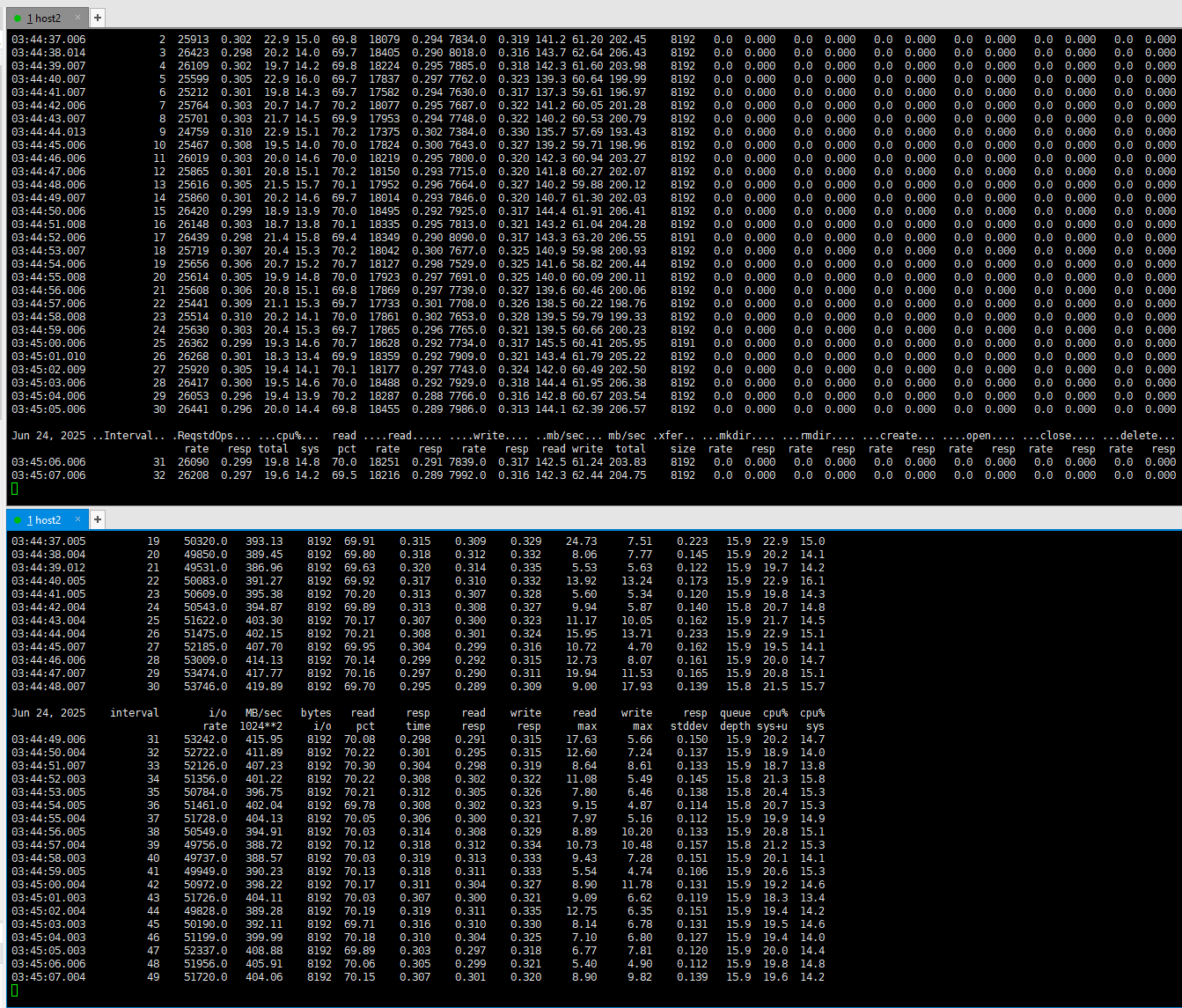

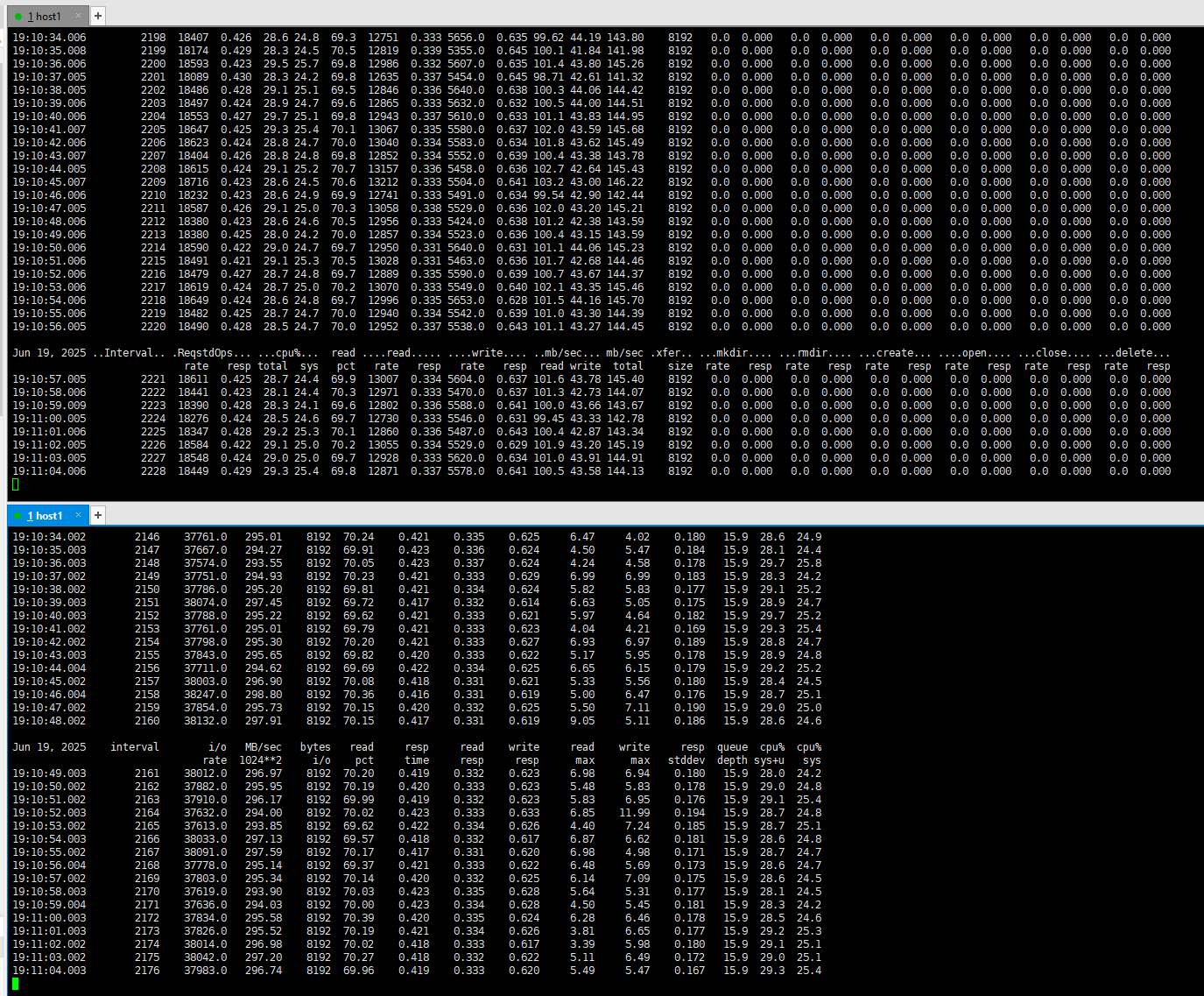

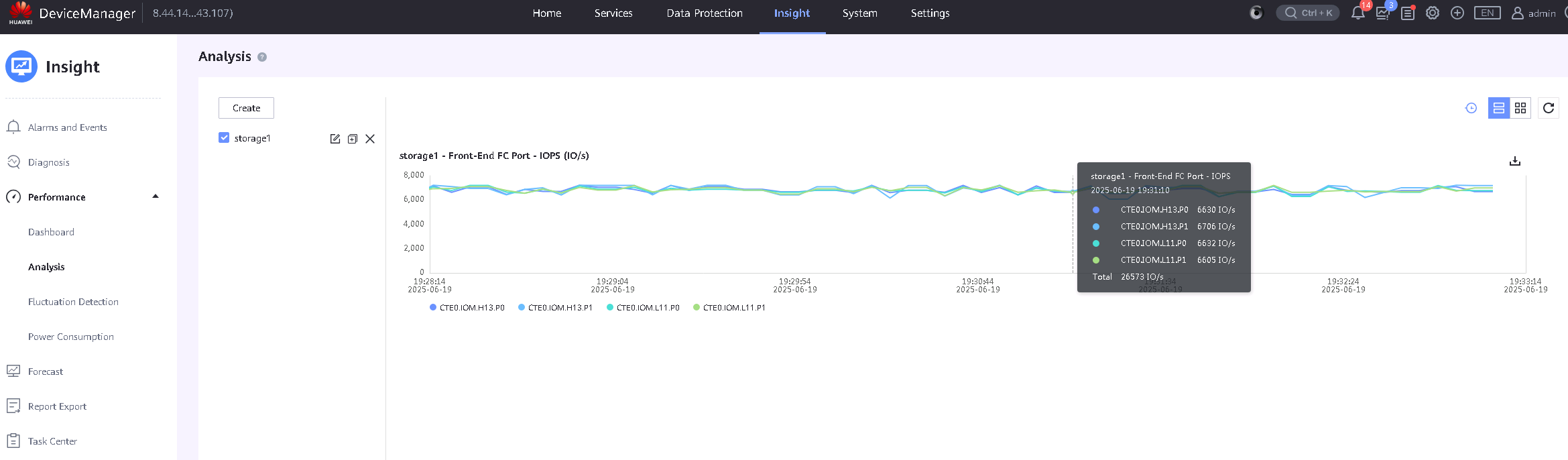

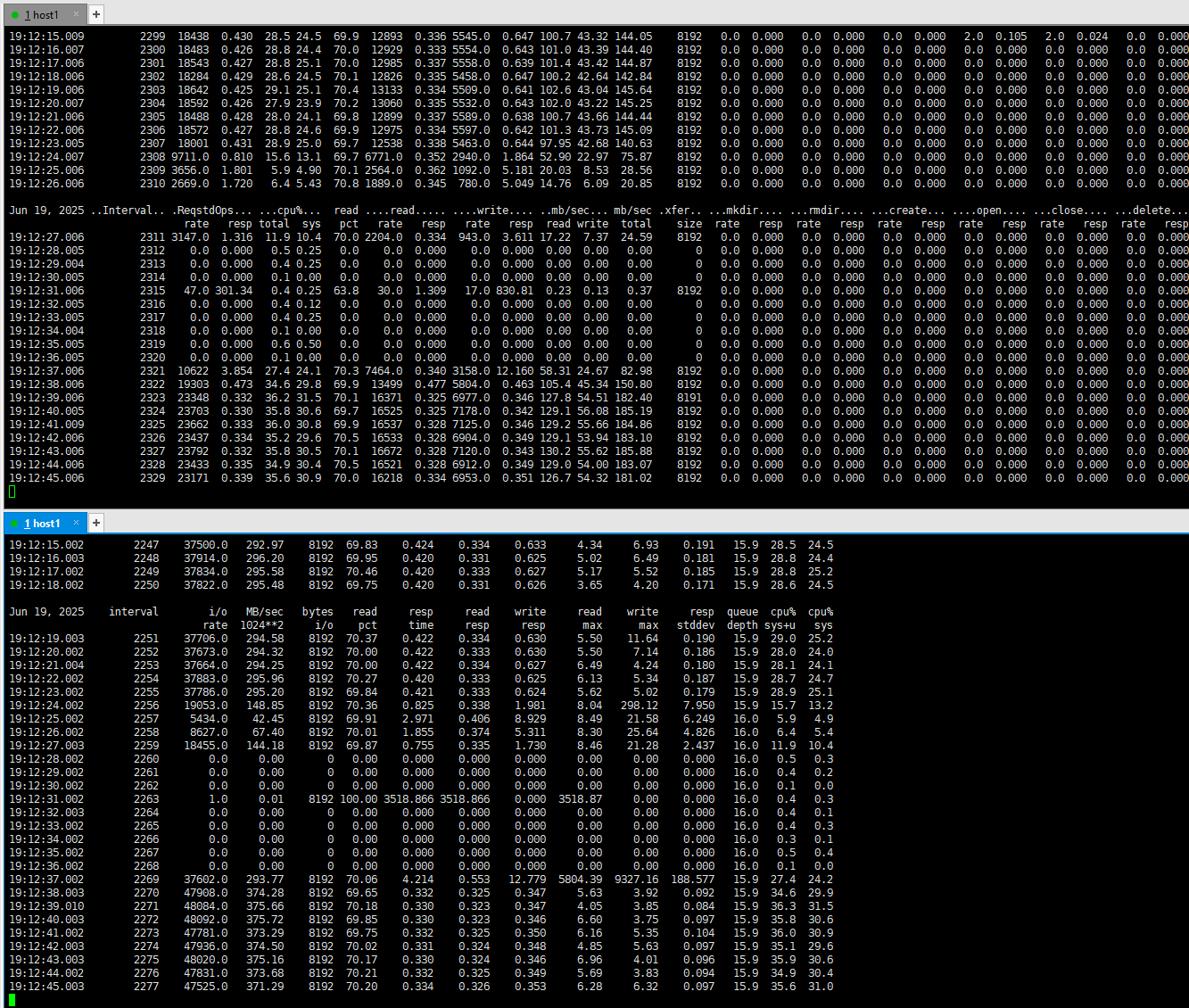

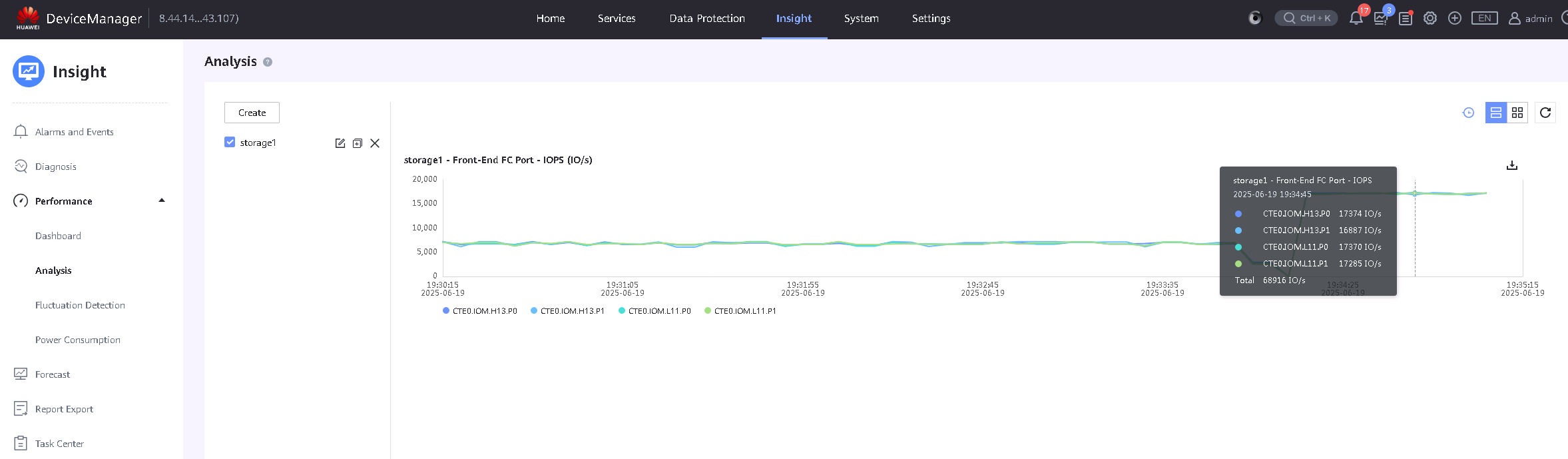

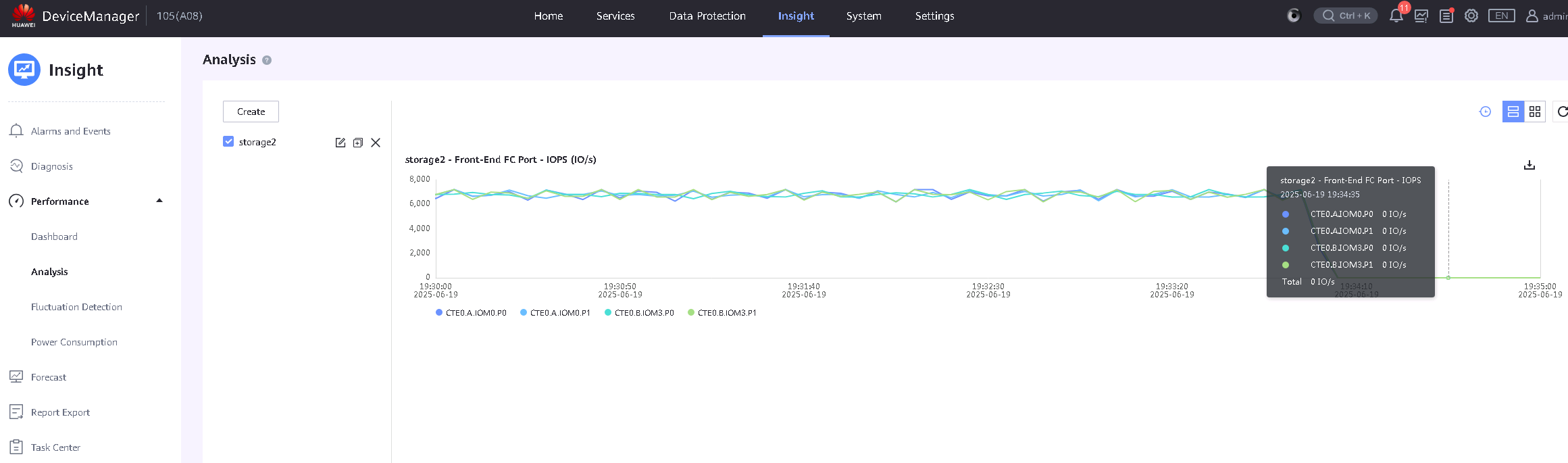

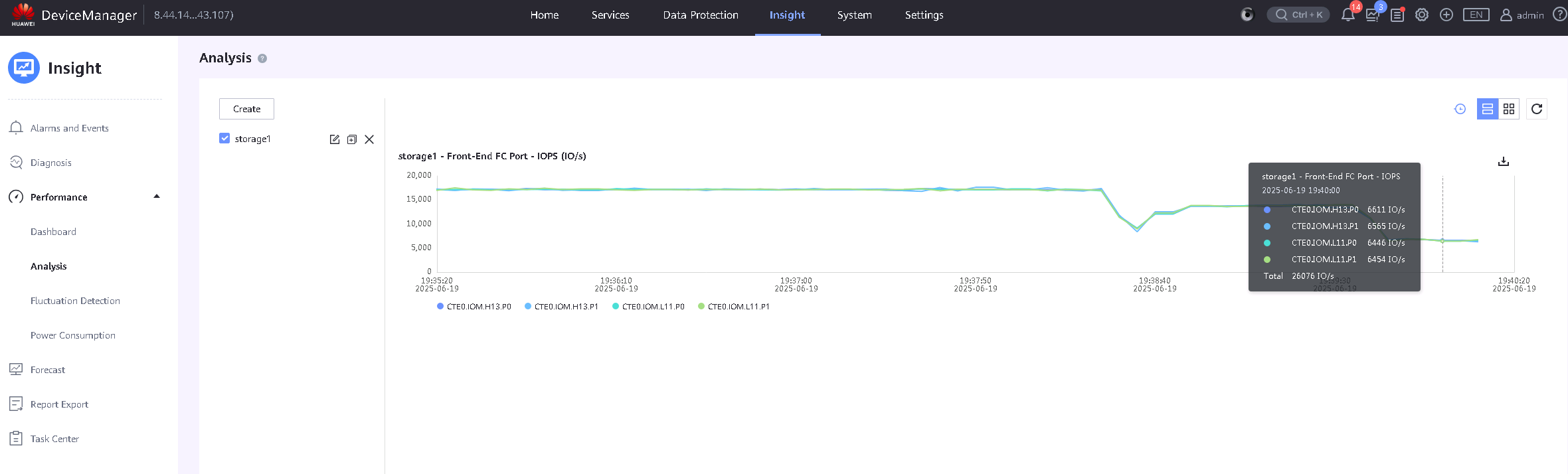

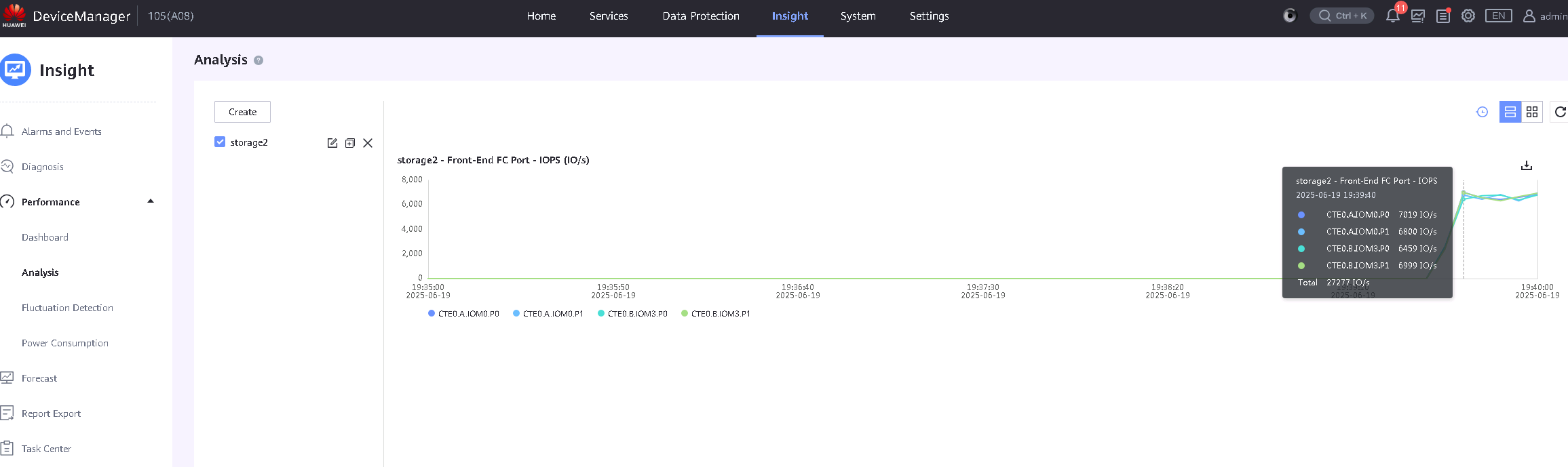

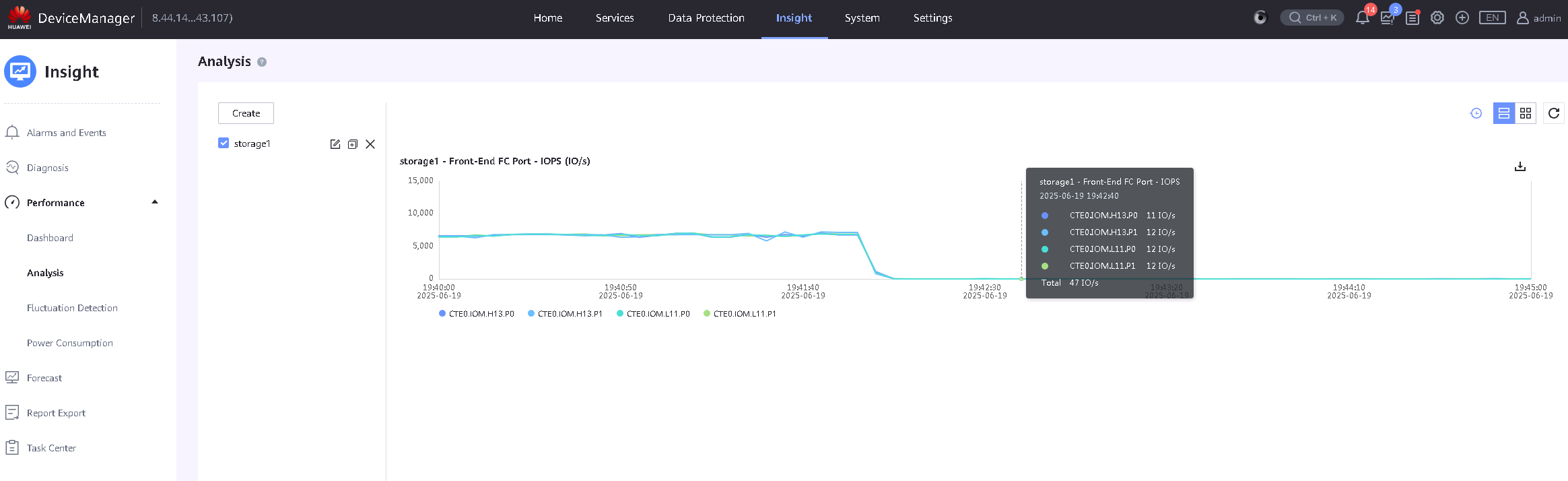

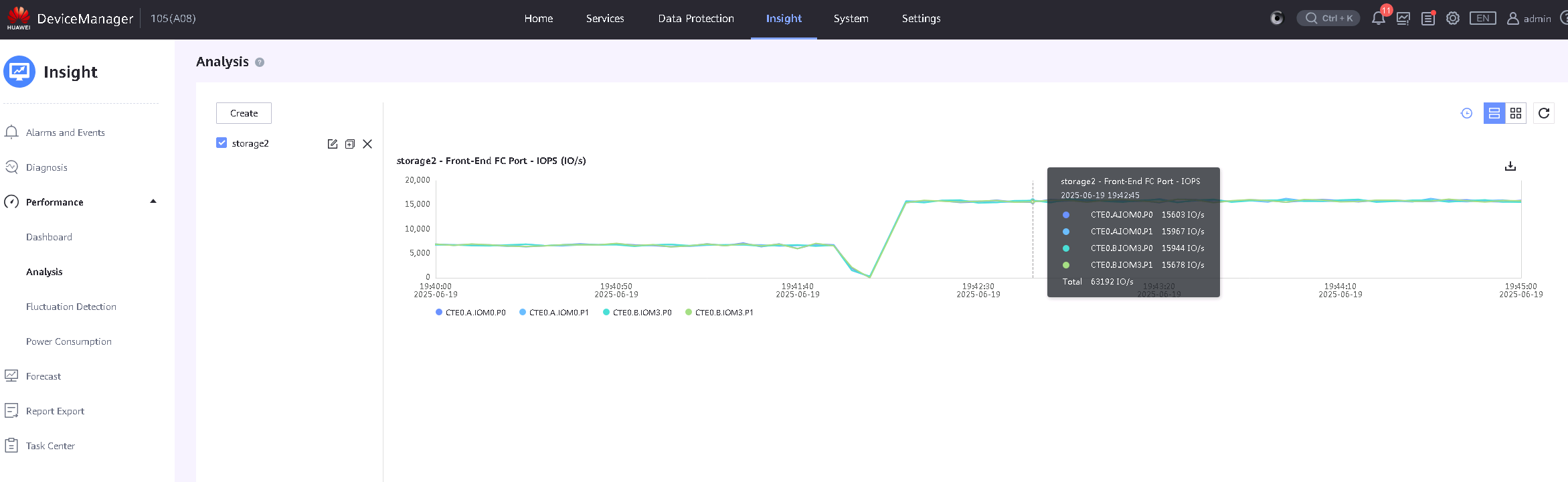

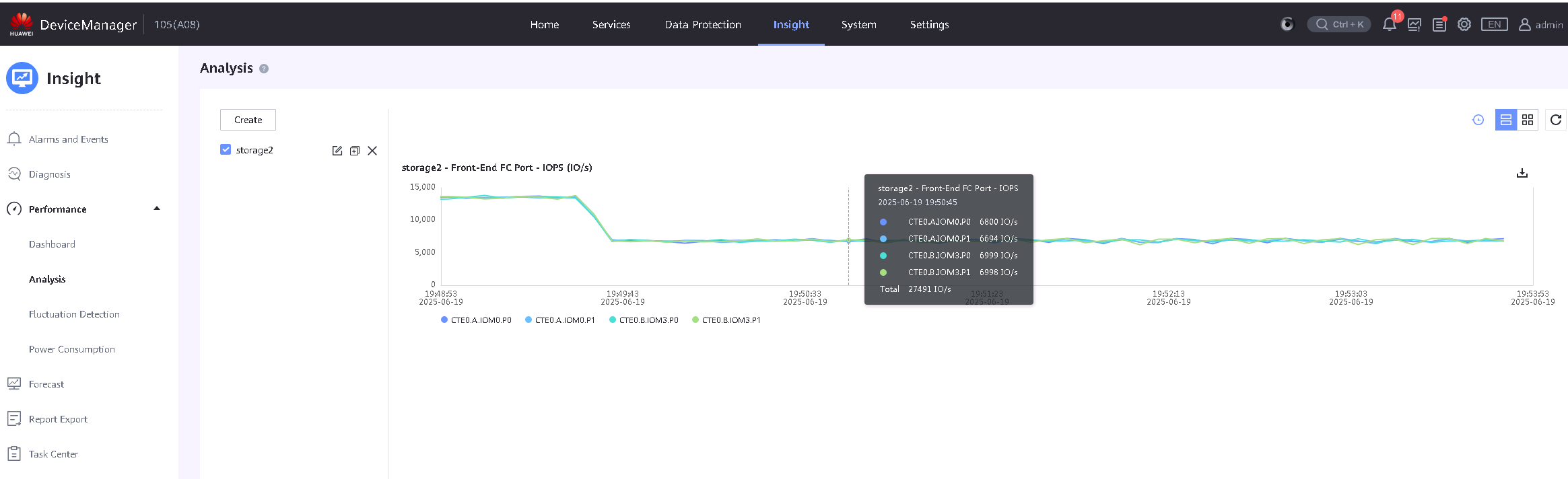

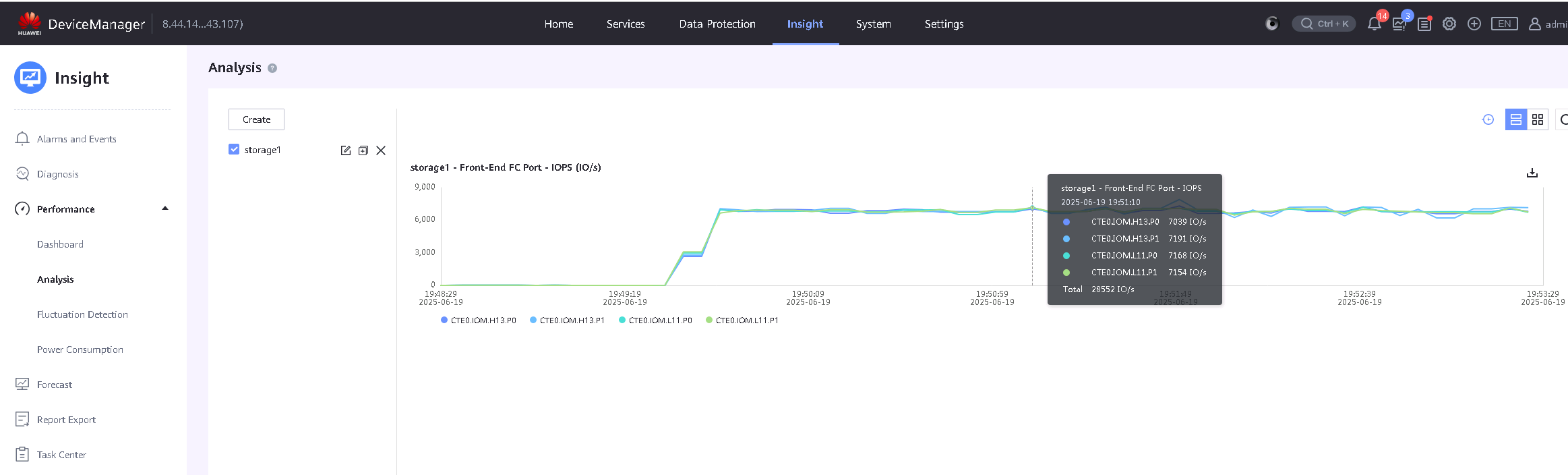

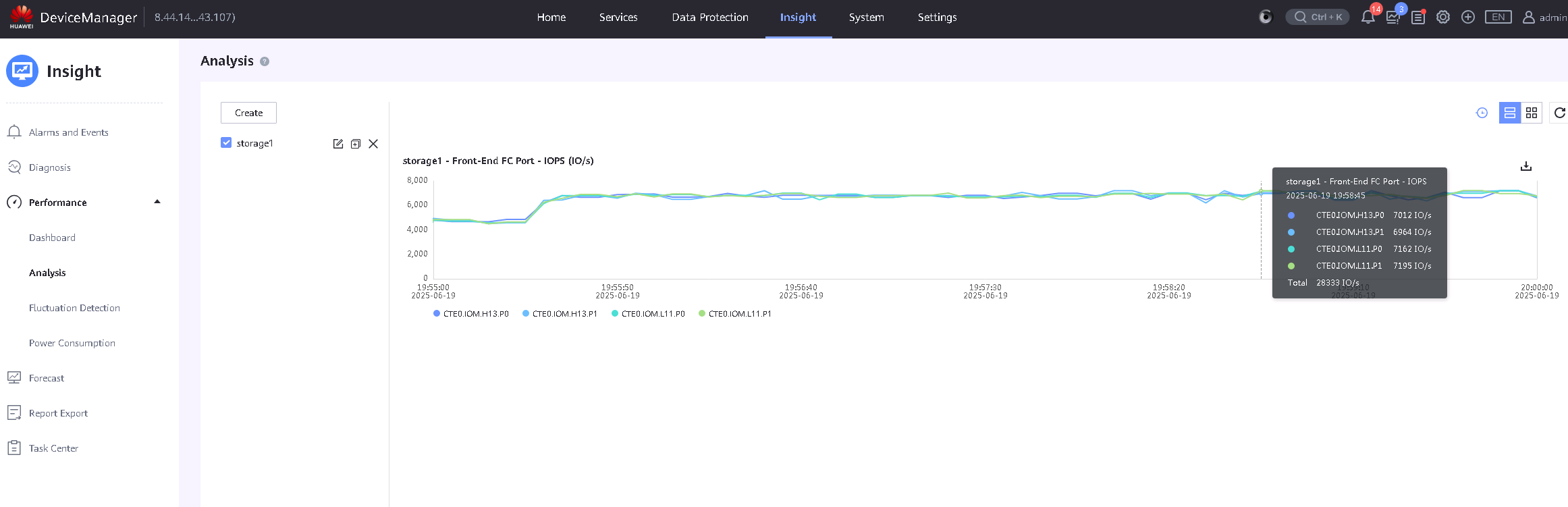

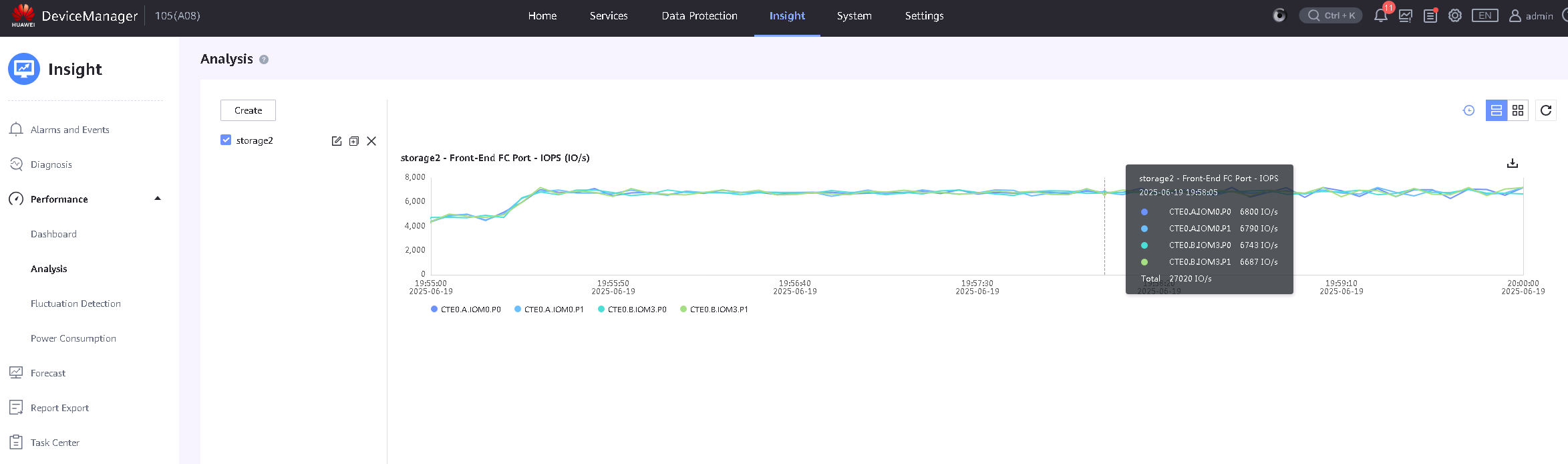

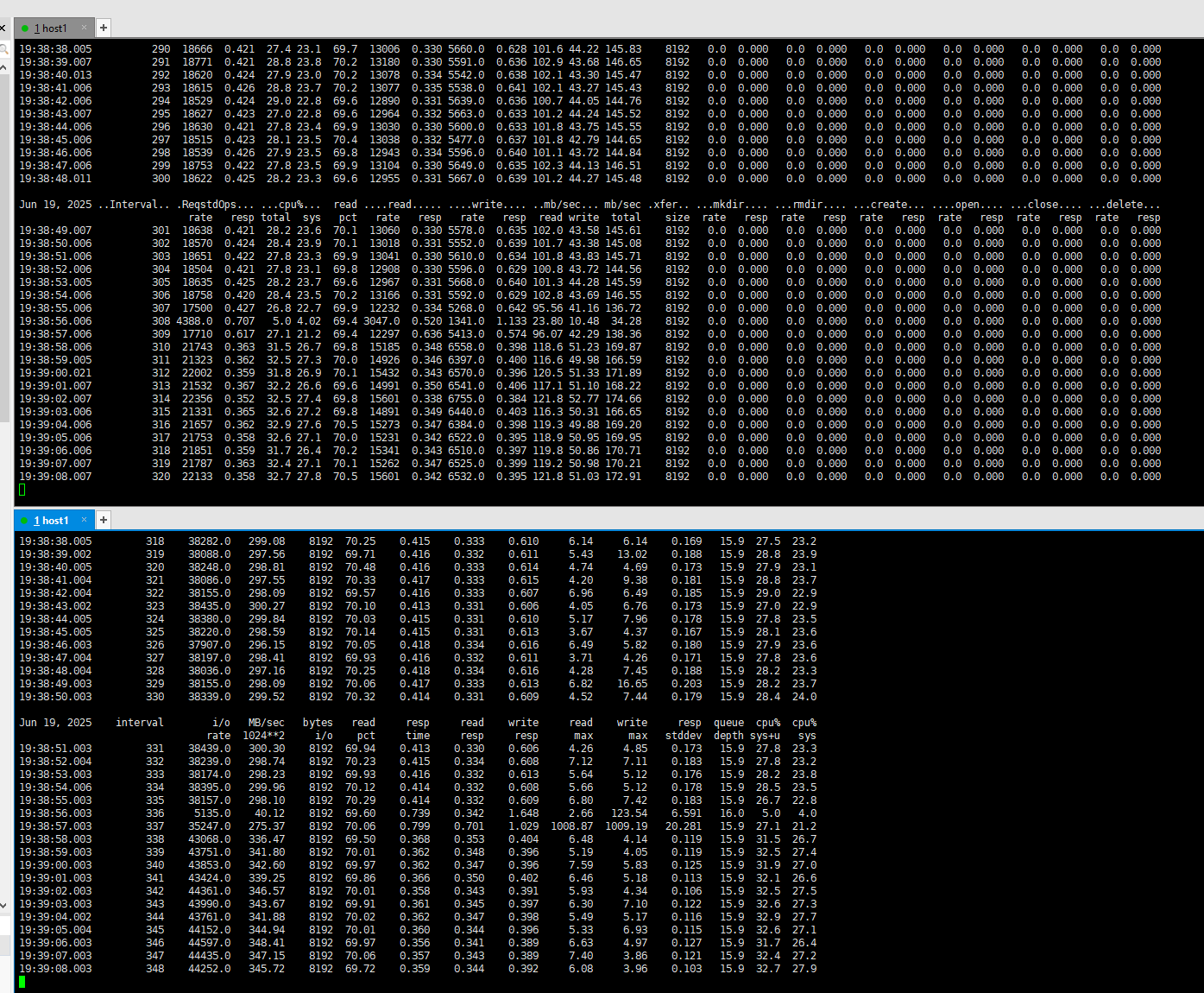

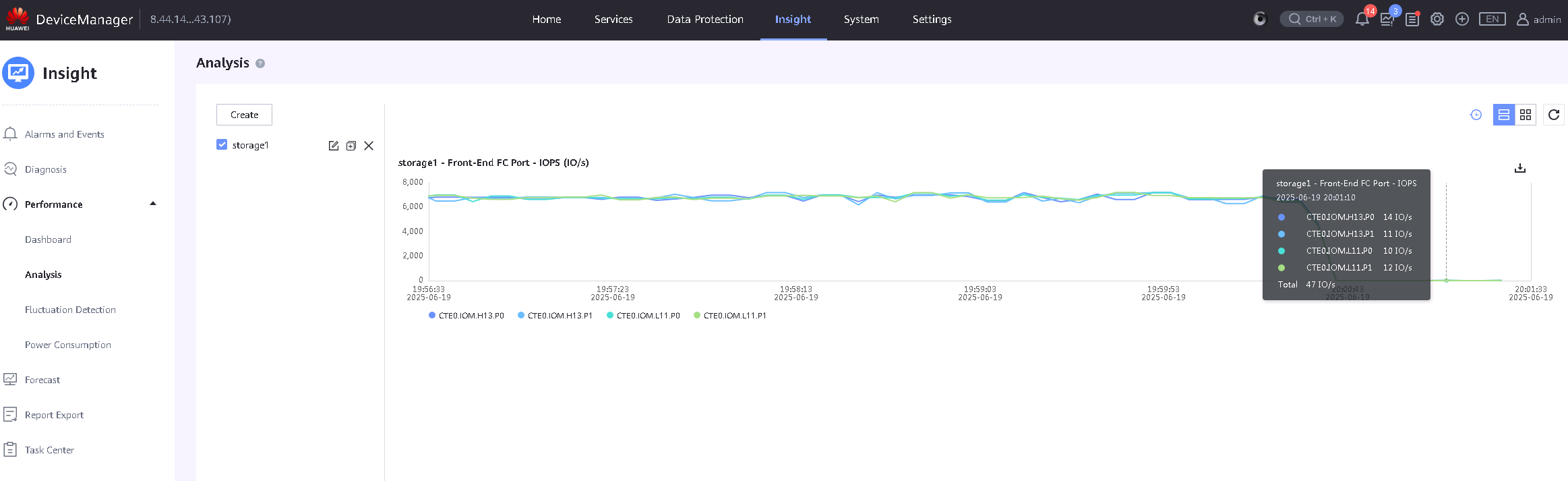

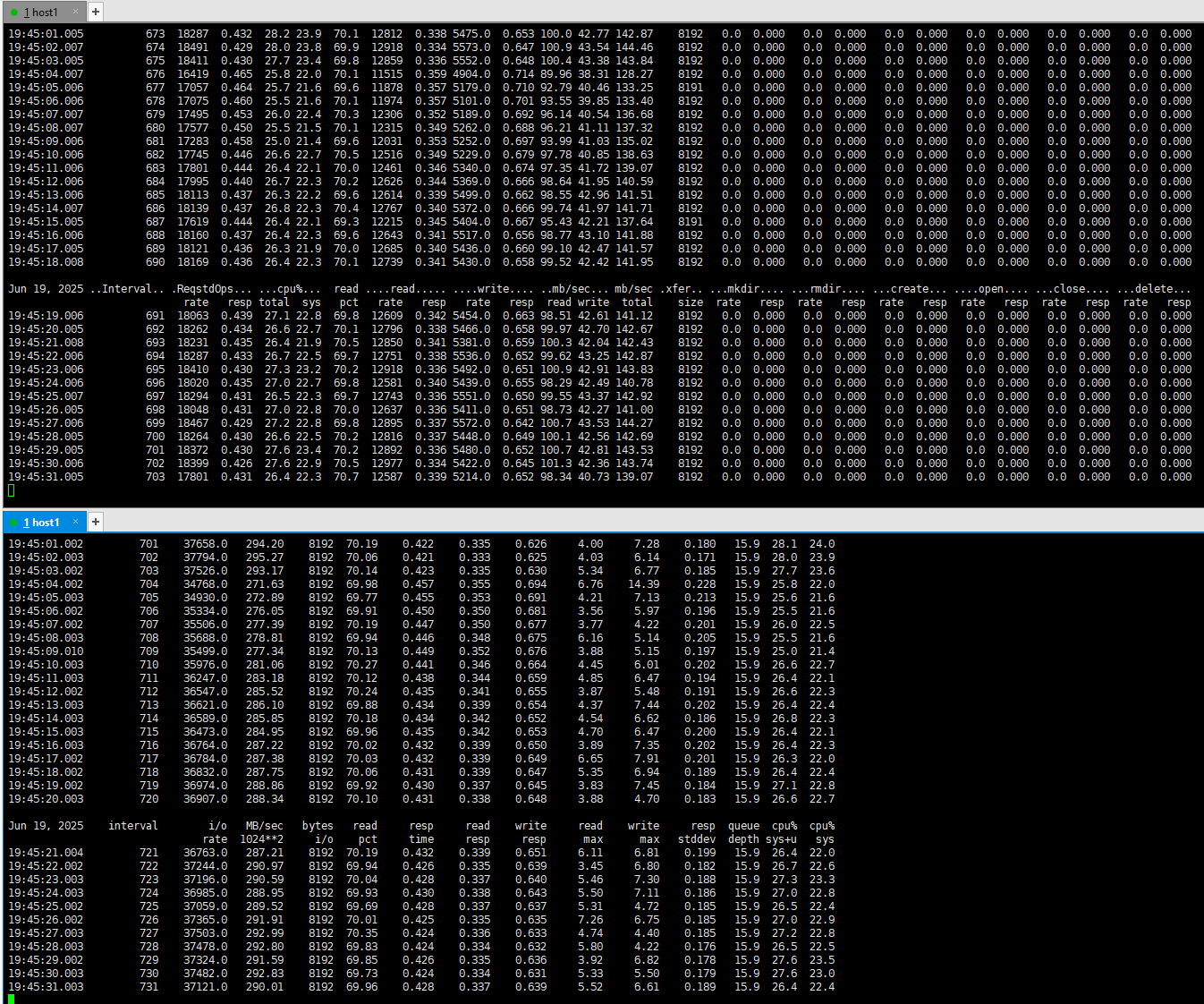

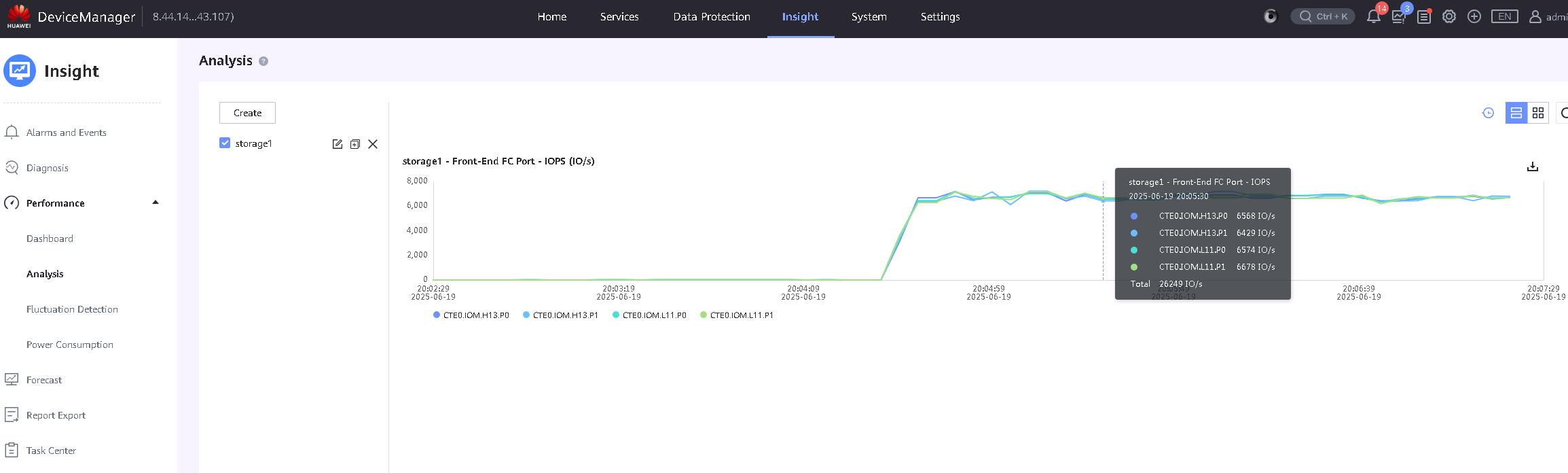

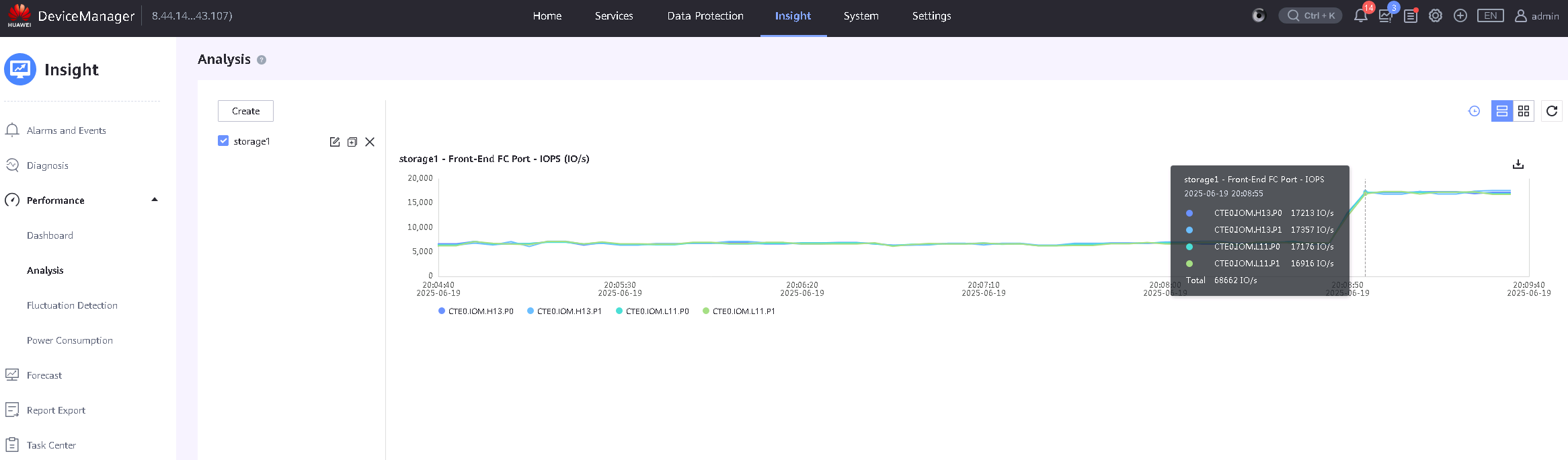

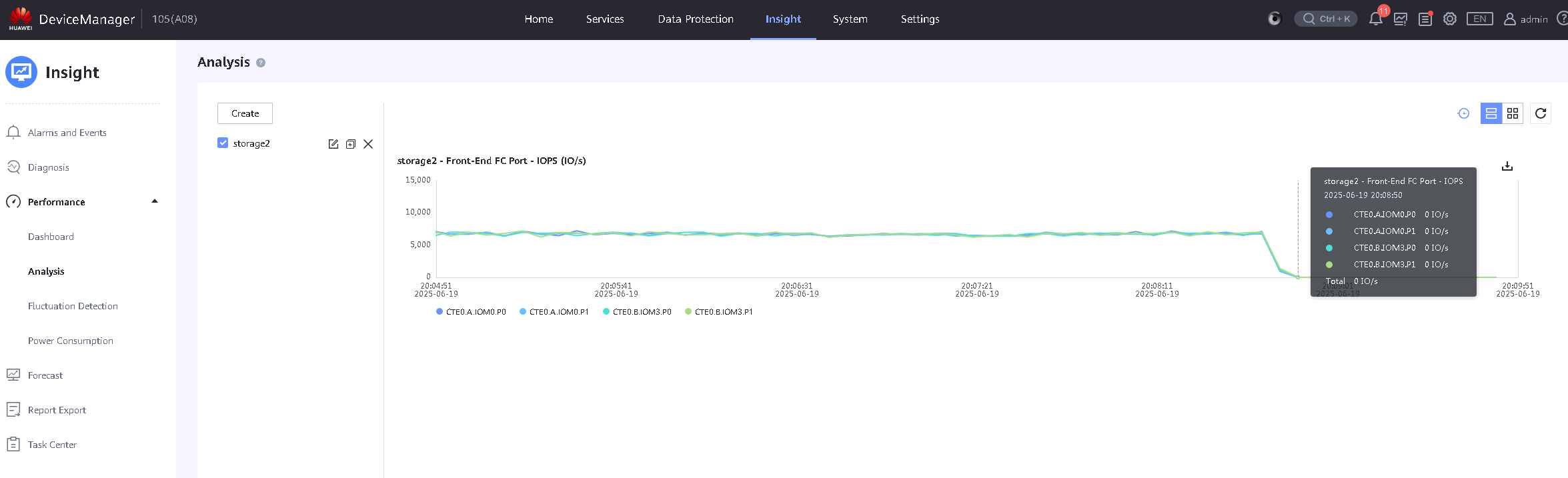

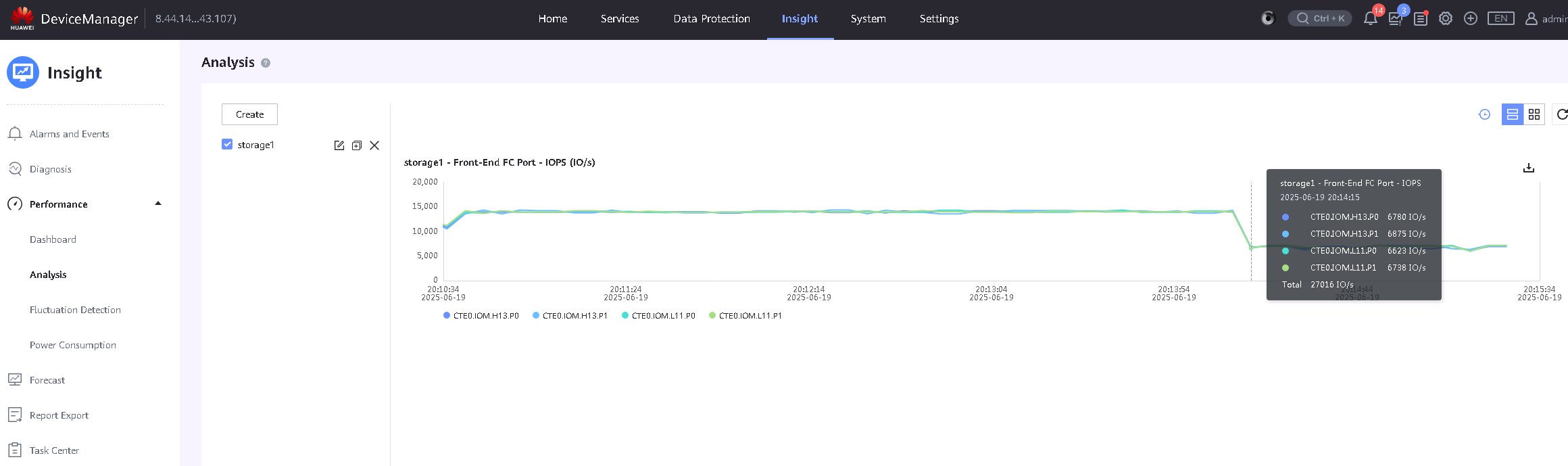

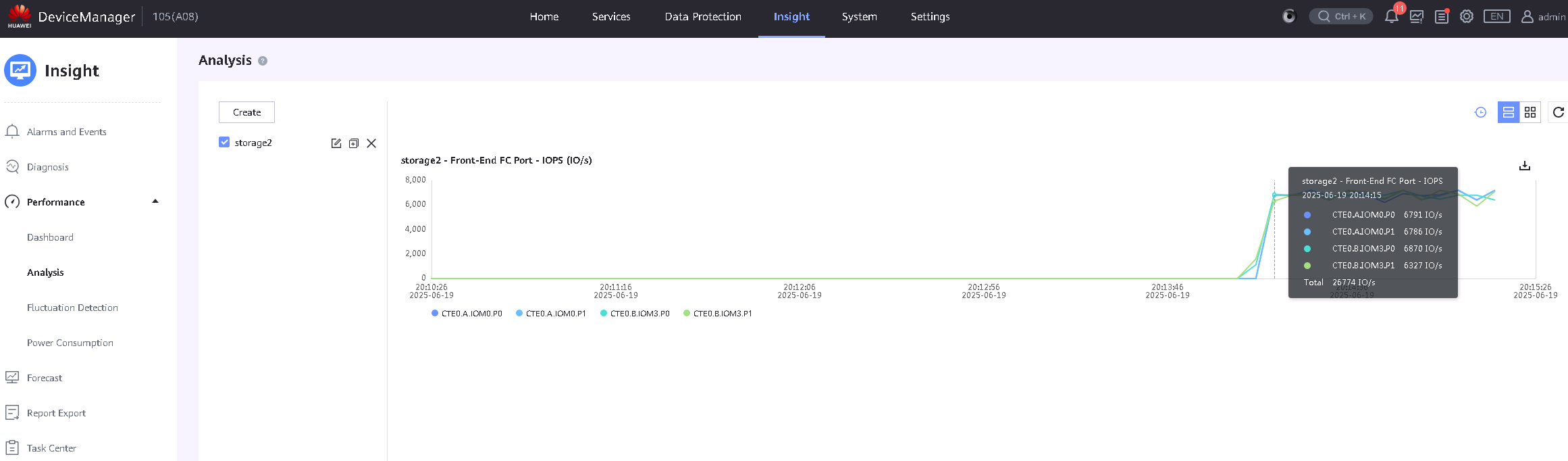

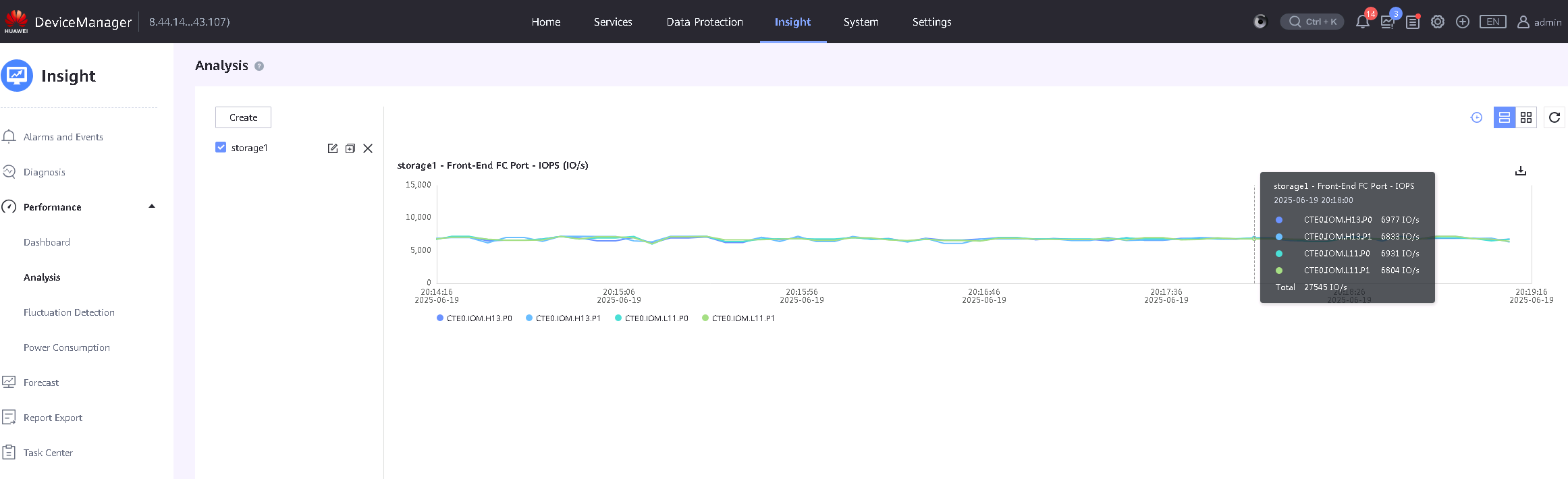

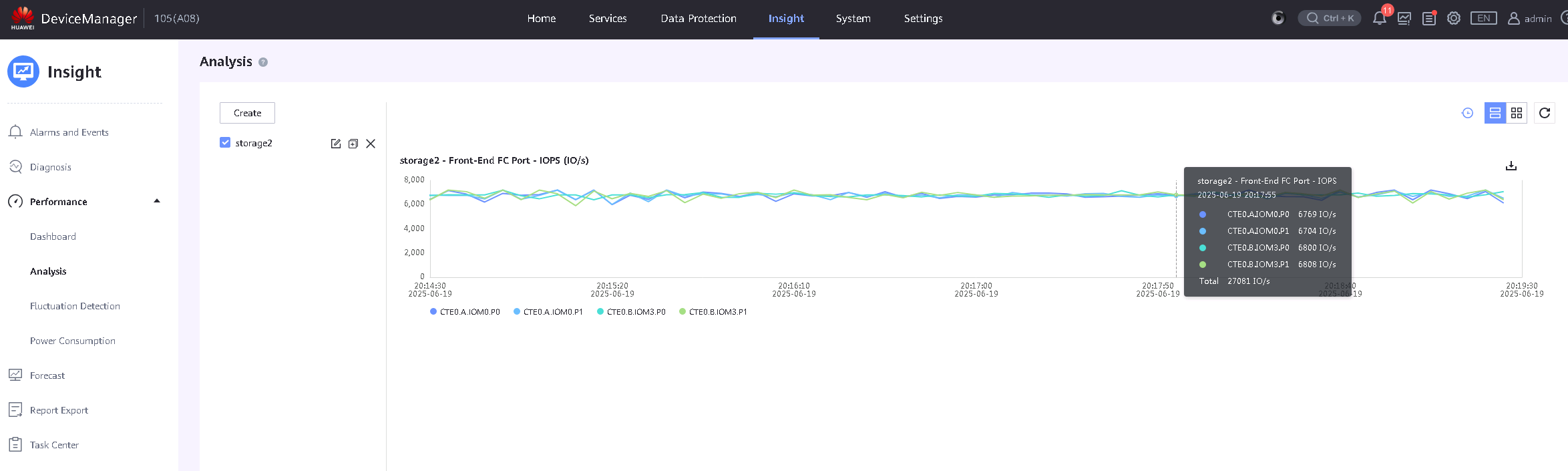

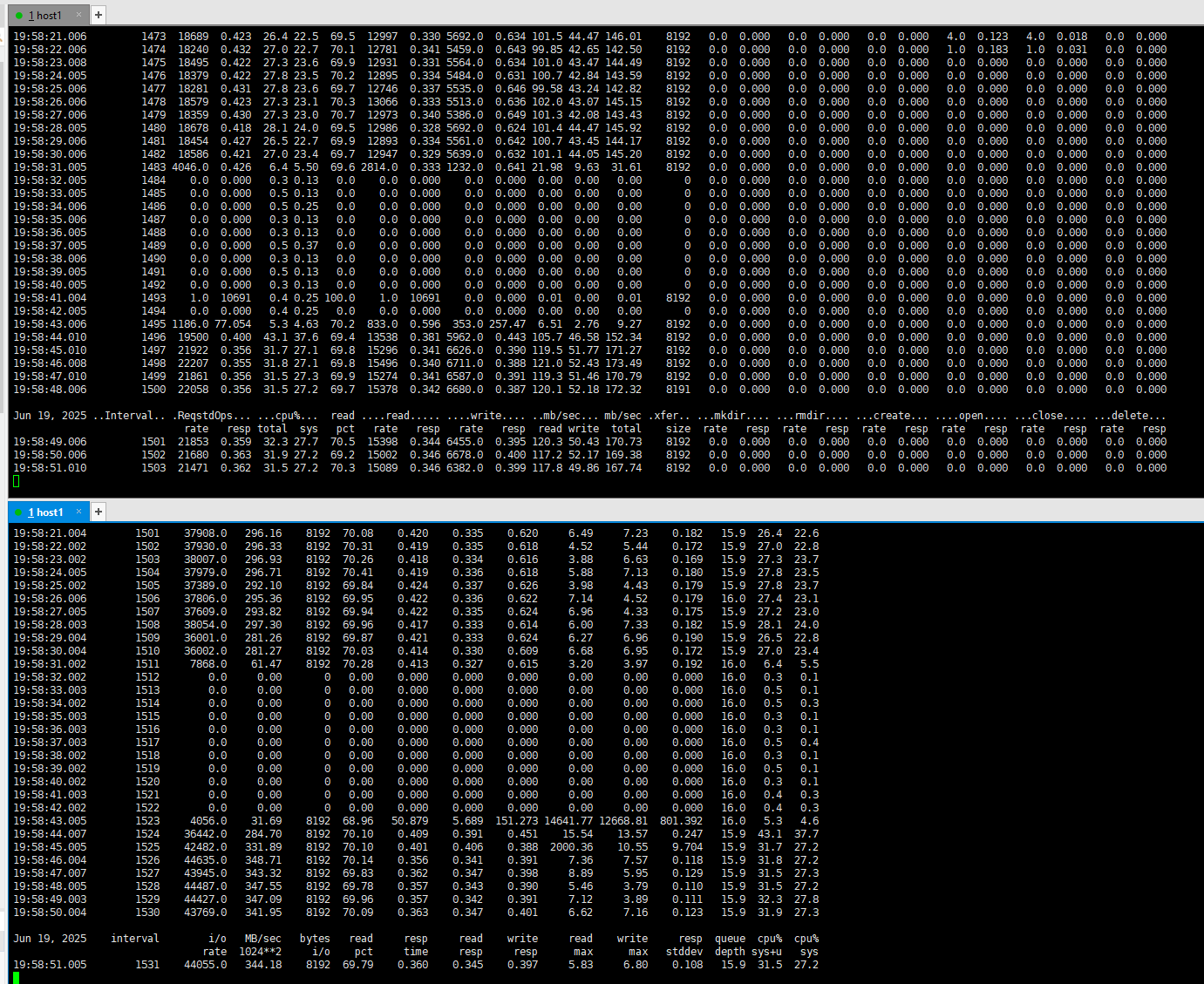

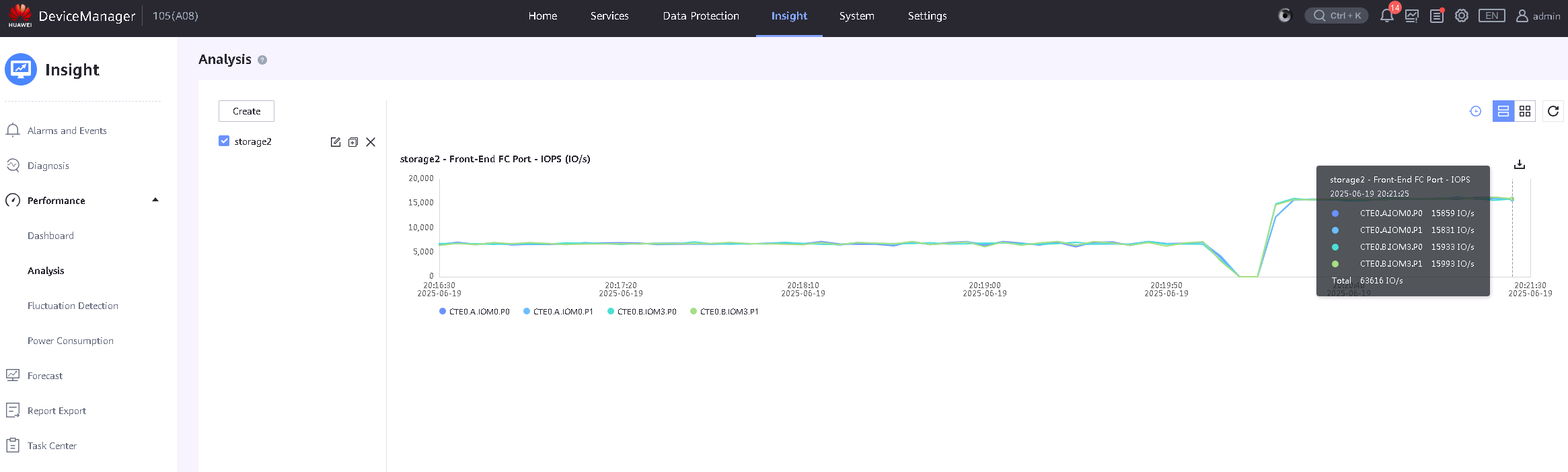

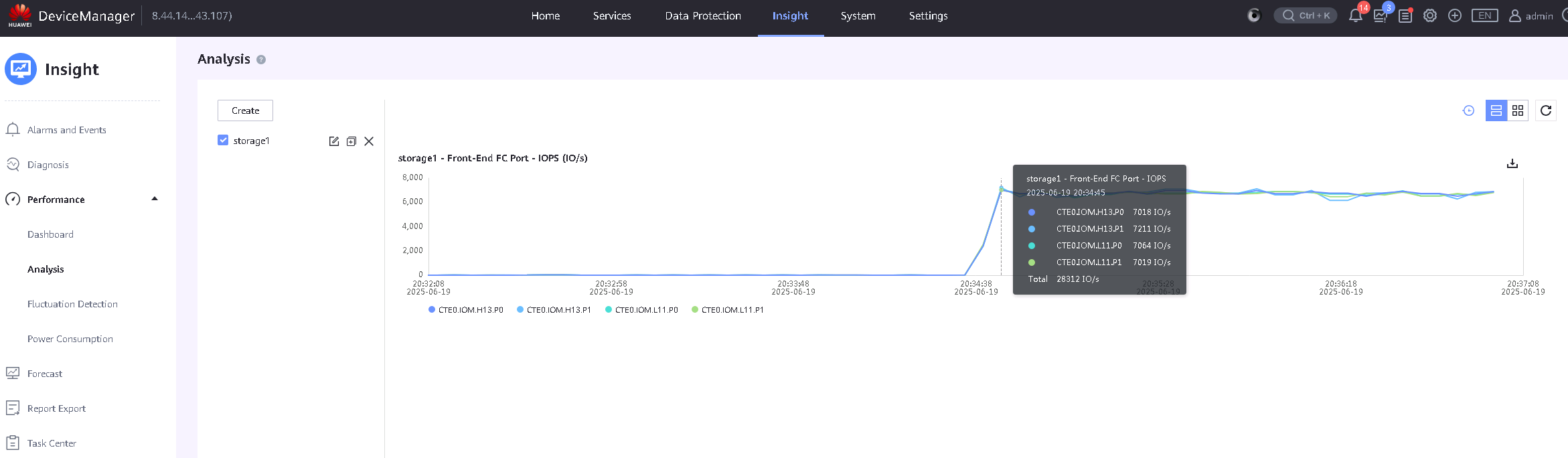

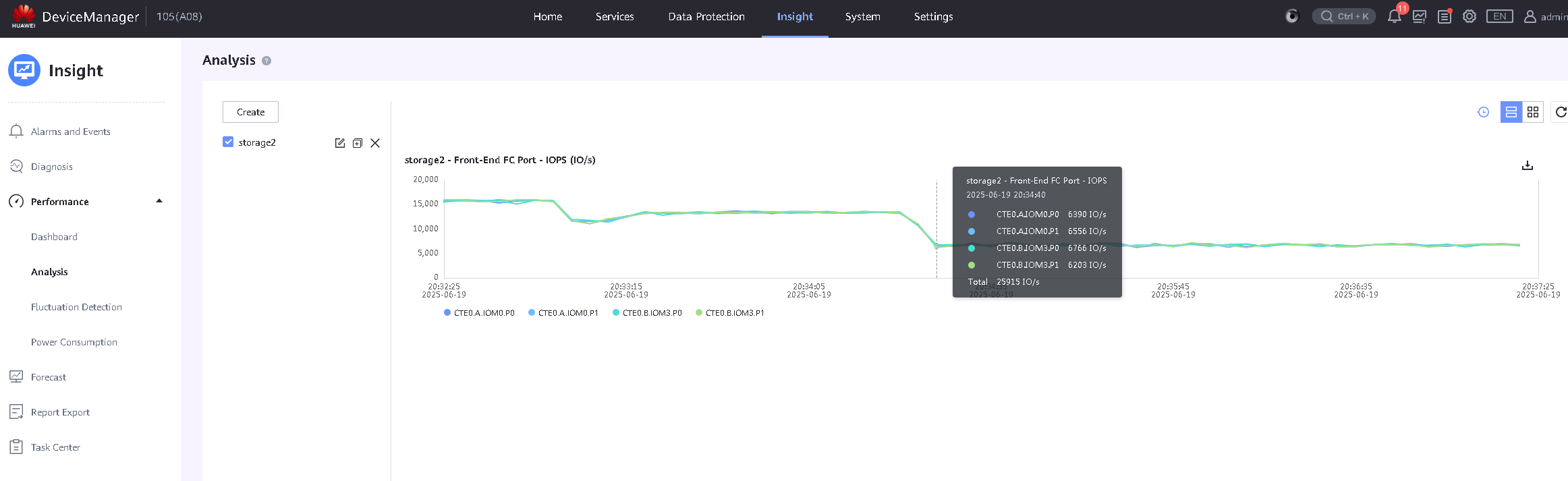

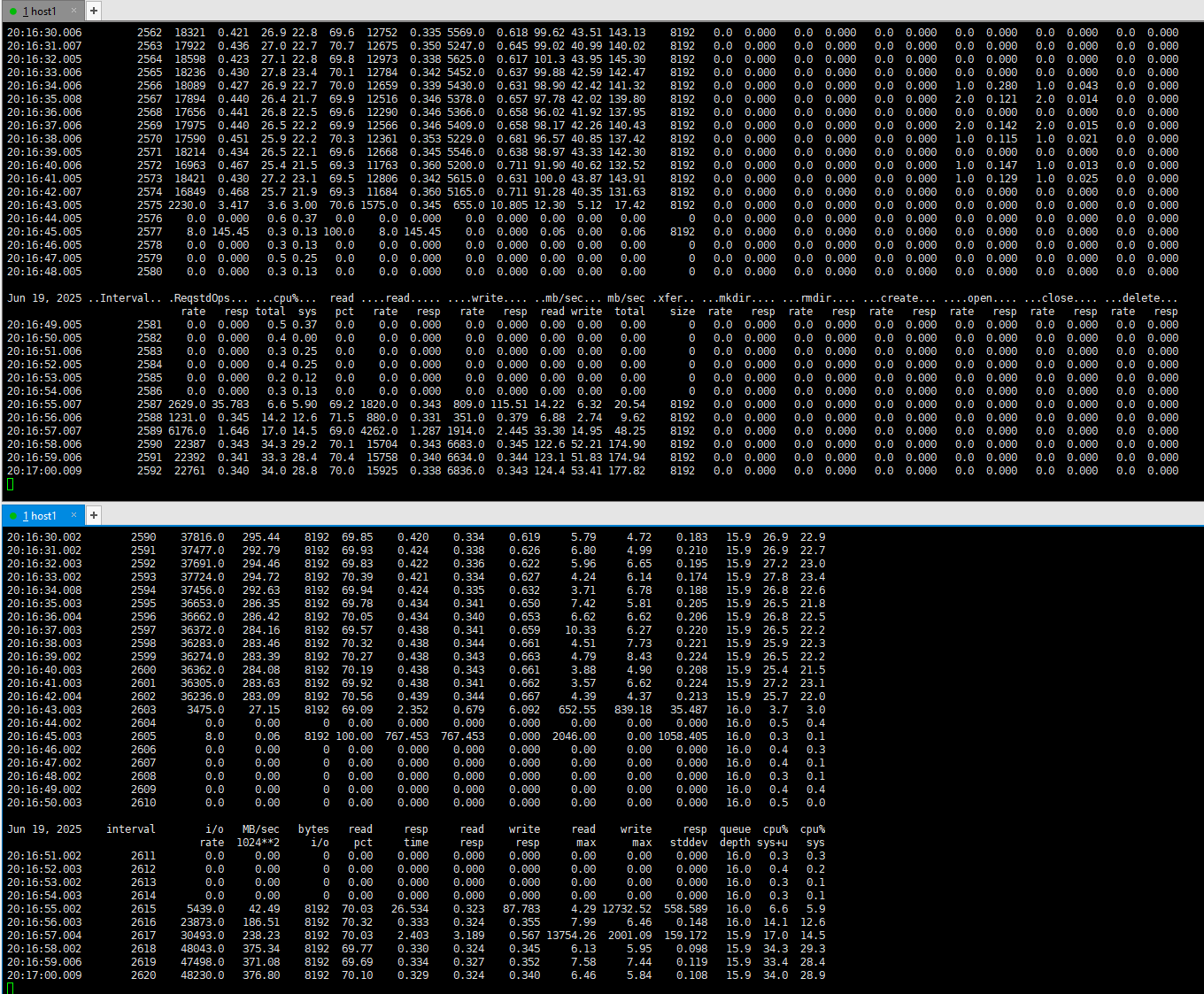

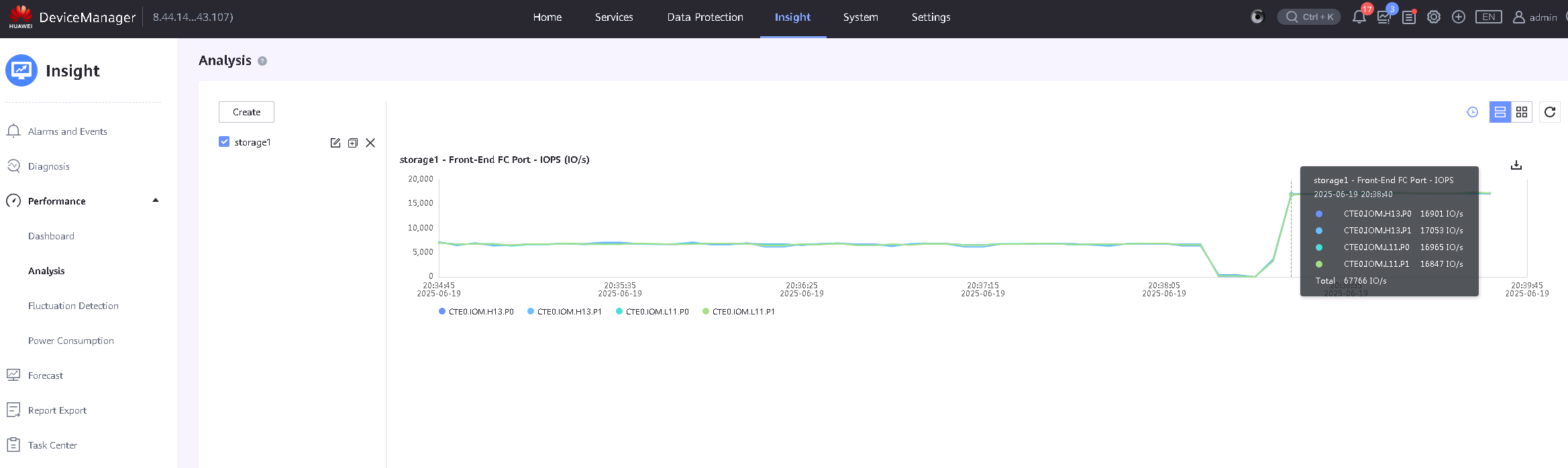

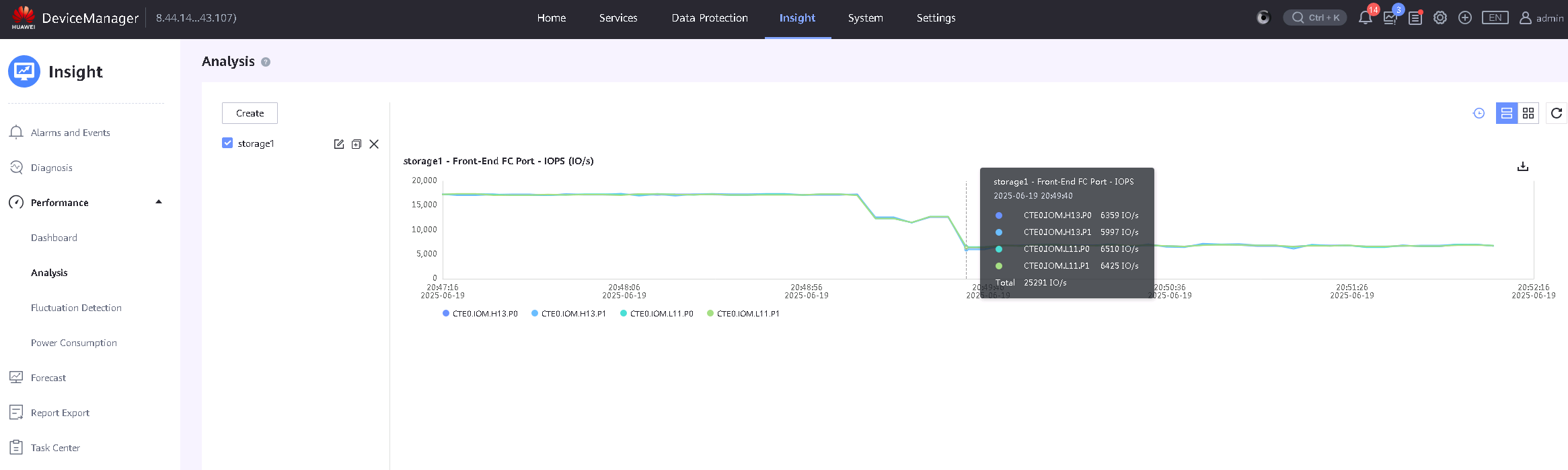

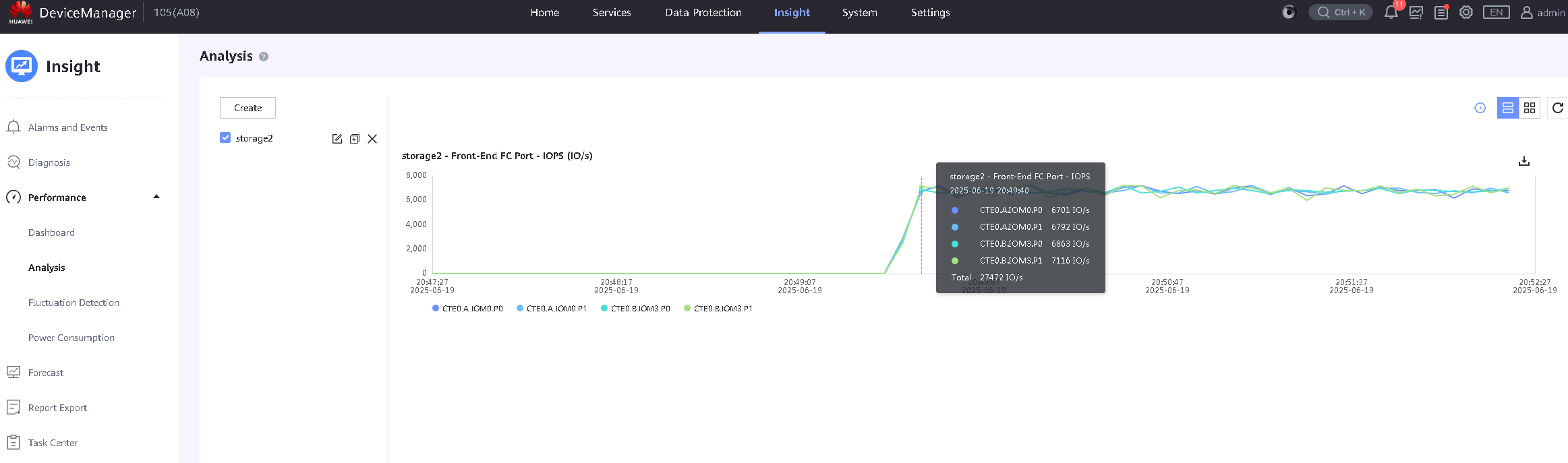

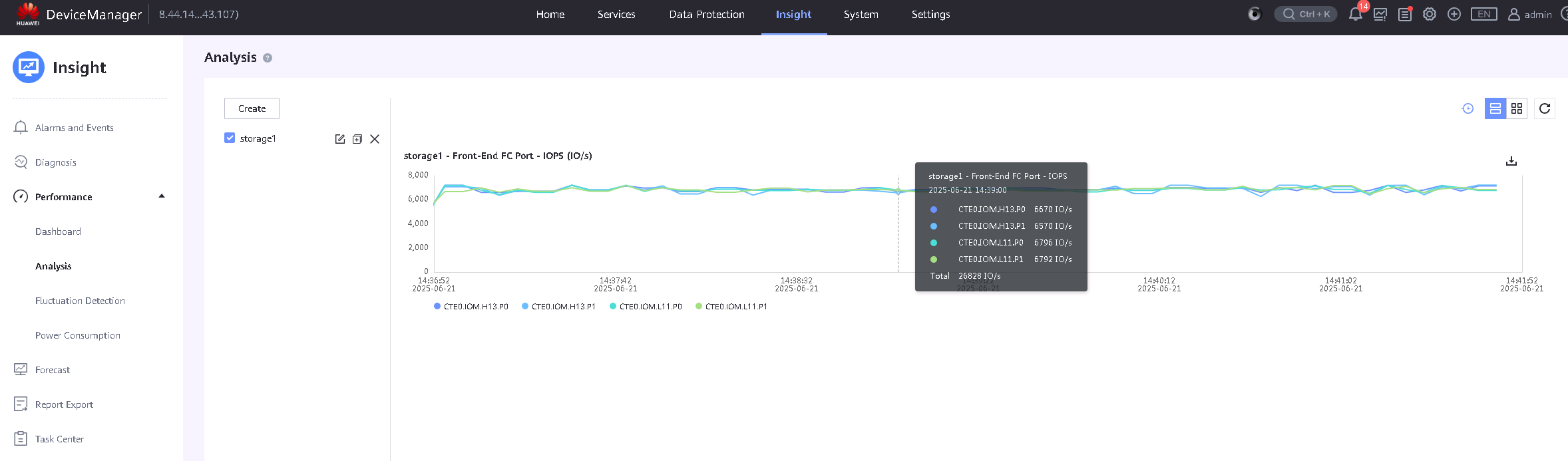

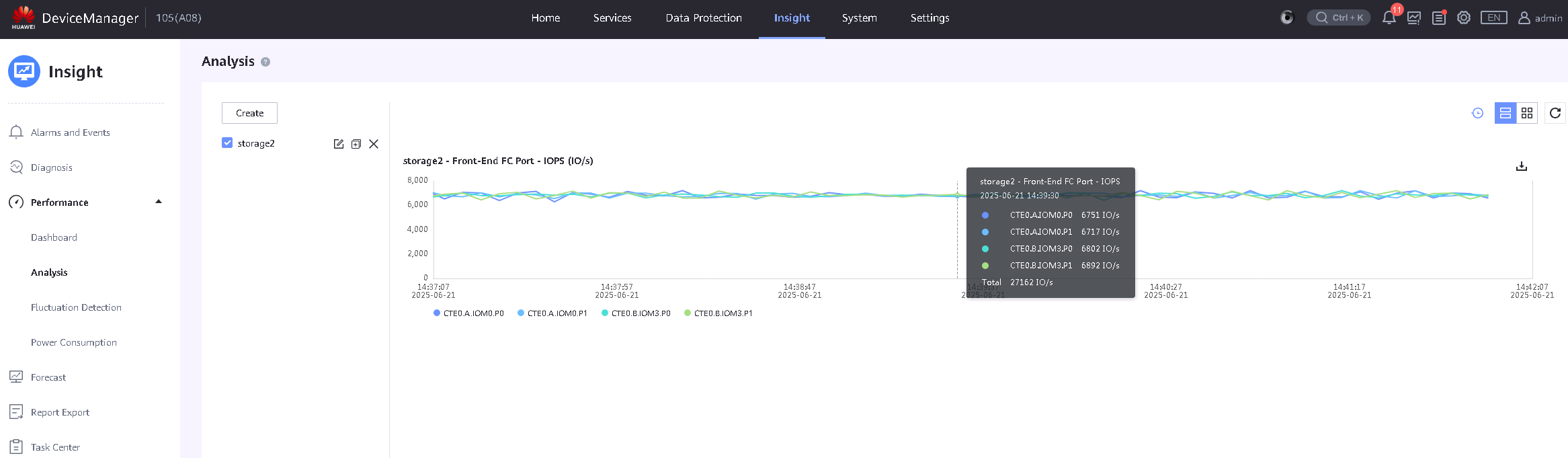

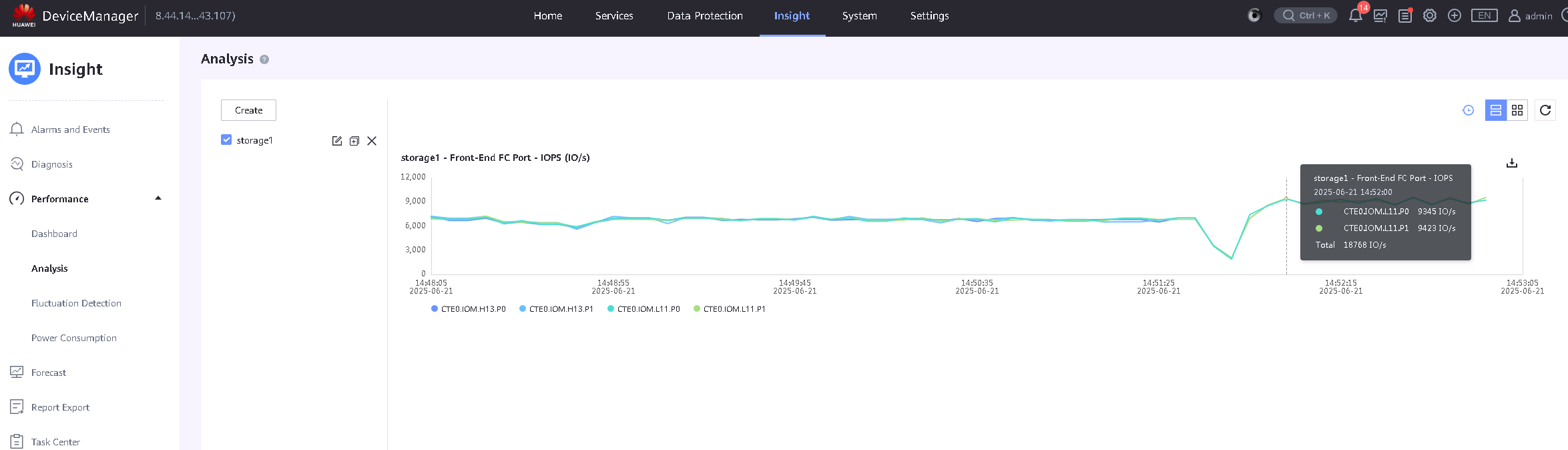

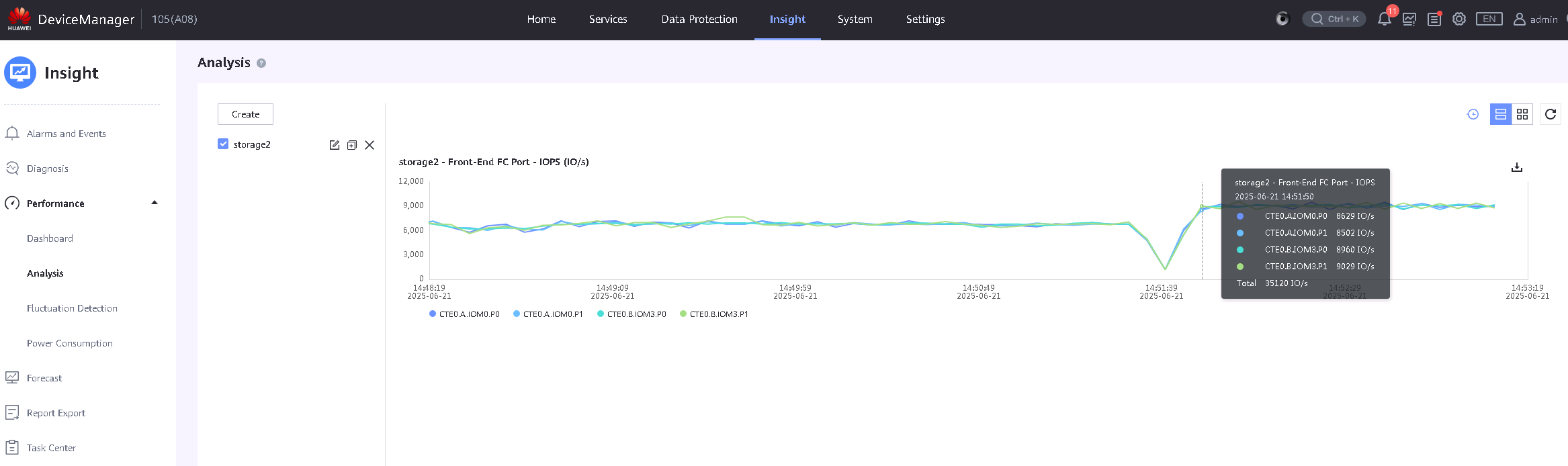

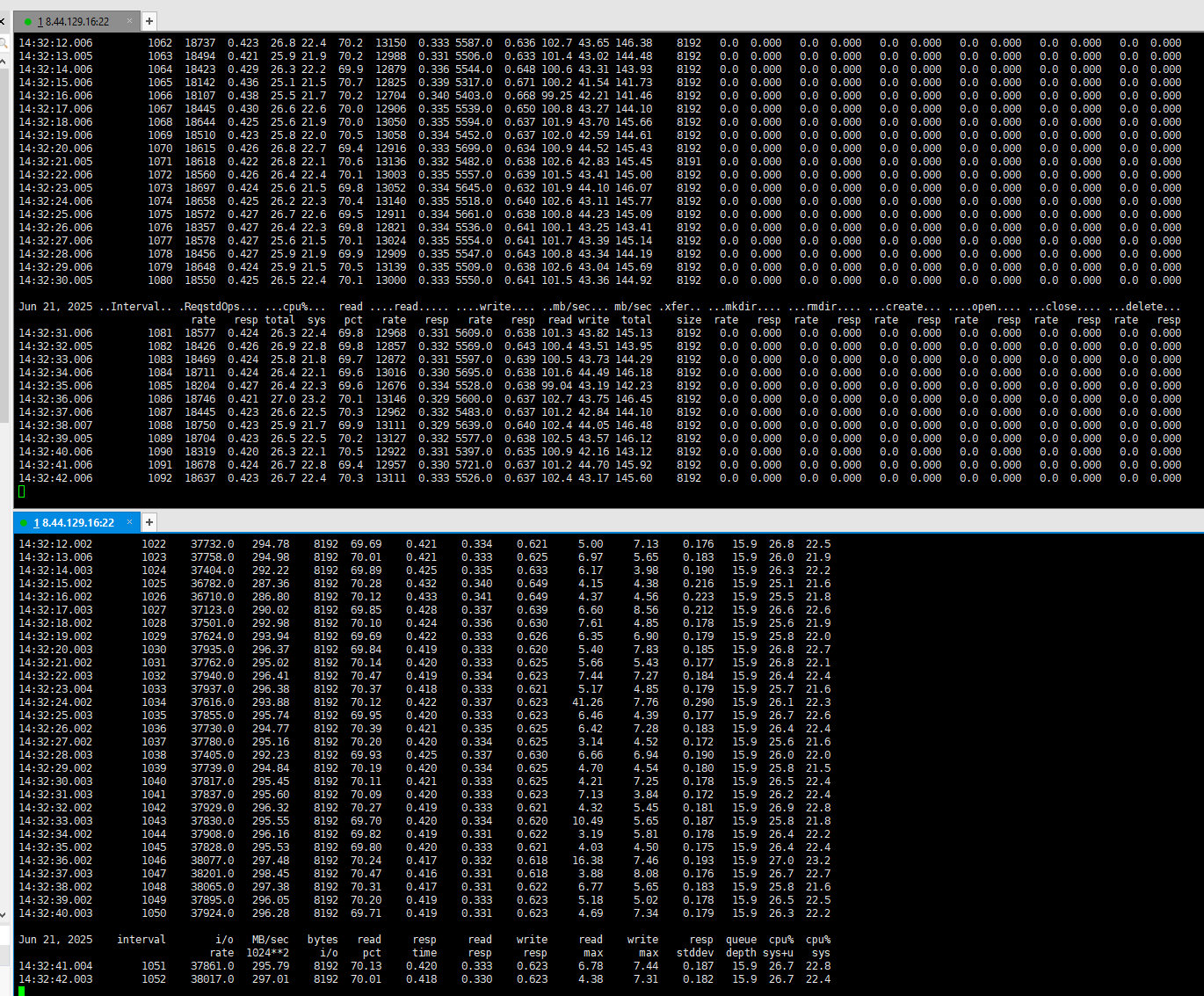

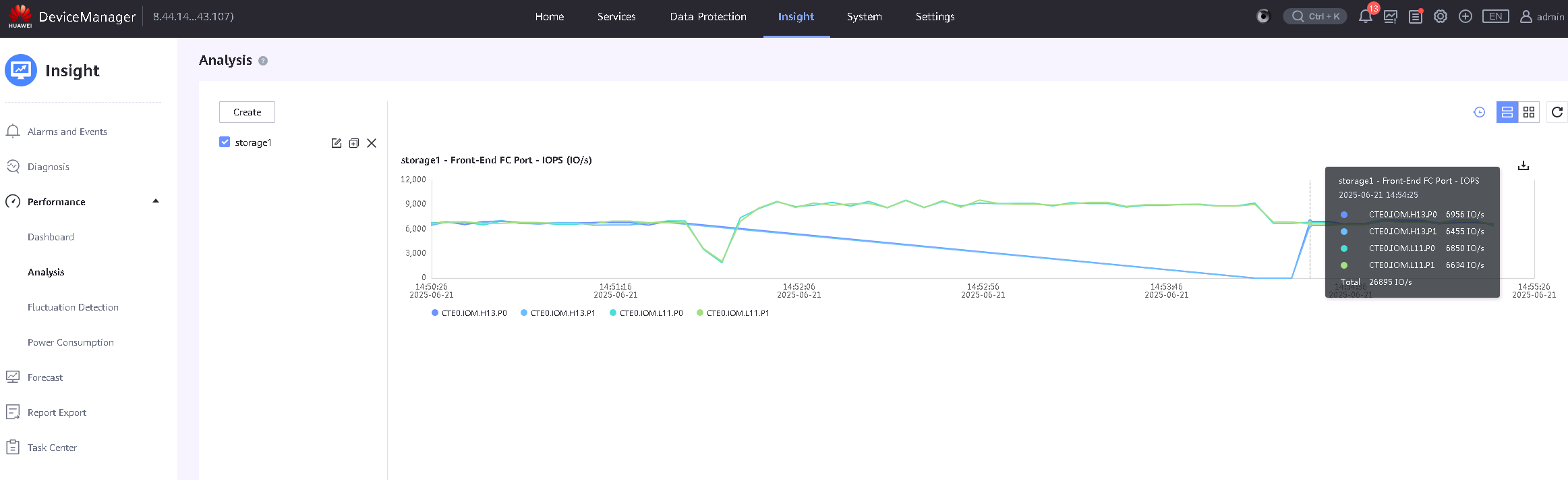

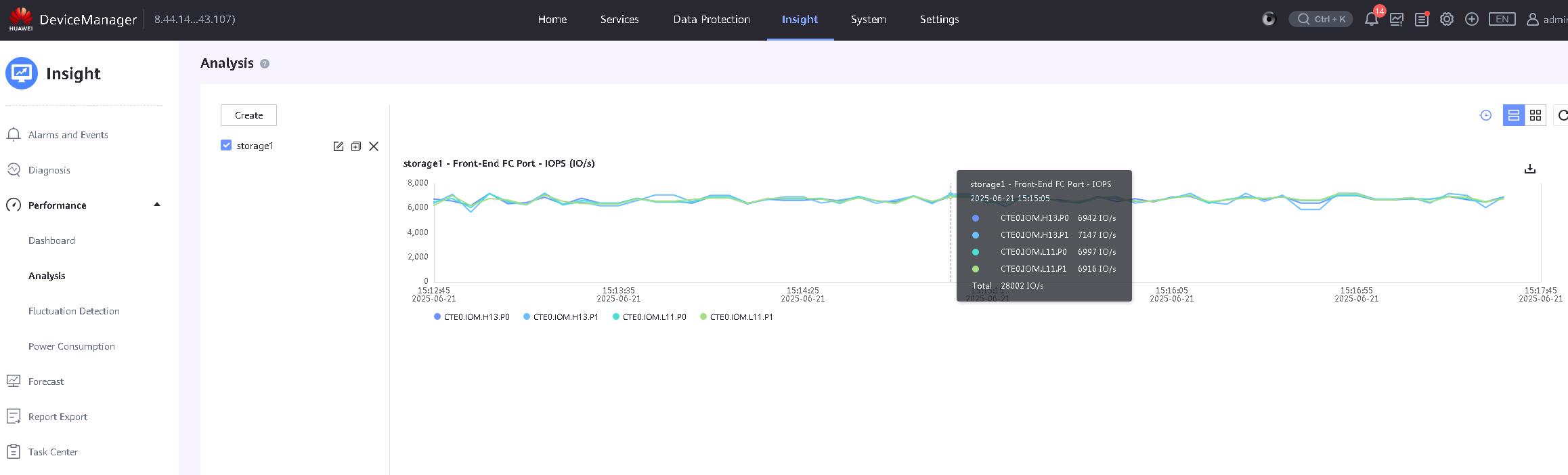

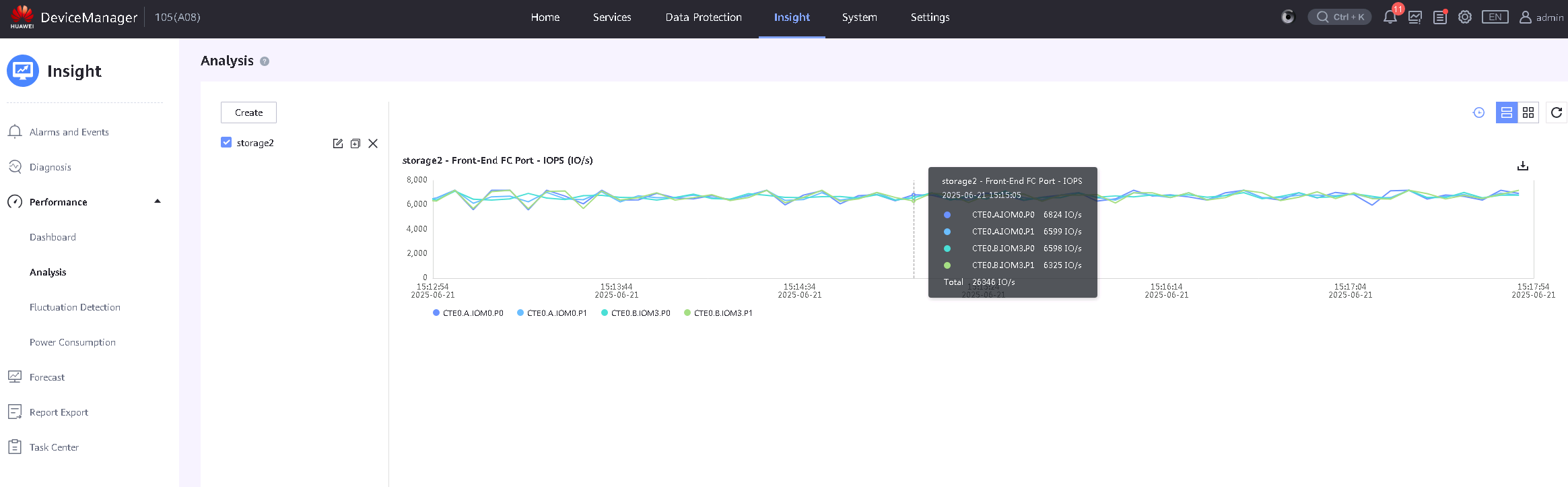

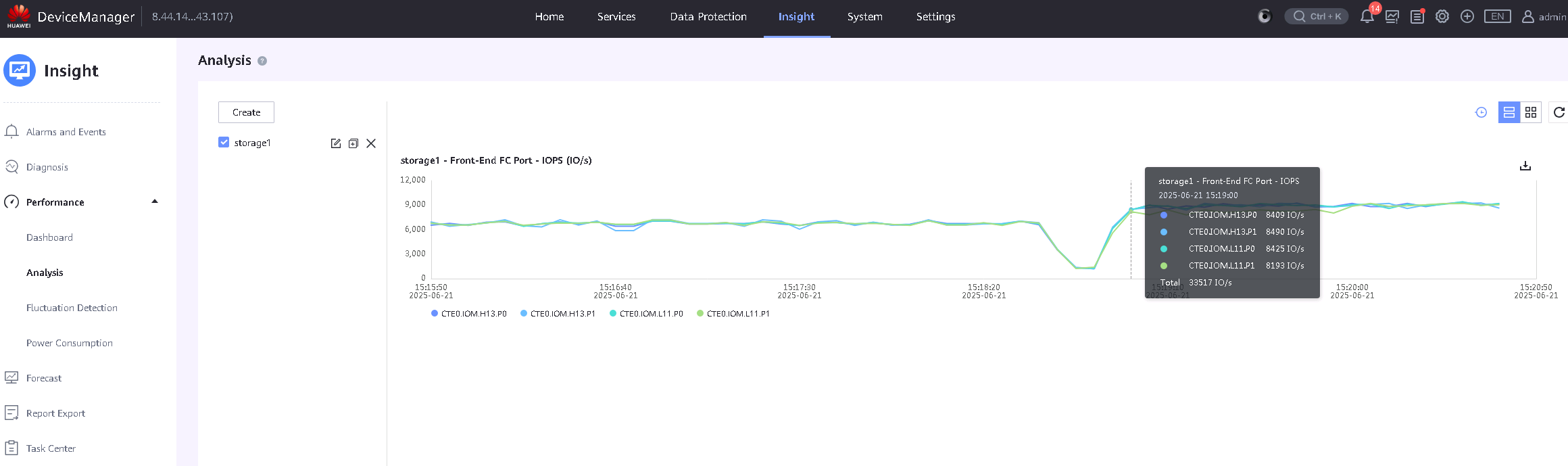

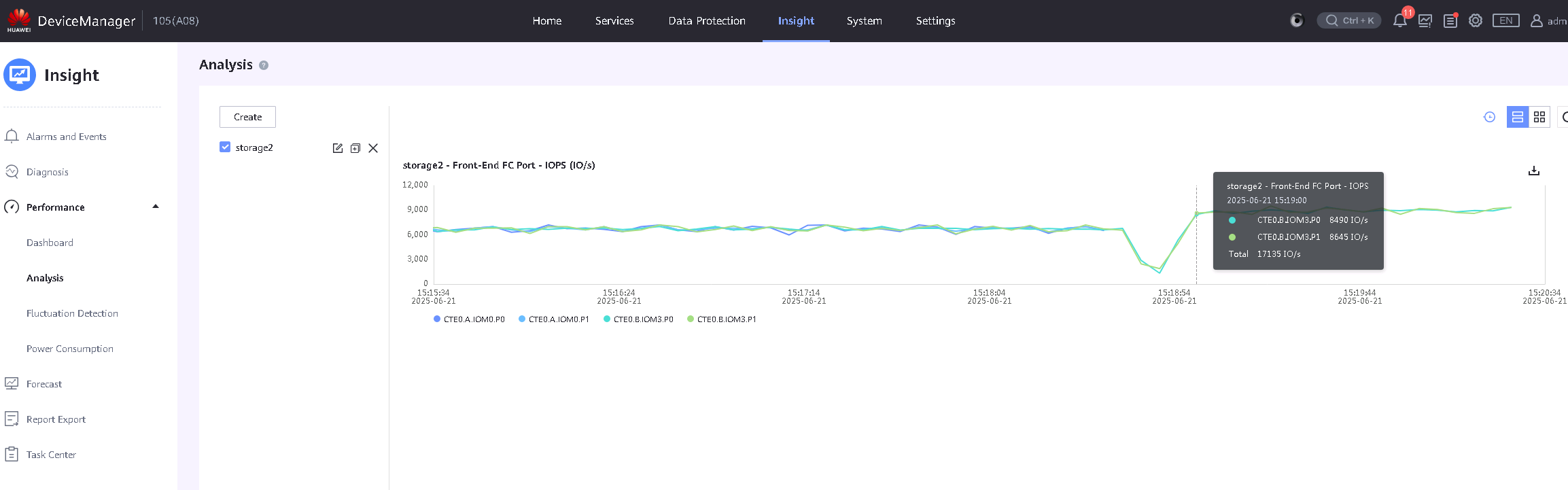

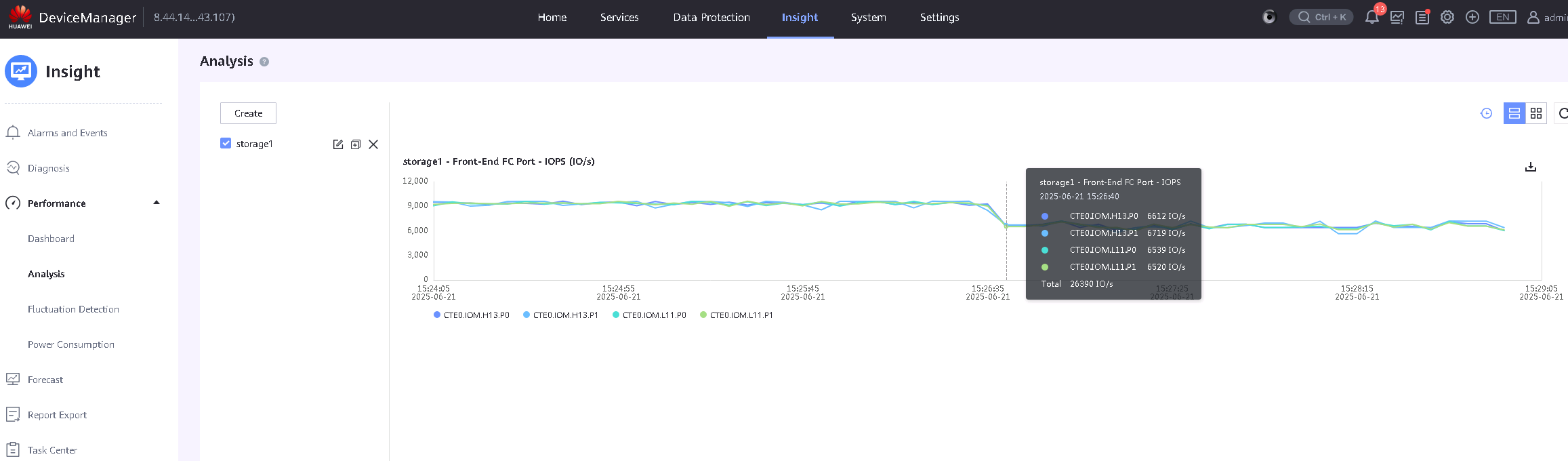

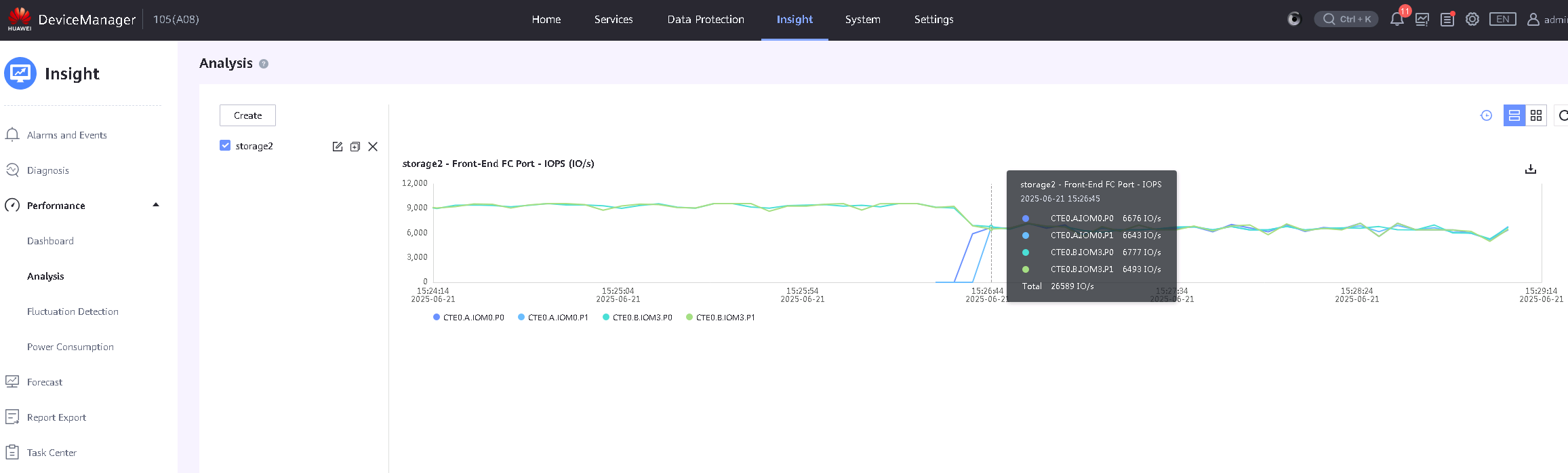

4. Performance and Stability Test

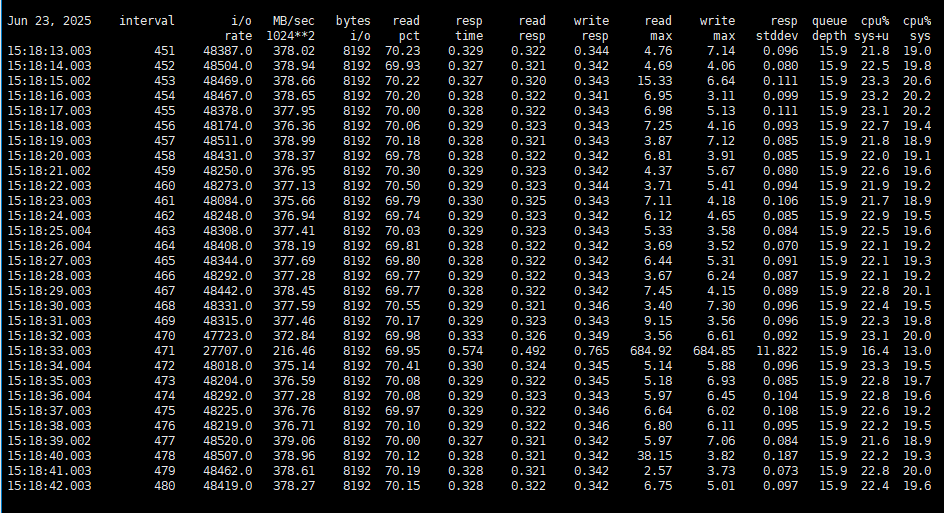

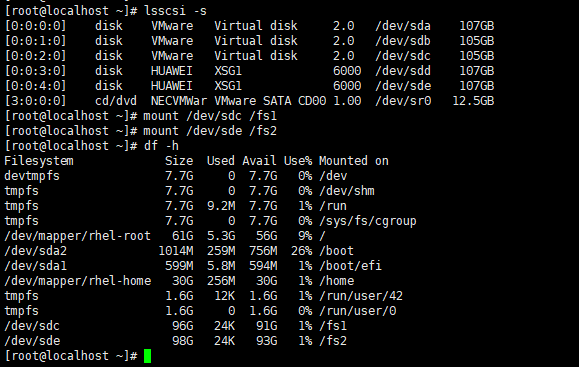

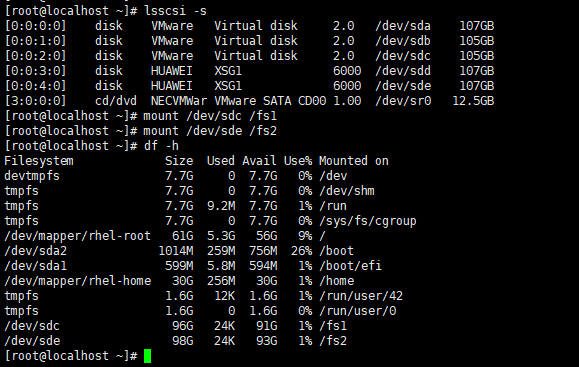

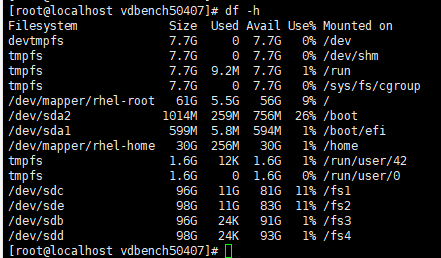

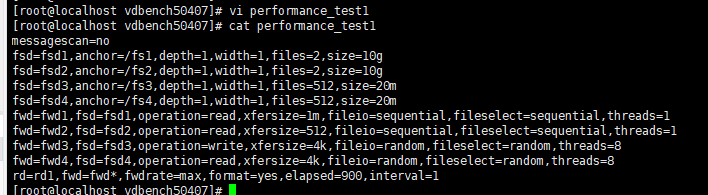

4.1 File System Mixed Read/Write Test

Test Purpose | To Verify That Read and Write Performance Meets Standards |

Test Networking | Storage and VMware ESXi Compatibility Test Networking |

Prerequisites | 1.The network has been correctly set up according to the networking diagram, and the physical links are functioning properly. 2.LUN mapping is functioning correctly. 3.Multipath is correctly installed and configured. 4.Java is correctly installed. 5.Vdbench is correctly installed. |

Test Procedure | 1.Log in to the storage management system, create mappings, and add four 100GB LUNs to the host. 2.Perform a LUN scan on the host. For half of the scanned LUNs, create datastores and add them to the VM as « Virtual Disks ». For the other half, add the LUNs to the VM as « RDM Disks ». In the VM, perform vdbench file system read/write operations on the scanned LUNs. 3.Run the Vdbench test using the command: ./vdbench -f parmfile. |

Expected Result | 1.The read/write operations should proceed without any issues. The relevant performance data (such as bandwidth, IOPS, latency, etc.) should meet the product’s performance standards. |

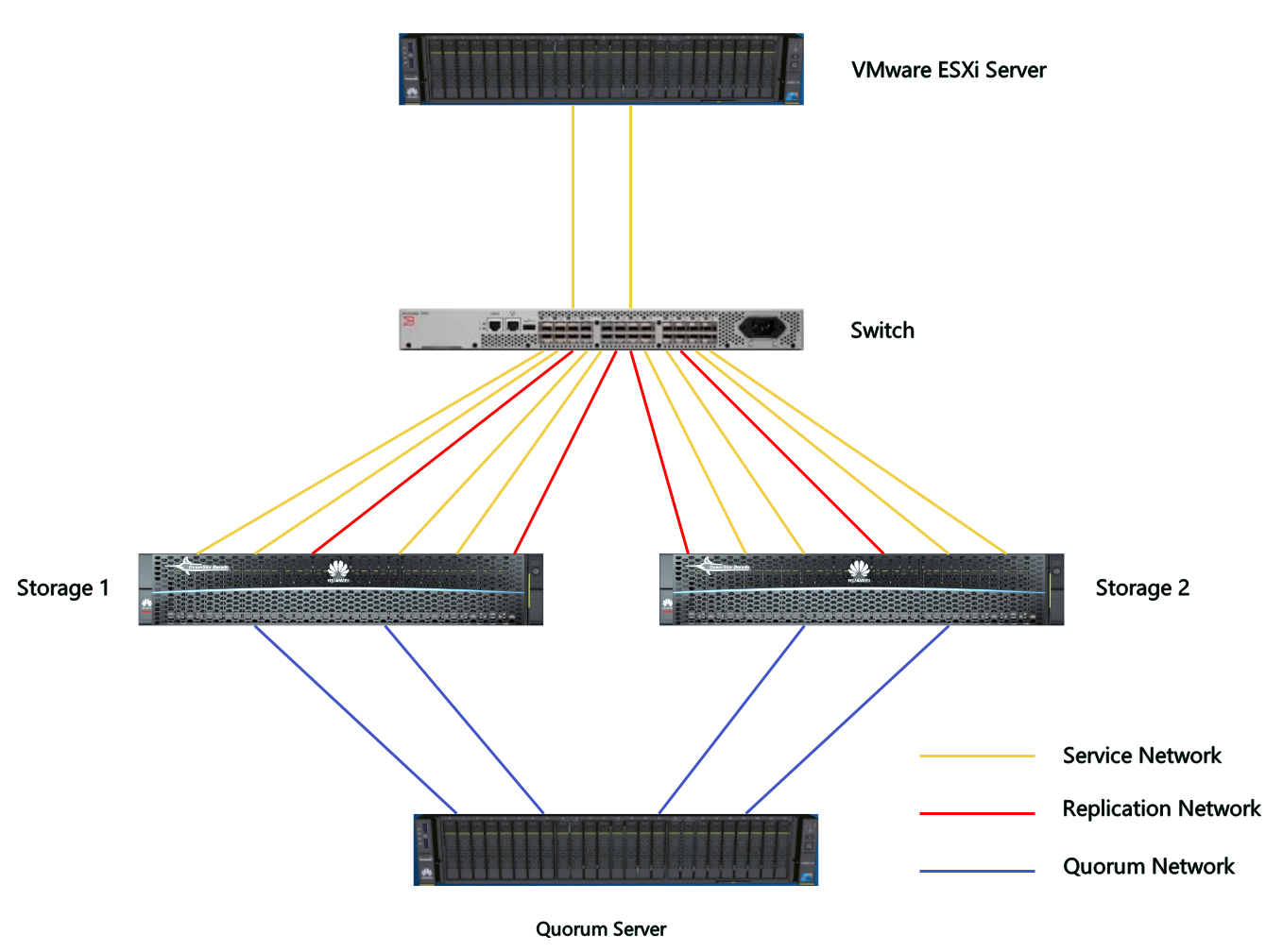

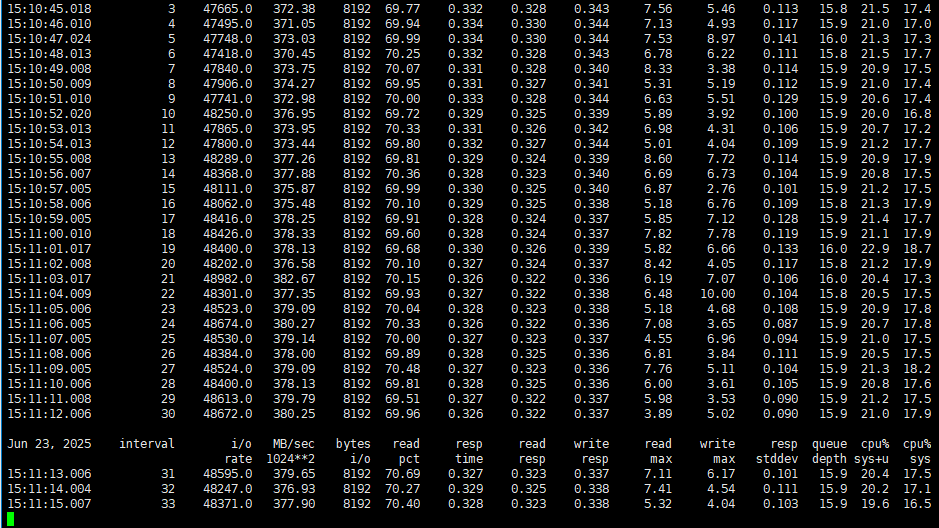

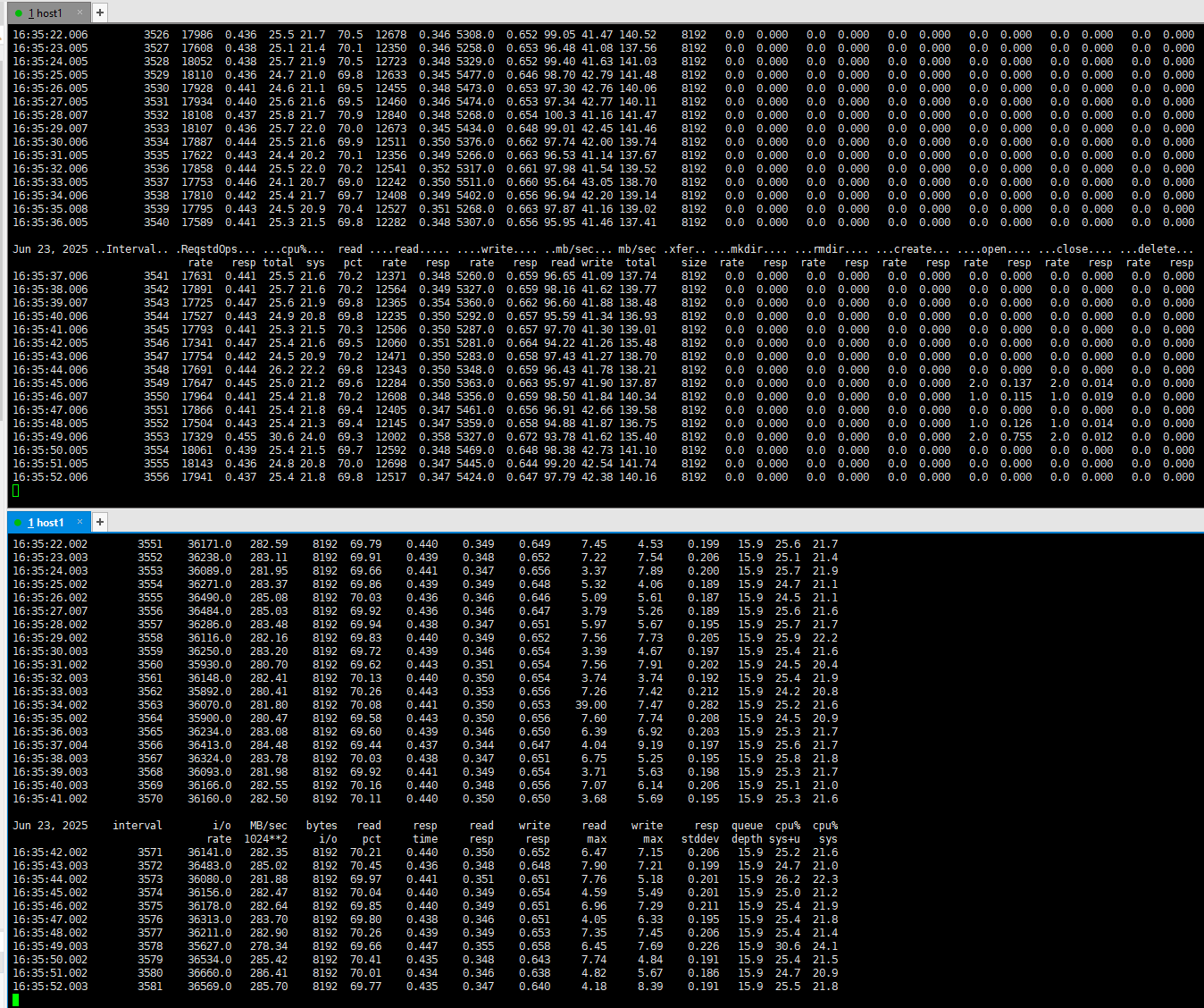

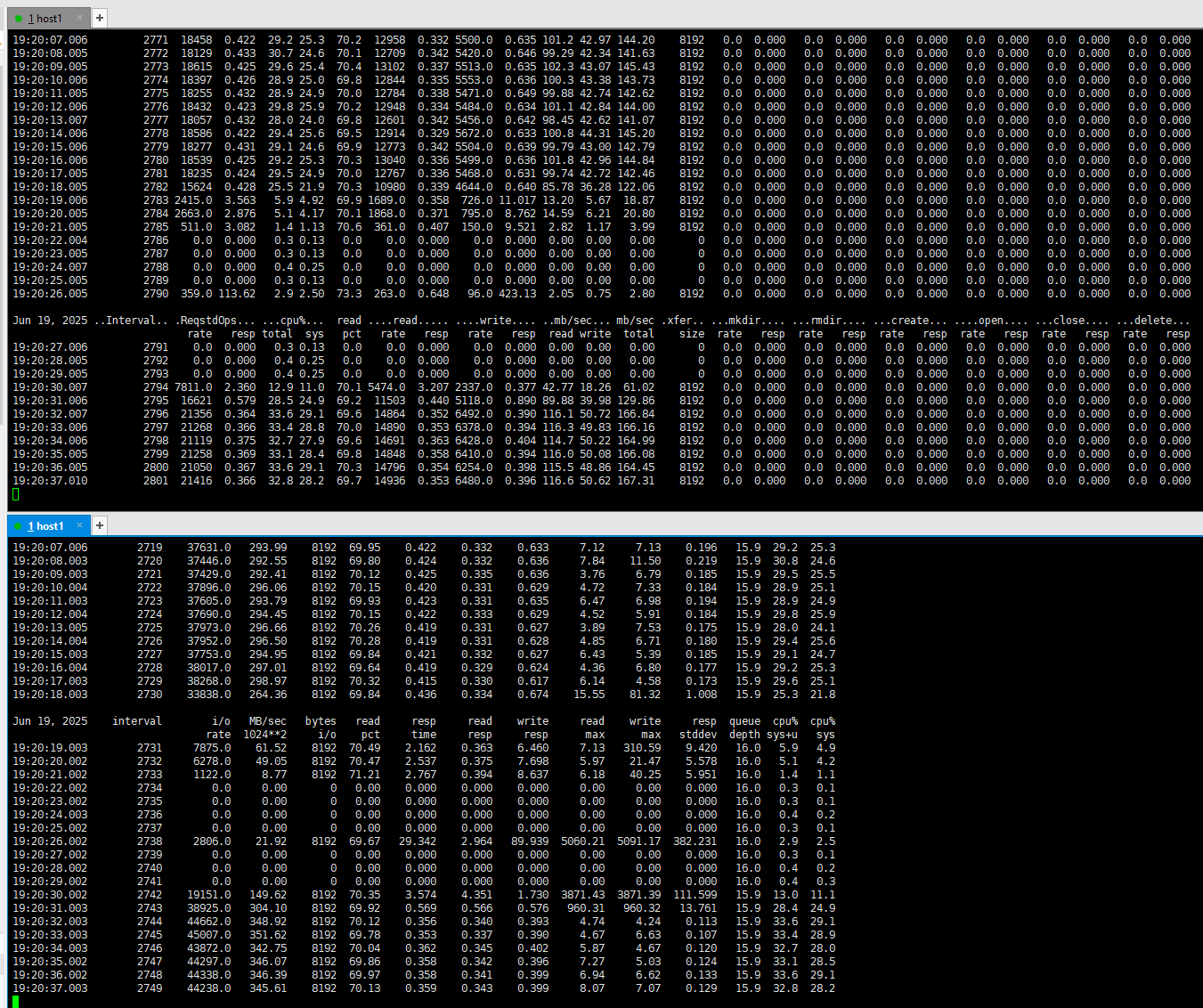

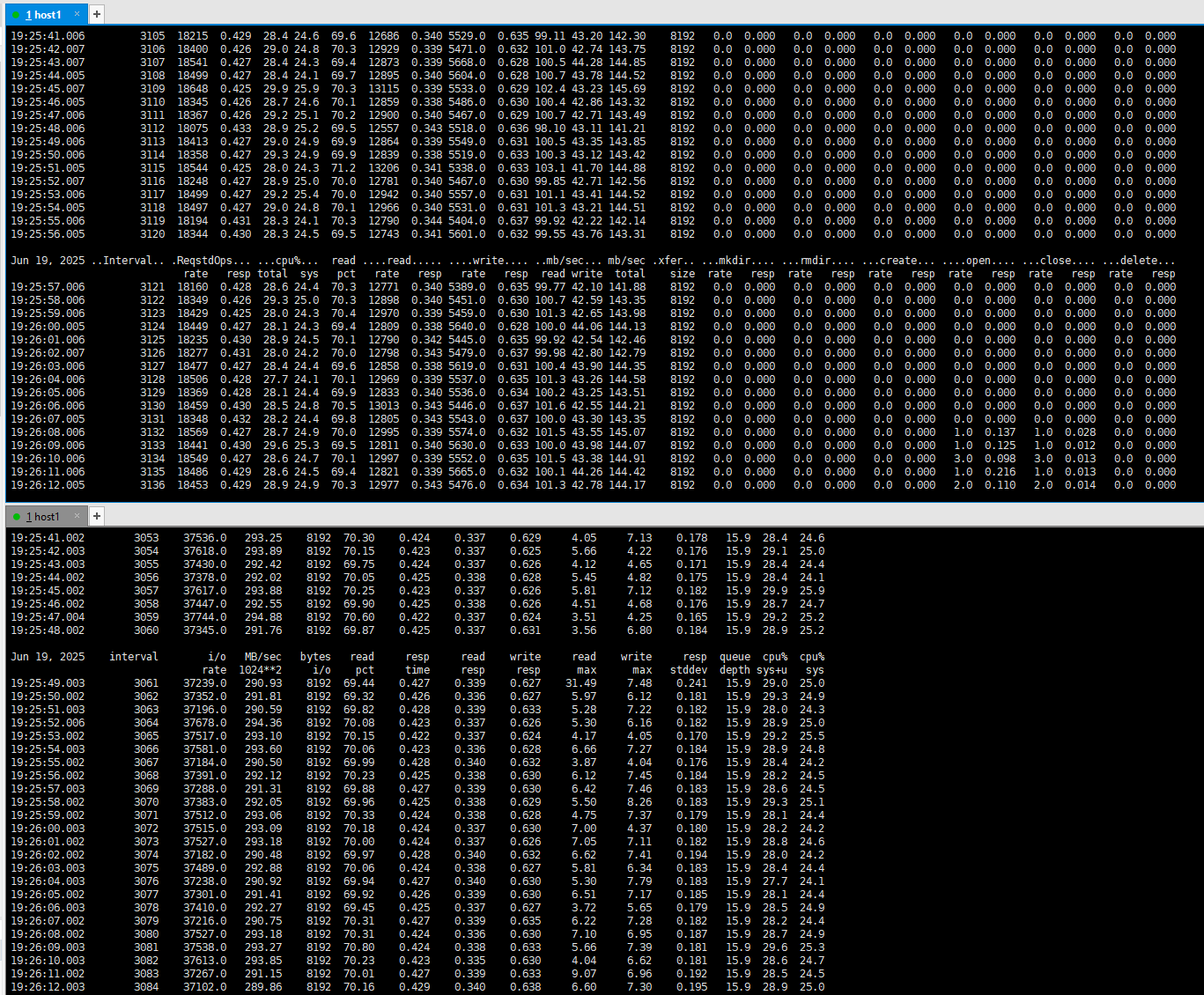

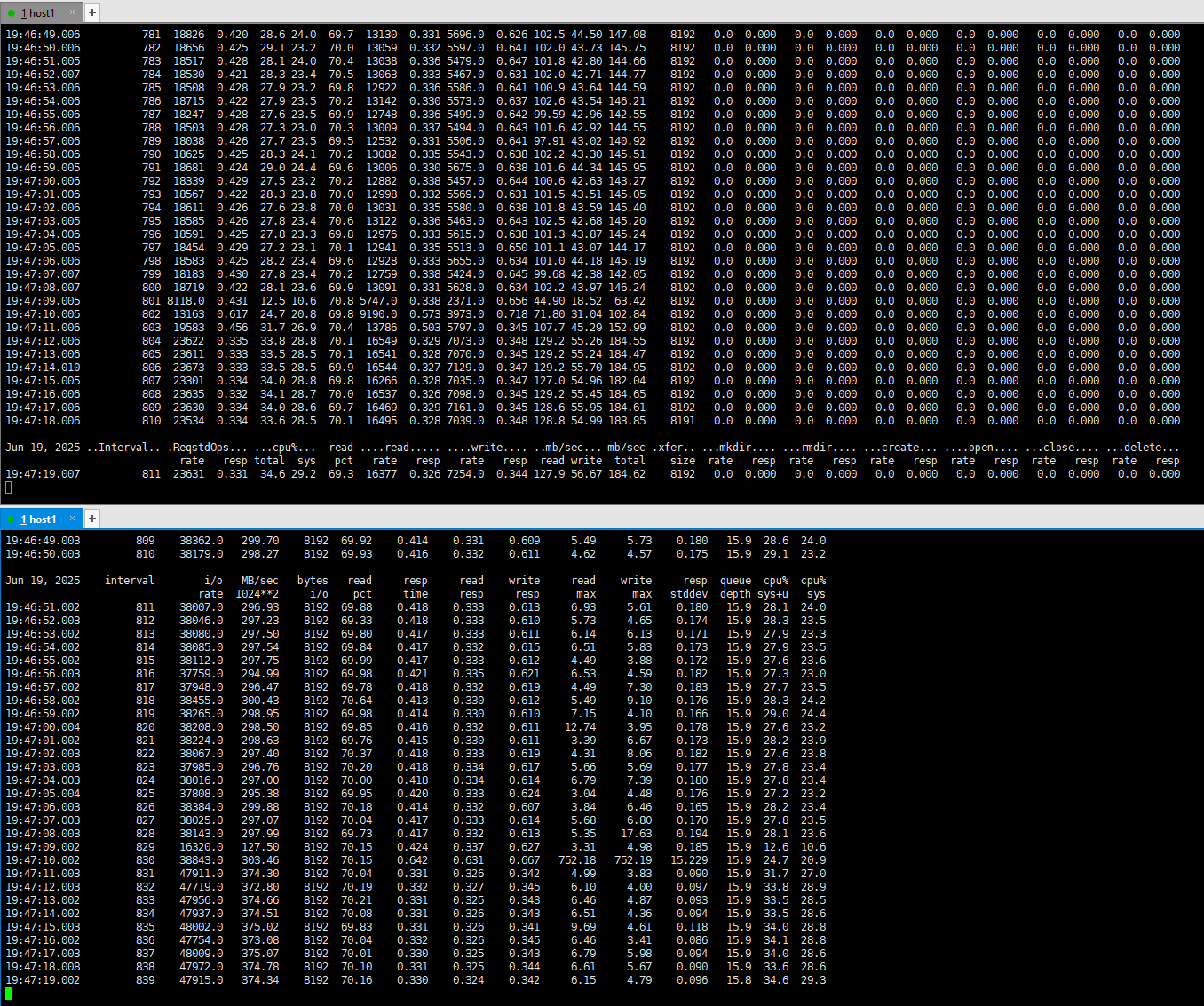

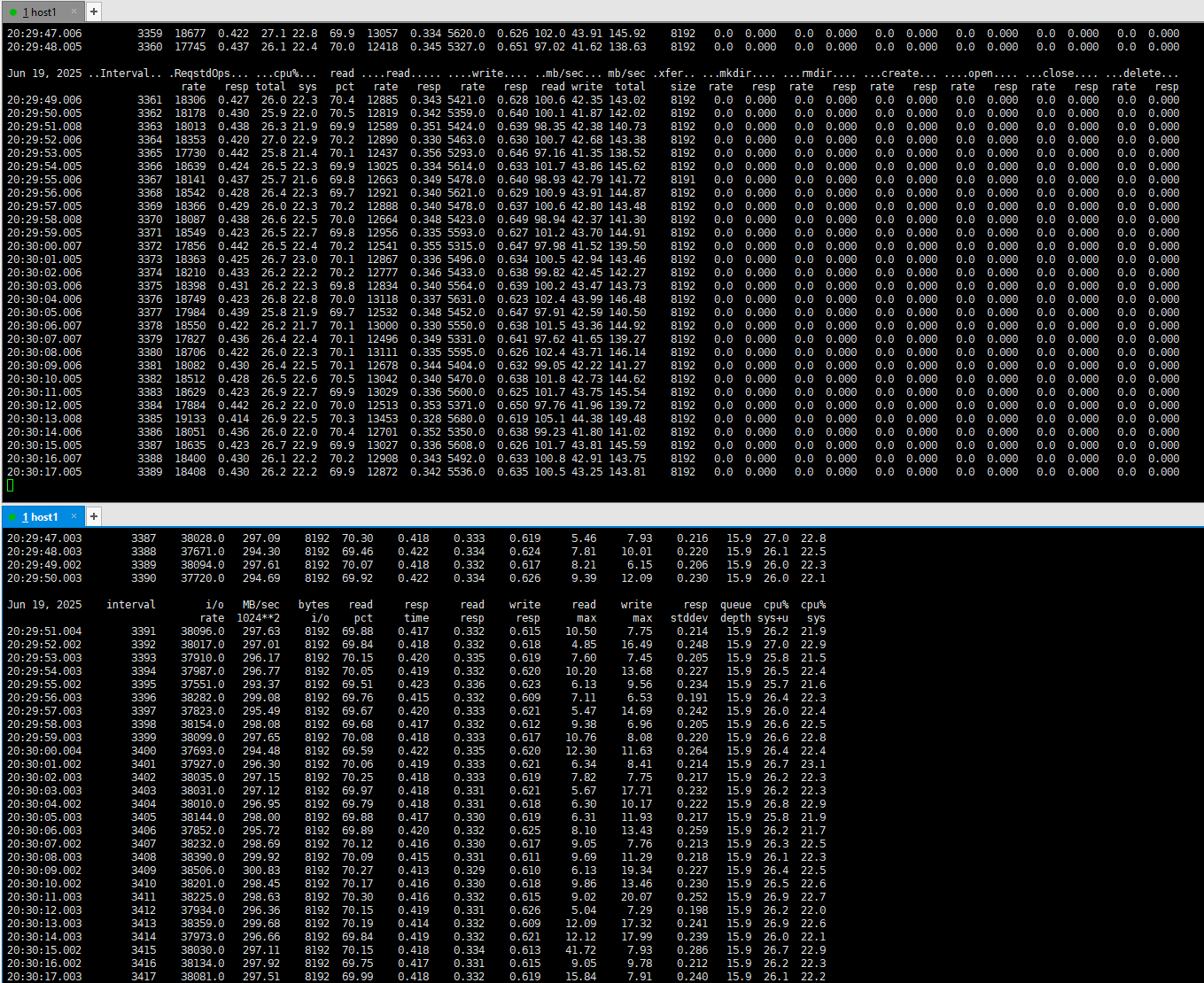

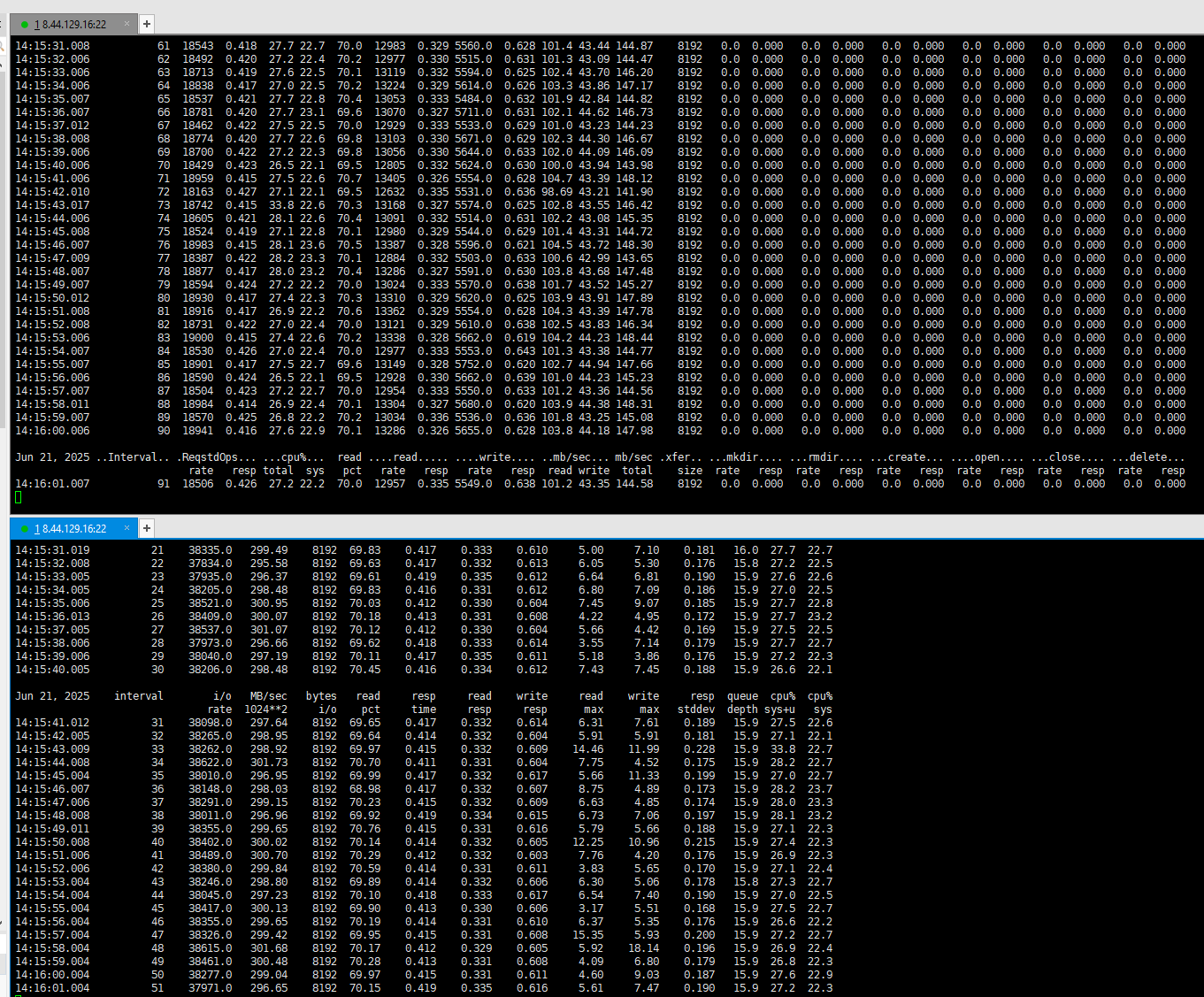

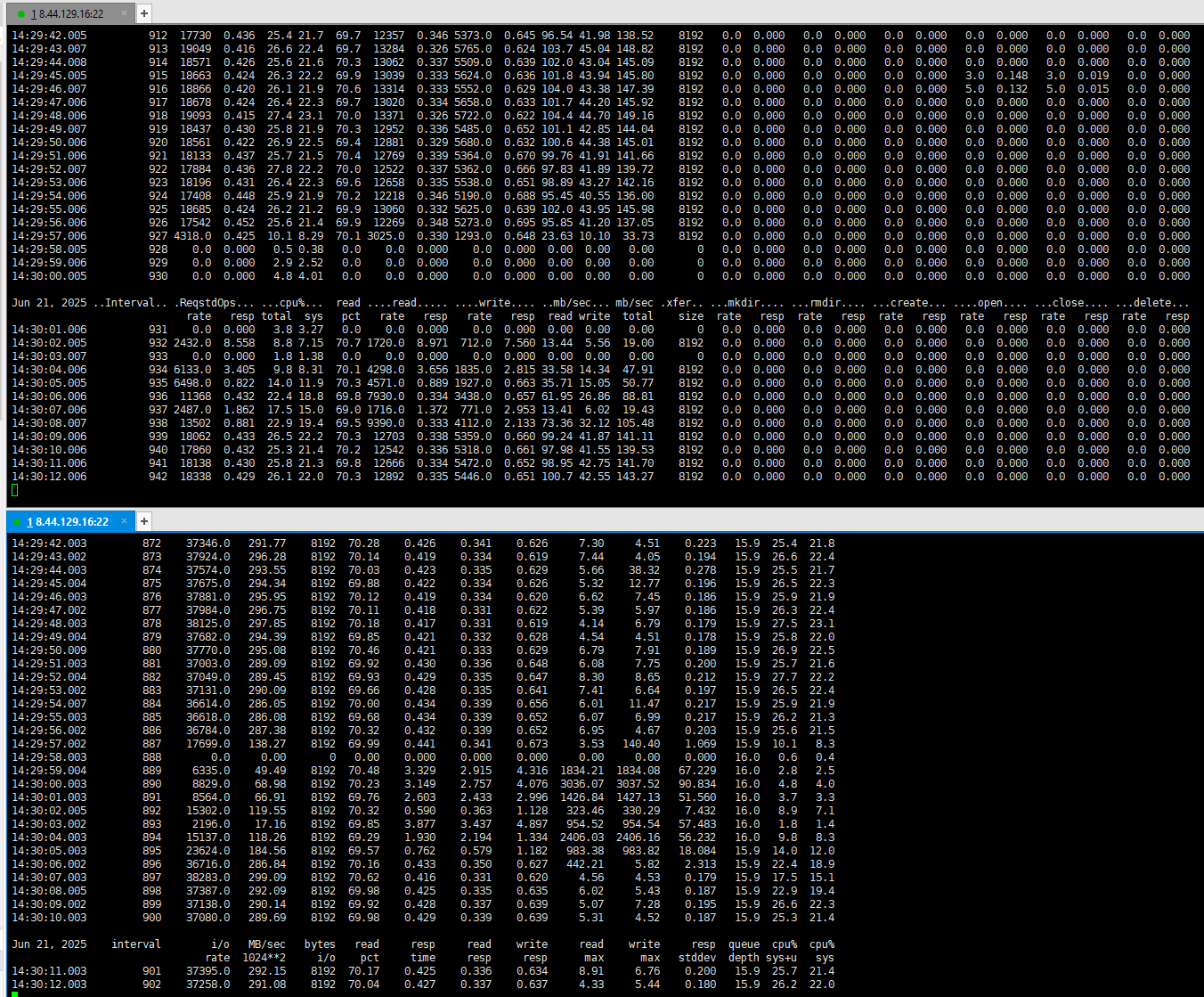

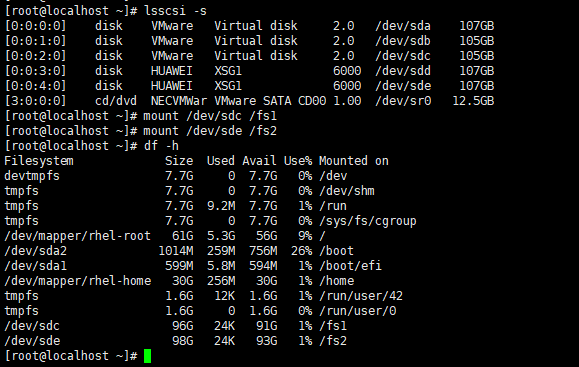

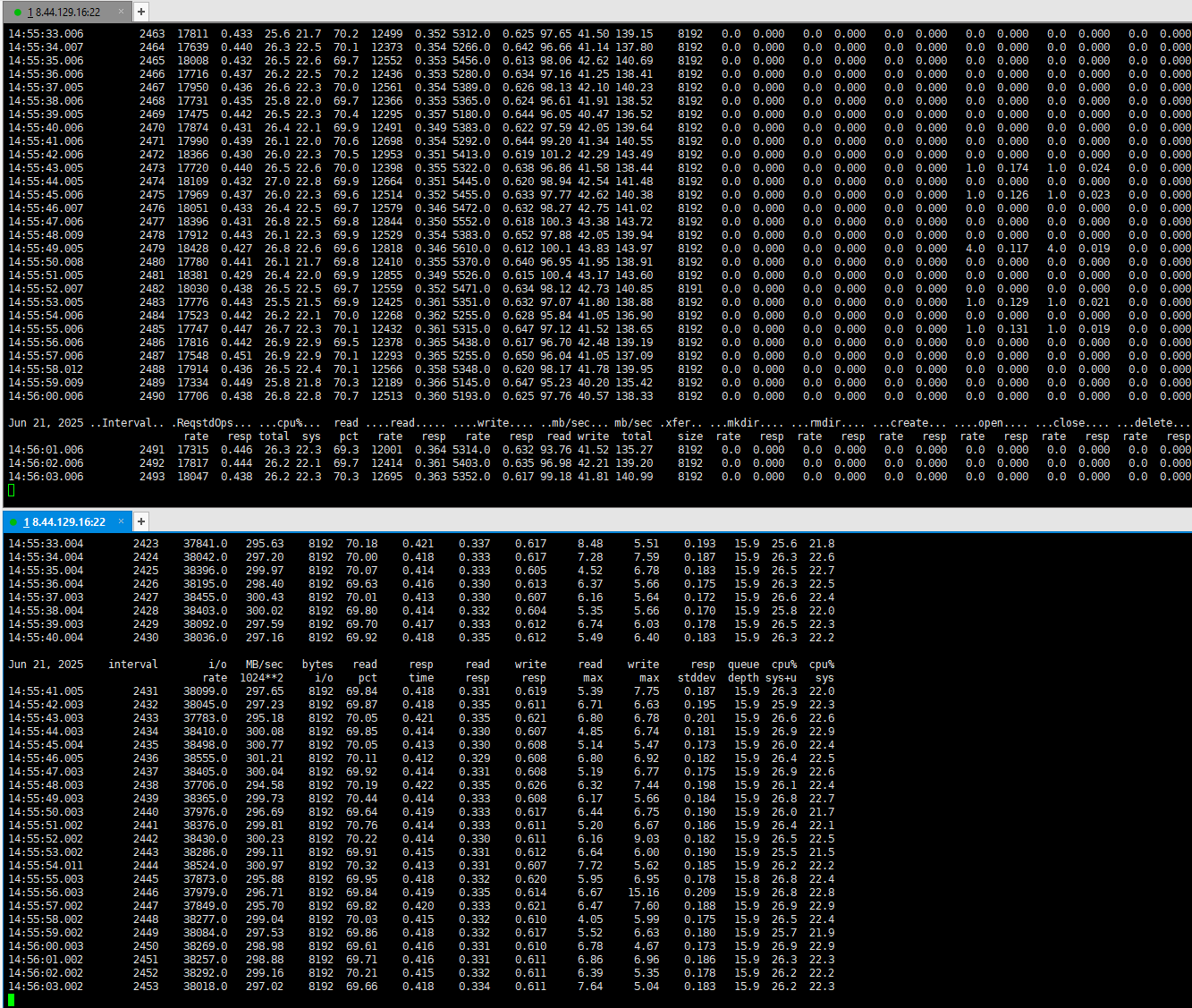

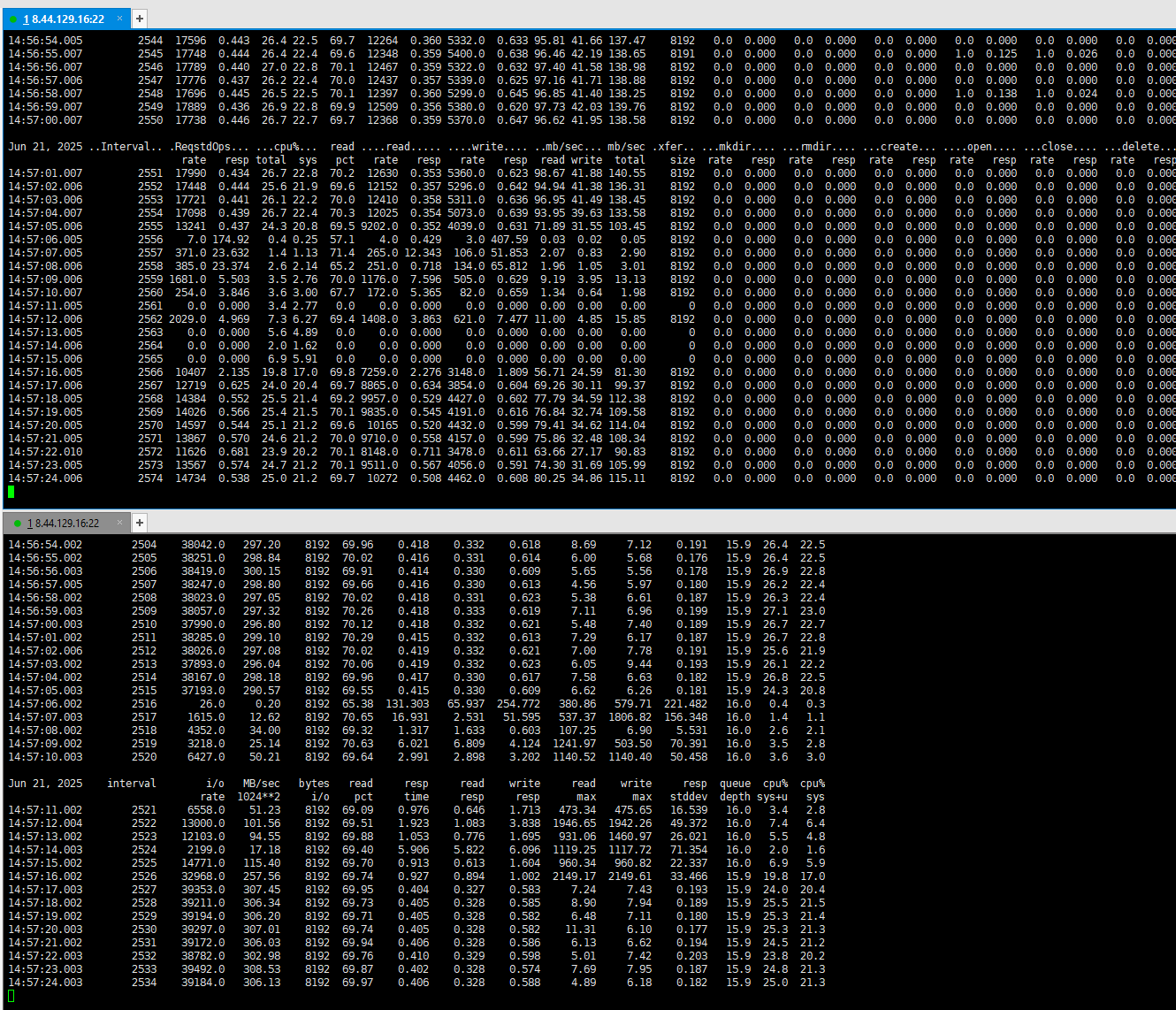

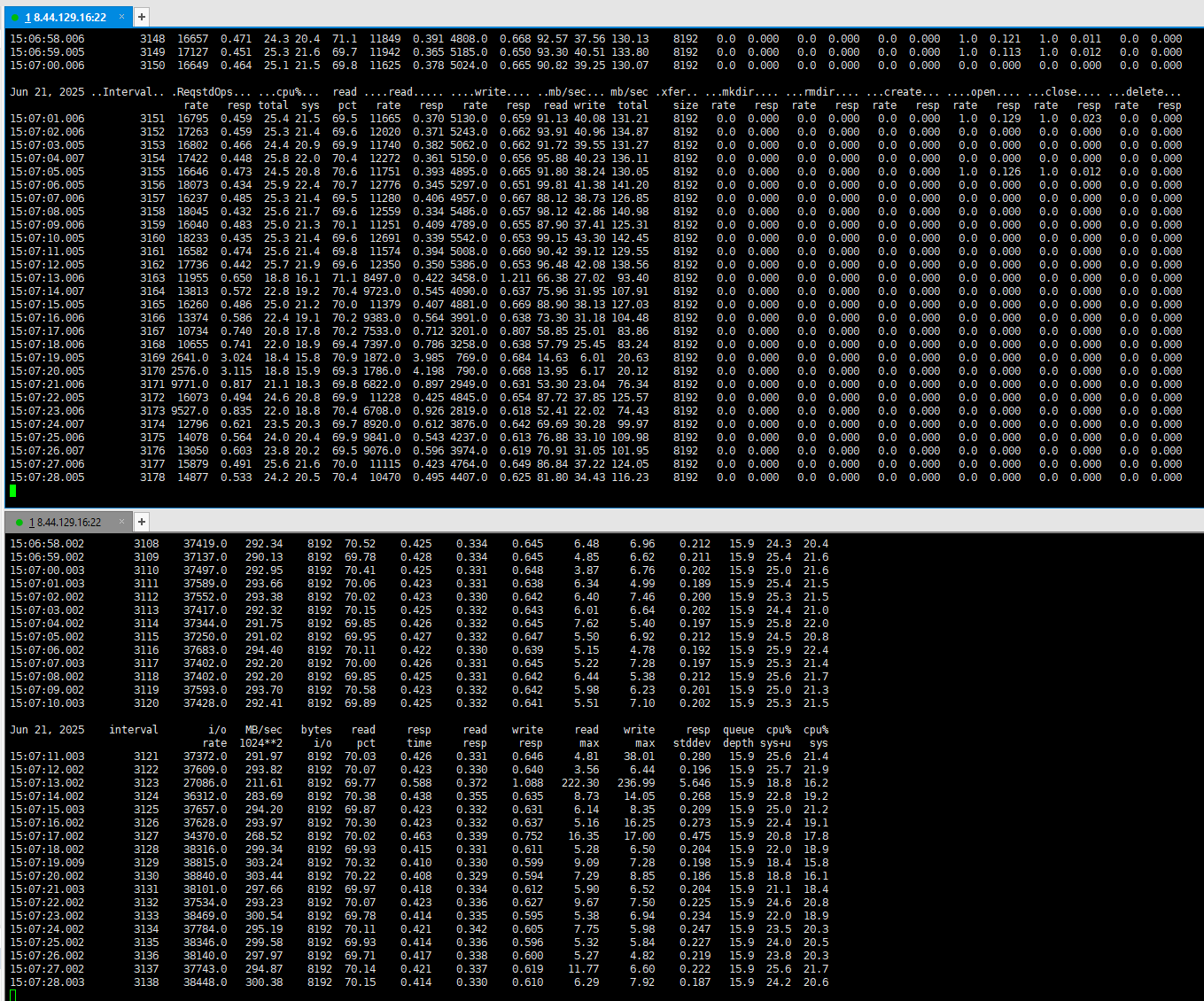

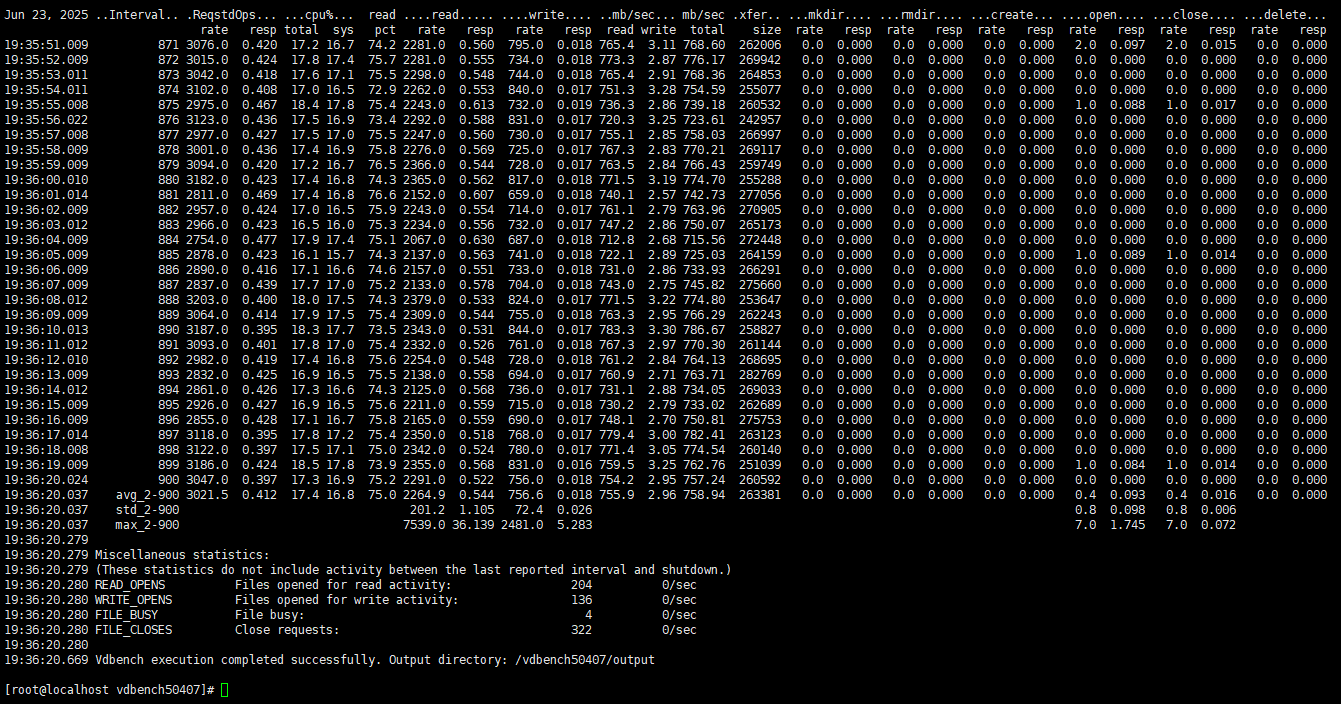

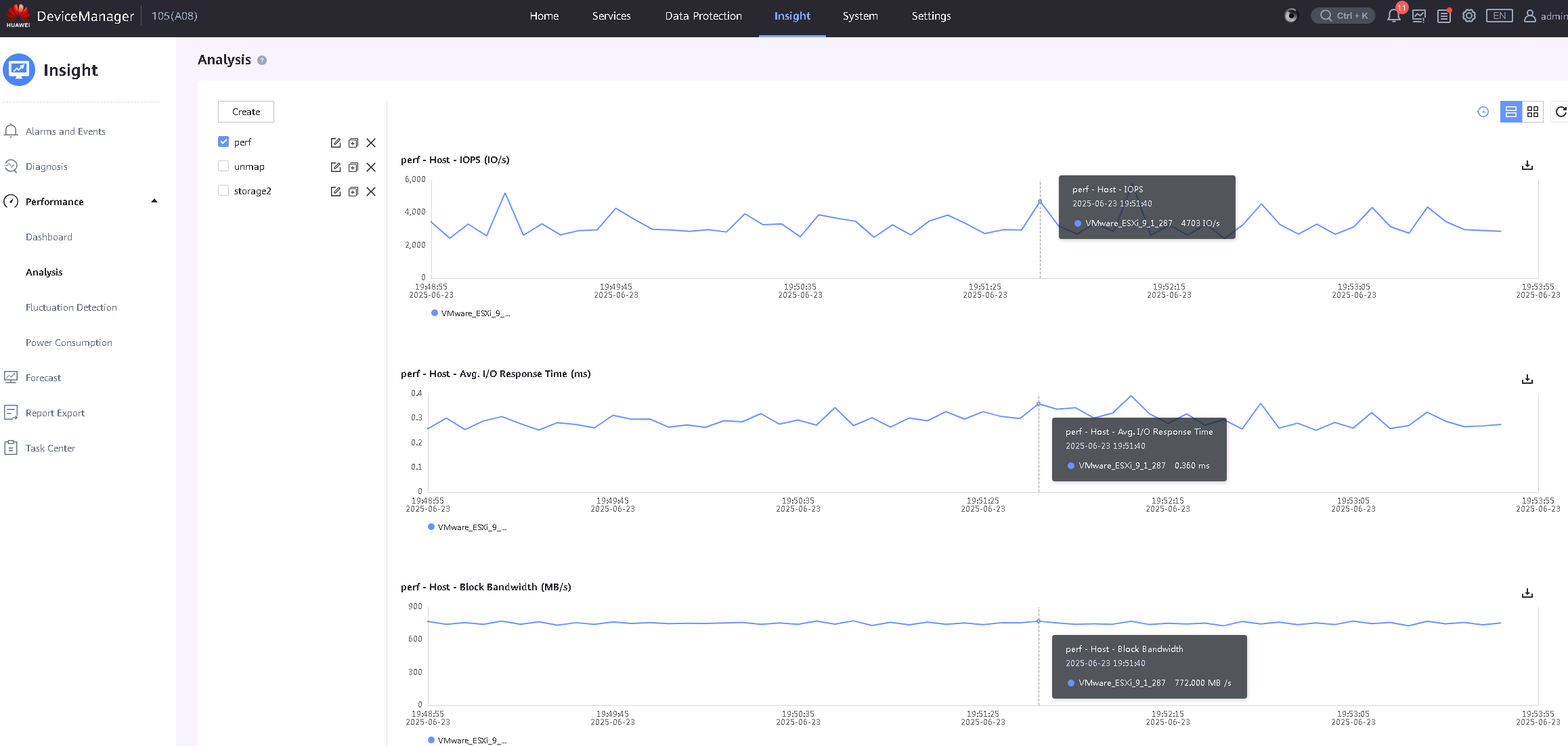

Test Record | 1.Create the file system and mount it.

2.Edit the read/write script.

3.Run the vdbench test.

|

Test Conclusion | Passed |
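The parameter file passed to `./vdbench -f parmfile` is not reproduced in this report. As an illustration only, the sketch below generates a small file system parameter file for a mixed read/write run. The mount point, file count, file size, transfer size, read percentage, thread count, and duration are all assumed values, not the settings used in the test.

```python
def build_fs_parmfile(anchor: str,
                      files: int = 100,
                      file_size: str = "10m",
                      xfersize: str = "8k",
                      rdpct: int = 70,
                      threads: int = 8,
                      elapsed_s: int = 600) -> str:
    """Build a Vdbench file system parameter file for a mixed read/write run.

    All defaults are illustrative assumptions, not the report's settings.
    """
    if not 0 <= rdpct <= 100:
        raise ValueError("rdpct must be between 0 and 100")
    lines = [
        # File system definition: where the test files live and how many to create.
        f"fsd=fsd1,anchor={anchor},depth=1,width=1,files={files},size={file_size}",
        # File system workload: random I/O with rdpct percent reads, the rest writes.
        f"fwd=fwd1,fsd=fsd1,operation=read,rdpct={rdpct},xfersize={xfersize},"
        f"fileio=random,fileselect=random,threads={threads}",
        # Run definition: create the files first, then run uncapped for elapsed_s seconds.
        f"rd=rd1,fwd=fwd1,fwdrate=max,format=yes,elapsed={elapsed_s},interval=1",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # "/mnt/lun_b" is a hypothetical mount point for the file system under test.
    print(build_fs_parmfile("/mnt/lun_b"), end="")
```

Writing the returned text to a file named `parmfile` in the Vdbench directory would let the command from step 3 pick it up unchanged.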

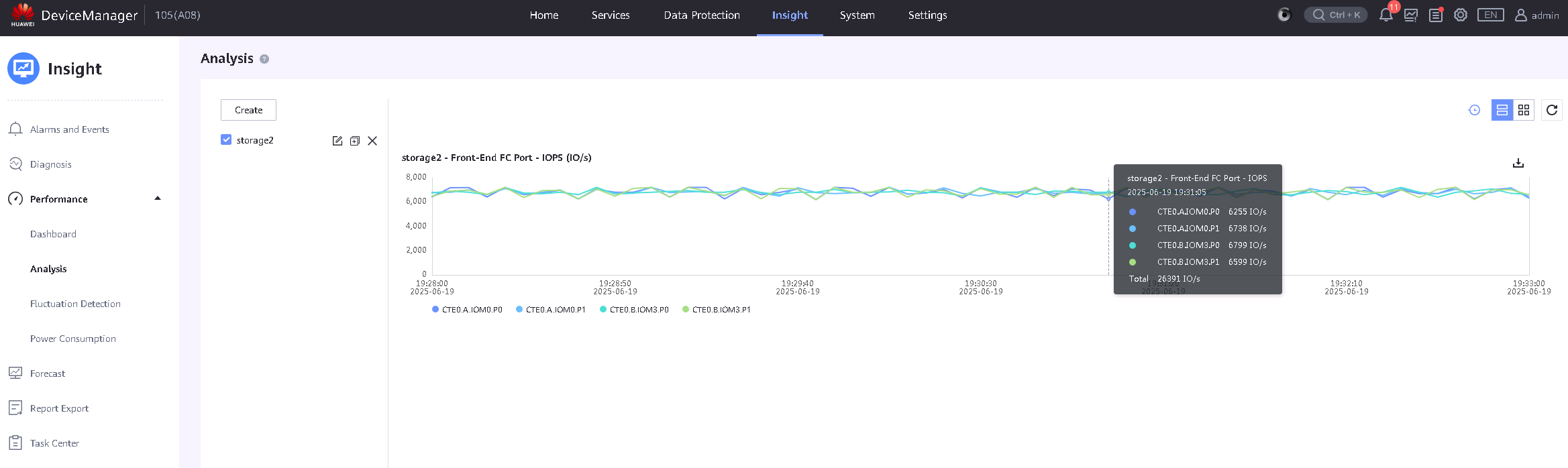

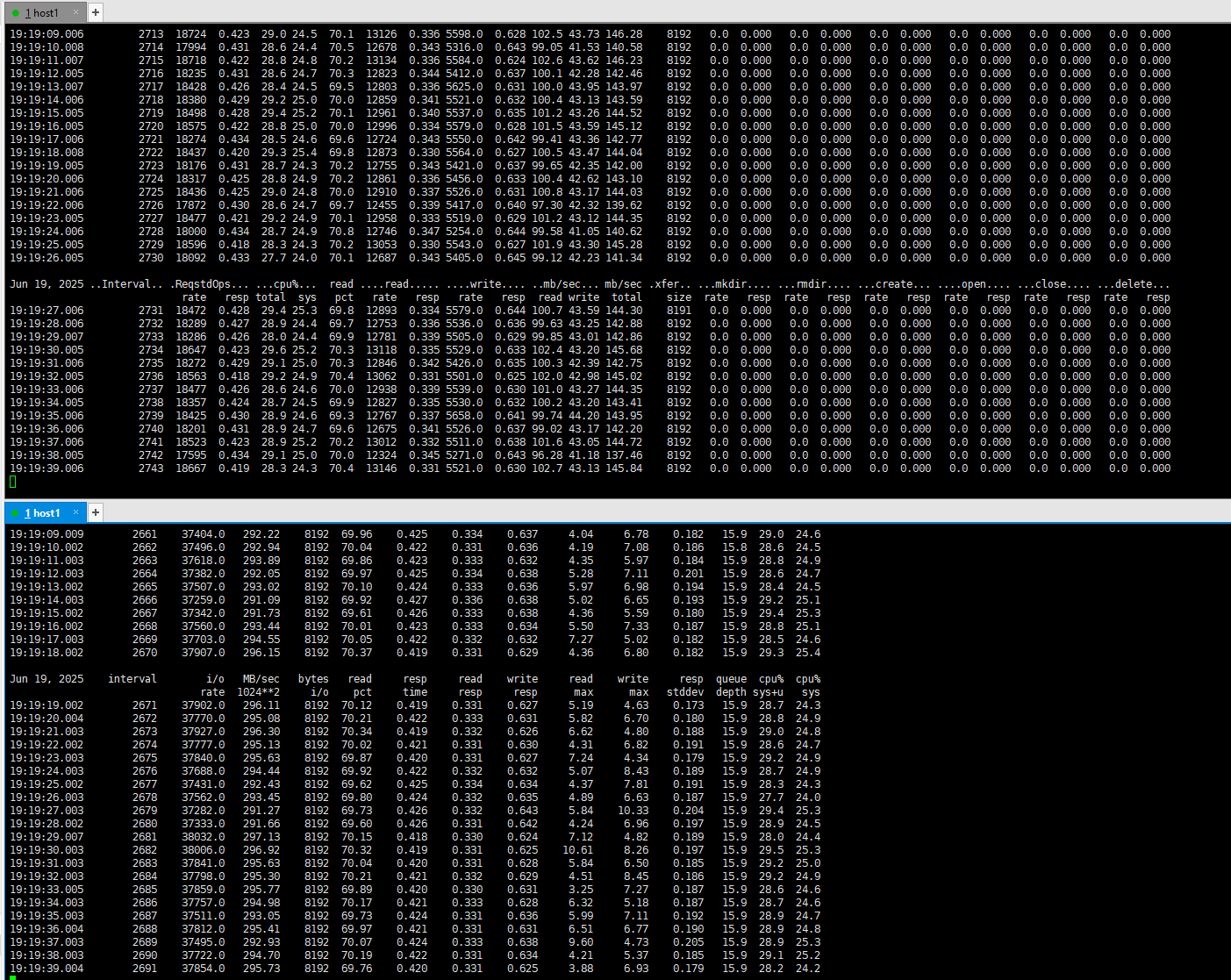

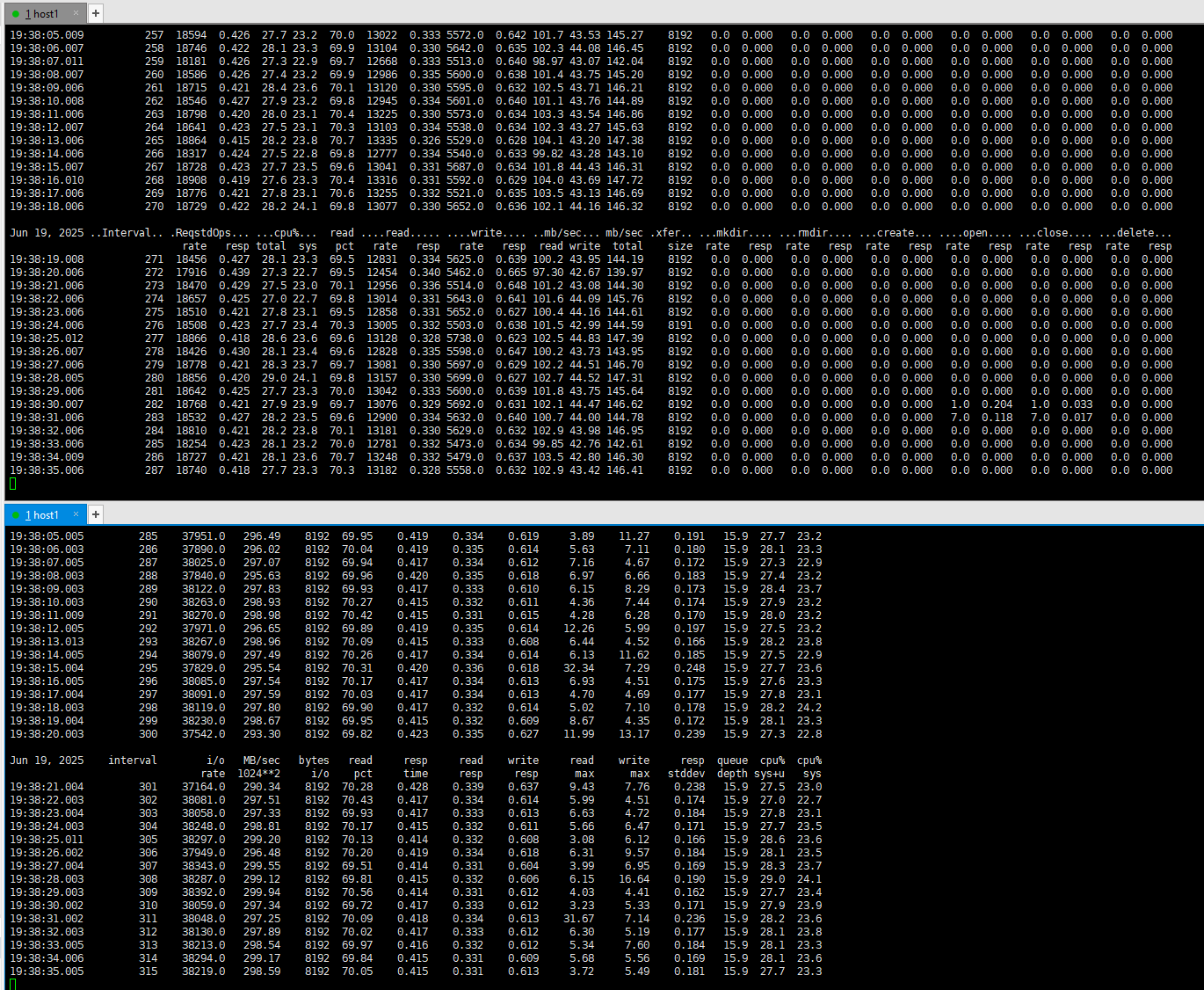

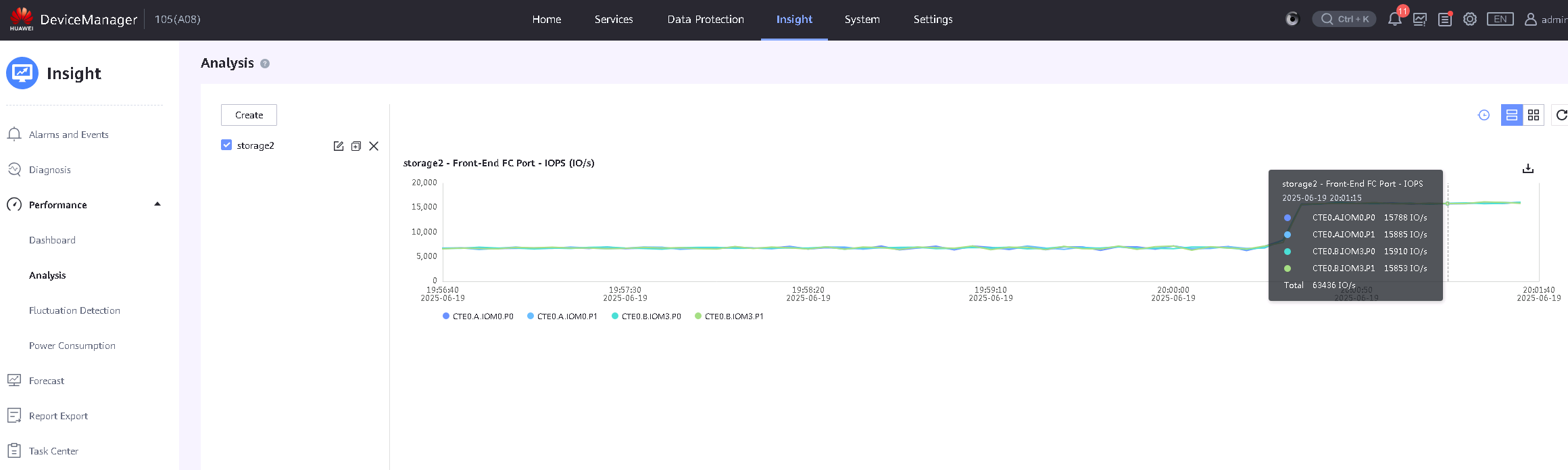

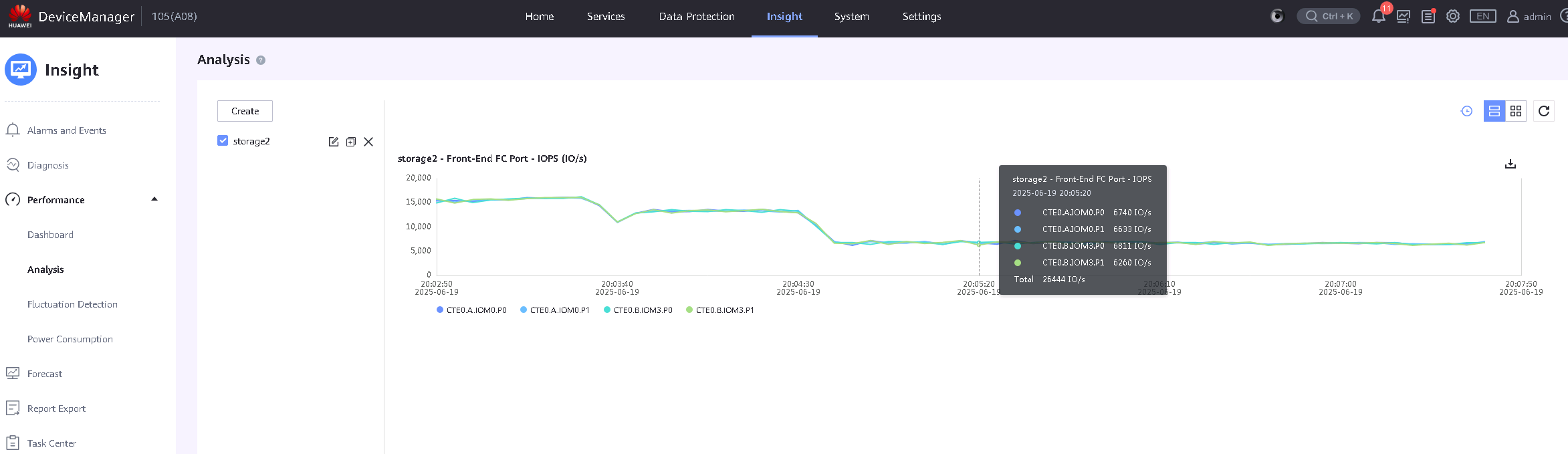

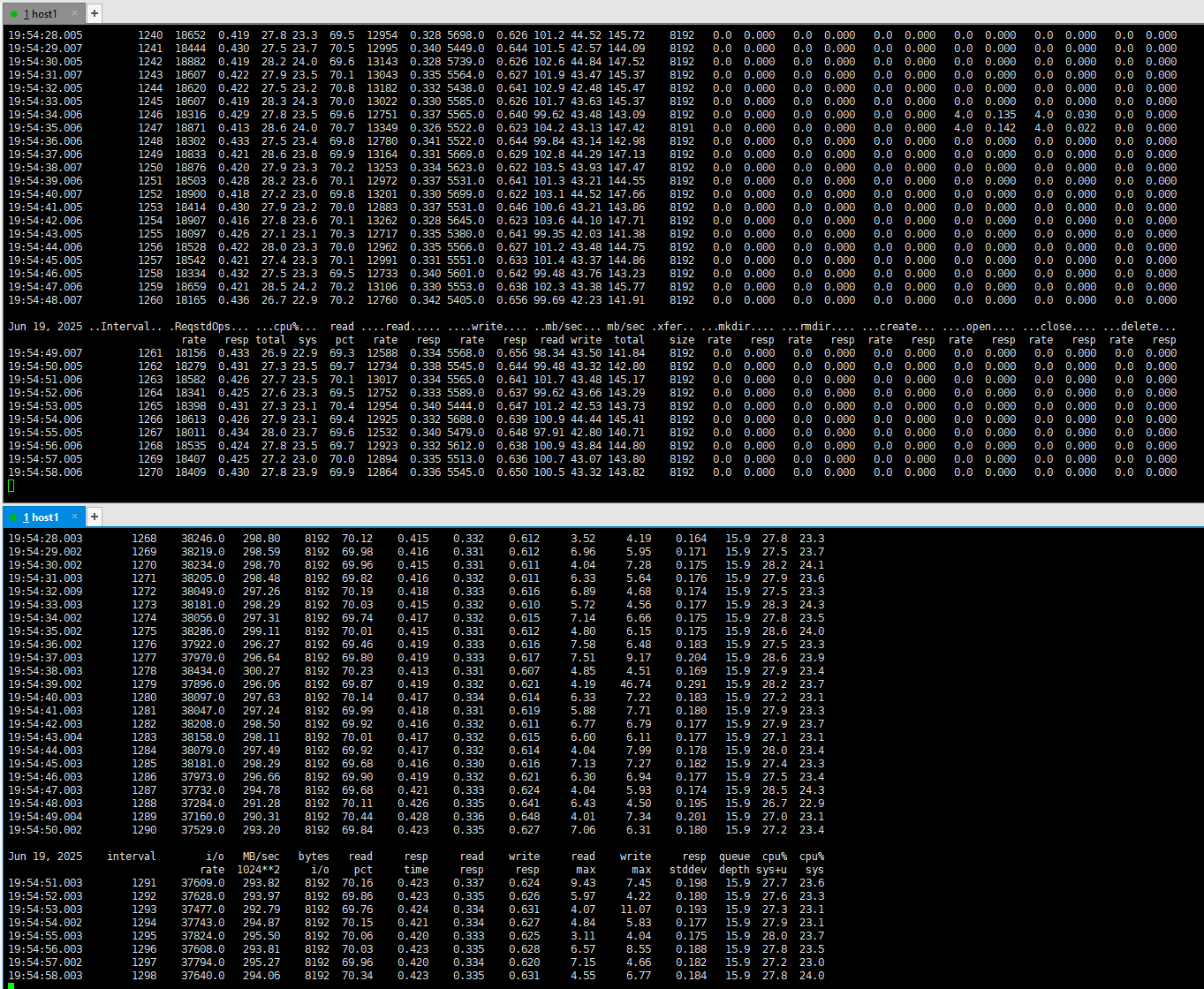

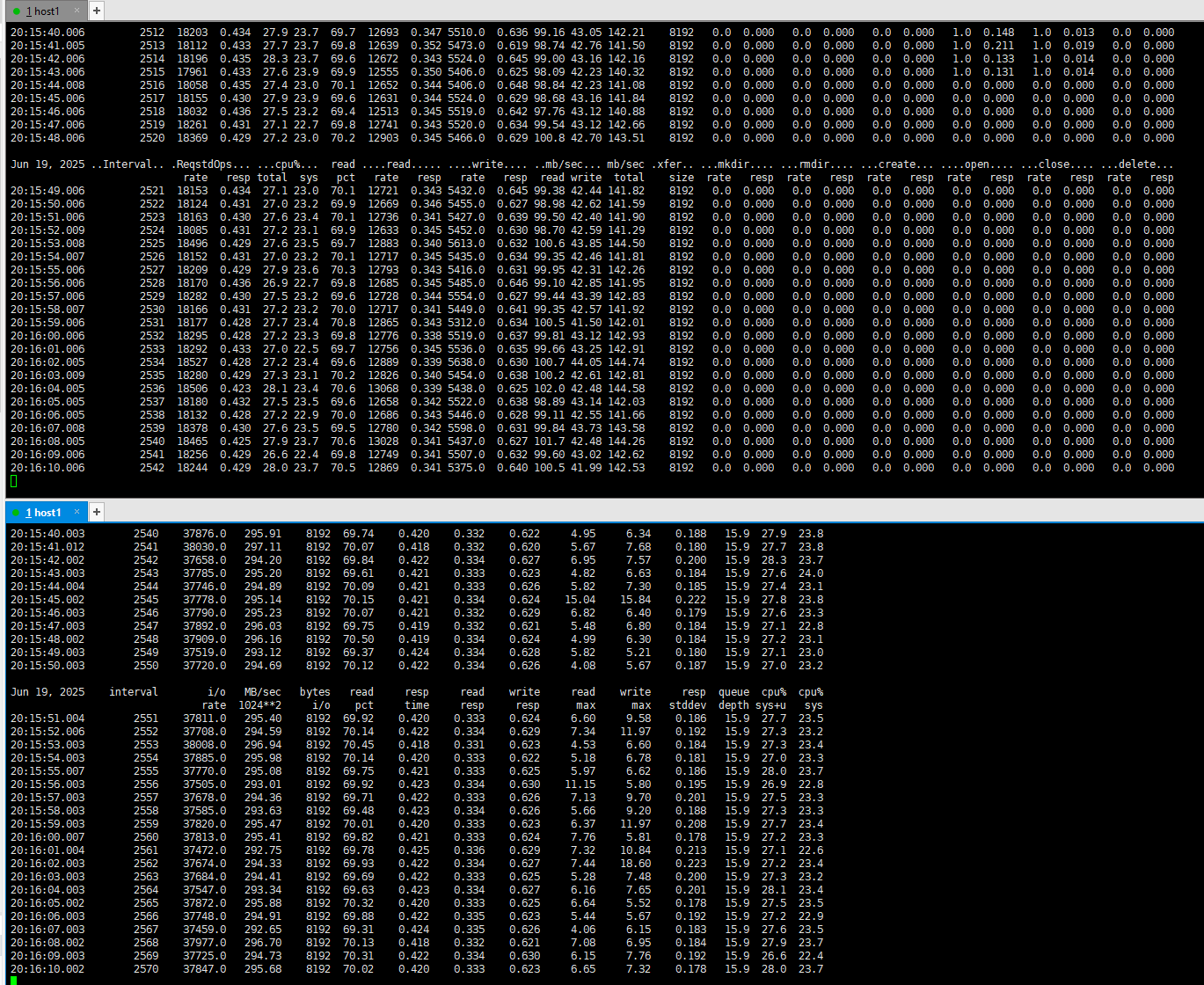

4.2 Raw Disk Mixed Read/Write Test

Test Purpose | To Verify That Read and Write Performance Meets Standards |

Test Networking | Storage and VMware ESXi Compatibility Test Networking |

Prerequisites | 1.The network has been correctly set up according to the networking diagram, and the physical links are functioning properly. 2.LUN mapping is functioning correctly. 3.Multipath is correctly installed and configured. 4.Java is correctly installed. 5.Vdbench is correctly installed. |

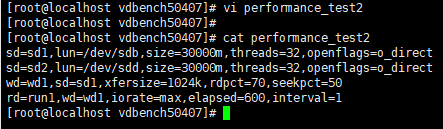

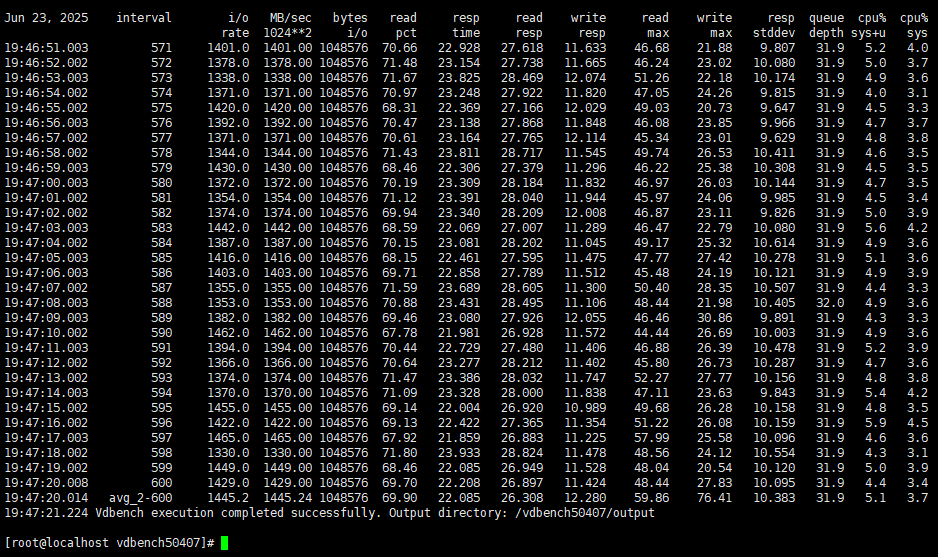

Test Procedure | 1.Log in to the storage management system, create mappings, and add two 100GB LUNs to the host. 2.Perform a LUN scan on the host. For half of the scanned LUNs, create datastores and add them to the VM as « Virtual Disks ». For the other half, add the LUNs to the VM as « RDM Disks ». In the VM, perform vdbench raw disk read/write operations on the scanned LUNs. 3.Run the Vdbench test using the command: ./vdbench -f parmfile. |

Expected Result | 1.The read/write operations should proceed without any issues. The relevant performance data (such as bandwidth, IOPS, latency, etc.) should meet the product’s performance standards. |

Test Record | 1.Edit the read/write script.

2.Run the vdbench test.

|

Test Conclusion | Passed |
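For the raw disk case, the parameter file uses storage definitions (`sd`) and workload definitions (`wd`) instead of file system definitions. The sketch below is a hedged illustration of how such a file could be generated. The device paths and every workload value are assumptions, since the report does not list the script contents.

```python
from typing import Sequence


def build_raw_parmfile(devices: Sequence[str],
                       xfersize: str = "8k",
                       rdpct: int = 70,
                       seekpct: int = 100,
                       threads: int = 8,
                       elapsed_s: int = 600) -> str:
    """Build a Vdbench raw disk parameter file for a mixed read/write run.

    All defaults are illustrative assumptions. Writing to a raw device
    destroys any data on it, so only dedicated test disks should be listed.
    """
    if not devices:
        raise ValueError("at least one device is required")
    # One storage definition per raw device, opened with direct I/O.
    lines = [
        f"sd=sd{index},lun={device},openflags=o_direct,threads={threads}"
        for index, device in enumerate(devices, start=1)
    ]
    # One workload across all SDs: rdpct percent reads, seekpct percent random.
    lines.append(f"wd=wd1,sd=sd*,xfersize={xfersize},rdpct={rdpct},seekpct={seekpct}")
    # Run definition: uncapped I/O rate, reporting every second.
    lines.append(f"rd=rd1,wd=wd1,iorate=max,elapsed={elapsed_s},interval=1")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # "/dev/sdb" and "/dev/sdc" are hypothetical device names inside the test VM.
    print(build_raw_parmfile(["/dev/sdb", "/dev/sdc"]), end="")
```

Here one device would correspond to the virtual disk backed by a datastore and the other to the RDM disk, matching the half-and-half split described in the procedure.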